Paper Menu >>

Journal Menu >>

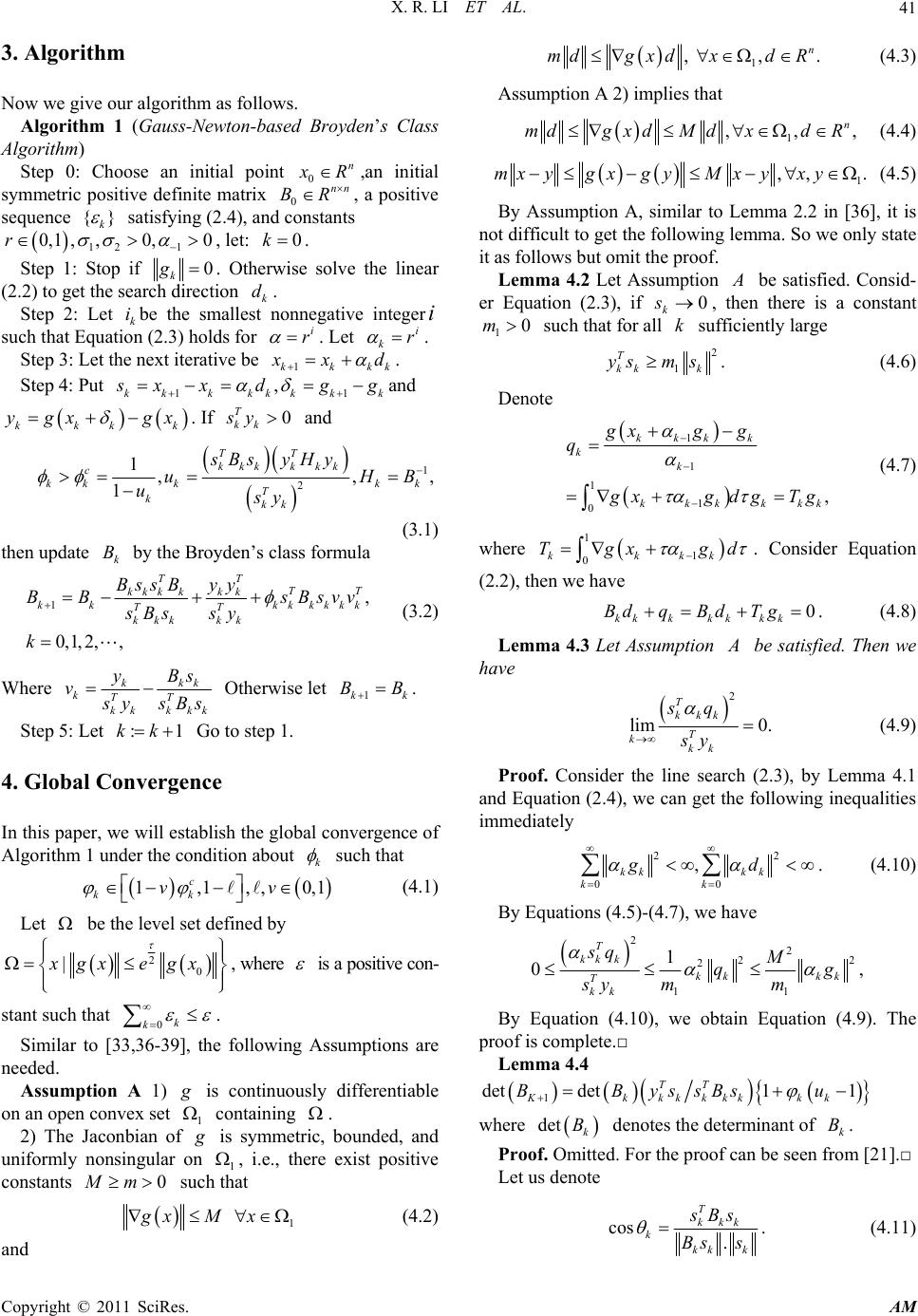

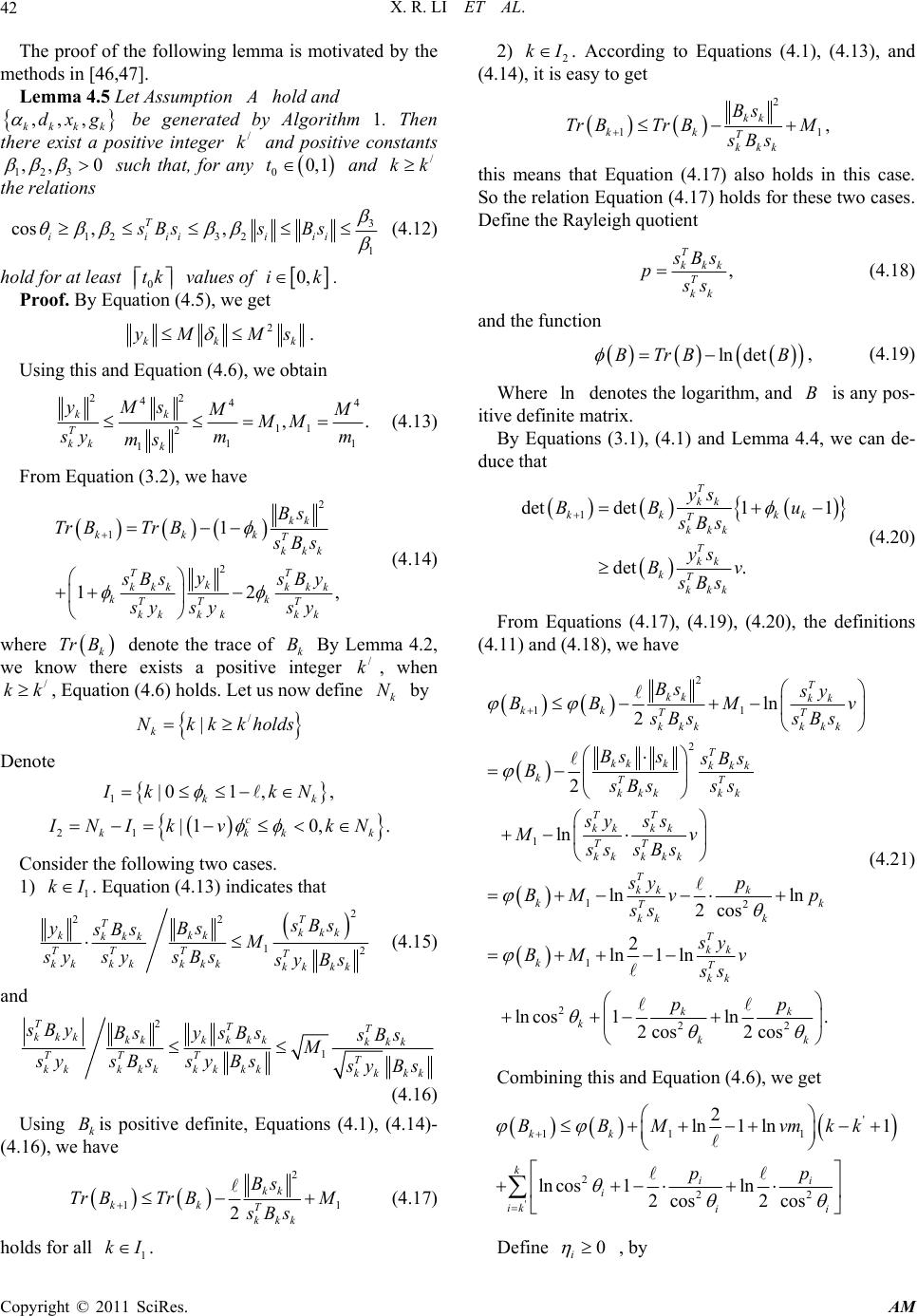

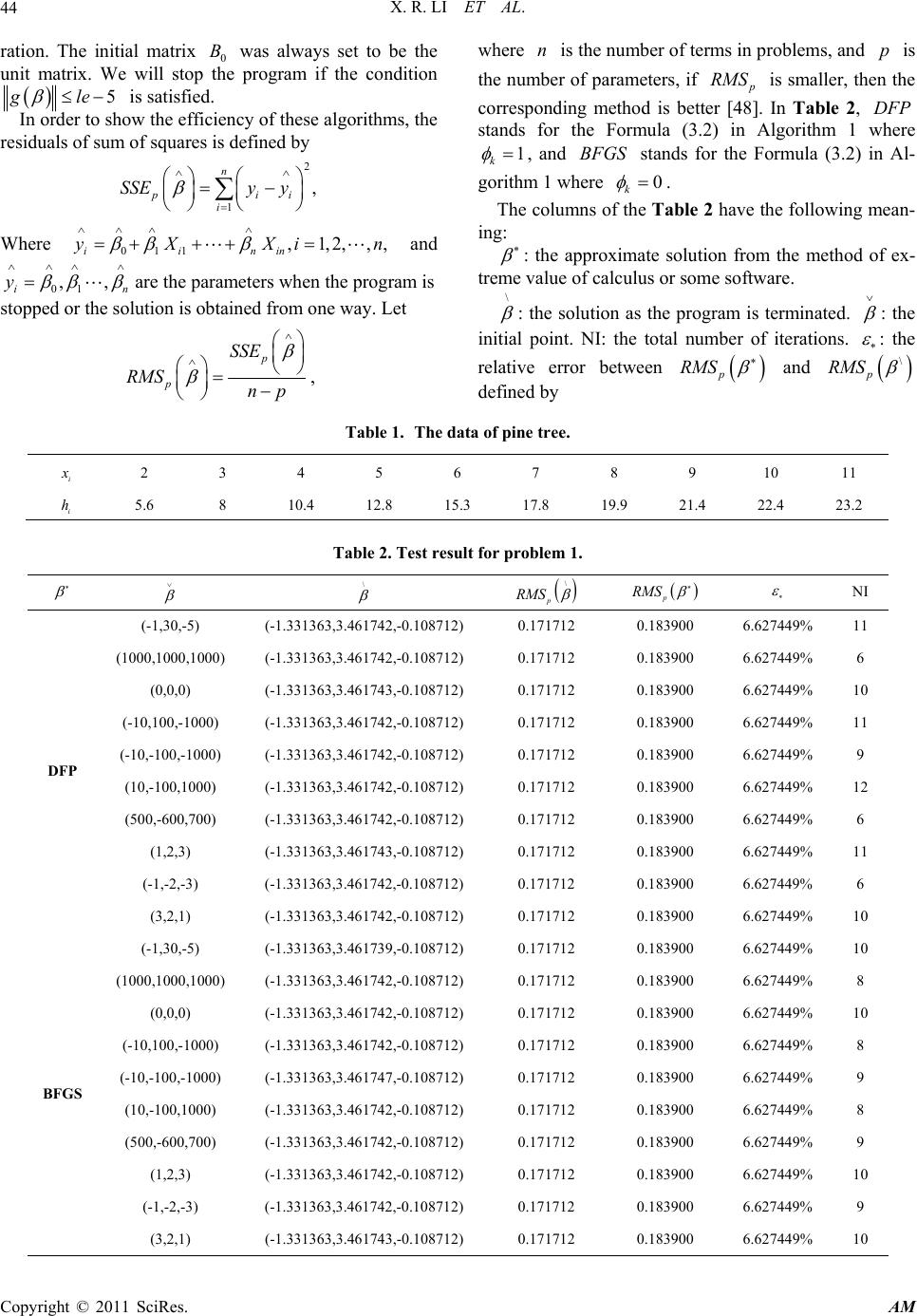

Applied Mathematics, 2011, 2, 39-46
doi:10.4236/am.2011.21005 Published Online January 2011 (http://www.SciRP.org/journal/am)
Copyright © 2011 SciRes. AM

A Gauss-Newton-Based Broyden's Class Algorithm for Parameters of Regression Analysis

Xiangrong Li, Xupei Zhao
Department of Mathematics and Information Science, Guangxi University, Nanning, China
E-mail: xrli68@163.com
Received October 14, 2010; revised November 11, 2010; accepted November 13, 2010

Abstract

In this paper, a Gauss-Newton-based Broyden's class method for estimating the parameters of regression problems is presented. The global convergence of the method is established under suitable conditions. Numerical results show that the proposed method is effective.

Keywords: Global Convergence, Broyden's Class, Regression Analysis, Nonlinear Equations, Gauss-Newton

1. Introduction

It is well known that regression analysis arises in economics, finance, trade, law, meteorology, medicine, biology, chemistry, engineering, physics, education, history, sociology, psychology, and so on (see [1-7]). The classical regression model is defined by

$$Y = h(X_1, X_2, \ldots, X_p) + \varepsilon,$$

where $Y$ is the response variable, $X_i$ ($i = 1, 2, \ldots, p$) are the predictor variables, $p > 0$ is an integer constant, and $\varepsilon$ is the error. The function $h(X_1, X_2, \ldots, X_p)$ describes the relation between $Y$ and $X = (X_1, X_2, \ldots, X_p)$. If $h$ is a linear function, we get the linear regression model

$$Y = \beta_0 + \beta_1 X_1 + \beta_2 X_2 + \cdots + \beta_p X_p + \varepsilon, \tag{1.1}$$

which is the simplest regression model, where $\beta_0, \beta_1, \ldots, \beta_p$ are the regression parameters. Otherwise, the regression model is called nonlinear. Many nonlinear regression models can be linearized [8-13], and many authors have therefore devoted their attention to the linear model [14-19]. We now concentrate on the linear model to discuss the following problems.

One of the most important tasks of regression analysis is to estimate the parameters $(\beta_0, \beta_1, \ldots, \beta_p)$.
The least squares method is an important fitting method for determining the parameters $(\beta_0, \beta_1, \ldots, \beta_p)$. It is defined by

$$\min S(\beta) = \sum_{i=1}^{m} \left( h_i - (\beta_0 + \beta_1 X_{i1} + \beta_2 X_{i2} + \cdots + \beta_p X_{ip}) \right)^2, \tag{1.2}$$

where $h_i$ is the observed value of the $i$th response variable, $X_{i1}, X_{i2}, \ldots, X_{ip}$ are the $p$ observed values of the $i$th predictor variable, and $m$ is the number of data points. If the dimension $p$ and the number $m$ are small, the parameters $(\beta_0, \beta_1, \ldots, \beta_p)$ can be obtained by the extreme value method of calculus. From the definition (1.2), it is not difficult to see that this problem is the same as the following unconstrained optimization problem

$$\min_{x \in \mathbb{R}^n} f(x). \tag{1.3}$$

For the regression problem (1.3), if the dimension $n$ is large and the function $f$ is complicated, it is difficult to solve the problem by the extreme value method of calculus. Numerical methods are then often used, such as the steepest descent method, the Newton method, and the Gauss-Newton method (see, e.g., [5-7]). Much statistical software is based on this idea. A numerical method, i.e., an iterative method, generates a sequence of points $\{x_k\}$ which terminates at or converges to a point $x^*$ in some sense. The line search method is one of the most effective numerical methods and is defined by

$$x_{k+1} = x_k + \alpha_k d_k, \quad k = 0, 1, 2, \ldots,$$

where the steplength $\alpha_k$ is determined by a line search, and $d_k$ is a descent direction of $f$ at $x_k$; different choices of $d_k$ give different line search methods [20-30]. A line search method for the regression problem with good results is given in [31].

This work is supported by China NSF grants 10761001 and Guangxi SF grants 0991028.

In order to solve problem (1.3), one main goal is to find a point $x$ such that

$$g(x) = 0, \quad x \in \mathbb{R}^n, \tag{1.4}$$

where $g(x) = \nabla f(x)$ is the gradient of $f(x)$. In this paper, we concentrate on the system of equations (1.4), where $g: \mathbb{R}^n \to \mathbb{R}^n$ is continuously differentiable (linear or nonlinear). Assume that the Jacobian $\nabla g(x)$ of $g$ is symmetric for all $x \in \mathbb{R}^n$.
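As a small illustration of how (1.2) leads to the system (1.4), the sketch below builds the least-squares objective for a straight-line model, forms its gradient $g$ and the Jacobian of $g$, and checks that the Jacobian is symmetric and that $g$ vanishes at the least-squares estimate. The data and the names `X`, `h`, `f`, `grad` and `jacobian` are hypothetical and chosen only for illustration; NumPy is assumed to be available.

```python
import numpy as np

# Hypothetical data lying exactly on h = 1 + 2x (not taken from the paper).
x = np.array([0.0, 1.0, 2.0, 3.0])
h = np.array([1.0, 3.0, 5.0, 7.0])
X = np.column_stack([np.ones_like(x), x])  # columns: intercept, predictor


def f(beta):
    """Least-squares objective as in (1.2): sum of squared residuals."""
    resid = h - X @ beta
    return resid @ resid


def grad(beta):
    """g(beta) = grad f(beta) = 2 X^T (X beta - h), the system in (1.4)."""
    return 2.0 * X.T @ (X @ beta - h)


def jacobian():
    """Jacobian of g; constant and symmetric for a linear model."""
    return 2.0 * X.T @ X


# Solving g(beta) = 0 directly corresponds to the calculus route,
# which is practical only when the problem is small.
beta_hat = np.linalg.solve(jacobian(), 2.0 * X.T @ h)
print(beta_hat, np.linalg.norm(grad(beta_hat)))
```

For a linear model the Jacobian $2X^TX$ is symmetric by construction, which is why the symmetry assumption on $\nabla g$ is natural in the regression setting.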
Let $\theta$ be the norm function defined by $\theta(x) = \frac{1}{2}\|g(x)\|^2$. Then the nonlinear equations problem (1.4) is equivalent to the following global optimization problem

$$\min \theta(x), \quad x \in \mathbb{R}^n. \tag{1.5}$$

Similar to (1.3), the following iterative formula is often used to solve problem (1.4) or (1.5):

$$x_{k+1} = x_k + \alpha_k d_k, \tag{1.6}$$

where $d_k$ is a search direction, $\alpha_k$ is a steplength along $d_k$, and $x_k$ is the $k$th iterate. For (1.4), Griewank [32] first established a global convergence theorem for a quasi-Newton method with a suitable line search. A nonmonotone backtracking inexact quasi-Newton algorithm [33] and trust region algorithms [34,35] were presented. A Gauss-Newton-based BFGS (Broyden, Fletcher, Goldfarb, and Shanno, 1970) method was proposed by Li and Fukushima [36] for solving symmetric nonlinear equations. Inspired by their ideas, Wei [37] and Yuan [38,39] made further studies. Recently, Yuan and Lu [40-45] obtained some new methods for symmetric nonlinear equations.

The authors of [36] only discussed updated matrices generated by the BFGS formula. Can the updated matrices be produced by the more general Broyden's class? This paper gives a positive answer; moreover, the presented method is applied to regression analysis. The major contribution of this paper is an extension of the method in [36] to Broyden's class and its application to regression problems. Numerical results on practical statistical problems show that the given method is effective. Throughout this paper, the following notation is used: $\|\cdot\|$ is the Euclidean norm, and $g(x_k)$ and $g(x_{k+1})$ are abbreviated as $g_k$ and $g_{k+1}$, respectively.

In the next section, the method of Li and Fukushima [36] is stated. Our algorithm is proposed in Section 3. Under some reasonable conditions, the global convergence of the given algorithm is established in Section 4. In Section 5, numerical results are reported. In the last section, a conclusion is stated.

2. A Gauss-Newton-Based BFGS Method [36]

Li and Fukushima [36] proposed a new BFGS update formula defined by

$$B'_{k+1} = B'_k - \frac{B'_k s_k s_k^T B'_k}{s_k^T B'_k s_k} + \frac{\delta_k \delta_k^T}{\delta_k^T s_k}, \tag{2.1}$$

where $s_k = x_{k+1} - x_k$, $y_k = g_{k+1} - g_k$, $\delta_k = g(x_k + y_k) - g(x_k)$, $x_{k+1}$ is the next iterate, $g_k = g(x_k)$, $g_{k+1} = g(x_{k+1})$, and $B'_0$ is an initial symmetric positive definite matrix. By the secant equation $B'_{k+1} s_k = \delta_k$ and the symmetry of $\nabla g_k$, they had approximately

$$B'_{k+1} s_k \approx \nabla g_{k+1}\, y_k \approx \nabla g_{k+1}^T \nabla g_{k+1}\, s_k,$$

which implies that $B'_{k+1}$ approximates $\nabla g_{k+1}^T \nabla g_{k+1}$ along the direction $s_k$. The search direction $d_k$ is obtained by solving the following linear equation:

$$B_k d_k + \frac{g(x_k + \alpha_{k-1} g_k) - g_k}{\alpha_{k-1}} = 0. \tag{2.2}$$

If $\|\alpha_{k-1} g_k\|$ is sufficiently small and $B_k$ is positive definite, then they have the following approximate relation

$$B_k d_k = -\frac{g(x_k + \alpha_{k-1} g_k) - g_k}{\alpha_{k-1}} \approx -\nabla g_k\, g_k.$$

Therefore,

$$d_k \approx -B_k^{-1} \nabla g_k\, g_k \approx -\left(\nabla g_k^T \nabla g_k\right)^{-1} \nabla g_k\, g_k.$$

So the solution of (2.2) is an approximate Gauss-Newton direction, and the method defined by (2.1) and (2.2) is called the Gauss-Newton-based BFGS method. In order to get the steplength $\alpha_k$ by means of a backtracking process, a new line search technique is defined by

$$\|g(x_k + \alpha_k d_k)\|^2 - \|g_k\|^2 \le -\sigma_1 \|\alpha_k g_k\|^2 - \sigma_2 \|\alpha_k d_k\|^2 + \varepsilon_k \|g_k\|^2, \tag{2.3}$$

where $\sigma_1, \sigma_2 > 0$ are constants, and the positive sequence $\{\varepsilon_k\}$ satisfies

$$\sum_{k=0}^{\infty} \varepsilon_k < \infty. \tag{2.4}$$

Li and Fukushima [36] only discussed updated matrices generated by the BFGS formula. In this paper, we will prove that the updated matrices can be produced by the more general Broyden's class. Moreover, the presented method is applied to the regression problem (1.3). Numerical results show that the given method is promising.

3. Algorithm

Now we give our algorithm as follows.

Algorithm 1 (Gauss-Newton-based Broyden's Class Algorithm)
Step 0: Choose an initial point $x_0 \in \mathbb{R}^n$, an initial symmetric positive definite matrix $B_0 \in \mathbb{R}^{n \times n}$, a positive sequence $\{\varepsilon_k\}$ satisfying (2.4), and constants $r \in (0, 1)$, $\sigma_1, \sigma_2 > 0$, $\alpha_{-1} > 0$. Let $k := 0$.
Step 1: Stop if $g_k = 0$. Otherwise solve the linear equation (2.2) to get the search direction $d_k$.
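Step 2: Let $i_k$ be the smallest nonnegative integer $i$ such that (2.3) holds for $\alpha = r^i$. Let $\alpha_k = r^{i_k}$.
Step 3: Let the next iterate be $x_{k+1} = x_k + \alpha_k d_k$.
Step 4: Put $s_k = x_{k+1} - x_k = \alpha_k d_k$, $\delta_k = g_{k+1} - g_k$ and $y_k = g(x_k + \delta_k) - g(x_k)$. If $s_k^T y_k > 0$ and

$$\phi_k > \phi_k^c, \quad \phi_k^c = \frac{1}{1 - u_k}, \quad u_k = \frac{(s_k^T B_k s_k)(y_k^T H_k y_k)}{(s_k^T y_k)^2}, \quad H_k = B_k^{-1}, \tag{3.1}$$

then update $B_k$ by the Broyden's class formula

$$B_{k+1} = B_k - \frac{B_k s_k s_k^T B_k}{s_k^T B_k s_k} + \frac{y_k y_k^T}{s_k^T y_k} + \phi_k \left(s_k^T B_k s_k\right) v_k v_k^T, \quad k = 0, 1, 2, \ldots, \tag{3.2}$$

where

$$v_k = \frac{y_k}{s_k^T y_k} - \frac{B_k s_k}{s_k^T B_k s_k}.$$

Otherwise let $B_{k+1} = B_k$.
Step 5: Let $k := k + 1$. Go to Step 1.

4. Global Convergence

In this paper, we establish the global convergence of Algorithm 1 under the following condition on $\phi_k$:

$$\phi_k \in \left[(1 - \nu)\phi_k^c,\ 1\right], \quad \nu \in (0, 1). \tag{4.1}$$

Let $\Omega$ be the level set defined by

$$\Omega = \left\{ x \;\middle|\; \|g(x)\| \le e^{\varepsilon/2} \|g(x_0)\| \right\},$$

where $\varepsilon$ is a positive constant such that $\sum_{k=0}^{\infty} \varepsilon_k \le \varepsilon$. Similar to [33,36-39], the following assumptions are needed.

Assumption A
1) $g$ is continuously differentiable on an open convex set $\Omega_1$ containing $\Omega$.
2) The Jacobian of $g$ is symmetric, bounded, and uniformly nonsingular on $\Omega_1$, i.e., there exist positive constants $M \ge m > 0$ such that

$$\|\nabla g(x)\| \le M, \quad \forall x \in \Omega_1, \tag{4.2}$$

and

$$m\|d\| \le \|\nabla g(x) d\|, \quad \forall x \in \Omega_1,\ d \in \mathbb{R}^n. \tag{4.3}$$

Assumption A 2) implies that

$$m\|d\| \le \|\nabla g(x) d\| \le M\|d\|, \quad \forall x \in \Omega_1,\ d \in \mathbb{R}^n, \tag{4.4}$$

$$m\|x - y\| \le \|g(x) - g(y)\| \le M\|x - y\|, \quad \forall x, y \in \Omega_1. \tag{4.5}$$

By Assumption A, similar to Lemma 2.2 in [36], it is not difficult to get the following lemma, so we only state it and omit the proof.

Lemma 4.2 Let Assumption A be satisfied. Consider Equation (2.3). If $s_k \to 0$, then there is a constant $m_1 > 0$ such that for all $k$ sufficiently large

$$y_k^T s_k \ge m_1 \|s_k\|^2. \tag{4.6}$$

Denote

$$q_k = \frac{g(x_k + \alpha_{k-1} g_k) - g_k}{\alpha_{k-1}} = \int_0^1 \nabla g(x_k + \tau \alpha_{k-1} g_k)\, d\tau \; g_k = T_k g_k, \tag{4.7}$$

where $T_k = \int_0^1 \nabla g(x_k + \tau \alpha_{k-1} g_k)\, d\tau$. Considering Equation (2.2), we then have

$$B_k d_k + q_k = B_k d_k + T_k g_k = 0. \tag{4.8}$$

Lemma 4.3 Let Assumption A be satisfied. Then we have

$$\lim_{k \to \infty} \frac{\left(\alpha_k s_k^T q_k\right)^2}{s_k^T y_k} = 0. \tag{4.9}$$

Proof. Consider the line search (2.3). By Lemma 4.1 and Equation (2.4), we get the following inequalities immediately:

$$\sum_{k=0}^{\infty} \|\alpha_k g_k\|^2 < \infty, \quad \sum_{k=0}^{\infty} \|\alpha_k d_k\|^2 < \infty. \tag{4.10}$$

By Equations (4.5)-(4.7), we have

$$\frac{\left(\alpha_k s_k^T q_k\right)^2}{s_k^T y_k} \le \frac{\alpha_k^2}{m_1} \|q_k\|^2 \le \frac{M^2}{m_1} \|\alpha_k g_k\|^2 \to 0.$$

By Equation (4.10), we obtain Equation (4.9).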
The proof is complete.□ Lemma 4.4 1 det det11 TT Kkkkkkkkk BByssBsu where det k B denotes the determinant of k B. Proof. Omitted. For the proof can be seen from [21].□ Let us denote cos . T kkk kkk k s Bs Bs s . (4.11)  X. R. LI ET AL. Copyright © 2011 SciRes. AM 42 The proof of the following lemma is motivated by the methods in [46,47]. Lemma 4.5 Let Assumption A hold and ,,, kkkk dxg be generated by Algorithm 1. Then there exist a positive integer / k and positive constants 123 ,, 0 such that, for any 00,1t and / kk the relations 3 12 32 1 cos ,, T iiiiiii sBss Bs (4.12) hold for at least 0 tk values of 0,ik. Proof. By Equation (4.5), we get 2 kk k yM Ms . Using this and Equation (4.6), we obtain 22 444 11 2 11 1 ,. kk T kk k yMsMM MM mm sy ms (4.13) From Equation (3.2), we have 2 1 2 1 12, kk kkk T kkk TT k kkk kkk kk TT T kkk kkk Bs Tr BTr B s Bs y s Bs sBy sy sysy (4.14) where k Tr B denote the trace of k B By Lemma 4.2, we know there exists a positive integer / k, when / kk, Equation (4.6) holds. Let us now define k N by / | k Nkkkholds Denote 1|01 ,, kk Ik kN 21 |10, . c kkkk I NI kvkN Consider the following two cases. 1) 1 kI. Equation (4.13) indicates that 2 22 12 T Tkkk kkk kkk TT TT kk kkkkkkkkk sBs yBs sBs M sy sysBs s yBs (4.15) and 2 1 TTT kkk kkkk kkkkk TT TT kkkkkkkkkkk kk sBy Bsy sBssBs M sysBssy Bs s yBs (4.16) Using k Bis positive definite, Equations (4.1), (4.14)- (4.16), we have 2 11 2 kk kk T kkk Bs Tr BTr BM sBs (4.17) holds for all 1 kI. 2) 2 kI . According to Equations (4.1), (4.13), and (4.14), it is easy to get 2 11 kk kk T kkk Bs Tr BTr BM sBs , this means that Equation (4.17) also holds in this case. So the relation Equation (4.17) holds for these two cases. Define the Rayleigh quotient , T kkk T kk s Bs p s s (4.18) and the function ln detBTrB B , (4.19) Where ln denotes the logarithm, and B is any pos- itive definite matrix. By Equations (3.1), (4.1) and Lemma 4.4, we can de- duce that 1 det det11 det . 
T kk kk kk T kkk T kk kT kkk ys BB u sBs ys Bv sBs (4.20) From Equations (4.17), (4.19), (4.20), the definitions (4.11) and (4.18), we have 2 11 2 1 12 1 ln 2 2 ln ln ln 2cos 2 ln1ln lnco T kk kk kk TT kkk kkk T kk kkkk kTT kkk kk TT kk kk TT kkk kk T kk k kk T kk k T kk kT kk Bs sy BBM v sBs sBs Bs ssBs BsBs ss sy ss Mv ss sBs sy p BM vp ss sy BM v ss 2 22 s1ln. 22 cos cos kk k kk pp (4.21) Combining this and Equation (4.6), we get ' ' 11 1 2 22 2 ln1ln1 ln cos1ln 22 cos cos kk kii i ik ii BBM vmkk pp Define 0 i , by  X. R. LI ET AL. Copyright © 2011 SciRes. AM 43 2 22 ln cos1ln. 22 cos cos ii ii ii pp (4.22) Since 10 k B [or see [46]] we have ' 11 '' 12 ln1ln. 11 kk i jk BMvm kk kk (4.23) Let us define i to be a set consisting of ' 0 tkk indices corresponding to the ' 0 tkk smallest values of i for ' kik , and let max de- note the largest of the i for k iJ Then we get '' max '' , max 0 11 . 11 1. k kk ii jk ikiJ kk kk t Therefore, by Equation (4.23), we have, for all k iJ 110 0 12 ln1ln 1 ik BM vm t (4.24) Since the term inside the brackets in Equation (4.22) is less than or equal to zero, we conclude from Equations (4.22) and (4.24) that for all k iJ 2 0 ln cosi Thus, we get 02 1 cos t ie (4.25) According to Equations (4.22) and (4.24), for all k Ji we have, 0 22 1ln . 22 cos cos ii ii pp Note the function 1lnwtt t , (4.26) is nonpositive for all 0t, achiexes its maximum value at 0t, and satisfies wt both as t and 0t. Then it follows that for all k iJ ' 32 20 cos i i p , For some constants ' 2 and 3 . By Equation (4.25), we get 2' 212 3i p Using cos ii i ii Bs p s , we obtain for all k iJ, 3 2 1 ii i Bs s . Since ' k is a fixed integer and i B are positive defi- nite, we can take smaller 12 , and larger 3 if nece- ssary so that this lemma holds for all ' ik Therefore Equation (4.12) holds for at least 0 tk indices 0,ik. The proof is complete.□ Let |4.12Ni holds. Similar to [36], it is not difficult to get the global con- vergence theorem of Algorithm 1. 
So we only state it as follows and omit the proof.

Theorem 4.1 Let Assumption A and Equation (4.1) hold, and let $\{x_k\}$ be the sequence generated by the Gauss-Newton-based Broyden's class algorithm. Then

$$\liminf_{k \to \infty} \|g_k\| = 0. \tag{4.27}$$

5. Numerical Results

In this section, we report the results of some numerical experiments with the proposed method. We test two practical statistical problems to show the efficiency of Algorithm 1.

Problem 1. Table 1 gives data on the age $x$ and the average height $h$ of a pine tree. Our objective is to find an approximate functional relation between the age and the average height, namely, the regression equation of $h$ on $x$. It is easy to see that the age $x$ and the average height $h$ follow a parabolic relation. Denote the regression function by $\hat{h} = \beta_0 + \beta_1 x + \beta_2 x^2$, where $\beta_0$, $\beta_1$, and $\beta_2$ are the regression parameters. Using the least squares method, we need to solve the following problem

$$\min Q = \sum_{i=1}^{n} \left( h_i - (\beta_0 + \beta_1 x_i + \beta_2 x_i^2) \right)^2$$

and obtain $\beta_0$, $\beta_1$, and $\beta_2$, where $n = 10$. Then the corresponding unconstrained optimization problem is defined by

$$\min_{\beta \in \mathbb{R}^3} f(\beta) = \sum_{i=1}^{n} \left( h_i - (1, x_i, x_i^2)\,\beta \right)^2. \tag{5.1}$$

where $Y$ is the overall appraisal of the supervisor, $X_1$ denotes the handling of employee complaints, $X_2$ refers to not permitting special privileges, $X_3$ is the opportunity to learn, $X_4$ is promotion based on work performance, $X_5$ refers to being too critical of poor performance, and $X_6$ is the rate of advancing to better jobs.

In the experiments, all codes were written in MATLAB 7.5 and run on a PC with a 2.60 GHz CPU, 480 MB of memory and the Windows XP operating system. The parameters in Algorithm 1 were chosen as $r = 0.1$, [illegible] $= 0.85$, $\sigma_1 = \sigma_2 = 10^{-4}$, $\alpha_{-1} = 0.0001$ and $\varepsilon_k = k^{-2}$, where $k$ is the iteration number. The initial matrix $B_0$ was always set to the identity matrix. The program stops when the condition $\|g\| \le 10^{-5}$ is satisfied.
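The steps of Algorithm 1 with the parameter choices above can be sketched in Python as follows. This is a minimal sketch under stated assumptions, not the authors' MATLAB code: the function names `broyden_update` and `gn_broyden`, the cap of 60 backtracking steps, the iteration limit, and the shifted sequence $\varepsilon_k = (k+1)^{-2}$ (used to avoid dividing by zero at $k = 0$) are all hypothetical choices. The test $\phi_k > \phi_k^c$ is implemented in the equivalent form $1 + \phi_k(u_k - 1) > 0$, and NumPy is assumed to be available.

```python
import numpy as np


def broyden_update(B, s, y, phi):
    """Broyden's class update as in (3.2); phi = 0 is BFGS, phi = 1 is DFP."""
    Bs = B @ s
    sBs = s @ Bs
    sy = s @ y
    v = y / sy - Bs / sBs
    return (B - np.outer(Bs, Bs) / sBs + np.outer(y, y) / sy
            + phi * sBs * np.outer(v, v))


def gn_broyden(g, x0, phi=0.0, r=0.1, sigma1=1e-4, sigma2=1e-4,
               alpha_init=1e-4, tol=1e-5, max_iter=500, max_backtracks=60):
    """Sketch of Algorithm 1 for g(x) = 0 with a symmetric Jacobian."""
    x = np.asarray(x0, dtype=float)
    B = np.eye(x.size)                 # B_0 = identity, as in the experiments
    alpha_prev = alpha_init            # plays the role of alpha_{-1}
    for k in range(max_iter):
        gk = g(x)
        gnorm2 = gk @ gk
        if np.sqrt(gnorm2) <= tol:     # practical version of "stop if g_k = 0"
            return x, k
        # Step 1: solve B_k d + (g(x_k + alpha_{k-1} g_k) - g_k)/alpha_{k-1} = 0
        q = (g(x + alpha_prev * gk) - gk) / alpha_prev
        d = np.linalg.solve(B, -q)
        # Step 2: backtracking line search for (2.3) with alpha = r^i
        eps_k = 1.0 / (k + 1) ** 2     # assumption: shifted version of k^(-2)
        alpha = 1.0
        for _ in range(max_backtracks):
            g_trial = g(x + alpha * d)
            rhs = (-sigma1 * alpha ** 2 * gnorm2
                   - sigma2 * alpha ** 2 * (d @ d) + eps_k * gnorm2)
            if g_trial @ g_trial - gnorm2 <= rhs:
                break
            alpha *= r
        # Step 3: next iterate
        s = alpha * d
        x_new = x + s
        # Step 4: quantities for the update
        delta = g(x_new) - gk
        y = g(x + delta) - gk
        sBs = s @ B @ s
        sy = s @ y
        if sy > 0 and sBs > 0:
            u = sBs * (y @ np.linalg.solve(B, y)) / sy ** 2
            if 1.0 + phi * (u - 1.0) > 0:   # equivalent to phi > phi_c
                B = broyden_update(B, s, y, phi)
        # Step 5
        x, alpha_prev = x_new, alpha
    return x, max_iter


# Usage on a small hypothetical symmetric linear system g(x) = A x - b.
A = np.array([[2.0, 1.0], [1.0, 3.0]])
b = np.array([1.0, 2.0])
x_sol, n_iter = gn_broyden(lambda z: A @ z - b, np.zeros(2))
print(x_sol, n_iter)
```

For a regression problem, `g` would be the gradient of the least-squares objective, for example $g(\beta) = 2X^T(X\beta - h)$ for (5.1); passing `phi=1.0` selects the DFP member of the class instead of BFGS.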
In order to show the efficiency of these algorithms, the residual sum of squares is defined by

$$SSE_p = \sum_{i=1}^{n} \left( y_i - \hat{y}_i \right)^2,$$

where $\hat{y}_i = \hat\beta_0 + \hat\beta_1 X_{i1} + \cdots + \hat\beta_{p-1} X_{i,p-1}$, $i = 1, 2, \ldots, n$, and $\hat\beta_0, \hat\beta_1, \ldots, \hat\beta_{p-1}$ are the parameters when the program is stopped or the solution obtained by another method. Let

$$RMS_p = \frac{SSE_p}{n - p},$$

where $n$ is the number of terms in the problem and $p$ is the number of parameters. The smaller $RMS_p$ is, the better the corresponding method [48]. In Table 2, DFP stands for formula (3.2) in Algorithm 1 with $\phi_k = 1$, and BFGS stands for formula (3.2) in Algorithm 1 with $\phi_k = 0$.

The columns of Table 2 have the following meaning:

$\beta^*$: the approximate solution from the method of extreme value of calculus or some software.
$\bar\beta$: the solution when the program is terminated.
$x_0$: the initial point.
NI: the total number of iterations.
$\varepsilon^*$: the relative error between $RMS_p$ (computed at $\beta^*$) and $\overline{RMS}_p$ (computed at $\bar\beta$), defined by

$$\varepsilon^* = \frac{RMS_p - \overline{RMS}_p}{RMS_p}.$$

Table 1. The data of pine tree.

| $x_i$ | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 |
|---|---|---|---|---|---|---|---|---|---|---|
| $h_i$ | 5.6 | 8 | 10.4 | 12.8 | 15.3 | 17.8 | 19.9 | 21.4 | 22.4 | 23.2 |

Table 2. Test result for problem 1.

| Method | $x_0$ | $\bar\beta$ | $\overline{RMS}_p$ | $RMS_p$ | $\varepsilon^*$ | NI |
|---|---|---|---|---|---|---|
| DFP | (-1,30,-5) | (-1.331363,3.461742,-0.108712) | 0.171712 | 0.183900 | 6.627449% | 11 |
| DFP | (1000,1000,1000) | (-1.331363,3.461742,-0.108712) | 0.171712 | 0.183900 | 6.627449% | 6 |
| DFP | (0,0,0) | (-1.331363,3.461743,-0.108712) | 0.171712 | 0.183900 | 6.627449% | 10 |
| DFP | (-10,100,-1000) | (-1.331363,3.461742,-0.108712) | 0.171712 | 0.183900 | 6.627449% | 11 |
| DFP | (-10,-100,-1000) | (-1.331363,3.461742,-0.108712) | 0.171712 | 0.183900 | 6.627449% | 9 |
| DFP | (10,-100,1000) | (-1.331363,3.461742,-0.108712) | 0.171712 | 0.183900 | 6.627449% | 12 |
| DFP | (500,-600,700) | (-1.331363,3.461742,-0.108712) | 0.171712 | 0.183900 | 6.627449% | 6 |
| DFP | (1,2,3) | (-1.331363,3.461743,-0.108712) | 0.171712 | 0.183900 | 6.627449% | 11 |
| DFP | (-1,-2,-3) | (-1.331363,3.461742,-0.108712) | 0.171712 | 0.183900 | 6.627449% | 6 |
| DFP | (3,2,1) | (-1.331363,3.461742,-0.108712) | 0.171712 | 0.183900 | 6.627449% | 10 |
| BFGS | (-1,30,-5) | (-1.331363,3.461739,-0.108712) | 0.171712 | 0.183900 | 6.627449% | 10 |
| BFGS | (1000,1000,1000) | (-1.331363,3.461742,-0.108712) | 0.171712 | 0.183900 | 6.627449% | 8 |
| BFGS | (0,0,0) | (-1.331363,3.461742,-0.108712) | 0.171712 | 0.183900 | 6.627449% | 10 |
| BFGS | (-10,100,-1000) | (-1.331363,3.461742,-0.108712) | 0.171712 | 0.183900 | 6.627449% | 8 |
| BFGS | (-10,-100,-1000) | (-1.331363,3.461747,-0.108712) | 0.171712 | 0.183900 | 6.627449% | 9 |
| BFGS | (10,-100,1000) | (-1.331363,3.461742,-0.108712) | 0.171712 | 0.183900 | 6.627449% | 8 |
| BFGS | (500,-600,700) | (-1.331363,3.461742,-0.108712) | 0.171712 | 0.183900 | 6.627449% | 9 |
| BFGS | (1,2,3) | (-1.331363,3.461742,-0.108712) | 0.171712 | 0.183900 | 6.627449% | 10 |
| BFGS | (-1,-2,-3) | (-1.331363,3.461742,-0.108712) | 0.171712 | 0.183900 | 6.627449% | 9 |
| BFGS | (3,2,1) | (-1.331363,3.461743,-0.108712) | 0.171712 | 0.183900 | 6.627449% | 10 |

For Problem 1, the above problems (5.2) can be solved by the extreme value method of calculus, which gives $\beta^* = (-1.33, 3.46, -0.11)$ in Table 2. Here we also solve these two problems by Algorithm 1. The numerical results in Table 2 indicate that the solutions returned by Algorithm 1 give a smaller $RMS_p$ (0.171712) than the solution obtained from the extreme value method of calculus or some software (0.183900).
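The two $RMS_p$ columns and the relative error in Table 2 can be checked directly from the data in Table 1. The short sketch below evaluates $SSE_p/(n-p)$ for the quadratic model at the parameter vector reported for Algorithm 1 and at $\beta^*$ as quoted in the text, which is given there to two decimals only. The names `ages`, `heights`, `rms_p`, `beta_bar` and `beta_star` are hypothetical and used only for illustration.

```python
# Pine-tree data from Table 1.
ages = [2, 3, 4, 5, 6, 7, 8, 9, 10, 11]
heights = [5.6, 8, 10.4, 12.8, 15.3, 17.8, 19.9, 21.4, 22.4, 23.2]


def rms_p(beta):
    """Residual mean square SSE_p / (n - p) for h = b0 + b1*x + b2*x^2."""
    b0, b1, b2 = beta
    sse = sum((h - (b0 + b1 * x + b2 * x * x)) ** 2
              for x, h in zip(ages, heights))
    return sse / (len(ages) - len(beta))


beta_bar = (-1.331363, 3.461742, -0.108712)  # solution reported for Algorithm 1
beta_star = (-1.33, 3.46, -0.11)             # calculus/software solution as quoted

rms_bar = rms_p(beta_bar)
rms_star = rms_p(beta_star)
rel_err = (rms_star - rms_bar) / rms_star    # relative error as defined for Table 2
print(f"{rms_bar:.6f} {rms_star:.6f} {100 * rel_err:.4f}%")
```

The printed values can be compared with the $\overline{RMS}_p$, $RMS_p$ and $\varepsilon^*$ columns of Table 2.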
We can therefore conclude that the numerical method outperforms the extreme value method of calculus in some sense, and that some software for regression analysis could be further improved in the future. Moreover, the choice of initial point does not affect convergence: from every initial point tested, the sequence $\{x_k\}$ generated by our proposed method converges to the same solution $x^*$.

6. References

[1] D. M. Bates and D. G. Watts, “Nonlinear Regression Analysis and Its Applications,” John Wiley & Sons, New York, 1988. doi:10.1002/9780470316757
[2] S. Chatterjee and M. Machler, “Robust Regression: A Weighted Least Squares Approach,” Communications in Statistics - Theory and Methods, Vol. 26, No. 6, 1997, pp. 1381-1394. doi:10.1080/03610929708831988
[3] R. Christensen, “Analysis of Variance, Design and Regression: Applied Statistical Methods,” Chapman and Hall, New York, 1996.
[4] N. R. Draper and H. Smith, “Applied Regression Analysis,” 3rd Edition, John Wiley & Sons, New York, 1998.
[5] F. A. Graybill and H. K. Iyer, “Regression Analysis: Concepts and Applications,” Duxbury Press, Belmont, 1994.
[6] R. F. Gunst and R. L. Mason, “Regression Analysis and Its Application: A Data-Oriented Approach,” Marcel Dekker, New York, 1980.
[7] R. H. Myers, “Classical and Modern Regression with Applications,” 2nd Edition, PWS-Kent Publishing Company, Boston, 1990.
[8] C. R. Rao, “Linear Statistical Inference and Its Applications,” John Wiley & Sons, New York, 1973. doi:10.1002/9780470316436
[9] D. A. Ratkowsky, “Nonlinear Regression Modeling: A Unified Practical Approach,” Marcel Dekker, New York, 1983.
[10] D. A. Ratkowsky, “Handbook of Nonlinear Regression Modeling,” Marcel Dekker, New York, 1990.
[11] A. C. Rencher, “Methods of Multivariate Analysis,” John Wiley & Sons, New York, 1995.
[12] G. A. F. Seber and C. J. Wild, “Nonlinear Regression,” John Wiley & Sons, New York, 1989. doi:10.1002/0471725315
[13] A. Sen and M. Srivastava, “Regression Analysis: Theory, Methods, and Applications,” Springer-Verlag, New York, 1990.
[14] J. Fox, “Linear Statistical Models and Related Methods,” John Wiley & Sons, New York, 1984.
[15] S. Haberman and A. E. Renshaw, “Generalized Linear Models and Actuarial Science,” The Statistician, Vol. 45, No. 4, 1996, pp. 407-436. doi:10.2307/2988543
[16] S. Haberman and A. E. Renshaw, “Generalized Linear Models and Excess Mortality from Peptic Ulcers,” Insurance: Mathematics and Economics, Vol. 9, No. 1, 1990, pp. 147-154. doi:10.1016/0167-6687(90)90012-3
[17] R. R. Hocking, “The Analysis and Selection of Variables in Linear Regression,” Biometrics, Vol. 32, No. 1, 1976, pp. 1-49. doi:10.2307/2529336
[18] P. McCullagh and J. A. Nelder, “Generalized Linear Models,” Chapman and Hall, London, 1989.
[19] J. A. Nelder and R. J. Verrall, “Credibility Theory and Generalized Linear Models,” ASTIN Bulletin, Vol. 27, No. 1, 1997, pp. 71-82. doi:10.2143/AST.27.1.563206
[20] M. Raydan, “The Barzilai and Borwein Gradient Method for the Large Scale Unconstrained Minimization Problem,” SIAM Journal on Optimization, Vol. 7, No. 1, 1997, pp. 26-33. doi:10.1137/S1052623494266365
[21] J. Schropp, “A Note on Minimization Problems and Multistep Methods,” Numerical Mathematics, Vol. 78, 1997, pp. 87-101. doi:10.1007/s002110050305
[22] J. Schropp, “One-Step and Multistep Procedures for Constrained Minimization Problems,” IMA Journal of Numerical Analysis, Vol. 20, No. 1, 2000, pp. 135-152. doi:10.1093/imanum/20.1.135
[23] D. J. Van Wyk, “Differential Optimization Techniques,” Applied Mathematical Modelling, Vol. 8, 1984, pp. 419-424. doi:10.1016/0307-904X(84)90048-9
[24] M. N. Vrahatis, G. S. Androulakis, J. N. Lambrinos and G. D. Magoulas, “A Class of Gradient Unconstrained Minimization Algorithms with Adaptive Stepsize,” Journal of Computational and Applied Mathematics, Vol. 114, No. 2, 2000, pp. 367-386. doi:10.1016/S0377-0427(99)00276-9
[25] G. Yuan and X. Lu, “A New Line Search Method with Trust Region for Unconstrained Optimization,” Communications on Applied Nonlinear Analysis, Vol. 15, No. 2, 2008, pp. 35-49.
[26] G. Yuan and Z. Wei, “New Line Search Methods for Unconstrained Optimization,” Journal of the Korean Statistical Society, Vol. 38, No. 1, 2009, pp. 29-39. doi:10.1016/j.jkss.2008.05.004
[27] G. Yuan and X. Lu, “A Modified PRP Conjugate Gradient Method,” Annals of Operations Research, Vol. 166, No. 1, 2009, pp. 73-90. doi:10.1007/s10479-008-0420-4
[28] G. Yuan, “Modified Nonlinear Conjugate Gradient Methods with Sufficient Descent Property for Large-Scale Optimization Problems,” Optimization Letters, Vol. 3, No. 1, 2009, pp. 11-21. doi:10.1007/s11590-008-0086-5
[29] G. Yuan, X. Lu and Z. Wei, “A Conjugate Gradient Method with Descent Direction for Unconstrained Optimization,” Journal of Computational and Applied Mathematics, Vol. 233, No. 2, 2009, pp. 519-530. doi:10.1016/j.cam.2009.08.001
[30] G. Yuan, “A Conjugate Gradient Method for Unconstrained Optimization Problems,” International Journal of Mathematics and Mathematical Sciences, Vol. 2009, 2009, pp. 1-14. doi:10.1155/2009/329623
[31] G. Yuan and Z. Wei, “A Nonmonotone Line Search Method for Regression Analysis,” Journal of Service Science and Management, Vol. 2, No. 1, 2009, pp. 36-42. doi:10.4236/jssm.2009.21005
[32] A. Griewank, “The ‘Global’ Convergence of Broyden-Like Methods with a Suitable Line Search,” Journal of the Australian Mathematical Society, Series B, Vol. 28, No. 1, 1986, pp. 75-92. doi:10.1017/S0334270000005208
[33] D. T. Zhu, “Nonmonotone Backtracking Inexact Quasi-Newton Algorithms for Solving Smooth Nonlinear Equations,” Applied Mathematics and Computation, Vol. 161, No. 3, 2005, pp. 875-895. doi:10.1016/j.amc.2003.12.074
[34] J. Y. Fan, “A Modified Levenberg-Marquardt Algorithm for Singular System of Nonlinear Equations,” Journal of Computational Mathematics, Vol. 21, No. 5, 2003, pp. 625-636.
[35] Y. Yuan, “Trust Region Algorithm for Nonlinear Equations,” Information, Vol. 1, 1998, pp. 7-21.
[36] D. Li and M. Fukushima, “A Globally and Superlinearly Convergent Gauss-Newton-Based BFGS Method for Symmetric Nonlinear Equations,” SIAM Journal on Numerical Analysis, Vol. 37, No. 1, 1999, pp. 152-172. doi:10.1137/S0036142998335704
[37] Z. Wei, G. Yuan and Z. Lian, “An Approximate Gauss-Newton-Based BFGS Method for Solving Symmetric Nonlinear Equations,” Guangxi Sciences, Vol. 11, No. 2, 2004, pp. 91-99.
[38] G. Yuan and X. Li, “An Approximate Gauss-Newton-Based BFGS Method with Descent Directions for Solving Symmetric Nonlinear Equations,” OR Transactions, Vol. 8, No. 4, 2004, pp. 10-26.
[39] G. Yuan and X. Lu, “A Nonmonotone Gauss-Newton-Based BFGS Method for Solving Symmetric Nonlinear Equations,” Journal of Lanzhou University, Vol. 41, 2005, pp. 851-855.
[40] G. Yuan and X. Lu, “A New Backtracking Inexact BFGS Method for Symmetric Nonlinear Equations,” Computers and Mathematics with Applications, Vol. 55, No. 1, 2008, pp. 116-129. doi:10.1016/j.camwa.2006.12.081
[41] G. Yuan, X. Lu and Z. Wei, “BFGS Trust-Region Method for Symmetric Nonlinear Equations,” Journal of Computational and Applied Mathematics, Vol. 230, No. 1, 2009, pp. 44-58. doi:10.1016/j.cam.2008.10.062
[42] G. Yuan, Z. Wang and Z. Wei, “A Rank-One Fitting Method with Descent Direction for Solving Symmetric Nonlinear Equations,” International Journal of Communications, Network and System Sciences, Vol. 2, No. 6, 2009, pp. 555-561. doi:10.4236/ijcns.2009.26061
[43] G. Yuan, S. Meng and Z. Wei, “A Trust-Region-Based BFGS Method with Line Search Technique for Symmetric Nonlinear Equations,” Advances in Operations Research, Vol. 2009, 2009, pp. 1-20. doi:10.1155/2009/909753
[44] G. Yuan and X. Li, “A Rank-One Fitting Method for Solving Symmetric Nonlinear Equations,” Journal of Applied Functional Analysis, Vol. 5, No. 4, 2010, pp. 389-407.
[45] G. Yuan, Z. Wei and X. Lu, “A Nonmonotone Trust Region Method for Solving Symmetric Nonlinear Equations,” Chinese Quarterly Journal of Mathematics, Vol. 24, No. 4, 2009, pp. 574-584.
[46] R. Byrd and J. Nocedal, “A Tool for the Analysis of Quasi-Newton Methods with Application to Unconstrained Minimization,” SIAM Journal on Numerical Analysis, Vol. 26, No. 3, 1989, pp. 727-739. doi:10.1137/0726042
[47] D. Xu, “Global Convergence of the Broyden's Class of Quasi-Newton Methods with Nonmonotone Linesearch,” Acta Mathematicae Applicatae Sinica, English Series, Vol. 19, No. 1, 2003, pp. 19-24. doi:10.1007/s10255-003-0076-4
[48] S. Chatterjee, A. S. Hadi and B. Price, “Regression Analysis by Example,” 3rd Edition, John Wiley & Sons, New York, 2000.