Journal of Signal and Information Processing, 2013, 4, 299-307
http://dx.doi.org/10.4236/jsip.2013.43038 Published Online August 2013 (http://www.scirp.org/journal/jsip)

Depth-Aided Tracking Multiple Objects under Occlusion

Anh Tu Tran, Koichi Harada
Graduate School of Engineering, Hiroshima University, Higashihiroshima, Japan.
Email: d103714@hiroshima-u.ac.jp, hrd@hiroshima-u.ac.jp

Received May 12th, 2013; revised June 15th, 2013; accepted July 12th, 2013

Copyright © 2013 Anh Tu Tran, Koichi Harada. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

ABSTRACT

In this paper, we present a novel tracking method that aims at detecting objects and maintaining their label/identification over time. The key factors of this method are the use of depth information and of different strategies to track objects under various occlusion scenarios. The foreground objects are detected and refined by background subtraction and shadow cancellation. Occlusion detection is based on information about the foreground blobs in successive frames. The occlusion regions are projected onto the projection plane XZ to analyze the occlusion situation. According to the results of the occlusion analysis, different object correspondence strategies are introduced to track objects under various occlusion scenarios, including tracking occluded objects in a similar depth layer and in different depth layers. The experimental results show that the proposed method can track moving objects under the most typical and challenging occlusion scenarios.

Keywords: Visual Tracking; Multiple Object Tracking; Stereo Tracking; Occlusion Analysis

1. Introduction

Object tracking in video sequences plays an important role in computer vision research and in a wide range of applications, such as video monitoring and surveillance, video conferencing and video summarization. Depending on the camera configuration, objects can be tracked using a single camera or stereo/multiple cameras. Object tracking with a single camera has been studied extensively in the literature, and different methods have been developed, such as model-based tracking [1], appearance-based methods [2-4], feature-based tracking [5], and statistical methods [6-8]. Many algorithms obtain good results in some cases, for example when the targets are separated. However, multiple object tracking is still a challenging task due to the non-rigid motion of deformable objects, persistent occlusion and the dynamic change of object attributes, such as color distribution, shape and visibility.

In real scenes, occlusion between objects often occurs. For example, in a typical surveillance scenario a person is partially or fully occluded by other people. Unfortunately, these occlusions lead to tracking failures. Some classical frameworks have been extended to track multiple objects. In the multi-object tracking system of [9], the level set method is used to handle contour splitting and merging. Further methods, i.e. Monte Carlo based probabilistic methods [10], game theory based approaches [11] and appearance model based deterministic methods [12,13], have been presented to solve the mutual occlusion problem.

Another attractive research direction is stereo or multiple camera based methods. While object detection and tracking with a single camera are well-explored topics, the use of multi-camera technology for this purpose has attracted much attention recently due to the availability and low price of new hardware.
A multi-camera system observes the scene from two or more different views and obtains more comprehensive information than a monocular camera system; in particular, it can take advantage of depth information to improve tracking performance. Some tracking methods use depth information only [14], use depth information for better foreground segmentation [15], or use depth information as a feature to be fused in a maximum likelihood model to predict 3D object positions [16].

In this work, we present a novel tracking method that aims at detecting objects and maintaining their label/identification across the video frame sequence. The main points of this method are the use of depth information and of different strategies to track objects under various occlusion scenarios. Figure 1 shows the flowchart of our tracking system.

The rest of this paper is organized as follows: Section 2 presents the proposed tracking method, Section 3 shows experimental results, and Section 4 concludes the paper.

Copyright © 2013 SciRes. JSIP

2. Proposed Tracking Method

Our proposed tracking system is shown in Figure 1 and consists of the following main steps.

Figure 1. The flowchart of the proposed tracking method.

2.1. Depth Estimation

Depth estimation aims at calculating the structure and depth of objects in a scene from two views or a set of multiple views. This topic has attracted extensive attention in the research community. A comprehensive survey and evaluation of dense two-view stereo matching algorithms can be found in [17].

In this work, depth is estimated with the block matching algorithm proposed in [18]. This block matching technique is a one-pass stereo matching algorithm that slides a sum of absolute differences (SAD) window between pixels in the left image and pixels in the right image. An example of a depth image is shown in Figure 2.

Figure 2. Color image and depth image.

2.2. Foreground Segmentation and Shadow Cancellation

Our method performs foreground segmentation to speed up the process of object tracking. There are many foreground segmentation algorithms, for instance the Gaussian mixture model [19,20]. In our method, we use a simple technique based on absolute differences between the current image and a background image. In some cases, fixed cameras observe the scene, so an image of the background may be available. However, in most cases this background is not readily available. Moreover, the background scene often evolves over time, for example because the lighting conditions change or because objects are added to or removed from the background. Therefore, it is necessary to build the background model dynamically by updating it regularly. This can be accomplished by computing a moving average using the following formula:

$$\mu_t = (1 - \alpha)\,\mu_{t-1} + \alpha\,p_t, \tag{1}$$

where $p_t$ is the pixel value at a given time $t$, $\mu_{t-1}$ is the current average value, and $\alpha$ is called the learning rate; it defines the influence of the current value.

In our method, a color background model is first created by computing a moving average for each channel (R, G and B channels of the color image) of each pixel over the incoming frames (around 10 frames). The decision to define a foreground pixel is simply based on comparing the current frame with the background model, which is then updated. Specifically,

$$F(p) = \begin{cases} 0 & \text{if } |I_c(p) - I_{bg}(p)| < t_H, \\ I_c(p) & \text{otherwise,} \end{cases} \tag{2}$$

where $F(p)$ is the value of pixel $p$ in the foreground image, $|I_c(p) - I_{bg}(p)|$ represents the absolute color difference between the color value $I_c(p)$ of pixel $p$ in the current frame and the color value $I_{bg}(p)$ of pixel $p$ in the background frame for the R, G and B channels, and $t_H$ is a threshold. For each color channel this threshold can be set to $0.3 \cdot I_{bg}(p)$. An example of a foreground image is shown in Figure 3.
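The background update of Equation (1) and the per-pixel decision of Equation (2) can be illustrated with a minimal sketch in plain Python. The function names, the nested-list image layout and the rule that a pixel counts as foreground when any single channel exceeds its threshold are assumptions made for this illustration; the paper does not state how the three channel tests are combined.

```python
from typing import List

Pixel = List[float]        # [R, G, B]
Image = List[List[Pixel]]  # rows of pixels


def update_background(background: Image, frame: Image, alpha: float = 0.1) -> Image:
    """Equation (1), applied per channel: mu_t = (1 - alpha) * mu_{t-1} + alpha * p_t."""
    return [
        [
            [(1.0 - alpha) * mu + alpha * p for mu, p in zip(bg_px, px)]
            for bg_px, px in zip(bg_row, row)
        ]
        for bg_row, row in zip(background, frame)
    ]


def segment_foreground(background: Image, frame: Image, ratio: float = 0.3) -> Image:
    """Equation (2) with a per-channel threshold of ratio * background value.

    Assumption: a pixel is kept as foreground if ANY channel differs from the
    background by at least its threshold; otherwise it is set to zero.
    Note that a zero-valued background channel yields a zero threshold.
    """
    result: Image = []
    for bg_row, row in zip(background, frame):
        out_row: List[Pixel] = []
        for bg_px, px in zip(bg_row, row):
            is_foreground = any(abs(c - b) >= ratio * b for c, b in zip(px, bg_px))
            out_row.append(list(px) if is_foreground else [0, 0, 0])
        result.append(out_row)
    return result
```

In a loop over incoming frames, one would typically call `segment_foreground` first and `update_background` afterwards, so that the current frame is compared against a model built only from earlier frames.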
However, the segmented foreground image includes noise caused by shadows, because shadow regions are segmented as parts of the moving objects. Shadow detection and removal are therefore used to refine the foreground. To avoid the effects of shadows, the shadow detection method described in [21] is employed; please refer to [21] for more detail. The result of the shadow detection is shown in Figure 4.

Figure 3. Foreground segmentation. (a) input image; (b) segmented foreground image.

Figure 4. Shadow regions detection. (a) segmented foreground image; (b) shadow detection (the blue pixels are the detected shadow).

2.3. Blobs Extraction and Blobs' Information Store

First, we extract the blobs of objects from the segmented foreground image. Blob extraction is performed on the foreground binary image by connected component labeling using the cvBlobsLib library [22]. The foreground binary image can be obtained by a simple threshold operation followed by erosion and dilation of the segmented foreground image. cvBlobsLib provides two basic functionalities: extracting 8-connected components, referred to as blobs, in binary or grayscale images using Chang's contour tracing algorithm [23], and filtering the obtained blobs to get the objects of interest in the image. In our method, we remove any blob whose area is smaller than 100 pixels.

For every frame, after extracting blobs, information about each blob is stored in a structured record for later processing steps. The blob information includes the total number of blobs $NB$, the blob's center coordinates $(x, y)$, the blob area $BA$, and the average depth value of the blob $Z$. Blobs are also given a temporary identification $ID$.

We define two kinds of distance: the distance between blob $i$ and blob $j$ in the same frame $t$, and the distance between blob $i$ of frame $t$ and blob $j$ of frame $t-1$.

The distance $d_{t,t}(i,j)$ between blob $i$ and blob $j$ in the same frame $t$ is computed by:

$$d_{t,t}(i,j) = \sqrt{(x_i^t - x_j^t)^2 + (y_i^t - y_j^t)^2}, \tag{3}$$

where $(x_i^t, y_i^t)$ and $(x_j^t, y_j^t)$ are the center coordinates of blob $i$ and blob $j$ at frame $t$, respectively.

Similarly, the distance $D_{t,t-1}(i,j)$ between blob $i$ of frame $t$ and blob $j$ of frame $t-1$ is calculated by:

$$D_{t,t-1}(i,j) = \sqrt{(x_i^t - x_j^{t-1})^2 + (y_i^t - y_j^{t-1})^2}, \tag{4}$$

where $(x_i^t, y_i^t)$ and $(x_j^{t-1}, y_j^{t-1})$ are the center coordinates of blob $i$ at frame $t$ and blob $j$ at frame $t-1$, correspondingly.

2.4. Occlusion Detection

We detect occlusion in the current frame according to the blob information at the current frame $t$ and the previous frame $t-1$. Detection is based on two clues. The first clue is the shortest distance between blobs within frame $t-1$, and the second is the difference between the number of blobs at frame $t$ and at frame $t-1$.

First, we find the shortest distance $d_{t-1,t-1}(i,j)$ between blobs in frame $t-1$, assuming that it occurs between blob $m$ and blob $n$, i.e. $d^{min}_{t-1,t-1}(n,m) = \min_{i,j} d_{t-1,t-1}(i,j)$. We define an occlusion flag $f_{occ\_start}$. This flag takes the value $t$ if occlusion is found at frame $t$, and $-1$ otherwise. Specifically,

$$f_{occ\_start} = \begin{cases} t & \text{if } d^{min}_{t-1,t-1}(n,m) < d_{threshold} \text{ and } NB_t < NB_{t-1}, \\ -1 & \text{otherwise,} \end{cases} \tag{5}$$

where $NB_{t-1}$ and $NB_t$ are the total numbers of blobs in frame $t-1$ and frame $t$, respectively, and $d_{threshold}$ is the threshold on the distance between blobs in the same frame.

Similarly, we also detect when the occlusion terminates. The end of occlusion is checked based on the shortest distance between blobs at the current frame and the difference between the number of blobs at the current frame $t$ and the previous frame $t-1$.
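As an illustration of the occlusion-start test of Equation (5), the following sketch computes the shortest same-frame centroid distance of Equation (3) and combines it with the blob-count comparison. The direction of the two comparisons (blobs closer than the threshold, fewer blobs than in the previous frame) and the sentinel value -1 are assumptions inferred from the surrounding description, and all function names are hypothetical.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]  # blob center (x, y)


def blob_distance(a: Point, b: Point) -> float:
    """Euclidean distance between two blob centers, as in Equation (3)."""
    return math.hypot(a[0] - b[0], a[1] - b[1])


def min_pairwise_distance(centers: Sequence[Point]) -> float:
    """Shortest distance between any two blobs of one frame (inf if fewer than two)."""
    best = math.inf
    for i in range(len(centers)):
        for j in range(i + 1, len(centers)):
            best = min(best, blob_distance(centers[i], centers[j]))
    return best


def occlusion_start_flag(prev_centers: Sequence[Point],
                         curr_centers: Sequence[Point],
                         t: int,
                         d_threshold: float) -> int:
    """Return t if an occlusion is assumed to start at frame t, otherwise -1.

    Assumed reading of Equation (5): two blobs were close in frame t-1
    and the number of blobs dropped in frame t (they merged).
    """
    close_before = min_pairwise_distance(prev_centers) < d_threshold
    fewer_blobs_now = len(curr_centers) < len(prev_centers)
    return t if (close_before and fewer_blobs_now) else -1
```

The end-of-occlusion test can be sketched in the same way by measuring the shortest distance in the current frame and checking that the number of blobs has increased.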
We define the end-of-occlusion flag $f_{occ\_end}$ as follows:

$$f_{occ\_end} = \begin{cases} t & \text{if } d^{min}_{t,t}(n,m) < d_{threshold} \text{ and } NB_t > NB_{t-1}, \\ -1 & \text{otherwise.} \end{cases} \tag{6}$$

2.5. Object Tracking

According to the result of occlusion detection, object tracking can be divided into two types: tracking objects without occlusion and tracking objects under occlusion.

2.5.1. Tracking Objects without Occlusion

The correspondence of video objects under non-occlusion is obtained through the shortest distance $D_{t,t-1}(i,j)$ between blobs in the previous frame and blobs in the current frame. This distance is calculated by Equation (4). For instance, once a foreground blob $B_t^m$ at frame $t$ finds its corresponding blob $B_{t-1}^n$ in frame $t-1$, its label or identification (ID) is updated to the ID of blob $B_{t-1}^n$. Specifically,

$$ID(B_t^m) = ID(B_{t-1}^n) \quad \text{if } D(B_t^m, B_{t-1}^n) = \min_{j} D(B_t^m, B_{t-1}^j),\ j = 1, 2, \ldots, NB_{t-1},\ \text{and } D(B_t^m, B_{t-1}^n) < D_{thres}, \tag{7}$$

where $B_k^j$ denotes blob $j$ at frame $k$, $NB_{t-1}$ is the number of blobs in frame $t-1$, and $D_{thres}$ is a distance threshold.

2.5.2. Tracking Objects under Occlusion

The main idea of our tracking method is that the object label or identification (ID) is kept constant during occlusion and after the objects switch their positions.

When occlusion occurs, we can detect and extract the occlusion region. We can also detect and separately extract a list of objects that were not occluded in the previous frame but overlap each other in the current frame.

In order to track the objects under occlusion, depth information is used to analyze the occlusion situation. First, the occluded regions are projected onto the ground plane XZ according to their horizontal position and their depth gray level (more detail is given in the next subsection). Then, according to the XZ plane, the occluded objects can be divided into two types based on their depth ranges: 1) in different depth layers or 2) in the same depth layer.
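The projection onto the XZ plane can be pictured as a two-dimensional count of foreground pixels per column and depth gray level. The sketch below is one possible reading under stated assumptions: images are nested lists, `min_count` is a hypothetical noise threshold on the per-cell count, and the grouping of occupied depth values into layers by a `gap` parameter is a simplified one-dimensional stand-in for the connected component analysis that the paper performs on the projected points.

```python
from typing import Dict, List, Sequence, Tuple


def project_to_xz(depth: Sequence[Sequence[int]],
                  mask: Sequence[Sequence[int]],
                  min_count: int = 1) -> Dict[Tuple[int, int], int]:
    """Count, for every (x, z), the foreground pixels in column x with depth gray level z.

    Cells whose count is below min_count are discarded as noise.
    """
    counts: Dict[Tuple[int, int], int] = {}
    for depth_row, mask_row in zip(depth, mask):
        for x, (z, is_fg) in enumerate(zip(depth_row, mask_row)):
            if is_fg:
                counts[(x, z)] = counts.get((x, z), 0) + 1
    return {cell: n for cell, n in counts.items() if n >= min_count}


def depth_layers(projection: Dict[Tuple[int, int], int], gap: int = 1) -> List[Tuple[int, int]]:
    """Merge occupied depth values that are at most `gap` apart into (z_min, z_max) ranges."""
    layers: List[Tuple[int, int]] = []
    for z in sorted({z for (_, z) in projection}):
        if layers and z - layers[-1][1] <= gap:
            layers[-1] = (layers[-1][0], z)
        else:
            layers.append((z, z))
    return layers
```

With this simplification, more than one returned range suggests objects in different depth layers, while a single range suggests objects in the same depth layer.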
We deal with these situations in the following parts.

1) Project Occlusion Regions into Ground Plane XZ

Each foreground pixel in the occlusion region has 3D information obtained from the depth map. These pixels are projected onto the ground plane XZ according to their horizontal position and their depth gray level, where the range of X is the width of the depth map and the range of Z is [0, 255]. The projected point, which is located at $(x, z)$, is denoted $p(x,z)$. The value at position $p(x,z)$ of the projection plane is the total number of points at horizontal position $x$ in the depth map that have the same gray level (depth value $z$). Figure 5 illustrates the image plane XY and the ground plane XZ.

Figure 5. The image plane XY and ground plane XZ.

In order to remove noisy points, the point $p(x,z)$ is discarded if its value is less than a threshold $T_1$. We then also apply morphological operations (dilation and erosion) to remove noisy points and connect nearby points. The remaining points in the XZ plane are grouped into blobs by connected component analysis using the cvBlobsLib library [22]. If a projected blob is smaller than a threshold $T_2$, it is considered noise and removed. The projected blobs are defined as $PB_j$, $j = 1, 2, \ldots, m$, where $m$ is the total number of projected blobs. Each projected blob $PB_j$ is marked as an object region. Figure 6 shows an example of projected blobs in the XZ plane.

Figure 6. Projected foreground blobs in XZ plane. (a) input image; (b) foreground blob (occlusion region); (c) occlusion region (depth image); (d) projected blobs in XZ plane.

As mentioned before, according to the projected blobs in the XZ plane, the occluded objects can be divided into two types based on their depth ranges: 1) in different depth layers or 2) in one depth layer.

2) Tracking Occluded Objects in Different Depth Layers

Figure 6 shows a case in which the occluded objects are in different depth layers. Once occluded objects have different depth layers, they can be segmented in the color image by means of their depth ranges. An example of color image segmentation by means of depth ranges is shown in Figure 7.

Figure 7. An example of image segmentation by means of depth ranges. (a) input image; (b) foreground blob (occlusion region); (c) occlusion region (depth image); (d) projected blobs in XZ plane (occluded objects in different depth layers); (e) segmented object based on depth layer 1 (red color in d); (f) segmented object based on depth layer 2 (blue color in d).

In our method, object correspondence under different layers is based on the Bhattacharyya distance [24] between color histograms. In statistics, the Bhattacharyya distance measures the similarity of two probability distributions. In our case, it represents the similarity between two normalized histograms. The Bhattacharyya distance is calculated by:

$$BD(p, q) = \sqrt{1 - \sum_{b=1}^{N} \sqrt{p_b\, q_b}}, \tag{8}$$

where $BD$ denotes the Bhattacharyya distance, $p$ and $q$ are the two normalized color histograms, and $N$ is the number of bins in the histogram.

Let $O^k = \{O_1^k, O_2^k, \ldots, O_m^k\}$ be the occluded objects at frame $k$, and let $U^n = \{U_1^n, U_2^n, \ldots, U_m^n\}$ denote the existing non-occluded objects in the previous frame $n$ (the frame before occlusion is found). The color histograms of each occluded object and each existing non-occluded object are $H_{O_i^k}$ and $H_{U_j^n}$, respectively. In this paper, color histograms are created from the hue component of the HSV color space. Figure 8 shows an example of the color histogram.

Figure 8. An example of color histogram (hue against frequency). (a) color image; (b) color histogram.

For each occluded object $O_i^k$, we calculate the Bhattacharyya distance $BD(O_i^k, U_j^n)$ between the color histogram of this object and the color histogram of every object $U_j^n$ in $U^n$, and then find the shortest distance $BD_{min}$.
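The histogram matching described above can be sketched as follows. The distance is written in the form sqrt(1 - sum(sqrt(p_b * q_b))), which is an assumption about the exact variant used; the helper names and the dictionary-based interface are likewise hypothetical and chosen only for illustration.

```python
import math
from typing import Dict, Hashable, List, Sequence


def normalize(hist: Sequence[float]) -> List[float]:
    """Scale a histogram so that its bins sum to 1."""
    total = float(sum(hist))
    if total <= 0.0:
        raise ValueError("histogram must contain at least one count")
    return [h / total for h in hist]


def bhattacharyya_distance(p: Sequence[float], q: Sequence[float]) -> float:
    """Assumed form: sqrt(1 - sum_b sqrt(p_b * q_b)) for two normalized histograms."""
    coefficient = sum(math.sqrt(a * b) for a, b in zip(p, q))
    return math.sqrt(max(0.0, 1.0 - coefficient))  # clamp guards against rounding


def match_ids(occluded: Dict[Hashable, Sequence[float]],
              tracks: Dict[int, Sequence[float]]) -> Dict[Hashable, int]:
    """Give each occluded object the ID of the track with the smallest distance."""
    norm_tracks = {tid: normalize(h) for tid, h in tracks.items()}
    result: Dict[Hashable, int] = {}
    for name, hist in occluded.items():
        p = normalize(hist)
        result[name] = min(norm_tracks,
                           key=lambda tid: bhattacharyya_distance(p, norm_tracks[tid]))
    return result
```

This greedy rule matches each occluded object independently, so two objects could in principle receive the same ID; whether the original method resolves such ties is not stated in the text.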
The Bhattacharyya distance $BD(O_i^k, U_j^n)$ is computed according to Equation (8), specifically:

$$BD(O_i^k, U_j^n) = \sqrt{1 - \sum_{b=1}^{N} \sqrt{H_{O_i^k}(b)\, H_{U_j^n}(b)}}. \tag{9}$$

The occluded object $O_i^k$ updates its ID according to $U_j^n$ if $BD(O_i^k, U_j^n) = BD_{min}$. Figure 9 shows the numeric results of calculating the Bhattacharyya distance between two histograms. Figure 10 illustrates an example of tracking occluded objects in different depth layers.

Figure 9. Bhattacharyya distance between two histograms. (a) input frame (having occluded objects); (b) segmented object 1 in input frame (O1); (c) segmented object 2 in input frame (O2); (d) previous frame (before occlusion happened); (b1) histogram of object O1 in (b); (c1) histogram of object O2 in (c); (e) object 1 before occlusion happened (U1); (e1) histogram of object U1 in (e), with distances of 0.3288 and 0.4106 to the histograms of the two occluded objects; (f) object 2 before occlusion happened (U2); (f1) histogram of object U2 in (f), with distances of 0.4253 and 0.3367 to the histograms of the two occluded objects.

Figure 10. Tracking occluded objects in different depth layers. (a) input frame (having occluded objects); (b) output (tracking occluded objects in different layers).

3) Tracking Occluded Objects in One Depth Layer

Figure 11 shows an example of occluded objects in a similar depth layer.

Figure 11. An example of tracking occluded objects in one depth layer. (a) input frame (having occluded objects); (b) occlusion region (depth image); (c) projected blobs in XZ plane (occluded objects in one depth layer).

When the occluded objects have a similar depth range, or under full occlusion, it is difficult to segment and track multiple objects with the above technique. To deal with this problem, we propose a tracking method based on the camshift (Continuously Adaptive Mean Shift) algorithm [25], as described below.

Assume that there are $m$ occluded objects $O_i^k$ ($i = 1, 2, \ldots, m$) in the occlusion region $R_{occ}^k$, and that their existing corresponding tracks in the previous frame are $U_j^{k-1}$. Our algorithm has the following steps:

1) Pre-compute the color histogram $H_U$ for every existing track $U_j^{k-1}$, and the color histogram for the occlusion region. Here we calculate a hue histogram from the HSV color space.
2) Based on the average depth values, sort the list of objects $U_j^{k-1}$ so that the object with the biggest depth value, $U_{foremost}^{k-1}$ (i.e. the shortest distance from the camera), goes first.
3) Calculate a back projection of the hue plane of the occlusion region $R_{occ}^k$ using the pre-computed histogram of $U_{foremost}^{k-1}$. Based on the back projection image, find in $R_{occ}^k$ the object corresponding to $U_{foremost}^{k-1}$ using the camshift algorithm. Label it as $O_{foremost}^k$, with its ID set according to the ID of $U_{foremost}^{k-1}$. Figure 12 shows an example of a projection image.
4) Remove $O_{foremost}^k$ from $R_{occ}^k$. Select the next track in the sorted $U_j^{k-1}$ list and run step (3) to find the next $O^k$ in $R_{occ}^k$.
5) Repeat step (4) until all objects $O^k$ in $R_{occ}^k$ have found their corresponding track.

Figure 12. An example of projection image. (a) previous frame (before occlusion happened); (b1) object 1 before occlusion happened (with bigger depth); (b2) object 2 before occlusion happened; (c) histogram of object 1 in (b1); (d) input frame (having occluded objects); (e) occluded objects in input frame; (f) projection image of the occluded region in the input frame using the pre-computed histogram in (c).

Figure 13 demonstrates a result of tracking occluded objects in one depth layer.
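Steps 1) to 3) above rely on a hue histogram, a depth ordering of the tracks and a back projection image. The sketch below illustrates only these three building blocks in plain Python; the camshift window search itself is not reproduced. The hue range of 180 values, the bin count, the peak normalization and all function names are assumptions made for the illustration.

```python
from typing import Dict, List, Sequence


def hue_histogram(hues: Sequence[int], bins: int = 18, hue_range: int = 180) -> List[float]:
    """Histogram of hue values in [0, hue_range), scaled so that the largest bin is 1.0."""
    hist = [0.0] * bins
    for h in hues:
        hist[h * bins // hue_range] += 1.0
    peak = max(hist)
    return [v / peak for v in hist] if peak > 0 else hist


def back_project(hue_image: Sequence[Sequence[int]],
                 hist: Sequence[float],
                 hue_range: int = 180) -> List[List[float]]:
    """Replace every hue by the histogram value of its bin (a per-pixel likelihood map)."""
    bins = len(hist)
    return [[hist[h * bins // hue_range] for h in row] for row in hue_image]


def order_tracks_foremost_first(avg_depth: Dict[int, float]) -> List[int]:
    """Sort track IDs so that the largest average depth gray value (nearest object) comes first."""
    return sorted(avg_depth, key=lambda track_id: avg_depth[track_id], reverse=True)
```

A search such as camshift would then be run on the map returned by `back_project` for the first track in the ordered list, the matched pixels would be removed from the occlusion region, and the procedure would continue with the next track.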
In practice, when projecting partly occluded objects or fully occluded objects with similar depth ranges onto the XZ plane, we obtain only one blob/region in the XZ plane. When the objects in a similar depth range are partly occluded, the above algorithm works well (see Figure 13). An object that is fully occluded in the current frame $t$ will reappear as a partially occluded object behind its occluder in a later frame, so it will then be tracked by our method (see the example in Figure 14).

Figure 13. An example of tracking occluded objects in one depth layer. (a) input frame (having occluded objects); (b) output (tracking occluded objects in different layers).

Figure 14. Tracking fully occluded objects. "Room sequence": from left to right, the frame indexes are 67, 72, 73 and 74, respectively.

3. Experimental Results

In this section, we show experimental results to evaluate the proposed tracking method. We evaluate the tracking performance by the capability of detecting foreground objects and keeping their ID constant during the occlusion and after the occlusion is over.

The proposed tracking method has been tested on several video sequences. The input of our method is a pair of video sequences, and the output is the left video sequence, in which the moving objects are labeled with their ID and marked with bounding boxes of different colors.

Figure 15 shows results of tracking non-occluded objects. In example 15(a), the objects appear one after another in the first three frames (left to right), and in the fourth frame one of the objects has left the scene. These results illustrate the ability of our algorithm to detect objects and to assign and maintain their ID.

Figure 15. Result of tracking non-occluded objects. (a) tracking of non-occluded objects in "Gym sequence" (from left to right, the frame numbers are 34, 42, 60 and 201); (b) tracking of non-occluded objects in "Room sequence" (from left to right, the frame numbers are 0, 19, 113 and 250, respectively).

We demonstrate the result of tracking occluded objects in different depth layers in Figure 16. Our proposed method can successfully detect objects under partial occlusion. All objects keep a constant label over time, even while they move in a variety of poses and positions.

Figure 16. Tracking occluded objects in different depth layers. (a) "Gym sequence": from left to right, the frame indexes are 65, 73, 87 and 145, respectively; (b) "Room sequence": from left to right, the frame indexes are 127, 129, 132 and 135, respectively.

Tracking of occluded objects in a similar depth layer is shown in Figure 17. These examples show that the proposed algorithm can detect and track a partially occluded object as long as the visible part is equivalent to at least one half of a human body.

Figure 17. Tracking occluded objects in one depth layer. (a) "Gym sequence": from left to right, the frame indexes are 206, 207, 208 and 209, respectively; (b) "Room sequence": from left to right, the frame indexes are 236, 237, 238 and 239, respectively.

Figure 14 shows a case in which an object is fully occluded and in a similar depth layer to its occluder, so our system cannot detect it. However, when this object later reappears as a partially occluded object, the system can detect it and maintain its ID. This example demonstrates the capability of the proposed algorithm to maintain a constant label for an object over the video sequence.

4. Conclusions

In this paper, we have presented a novel tracking method that aims at detecting objects and maintaining their ID over time. The key factor of this method is the use of depth information to track objects under various occlusion scenarios. Different object tracking strategies are applied
according to the occlusion situation, including finding corresponding objects based on the Bhattacharyya distance between two histograms and using a camshift-based algorithm with the help of object depth ordering. The experimental results have confirmed the capability of our proposed object tracking algorithm under the most typical and challenging occlusion scenarios.

However, the proposed algorithm can work only in an indoor or medium-sized environment, since the reliability of depth information diminishes with the distance from the camera, and only when the moving velocity of the objects is slow. In future work, to construct a robust moving object tracking system for both indoor and outdoor environments, we will study the use of more object features to classify and track the objects.

REFERENCES

[1] D. Koller, K. Daniilidis and H.-H. Nagel, "Model-Based Object Tracking in Monocular Image Sequences of Road Traffic Scenes," International Journal of Computer Vision, Vol. 10, No. 3, 1993, pp. 257-281. doi:10.1007/BF01539538
[2] T. Hai, H. S. Sawhney and R. Kumar, "Object Tracking with Bayesian Estimation of Dynamic Layer Representations," IEEE Transactions on Pattern Analysis and Machine Intelligence, Vol. 24, No. 1, 2002, pp. 75-89. doi:10.1109/34.982885
[3] A. D. Jepson, D. J. Fleet and T. F. El-Maraghi, "Robust Online Appearance Models for Visual Tracking," IEEE Transactions on Pattern Analysis and Machine Intelligence, Vol. 25, No. 10, 2003, pp. 1296-1311. doi:10.1109/TPAMI.2003.1233903
[4] D. Comaniciu, V. Ramesh and P. Meer, "Kernel-Based Object Tracking," IEEE Transactions on Pattern Analysis and Machine Intelligence, Vol. 25, No. 5, 2003, pp. 564-577. doi:10.1109/TPAMI.2003.1195991
[5] J. Shi and C. Tomasi, "Good Features to Track," Proceedings IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, 21-23 June 1994, pp. 593-600.
[6] Y. Rui and Y. Chen, "Better Proposal Distributions: Object Tracking Using Unscented Particle Filter," Proceedings of the Computer Vision and Pattern Recognition, Vol. 2, 2001, pp. 786-793.
[7] M. Isard and A. Blake, "CONDENSATION—Conditional Density Propagation for Visual Tracking," International Journal of Computer Vision, Vol. 29, No. 1, 1998, pp. 5-28. doi:10.1023/A:1008078328650
[8] D. Beymer, P. McLauchlan, B. Coifman and J. Malik, "A Real-Time Computer Vision System for Measuring Traffic Parameters," IEEE Computer Society Conference on Computer Vision and Pattern Recognition, San Juan, 17-19 June 1997, pp. 495-501.
[9] N. K. Paragios and R. Deriche, "A PDE-Based Level-Set Approach for Detection and Tracking of Moving Objects," The Sixth International Conference on Computer Vision, Bombay, 4-7 January 1998, pp. 1139-1145.
[10] Z. Tao, R. Nevatia and W. Bo, "Segmentation and Tracking of Multiple Humans in Crowded Environments," IEEE Transactions on Pattern Analysis and Machine Intelligence, Vol. 30, No. 7, 2008, pp. 1198-1211. doi:10.1109/TPAMI.2007.70770
[11] X. Zhou, Y. F. Li and B. He, "Game-Theoretical Occlusion Handling for Multi-Target Visual Tracking," Pattern Recognition, Vol. 46, No. 10, 2013, pp. 2670-2684. doi:10.1016/j.patcog.2013.02.013
[12] V. Papadourakis and A. Argyros, "Multiple Objects Tracking in the Presence of Long-Term Occlusions," Computer Vision and Image Understanding, Vol. 114, No. 7, 2010, pp. 835-846. doi:10.1016/j.cviu.2010.02.003
[13] M. Wu, X. Peng, Q. Zhang and R. Zhao, "Segmenting and Tracking Multiple Objects under Occlusion Using Multi-Label Graph Cut," Computers & Electrical Engineering, Vol. 36, No. 5, 2010, pp. 927-934. doi:10.1016/j.compeleceng.2009.12.013
[14] E. Parvizi and Q. M. J. Wu, "Multiple Object Tracking Based on Adaptive Depth Segmentation," Canadian Conference on Computer and Robot Vision, Windsor, 28-30 May 2008, pp. 273-277.
[15] S. J. Krotosky and M. M. Trivedi, "On Color-, Infrared-, and Multimodal-Stereo Approaches to Pedestrian Detection," IEEE Transactions on Intelligent Transportation Systems, Vol. 8, No. 4, 2007, pp. 619-629. doi:10.1109/TITS.2007.908722
[16] R. Okada, Y. Shirai and J. Miura, "Object Tracking Based on Optical Flow and Depth," International Conference on Multisensor Fusion and Integration for Intelligent Systems (IEEE/SICE/RSJ), Washington DC, 8-11 December 1996, pp. 565-571.
[17] D. Scharstein and R. Szeliski, "A Taxonomy and Evaluation of Dense Two-Frame Stereo Correspondence Algorithms," International Journal of Computer Vision, Vol. 47, No. 1-3, 2002, pp. 7-42. doi:10.1023/A:1014573219977
[18] K. Konolige, "Small Vision Systems: Hardware and Implementation," In: Y. Shirai and S. Hirose, Eds., Robotics Research, Springer, London, 1998, pp. 203-212.
[19] Z. Zivkovic, "Improved Adaptive Gaussian Mixture Model for Background Subtraction," Proceedings of the 17th International Conference on Pattern Recognition, Vol. 2, 2004, pp. 28-31.
[20] P. KaewTraKulPong and R. Bowden, "An Improved Adaptive Background Mixture Model for Real-Time Tracking with Shadow Detection," In: P. Remagnino, G. Jones, N. Paragios and C. Regazzoni, Eds., Video-Based Surveillance Systems, Springer, Berlin, 2002, pp. 135-144.
[21] A. Prati, I. Mikic, M. M. Trivedi and R. Cucchiara, "Detecting Moving Shadows: Algorithms and Evaluation," IEEE Transactions on Pattern Analysis and Machine Intelligence, Vol. 25, No. 7, 2003, pp. 918-923. doi:10.1109/TPAMI.2003.1206520
[22] "OpenCV Wiki, cvBlobsLib," 2011. http://opencv.willowgarage.com/wiki/cvBlobsLib
[23] F. Chang, C.-J. Chen and C.-J. Lu, "A Linear-Time Component-Labeling Algorithm Using Contour Tracing Technique," Computer Vision and Image Understanding, Vol. 93, No. 2, 2004, pp. 206-220. doi:10.1016/j.cviu.2003.09.002
[24] A. Bhattacharyya, "On a Measure of Divergence between Two Statistical Populations Defined by Their Probability Distributions," Bulletin of the Calcutta Mathematical Society, Vol. 35, 1943, pp. 99-109.
[25] G. R. Bradski, "Real Time Face and Object Tracking as a Component of a Perceptual User Interface," The Fourth IEEE Workshop on Applications of Computer Vision, Princeton, 19-21 October 1998, pp. 214-219.

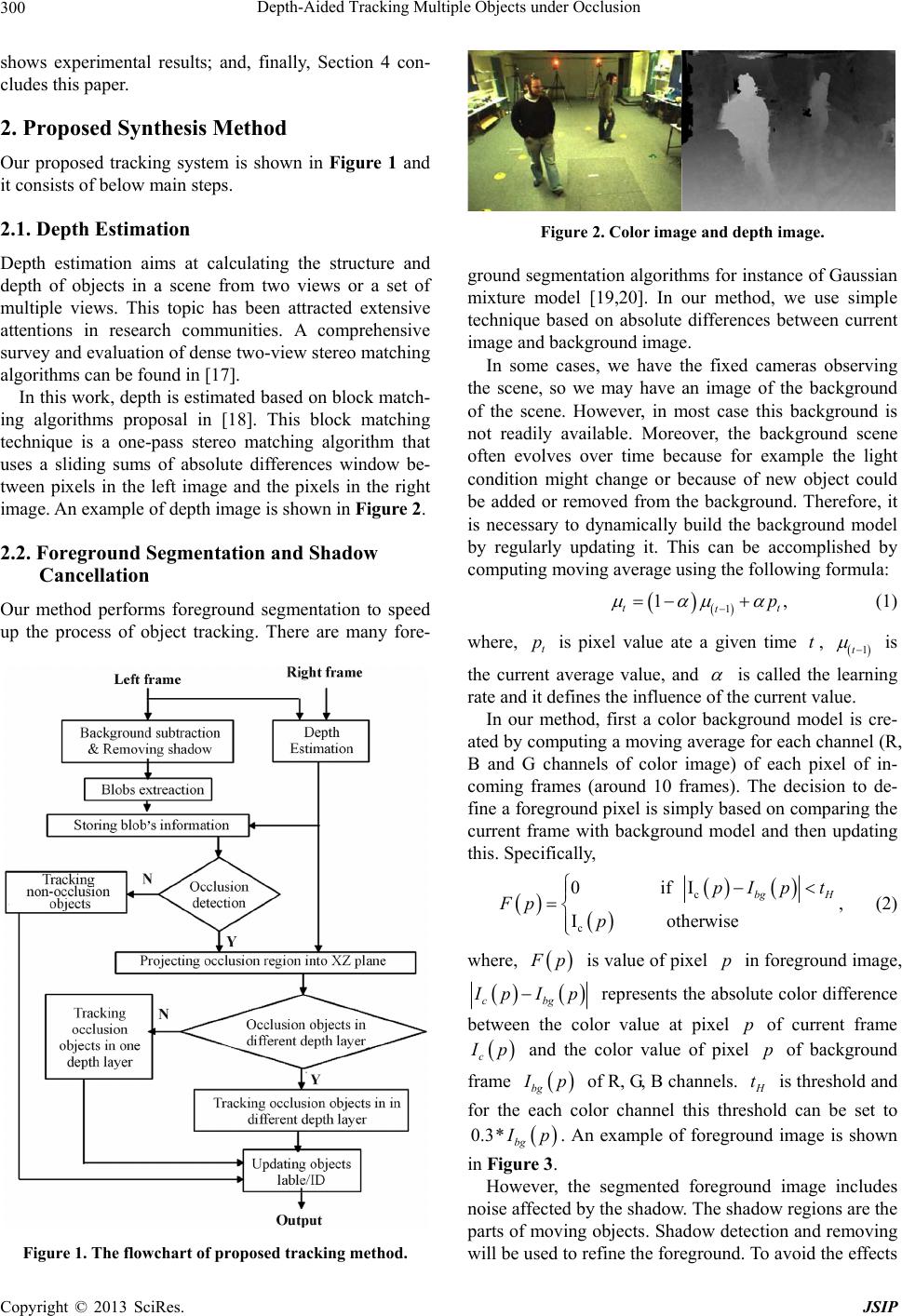

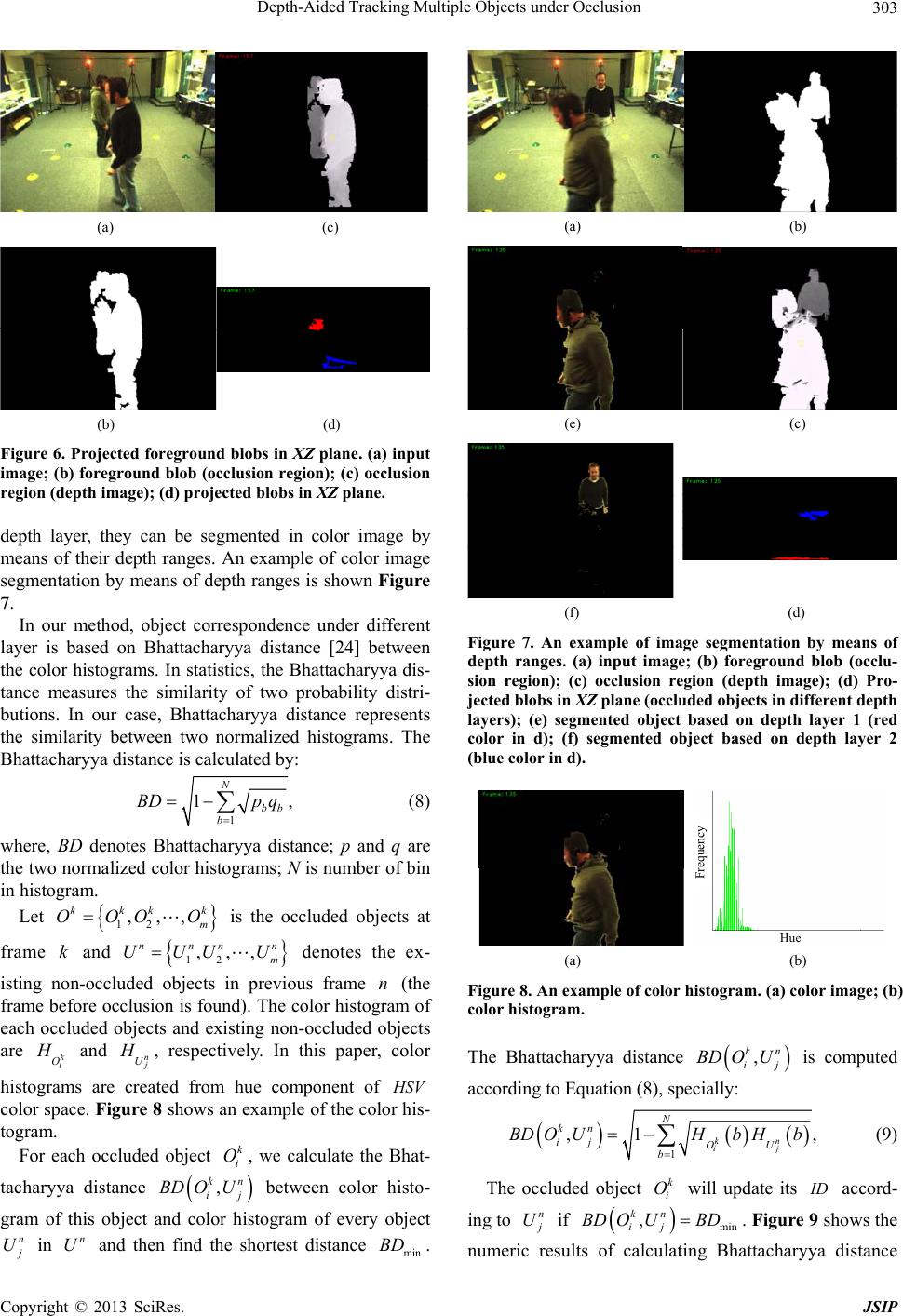

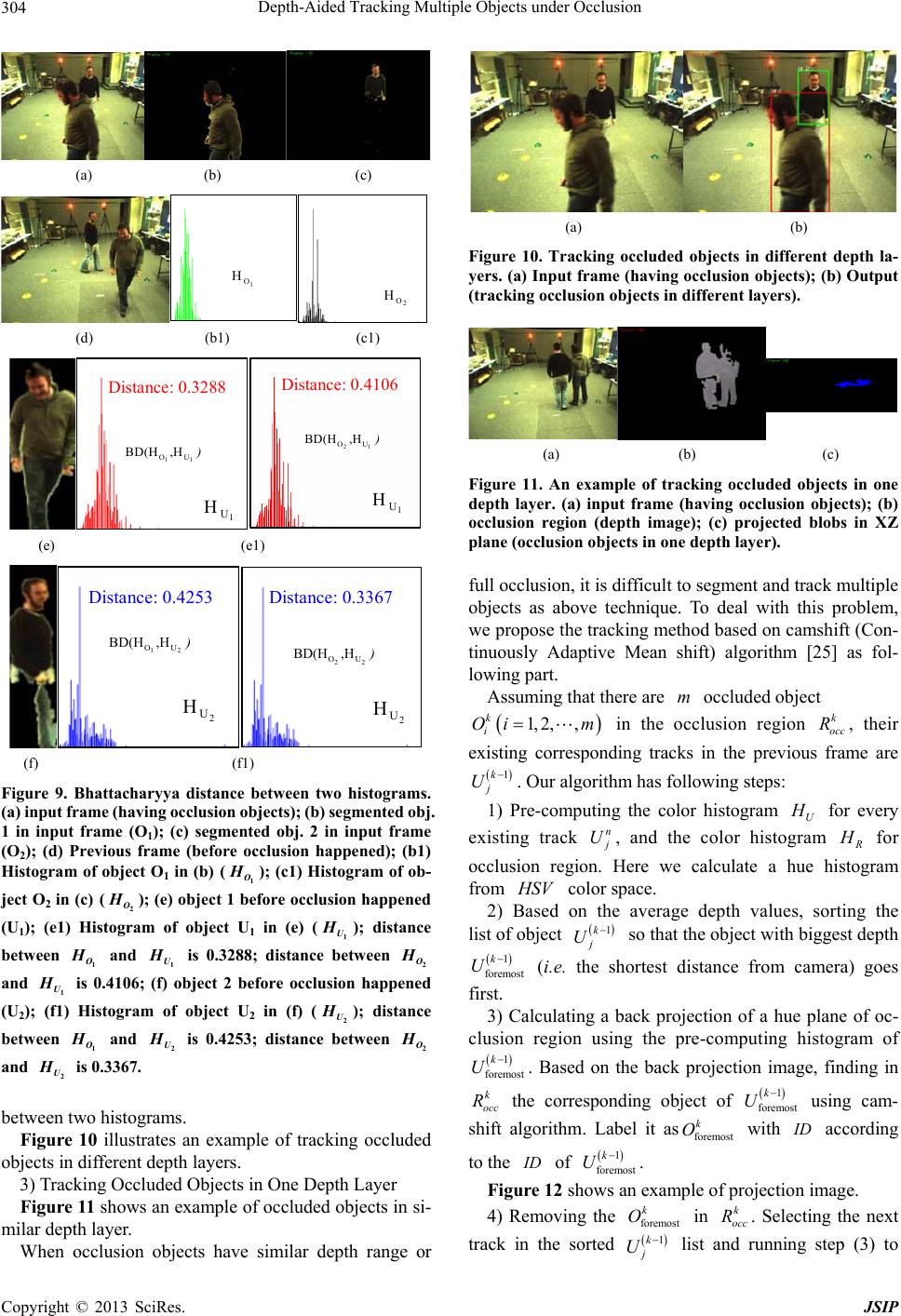

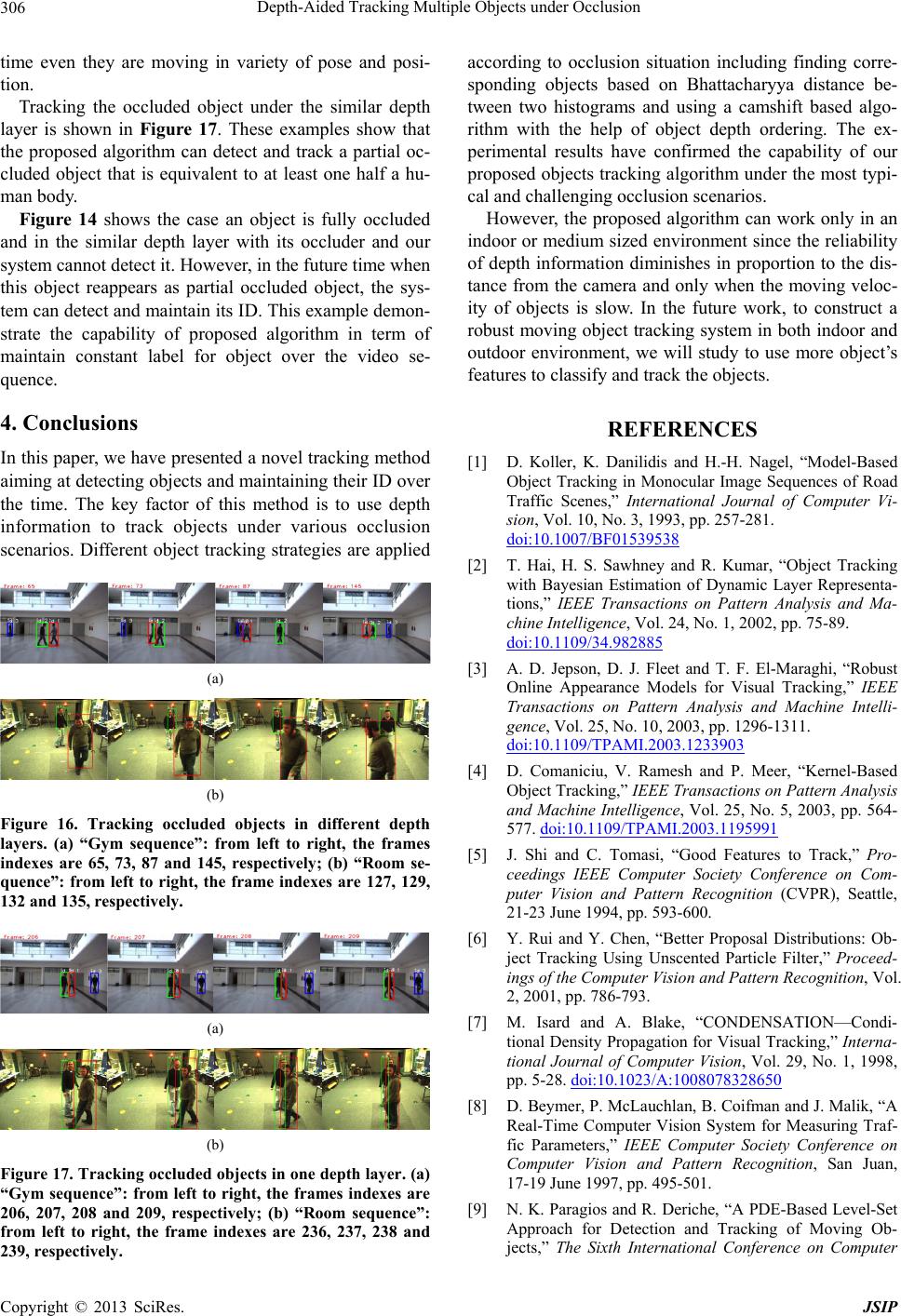
