S. H. LUO ET AL.

Copyright © 2013 SciRes. ENG

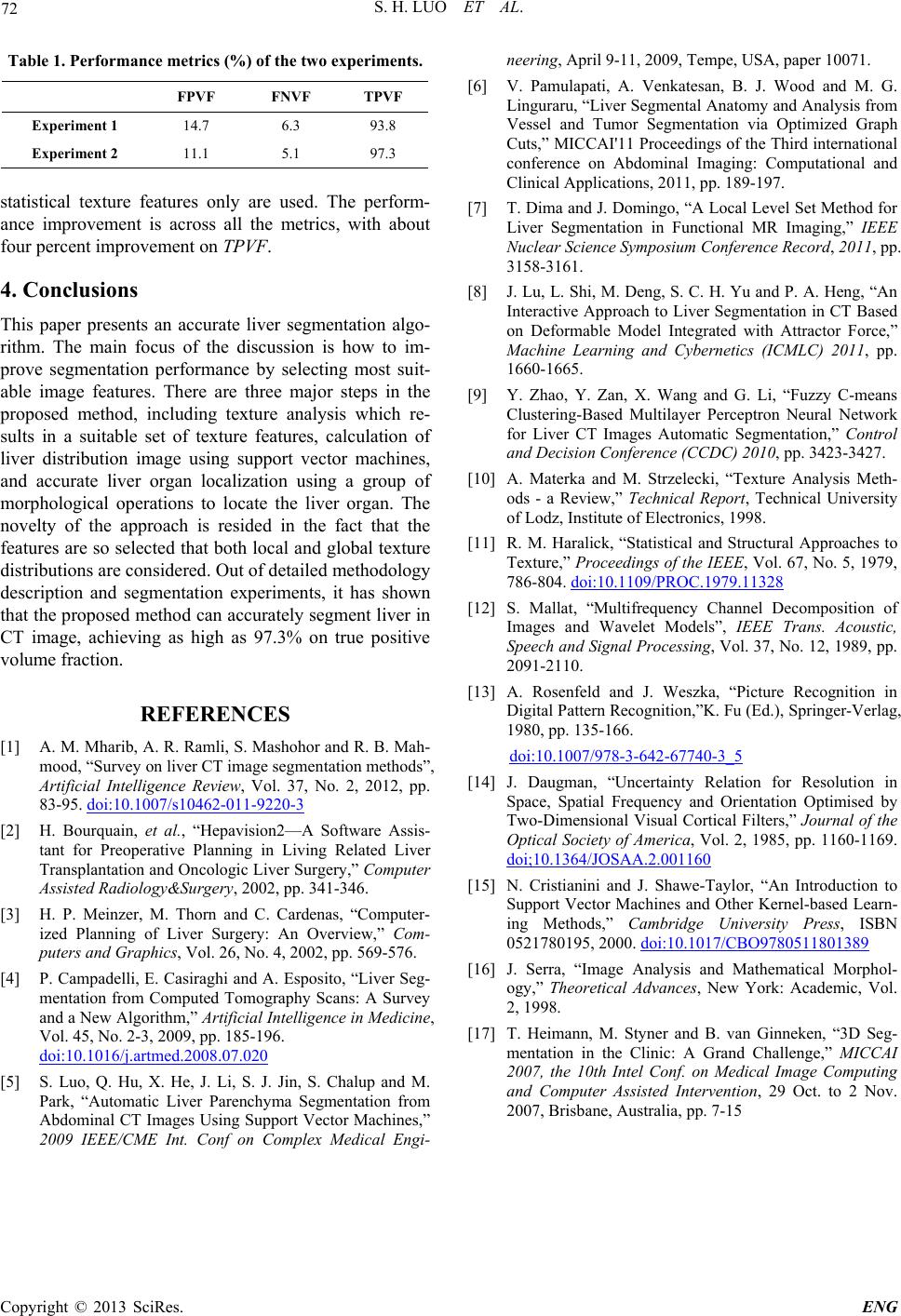

Table 1. Performance metrics (%) of the two experiments.

|              | FPVF | FNVF | TPVF |
|--------------|------|------|------|
| Experiment 1 | 14.7 | 6.3  | 93.8 |
| Experiment 2 | 11.1 | 5.1  | 97.3 |

statistical texture features only are used. The improvement holds across all the metrics, with TPVF rising by about 3.5 percentage points (from 93.8% to 97.3%).
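To make the three metrics concrete, the following is a minimal sketch of how they could be computed from a segmentation mask and a ground-truth mask. It assumes flattened binary voxel masks and normalizes all three fractions by the ground-truth liver volume; this normalization, the function name `volume_fractions`, and the toy data are assumptions for illustration, and the definitions used in the experiments may differ (FPVF in particular is sometimes normalized by the background volume instead).

```python
def volume_fractions(segmented, truth):
    """Return (FPVF, FNVF, TPVF) in percent for two equal-length binary masks.

    Assumption: every fraction is normalized by the ground-truth volume.
    """
    if len(segmented) != len(truth):
        raise ValueError("masks must have the same number of voxels")

    # Count voxels in each agreement category.
    tp = sum(1 for s, t in zip(segmented, truth) if s and t)      # liver, found
    fp = sum(1 for s, t in zip(segmented, truth) if s and not t)  # not liver, marked
    fn = sum(1 for s, t in zip(segmented, truth) if t and not s)  # liver, missed

    n_truth = tp + fn
    if n_truth == 0:
        raise ValueError("ground truth contains no liver voxels")

    return (100.0 * fp / n_truth, 100.0 * fn / n_truth, 100.0 * tp / n_truth)


# Toy example: 8 voxels, 4 of which belong to the liver.
truth     = [0, 1, 1, 1, 1, 0, 0, 0]
segmented = [0, 1, 1, 1, 0, 1, 0, 0]

fpvf, fnvf, tpvf = volume_fractions(segmented, truth)
print(f"FPVF={fpvf:.1f}% FNVF={fnvf:.1f}% TPVF={tpvf:.1f}%")
# prints "FPVF=25.0% FNVF=25.0% TPVF=75.0%"
```

In the toy example three of the four liver voxels are recovered, one is missed, and one background voxel is wrongly marked as liver.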

4. Conclusions

This paper presents an accurate liver segmentation algorithm. The discussion focuses on how to improve segmentation performance by selecting the most suitable image features. The proposed method has three major steps: texture analysis, which yields a suitable set of texture features; calculation of a liver distribution image using support vector machines; and accurate localization of the liver organ using a group of morphological operations. The novelty of the approach lies in selecting the features so that both local and global texture distributions are considered. The detailed methodology description and the segmentation experiments show that the proposed method can accurately segment the liver in CT images, achieving a true positive volume fraction as high as 97.3%.
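The third step, localization by morphological operations, can be illustrated with a small sketch. This section does not list the specific operations used, so the choice below (a binary opening with a 4-neighbour structuring element, followed by keeping the largest connected region) is an assumption, as are the function names and the toy 2D mask, which stands in for a thresholded liver distribution image.

```python
from collections import deque

NEIGHBOURS = [(-1, 0), (1, 0), (0, -1), (0, 1)]  # 4-connectivity


def _is_set(mask, r, c):
    """True if (r, c) is inside the mask and set; out-of-bounds counts as unset."""
    return 0 <= r < len(mask) and 0 <= c < len(mask[0]) and mask[r][c] == 1


def erode(mask):
    """Keep a pixel only if it and all of its 4-neighbours are set."""
    return [[1 if _is_set(mask, r, c)
             and all(_is_set(mask, r + dr, c + dc) for dr, dc in NEIGHBOURS) else 0
             for c in range(len(mask[0]))] for r in range(len(mask))]


def dilate(mask):
    """Set a pixel if it or any of its 4-neighbours is set."""
    return [[1 if _is_set(mask, r, c)
             or any(_is_set(mask, r + dr, c + dc) for dr, dc in NEIGHBOURS) else 0
             for c in range(len(mask[0]))] for r in range(len(mask))]


def largest_component(mask):
    """Keep only the largest 4-connected region of set pixels."""
    h, w = len(mask), len(mask[0])
    seen = [[False] * w for _ in range(h)]
    best = []
    for r in range(h):
        for c in range(w):
            if mask[r][c] != 1 or seen[r][c]:
                continue
            # Breadth-first search over one connected region.
            queue, region = deque([(r, c)]), []
            seen[r][c] = True
            while queue:
                y, x = queue.popleft()
                region.append((y, x))
                for dy, dx in NEIGHBOURS:
                    ny, nx = y + dy, x + dx
                    if _is_set(mask, ny, nx) and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            if len(region) > len(best):
                best = region
    out = [[0] * w for _ in range(h)]
    for y, x in best:
        out[y][x] = 1
    return out


def localize(mask):
    """Opening (erode, then dilate) followed by largest-region selection."""
    return largest_component(dilate(erode(mask)))


# Toy mask: a 3x3 blob plus one isolated false-positive pixel at the right edge.
mask = [
    [0, 0, 0, 0, 0, 0, 0],
    [0, 1, 1, 1, 0, 0, 0],
    [0, 1, 1, 1, 0, 0, 1],
    [0, 1, 1, 1, 0, 0, 0],
    [0, 0, 0, 0, 0, 0, 0],
]
result = localize(mask)
for row in result:
    print("".join(str(v) for v in row))
```

On the toy mask the isolated pixel is removed and the blob is kept, although the opening also trims the blob's corners; in practice the structuring element size would have to be tuned to the image resolution.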
