Open Journal of Optimization

Vol.04 No.03(2015), Article ID:59375,4 pages

10.4236/ojop.2015.43009

Solving Ordinary Differential Equations with Evolutionary Algorithms

Bakre Omolara Fatimah1, Wusu Ashiribo Senapon2, Akanbi Moses Adebowale2

1Department of Mathematics, Federal College of Education (Technical), Lagos, Nigeria

2Department of Mathematics, Lagos State University, Lagos, Nigeria

Email: larabakre@yahoo.com, wussy_ash@yahoo.com, akanbima@gmail.com

Copyright © 2015 by authors and Scientific Research Publishing Inc.

This work is licensed under the Creative Commons Attribution International License (CC BY).

http://creativecommons.org/licenses/by/4.0/

Received 2 June 2015; accepted 1 September 2015; published 4 September 2015

ABSTRACT

In this paper, the authors show that the general linear second order ordinary Differential Equation can be formulated as an optimization problem and that evolutionary algorithms for solving optimization problems can also be adapted for solving the formulated problem. The authors propose a polynomial based scheme for achieving the above objectives. The coefficients of the proposed scheme are approximated by an evolutionary algorithm known as Differential Evolution (DE). Numerical examples with good results show the accuracy of the proposed method compared with some existing methods.

Keywords:

Evolutionary Algorithm, Differential Equations, Differential Evolution, Optimization

1. Introduction

For centuries, Differential Equations (DEs) have been an important concept in many branches of science. They arise spontaneously in physics, engineering, chemistry, biology, economics and a lot of fields in between. Many Ordinary Differential Equations (ODEs) have been solved analytically to obtain solutions in a closed form. However, the range of Differential Equations that can be solved by straightforward analytical methods is relatively restricted. In many cases, where a Differential Equation and known boundary conditions are given, an approximate solution is often obtainable by the application of numerical methods.

Several numerical methods (see [1] - [3] ) have been developed to handle many classes of problems but yet, the quest for reasonably stable, fast and more accurate algorithms is still on the search in the field of calculus.

Since many evolutionary optimization techniques are methods that optimizing a problem by iteratively trying to improve a candidate solution with regard to a given measure of quality (see [4] - [7] ), interest in the adaptation of these techniques to Differential Equations is recently on the rise. Approximate solutions of Differential Equations are obtained by formulating the equations as optimization problems and then solved by using optimization techniques.

Nikos [8] in his work proposed the idea of solution of ODEs via genetic algorithm combined with collocation method. In [6] , the combination of genetic algorithm with the Nelder-Mead method was introduced and implemented for the solution of ODEs and the idea of neural network for obtaining approximate solutions of ODEs was also proposed in [9] . The author in [10] adapted the classical genetic algorithm to the solution of Ordinary Differential Equation.

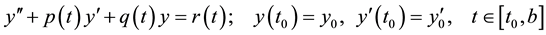

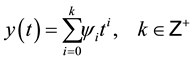

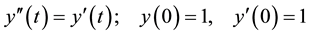

In this paper we show that the Differential Evolution (DE) algorithm can also be used to find very accurate approximate solutions of second order Initial Value Problems (IVPs) of the form

(1)

(1)

2. Basic Notions of Differential Evolution Algorithm

Formally, let  be the function which must be optimized. The function takes a candidate solution as argument in the form of a vector of real numbers and produces a real number as output which indicates the fitness of the given candidate solution. The gradient of f is not known. The goal is to find a solution m for which

be the function which must be optimized. The function takes a candidate solution as argument in the form of a vector of real numbers and produces a real number as output which indicates the fitness of the given candidate solution. The gradient of f is not known. The goal is to find a solution m for which  for all p in the search-space, which would mean m is the global minimum. Maximization can be performed by considering the function

for all p in the search-space, which would mean m is the global minimum. Maximization can be performed by considering the function  instead.

instead.

Let  designate a candidate solution (agent) in the population. The basic Differential Evolution algorithm can then be described as follows:

designate a candidate solution (agent) in the population. The basic Differential Evolution algorithm can then be described as follows:

• Initialize all agents  with random positions in the search-space;

with random positions in the search-space;

• Until a termination criterion is met (e.g. number of iterations performed, or adequate fitness reached), repeat the following.

• For each agent  in the population do:

in the population do:

* Pick three agents , and

, and  from the population at random, they must be distinct from each other as well as from agent

from the population at random, they must be distinct from each other as well as from agent ;

;

* Pick a random index  (n being the dimensionality of the problem to be optimized);

(n being the dimensionality of the problem to be optimized);

* Compute the agent’s potentially new position  as follows:

as follows:

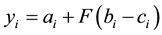

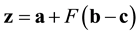

• For each i, pick a uniformly distributed number ;

;

• If  or

or  then set

then set  otherwise set

otherwise set

• (In essence, the new position is outcome of binary crossover of agent

* If

• Pick the agent from the population that has the highest fitness or lowest cost and return it as the best found candidate solution.

Note that

3. Construction of Proposed Algorithm

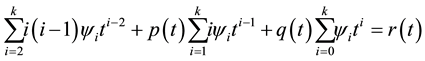

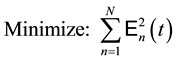

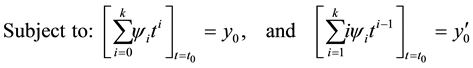

In this section, we show the steps involved in formulating the general linear second order initial value problem (1) as an optimization problem and then use the Differential Evolution algorithm to obtain approximate solution of the ODE.

Consider the second order initial value problem (1), in this work we assume a polynomial solution of the form

where

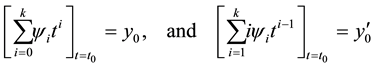

Using the initial conditions we have the constraint that

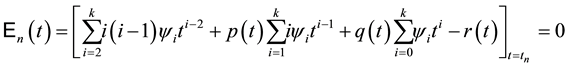

Using (3), at each node point

To solve the above problem, we need to find the set of coefficients

where

following way:

Equations (8) and (9) together is the formulated optimization problem of the IVP (1). The next objective of this work is to solve Equations (8) and (9) using the Differential Evolution algorithm.

Using the Differential Evolution algorithm we are able to obtain the set

ODEs (DEODEs)”.

4. Numerical Experiments

We now perform some numerical experiments confirming the theoretical expectations regarding the method we have proposed. The propose scheme is compared with the Runge-Kutta scheme for solving (1).

The table of “CPU-time” and the maximum error of all computations are also given.

The following parameters are used for all computations.

Differential Evolution:

Cross Probability = 0.5;

Initial Points = Automatic;

Penalty Function = Automatic;

Post Process = Automatic;

Random Seed = 0;

Scaling Factor = 0.6;

Search Points = Automatic;

Tolerance = 0.001.

All computations were carried out on a “Core i3 Intel” processor machine.

4.1. Problem 1

We examine the following linear equation

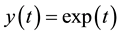

with the exact solution

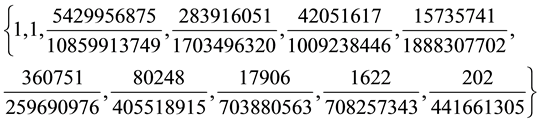

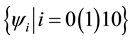

Implementing the proposed scheme with

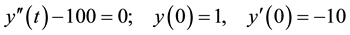

4.2. Problem 2

Consider the equation

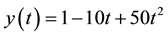

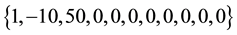

with the exact solution

Implementing the proposed scheme with

From the results obtained in Table 1, the proposed algorithm gave very accurate coefficients for the solution form for Problem 1. The algorithm gave the exact solution for Problem 2 as seen in Table 2.

Table 1. Maximum absolute error and CPU-time in seconds for Problem 1 with step-size

Table 2. Maximum absolute error and CPU-time in seconds for Problem 2 with steplength

We see that the Differential Evolution algorithm for solving ODEs gave better approximate results for different steplengths (h) compared with the Runge-Kutta Nystrom method. The proposed solution process also gave better CPU-Time for both problems solved.

5. Conclusion

In this paper, we have been able to formulate the general linear second order ODE as an optimization problem, and we have also been able to solve the formulated optimization problem using the Differential Evolution algorithm. Numerical examples also show that the method gives better approximate solutions. Other evolutionary techniques can be exploited as well.

Cite this paper

Bakre OmolaraFatimah,Wusu AshiriboSenapon,Akanbi MosesAdebowale, (2015) Solving Ordinary Differential Equations with Evolutionary Algorithms. Open Journal of Optimization,04,69-73. doi: 10.4236/ojop.2015.43009

References

- 1. Buctcher, J.C. (2008) Numerical Methods for Ordinary Differential Equations. Wiley, New York.

http://dx.doi.org/10.1002/9780470753767 - 2. Lambert, J.D. (1973) Computational Methods in ODEs. Wiley, New York.

- 3. Lambert, J.D. (1991) Numerical Methods for Ordinary Differential Systems. Wiley, New York.

- 4. Goldberg, D.E. (1989) Genetic Algorithms in Search, Optimization and Machine Learning. 2nd Edition, Addison- Wesley, Boston.

- 5. Holland, H.J. (1975) Adaptation in Natural and Artificial Systems. University of Michigan Press, Ann Arbor.

- 6. Mastorakis, N.E. (2006) Unstable Ordinary Differential Equations: Solution via Genetic Algorithms and the Method of Nelder-Mead. Proceedings of the 6th WSEAS International Conference on Systems Theory & Scientific Computation, Elounda, 21-23 August 2006, 1-6.

- 7. Michalewiz, Z. (1994) Genetic Algorithm + Data Structure = Evolution Programs. 2nd Edition, Springer-Verlag, Berlin.

http://dx.doi.org/10.1007/978-3-662-07418-3 - 8. Mastorakis, N.E. (2005) Numerical Solution of Non-Linear Ordinary Differential Equations via Collocation Method (Finite Elements) and Genetic Algorithms. Proceedings of the 6th WSEAS International Conference on Evolutionary Computing, Lisbon, 16-18 June 2005, 36-42.

- 9. Junaid, A., Raja, A.Z. and Qureshi, I.M. (2009) Evolutionary Computing Approach for the Solution of Initial Value Problems in Ordinary Diffential Equations. International Scholarly and Scientific Research & Innovation, 3, 516-519.

- 10. George, D.M. (2006) On the Application of Genetic Algorithms to Differential Equations. Romanian Journal of Economic Forecasting, 2, 5-9.