Open Journal of Medical Imaging

Vol. 06 No. 01 (2016), Article ID: 65011, 12 pages

10.4236/ojmi.2016.61003

Markerless Respiratory Motion Tracking Using Single Depth Camera

Shinobu Kumagai1, Ryohei Uemura1,2, Toru Ishibashi1, Susumu Nakabayashi1, Norikazu Arai2, Takenori Kobayashi1, Jun’ichi Kotoku1,2

1Graduate School of Medical Technology, Teikyo University, Tokyo, Japan

2Central of Radiology, Teikyo University Hospital, Tokyo, Japan

Copyright © 2016 by authors and Scientific Research Publishing Inc.

This work is licensed under the Creative Commons Attribution International License (CC BY).

http://creativecommons.org/licenses/by/4.0/

Received 20 January 2016; accepted 22 March 2016; published 25 March 2016

ABSTRACT

The aim of this study is to propose a novel system that can detect intra-fractional motion during radiotherapy treatment in real time using the three-dimensional surface captured by a depth camera, Microsoft Kinect v1. Our approach introduces three new aspects for three-dimensional surface tracking in radiotherapy treatment. The first aspect is a new algorithm for noise reduction of depth values: Ueda’s algorithm was implemented, enabling fast least-squares regression of depth values. The second aspect is an application for detecting the patient’s motion at multiple points in the thoracoabdominal region. The third aspect is an estimation of the three-dimensional surface from multiple depth values. To evaluate noise reduction by Ueda’s algorithm, two respiratory patterns were measured by the Kinect as well as by a laser range meter. The resulting cross correlation coefficients between the laser range meter and the Kinect were 0.982 for abdominal respiration and 0.995 for breath holding. Moreover, the mean cross correlation coefficients between the signals of our system and those of the Anzai belt for the participant’s respiratory motion were 0.90 for thoracic respiration and 0.93 for abdominal respiration. These results indicate that the performance of the developed system is comparable to that of existing motion monitoring devices. Reconstruction of the three-dimensional surface also enabled us to detect irregular motion and breathing arrest by comparing the averaged depth with predefined threshold values.

Keywords:

Depth Camera, Markerless, Motion Tracking, Intra-Fractional Motion, Three-Dimensional Surface

1. Introduction

Intensity Modulated Radiotherapy (IMRT), Volumetric Modulated Arc Therapy (VMAT), and Stereotactic Body Radiotherapy (SBRT), all of which deliver a high dose to a specific target, have recently become widely used techniques. If a high dose is delivered to normal tissue because of irregular patient motion, the resulting damage may be more severe than with conventional radiotherapy techniques. Real-time patient monitoring during treatment is therefore essential for high-precision radiotherapy.

Patient motion is classified into two categories: inter-fractional and intra-fractional motion. Inter-fractional motion is a setup error or a reproducibility error of the body contour between treatments. Intra-fractional motion is movement during treatment due to respiration, irregular motion, and physiological organ migration. In prostate IMRT, for example, changes in bladder and rectum filling volume cause intra-fractional motion of the prostate [1] [2] .

Patient immobilization and anchorage devices, as well as respiratory monitoring systems, have been developed to minimize intra-fractional motion. Immobilization such as the abdominal compression used in lung SBRT is a popular technique for reducing the amplitude of respiration-induced tumor motion [3] . However, these techniques place a heavy burden on elderly patients. Additionally, attaching respiratory monitoring sensors is a time-consuming process that prolongs treatment time. These systems can also monitor only one point per patient, although the amplitude and phase of respiration vary in complex ways at multiple locations simultaneously.

Noninvasive patient motion monitoring and re-positioning devices have recently come into use, e.g., multiple infrared external markers placed on the chest and abdominal surface of a patient and tracked with ceiling-mounted cameras [4] or CCD cameras [5] . The temporal and spatial accuracy of video-based or laser/camera-based three-dimensional optical surface imaging devices such as VisionRT [6] or Sentinel [7] has been reported. These systems acquire the surface image of the patient during radiotherapy and compare it with a reference surface for real-time patient re-positioning [8] - [11] . Real-time detection of patient motion and re-positioning without radiation exposure has therefore attracted considerable attention; however, these studies focused on re-positioning accuracy, i.e., on reducing inter-fractional patient motion.

We have been developing a noninvasive motion monitoring system for radiotherapy that uses a consumer camera to reduce the impact of intra-fractional patient motion. For this system, we selected a depth camera, Kinect version 1 (v1) released by Microsoft [12] . Kinect v1 can measure the distance to a target (called “depth”) in real time using infrared random-dot patterns over a range of 0.8 m to 4.0 m. The depth information is obtained as a depth image. Kinect has been used for human detection, location estimation, and motion tracking [13] - [15] . Kinect therefore has strong potential for noncontact and noninvasive motion monitoring during radiotherapy.

Motion monitoring applications based on depth cameras have been reported for radiotherapy and diagnostic radiology. Xia et al. showed that a respiratory curve could be measured from depth images taken by Kinect v1 [16] . They evaluated the cross correlation coefficients between the respiratory curves detected by the Kinect and by a strain gauge system. They also reported an applicator monitoring system for localization in image-guided brachytherapy; however, that approach requires reflective markers for detection [17] .

Aoki et al. [18] measured the volume of a region of interest in the thoracoabdominal area during respiratory motion. They found a correlation between the volume obtained by the Kinect and the respiratory curve obtained by a spirometer measuring expiratory flow volume. However, this approach cannot distinguish thoracic from abdominal respiratory motion.

Jochen et al. reconstructed respiratory curves from three-dimensional surfaces measured by a time-of-flight (TOF) camera [19] . Heß et al. developed a method to detect the global motion of the whole human torso surface using a dual-Kinect setup for respiratory motion correction in PET images [20] .

In this paper, we propose a contactless and noninvasive intra-fractional motion monitoring system. Our approach introduces three new aspects for three-dimensional surface tracking. The first is an implementation of an algorithm originally proposed by Ueda [21] [22] for noise reduction and fast regression of depth values. This algorithm calculates the mean depth value of a region of interest (ROI) at equal time intervals and fits a quadratic curve to these values. The second is the detection of the patient’s respiratory motion at multiple points. Although the amplitude and phase of the respiratory curve vary at multiple points simultaneously, current respiratory management systems measure the participant’s motion or respiratory curve at only one point. The third is an estimation of the three-dimensional surface from depth values in multiple regions over the whole thoracoabdominal area.

2. Methodology

2.1. Method Overview

In this section, we present a novel approach for intra-fractional motion monitoring using the three-dimensional surface derived from Kinect depth data. First, Ueda’s algorithm was applied to filter the Kinect depth values for real-time monitoring. We examined the performance of this algorithm by measuring an oscillating phantom. As a further performance test, two breathing patterns measured by our system were compared with those measured by a laser range meter. Next, four types of motion were acquired from a participant to validate that our system can track the participant’s respiratory motion. The cross correlation coefficients of respiratory curves between our system and a commercially available strain gauge system were calculated for comparison. Finally, we describe a method for three-dimensional surface reconstruction based on measurements in multiple regions.

2.2. Setup

Our system consisted of a Kinect v1 sensor and a laptop PC (Windows 8). The Kinect has an infrared sensor and a projector emitting a random dot pattern. The Kinect detects the shift of the pattern and calculates a depth image by triangulation, a technique known as Light Coding [23] . Using the Kinect SDK, we directly obtained depth values, which are proportional to the distance to the target. The depth image is 640 × 480 pixels and is generated in real time (30 frames per second). The system code was written in C# using the Kinect SDK library. For data processing, including visualization, Python 2.7.5 with the matplotlib [24] and NumPy [25] modules was used.

2.3. Ueda’s Algorithm

Detection of intra-fractional patient motion during radiotherapy requires real-time processing and high-precision motion tracking. Intra-fractional patient motion is usually so small that the motion signal can be buried in noise. Efficient real-time noise reduction is therefore one of the key tasks in constructing an intra-fractional motion monitoring system.

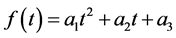

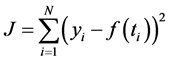

In this paper, we introduced Ueda’s algorithm as a fast and effective noise reduction method. In this algorithm, the local time profile of the depth values is approximated by a quadratic function:

. (1)

. (1)

With defining the squared residual error J by

(2)

(2)

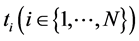

where  denotes the measured depth value at time

denotes the measured depth value at time , each coefficient

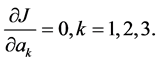

, each coefficient  is determined by least square method as follows,

is determined by least square method as follows,

(3)

(3)

The main advantage of this algorithm is that the coefficients can be solved analytically, which significantly reduces the computational time. Ueda reported that this algorithm successfully tracked respiratory motion in real time [21] [22] .
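The analytical least-squares solution described above can be sketched in Python 3 with NumPy. This is a minimal sketch under stated assumptions, not Ueda’s published formulation [21] [22]: it assumes an odd window of 2M + 1 equally spaced samples of the per-frame ROI mean, re-centres time to t = -M, ..., M so that the odd sums of t vanish and the conditions of Equation (3) decouple, and evaluates the fitted quadratic at the newest sample so that no future frames are needed. The function names, the default half-width of 7 frames (about 0.5 s at 30 frames per second), and the noise level in the usage lines are hypothetical.

```python
import numpy as np


def quadratic_fit_centered(window):
    """Closed-form least-squares fit of d(t) = a2*t**2 + a1*t + a0.

    `window` holds 2M+1 equally spaced depth samples. Time is re-centred to
    t = -M, ..., M so that sum(t) = sum(t**3) = 0; setting dJ/da_k = 0 then
    gives one equation for a1 and a 2x2 system for (a2, a0).
    """
    d = np.asarray(window, dtype=float)
    n = d.size
    if n < 3 or n % 2 == 0:
        raise ValueError("window length must be odd and at least 3")
    m = n // 2
    t = np.arange(-m, m + 1, dtype=float)
    s0, s2, s4 = float(n), np.sum(t**2), np.sum(t**4)  # even moments of t
    sum_d, sum_td, sum_t2d = np.sum(d), np.sum(t * d), np.sum(t**2 * d)
    det = s4 * s0 - s2**2
    a2 = (s0 * sum_t2d - s2 * sum_d) / det  # Cramer's rule for (a2, a0)
    a1 = sum_td / s2
    a0 = (s4 * sum_d - s2 * sum_t2d) / det
    return a2, a1, a0


def quadratic_filter(samples, half_width=7):
    """Evaluate the fit at the newest sample of every full sliding window.

    Returns len(samples) - 2*half_width values; output j corresponds to
    input index j + 2*half_width.
    """
    n = 2 * half_width + 1
    out = []
    for end in range(n, len(samples) + 1):
        a2, a1, a0 = quadratic_fit_centered(samples[end - n:end])
        t = half_width  # newest sample is at t = +M in centred time
        out.append(a2 * t**2 + a1 * t + a0)
    return np.array(out)


# Usage: a 6 s, 11 mm sinusoid sampled at 30 frames/s with hypothetical
# 2 mm Gaussian noise standing in for the per-frame ROI mean depth.
rng = np.random.default_rng(0)
time_s = np.arange(360) / 30.0
raw = 11.0 * np.sin(2 * np.pi * time_s / 6.0) + rng.normal(0.0, 2.0, time_s.size)
smoothed = quadratic_filter(raw)
```

Because every sum has a fixed form once the window length is fixed, each new frame costs only a few multiply-adds, which is what makes the approach suitable for real time. Evaluating the fit at the window centre (t = 0) would suppress more noise but delay the output by M frames, a trade-off that matters for real-time monitoring.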

2.3.1. Evaluation of the Noise Reduction Performance

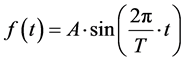

To evaluate the noise reduction performance of Ueda’s algorithm, we measured an oscillating object with and without the noise reduction filter. As shown in Figure 1(a), the QUASAR Programmable Respiratory Motion Platform (Modus Medical Devices, Inc., London, ON, Canada) [26] was used to simulate respiratory motion. The QUASAR has an insert phantom whose motion is programmable. We set the control parameters to produce a simple sinusoidal motion as follows,

$$z(t) = A \sin\left(\frac{2\pi t}{T}\right) \tag{4}$$

Figure 1. (a) A QUASAR phantom was used to simulate respiratory motion. (b) The experimental setup. We placed a Kinect sensor 100 cm from a QUASAR phantom.

where A and T are the amplitude and period of the sinusoidal motion of the phantom. In this experiment, the control parameters were set to A = 11 mm and T = 6 s (10 breaths per minute) to simulate human breathing. The Kinect was placed 100 cm from the centroid of the sinusoidal motion of the insert phantom (Figure 1(b)). For comparison with the time profile of the insert phantom, i.e., Equation (4), we obtained the measured time profile by subtracting 100 cm from the measured depth values.
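The comparison with Equation (4) that yields the residual errors reported in Section 3.1 can be sketched as follows. This is a minimal sketch assuming depth values in millimetres, time stamps already phase-aligned with the phantom (t = 0 at the start of a cycle), and the same sign convention as Equation (4); the function name and the synthetic bias in the usage lines are hypothetical.

```python
import numpy as np


def phantom_residuals(t_s, depth_mm, amplitude_mm=11.0, period_s=6.0,
                      offset_mm=1000.0):
    """Mean and standard deviation of residuals against Equation (4).

    The measured profile is depth minus the 100 cm (1000 mm) distance to the
    centroid of the motion; the reference is A*sin(2*pi*t/T).
    """
    t_s = np.asarray(t_s, dtype=float)
    measured = np.asarray(depth_mm, dtype=float) - offset_mm
    reference = amplitude_mm * np.sin(2 * np.pi * t_s / period_s)
    residual = measured - reference
    return residual.mean(), residual.std(ddof=1)


# Usage with a synthetic trace carrying a constant 3 mm bias.
t = np.arange(360) / 30.0
depth = 1000.0 + 11.0 * np.sin(2 * np.pi * t / 6.0) + 3.0
mean_err, sd_err = phantom_residuals(t, depth)
```

The mean of the residuals reflects the accuracy of the oscillation centroid, while their standard deviation reflects the measurement precision, matching the two quantities discussed in Section 3.1.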

2.3.2. Comparison with the Laser Range Meter

We performed an experiment with a laser range meter to evaluate the noise reduction characteristics of Ueda’s algorithm. We used the IL-2000 laser range meter manufactured by Keyence, which can measure distances from 1000 mm to 3000 mm. Both the IL-2000 and the Kinect were mounted 110 cm above the participant.

We measured two kinds of motion, abdominal respiration and breath holding, with both devices at the same time. The laser range meter continuously monitored distance changes every 200 μs at one point on the participant’s abdomen. In contrast, the Kinect measured the average depth of a region of interest (50 × 50 pixels) containing the point monitored by the laser range meter. After acquisition, the data were normalized using their maximum and minimum values. We then calculated the cross correlation coefficients between the laser range meter and Kinect signals.
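The normalization and correlation steps above can be sketched in Python 3. The laser range meter samples every 200 μs (5 kHz) while the Kinect delivers 30 frames per second, so this sketch assumes the laser signal is linearly interpolated onto the Kinect time stamps before the Pearson correlation coefficient is computed; that resampling step, the function names, and the synthetic signals in the usage lines are assumptions, not details given in the text.

```python
import numpy as np


def min_max_normalize(x):
    """Scale a signal to [0, 1] using its minimum and maximum values."""
    x = np.asarray(x, dtype=float)
    return (x - x.min()) / (x.max() - x.min())


def laser_kinect_correlation(t_laser, laser_mm, t_kinect, kinect_mm):
    """Pearson correlation after resampling the laser onto Kinect frames."""
    # Assumed resampling step: linear interpolation (t_laser must increase).
    laser_on_kinect = np.interp(t_kinect, t_laser, laser_mm)
    a = min_max_normalize(laser_on_kinect)
    b = min_max_normalize(kinect_mm)
    return np.corrcoef(a, b)[0, 1]


# Usage with synthetic 5 s breathing signals at about 110 cm.
t_laser = np.arange(0.0, 20.0, 0.0002)   # 200 us sampling
laser = 1100.0 + 10.0 * np.sin(2 * np.pi * t_laser / 5.0)
t_kinect = np.arange(600) / 30.0         # 30 frames/s
kinect = 1100.0 + 10.0 * np.sin(2 * np.pi * t_kinect / 5.0)
r = laser_kinect_correlation(t_laser, laser, t_kinect, kinect)
```

Because the Pearson coefficient is invariant to affine scaling, min-max normalization does not change r; it mainly puts both signals on a common scale for plotting, as in Figure 5.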

2.4. Comparison with a Commercial Respiratory Monitor

We must verify that our system can track the respiratory motion of a participant. To test whether different types of respiratory motion can be distinguished, a participant was asked to perform four types of motion: thoracic respiration, abdominal respiration, breathing arrest, and irregular motion. For each motion, we measured the depth curves with the Kinect and, simultaneously, with a commercially available strain gauge system, AZ733V, known as the Anzai-belt (Anzai Medical Co., Tokyo, Japan). Although the Kinect and Anzai-belt signals were acquired simultaneously, time offsets were observed between them. To extract the cross correlation coefficient, we therefore defined the cross correlation function $R(k)$ as follows,

$$R(k) = \frac{1}{N} \sum_{n=1}^{N} x_n \, y_{n+k}, \tag{5}$$

where $x_n$ represents the normalized depth values of the Kinect, $y_{n+k}$ represents the normalized signal of the Anzai-belt shifted by time lag $k$, and $N$ is the number of samples. The maximum value of this function is taken as the cross correlation coefficient. These calculations were performed in each of the 42 regions over the participant’s thoracoabdominal area.
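The lag search above can be sketched in Python 3 with NumPy. This sketch pairs each Kinect sample x_n with the Anzai-belt sample y_{n+k}, as in Equation (5), but, as an assumption, recomputes the Pearson coefficient on the overlapping samples at each lag so that every value stays within [-1, 1]. The function name, the lag range, and the synthetic signals in the usage lines are hypothetical.

```python
import numpy as np


def max_cross_correlation(kinect, anzai, max_lag):
    """Return (best_lag, coefficient) maximizing the lagged correlation.

    At lag k the Kinect sample x_n is paired with the Anzai-belt sample
    y_{n+k}. The Pearson coefficient is recomputed on the overlapping
    samples, so every value lies in [-1, 1].
    """
    x = np.asarray(kinect, dtype=float)
    y = np.asarray(anzai, dtype=float)
    n = min(x.size, y.size)
    x, y = x[:n], y[:n]
    best_lag, best_r = 0, -np.inf
    for k in range(-max_lag, max_lag + 1):
        if k >= 0:
            a, b = x[:n - k], y[k:]
        else:
            a, b = x[-k:], y[:n + k]
        r = np.corrcoef(a, b)[0, 1]
        if r > best_r:
            best_lag, best_r = k, r
    return best_lag, best_r


# Usage: the Anzai-like trace lags the Kinect-like trace by 5 frames.
base = np.sin(2 * np.pi * np.arange(700) / 90)
kinect_trace = base[10:610]
anzai_trace = base[5:605]
lag, coefficient = max_cross_correlation(kinect_trace, anzai_trace, max_lag=30)
```

Running the function once per ROI would give one coefficient for each of the 42 regions, the kind of map shown in Figure 9. The lag range should stay below half a breathing period so that the search cannot lock onto the next cycle.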

2.5. Calculation Method of Three-Dimensional Surface

Figure 2 shows the experimental setup for detecting the participant’s motion. The Kinect sensor was placed 100 cm above the participant so as to cover the whole thoracoabdominal area. Each region of interest used to calculate the average depth value is 50 × 50 pixels in size, and the 42 regions overlap their neighbors by 25 pixels in both the horizontal and vertical directions. We reconstructed the three-dimensional surface of the participant by interpolating these 42 average depth values with a thin-plate spline (Figure 3).
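The ROI averaging and surface reconstruction can be sketched with NumPy and SciPy (version 1.7 or later for `RBFInterpolator`). The 50-pixel ROI size and 25-pixel overlap come from the text; the 7 × 6 grid layout, its top-left position in the 640 × 480 frame, the output resolution, and the function names are hypothetical, and SciPy’s thin-plate spline kernel stands in for whatever implementation the authors used.

```python
import numpy as np
from scipy.interpolate import RBFInterpolator  # SciPy >= 1.7

ROI = 50     # ROI side length in pixels (from the text)
STRIDE = 25  # neighbouring ROIs overlap by 25 pixels (from the text)


def roi_means(depth, origin=(140, 230), n_rows=7, n_cols=6):
    """Average depth in each ROI of a hypothetical 7 x 6 grid (42 ROIs).

    `depth` is one 480 x 640 depth frame; `origin` is the hypothetical
    (row, column) of the top-left ROI. Returns ROI centres and means.
    """
    r0, c0 = origin
    centres, means = [], []
    for i in range(n_rows):
        for j in range(n_cols):
            r, c = r0 + i * STRIDE, c0 + j * STRIDE
            means.append(depth[r:r + ROI, c:c + ROI].mean())
            centres.append((r + (ROI - 1) / 2, c + (ROI - 1) / 2))
    return np.array(centres), np.array(means)


def tps_surface(centres, means, shape=(100, 100)):
    """Thin-plate spline surface through the ROI means on a regular grid."""
    interp = RBFInterpolator(centres, means, kernel="thin_plate_spline")
    rows = np.linspace(centres[:, 0].min(), centres[:, 0].max(), shape[0])
    cols = np.linspace(centres[:, 1].min(), centres[:, 1].max(), shape[1])
    rr, cc = np.meshgrid(rows, cols, indexing="ij")
    points = np.column_stack([rr.ravel(), cc.ravel()])
    return interp(points).reshape(shape)


# Usage with a synthetic, gently tilted frame (depth in mm, hypothetical).
frame = 1000.0 + 0.05 * np.arange(480)[:, None] + np.zeros((480, 640))
centres, means = roi_means(frame)
surface = tps_surface(centres, means)
```

Averaging each 2500-pixel ROI suppresses per-pixel depth noise before interpolation, and the thin-plate spline passes exactly through the 42 means while keeping the surface smooth between them.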

2.6. Intra-Fractional Motion Method

Irregular motion and breathing arrest are major concerns during radiotherapy treatment. Averaged depth data taken over the 42 regions of a participant’s thoracoabdominal surface make it possible to detect these motion errors and issue a warning alert. To build the motion error detection, we started by checking the fluctuation of the averaged depth values over time through their absolute difference. As long as the patient stays still and keeps normal respiration, this fluctuation remains within a predefined range. If it exceeds the threshold for irregular motion, or falls below the threshold for breathing arrest, the system issues a warning.

Figure 2. The experimental setup for detection of participant motion. The Kinect sensor was positioned 100 cm above the participant’s surface.

Figure 3. Reconstruction of the three-dimensional surface. The 42 square regions were configured from the participant’s chest to abdomen (left). The average depth value was calculated in each region. Subsequently, the three-dimensional surface was calculated by thin-plate spline interpolation (right).

3. Results

3.1. Performance of Ueda’s Algorithm

We used our motion monitoring system to measure the QUASAR phantom oscillating with an amplitude of 11 mm and a period of 6 s at a distance of 100 cm from the Kinect. Figure 4 shows that, with the noise reduction filter based on Ueda’s algorithm, our system successfully tracked the sinusoidal oscillation of the phantom, with a mean residual error of 0.00 ± 1.94 mm. In contrast, the time profile measured without Ueda’s algorithm tracked the oscillation poorly, with a mean residual error of 3.00 ± 4.67 mm. Ueda’s algorithm thus improved not only the accuracy of the oscillation centroid but also the precision of the measurements.

We also measured two breathing patterns, abdominal respiration and breath holding, to examine the performance of our system. Breathing patterns measured by the Kinect and by the laser range meter (IL-2000) are compared in Figure 5. Our results (white circles) were in good agreement with those from the laser range meter (black lines). The cross correlation coefficients between them were 0.982 (p < 0.001) for abdominal respiration and 0.995 (p < 0.001) for breath holding (t-test).
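The text does not state the exact form of the t-test; the standard test of a Pearson coefficient against zero is sketched below, with t = r√((n − 2)/(1 − r²)) and n − 2 degrees of freedom. The number of samples n used in the study is not given, so the usage line assumes a hypothetical n = 300 (10 s at 30 frames per second); the function name is also hypothetical.

```python
import numpy as np
from scipy import stats


def correlation_t_test(r, n):
    """Two-sided p-value for H0: rho = 0 via t = r*sqrt((n-2)/(1-r**2)).

    Assumes n independent sample pairs; autocorrelated video frames carry
    fewer independent samples than n, which makes the p-value too small.
    """
    t = r * np.sqrt((n - 2) / (1.0 - r**2))
    return 2.0 * stats.t.sf(abs(t), df=n - 2)


# Usage with a hypothetical n = 300 (10 s at 30 frames/s).
p_abdominal = correlation_t_test(0.982, 300)
```

This t statistic gives the same two-sided p-value that `scipy.stats.pearsonr` reports for the coefficient computed from raw data.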

3.2. Detection of Respiratory Signal in Multiple Regions

To confirm whether our system can distinguish respiratory motion from irregular motion, we examined it with four types of motion: thoracic respiration, abdominal respiration, breath holding, and body motion.

Thoracic and abdominal respiratory motion were expected to be difficult to distinguish, whereas irregular motion and breath holding are easily distinguished from the other motions by their characteristic depth patterns. Figure 6 shows that our system clearly distinguishes the four types of motion. Notably, our system successfully identified the difference between thoracic and abdominal respiratory motion by comparing the depth curves over one respiratory period (Figure 7).

In Figure 8, the crosses represent the depth values measured by the Kinect and the dotted line those measured by the Anzai-belt. The cross correlation coefficients between them were obtained by maximizing the cross correlation function defined in Equation (5).

Figure 4. Results for tracking an oscillating phantom. In the upper panel, black squares represent the time profile of raw depth values and white circles the time profile with Ueda’s algorithm, compared with the sinusoidal oscillation curve (bold dashed line). The sinusoidal oscillation of the phantom was set with an amplitude of 11 mm (thin dashed line) and a period of 6 s. The lower panel shows the residual errors between the theoretical sinusoidal oscillation curve and the measured time profile of the phantom; black crosses and black dots represent the results with and without Ueda’s algorithm, respectively.

3.3. Intra-Fractional Motion Detection Using Three-Dimensional Surface

Following the method described in Section 2.6, we demonstrated how our intra-fractional motion detection system works. First, we set the threshold value T to 20 mm for irregular motion and 0.5 mm for breathing arrest. These threshold values were determined empirically by repeating the test many times. The participant was asked to perform various kinds of motion, including breath holding. Every time the participant made an irregular motion, our system detected the motion error and issued a warning message and an alert sound, as shown in Figure 10.
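A minimal sketch of the threshold logic in Python 3, under hypothetical choices: the fluctuation is taken as the largest absolute difference between the newest averaged depth (mean over the 42 ROIs) and each earlier frame in a 3 s window. Only the 20 mm and 0.5 mm thresholds come from the text; the window length, the fluctuation definition, and the function names are assumptions, not the authors’ definitions.

```python
from collections import deque

import numpy as np

IRREGULAR_MM = 20.0   # threshold for irregular motion (from the text)
ARREST_MM = 0.5       # threshold for breathing arrest (from the text)
WINDOW_FRAMES = 90    # hypothetical: 3 s of history at 30 frames/s


def classify(history_mm):
    """Classify the newest frame from the averaged-depth history (mm).

    Fluctuation = largest |D(t) - D(t - k)| over the window, where D is the
    depth averaged over the 42 ROIs. This definition is an assumption.
    """
    h = np.asarray(history_mm, dtype=float)
    fluctuation = np.max(np.abs(h - h[-1]))
    if fluctuation > IRREGULAR_MM:
        return "irregular motion"
    if fluctuation < ARREST_MM:
        return "breathing arrest"
    return "normal"


def monitor(frames_mm):
    """Yield (frame_index, label) for every frame once the window is full."""
    history = deque(maxlen=WINDOW_FRAMES)
    for i, d in enumerate(frames_mm):
        history.append(d)
        if len(history) == WINDOW_FRAMES:
            yield i, classify(history)


# Usage: normal breathing, then a sudden 30 mm shift in the last frame.
t = np.arange(90) / 30.0
breathing = 1000.0 + 5.0 * np.sin(2 * np.pi * t / 6.0)
moved = breathing.copy()
moved[-1] += 30.0
```

The window must span a large part of a breathing cycle; otherwise normal respiration near its turning points could fall below the 0.5 mm threshold and trigger a false breathing-arrest alarm.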

4. Discussion

We have developed an intra-fractional patient motion detection system for radiotherapy treatments using a depth camera, Microsoft Kinect. Accurate, real-time noise reduction of the depth data is essential for detecting intra-fractional motion. First, we introduced Ueda’s algorithm for real-time noise reduction, because its least-squares solution can be implemented directly in analytical form. To validate its performance, two breathing patterns were measured by the laser range meter as well as by the Kinect. The resulting cross correlation coefficients between them, 0.982 for abdominal respiration and 0.995 for breath holding, indicate that the developed system can track human respiratory motion.

Figure 5. Comparison of measured respiratory patterns for abdominal respiration (left) and breath holding (right). The white circles represent the patterns measured by the Kinect and the black line those measured by the laser range meter, IL-2000.

Figure 6. Monitoring four types of motion. The upper left, lower left, upper right, and lower right panels correspond to thoracic respiration, abdominal respiration, breath holding, and irregular motion, respectively.

Second, unlike conventional respiratory monitoring systems such as Anzai, we detected patient motion at multiple points. Several studies have measured respiratory curves using a Kinect sensor, but the number of monitored regions was limited. In our system, we set multiple regions to acquire respiratory curves over the whole thoracoabdominal region of the participant.

The respiratory curve was measured by the Kinect and by the Anzai-belt simultaneously, and the cross correlation coefficients between them were compared. The mean cross correlation coefficients in the thoracic and abdominal regions were 0.90 and 0.93, respectively. These results are consistent with the reports of Xia et al. [16] and Jochen et al. [19] , indicating that the performance of our system is comparable to that of existing monitoring systems.

Figure 7. The comparison of the respiratory patterns for the thoracic respiratory motion (left) and for the abdominal respiratory motion (right).

Figure 8. Comparison between the depth values measured by the Kinect (black crosses) and by Anzai (dotted line). The upper panel shows abdominal respiratory motion and the lower panel thoracic respiratory motion.

Figure 9. Distributions of cross correlation coefficients on the surface of the participant (100 s measurement).

Figure 10. Detection of the intra-fractional motion. The system sends the warning message on screen promptly once the averaged depth fluctuation exceeds the predefined threshold.

Third, we reconstructed the three-dimensional surface by thin-plate spline interpolation of the depth data obtained from all thoracoabdominal regions. Furthermore, by comparing the average depth value with the predefined threshold values in every frame, the system successfully detected motion errors and issued warning messages on the monitor screen in real time.

Although the Kinect sensor was placed 100 cm above the participant in most cases in this study, this setup is not always feasible for daily clinical use in a radiotherapy treatment room. Mounting the Kinect on the wall of the treatment room is a realistic clinical option, but the longer distance and the smaller incidence angle of the infrared light on the patient may degrade the resolution of the measured depth. This must be carefully considered before adopting this system for daily clinical use.
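The distance dependence can be made concrete with the standard triangulation model for a structured-light sensor such as Kinect v1. Assuming that depth $z$ is recovered from the pattern disparity $\delta$ with focal length $f$ and projector-camera baseline $b$ (symbols introduced here for illustration only), a disparity quantization step $\Delta\delta$ maps to a depth step that grows with the square of the distance:

$$z = \frac{f\,b}{\delta} \quad\Longrightarrow\quad \left|\frac{\partial z}{\partial \delta}\right| = \frac{f\,b}{\delta^{2}} = \frac{z^{2}}{f\,b}, \qquad \Delta z \approx \frac{z^{2}}{f\,b}\,\Delta\delta.$$

Under this model, moving the sensor from 1.0 m to 3.0 m increases the depth step by a factor of $(3.0/1.0)^2 = 9$; the effect of an oblique incidence angle is not captured by it.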

Even though the Kinect covered only a relatively narrow region of the participant’s body, this study showed that the developed system based on a depth camera has the potential to reconstruct the three-dimensional surface of the patient without any contact. If depth data become available for a wider area of the patient’s body, the detection efficiency of intra-fractional patient motion could increase considerably. A multi-depth-camera system could make this possible in the near future.

5. Conclusion

We constructed a three-dimensional, noninvasive, and contactless motion tracking system using a depth camera. Unlike conventional devices, the proposed method enabled us to monitor respiratory curves at multiple points simultaneously. Owing to Ueda’s algorithm, we obtained denoised depth values rapidly and accurately, which allowed the patient surface to be reconstructed by the thin-plate spline method.

Acknowledgements

This work was supported by JSPS KAKENHI Grant Number 15K08703 and partly by the JSPS Core-to-Core Program (No. 23003).

Cite this paper

Shinobu Kumagai, Ryohei Uemura, Toru Ishibashi, Susumu Nakabayashi, Norikazu Arai, Takenori Kobayashi, Jun’ichi Kotoku (2016) Markerless Respiratory Motion Tracking Using Single Depth Camera. Open Journal of Medical Imaging, 06, 20-31. doi: 10.4236/ojmi.2016.61003

References

- 1. Judit, B.-H., Frederick, M., Hansjorg, W., Michael, E., Brigitte, H., Nadja, R., Beate, K., Frank, L. and Fredrik, W. (2008) Intrafraction Motion of the Prostate during an IMRT Session: A Fiducial-Based 3D Measurement with Cone-Beam CT. Radiation Oncology, 3, 37. http://dx.doi.org/10.1186/1748-717X-3-37

- 2. Zhao, B., Yang, Y., Li, T.F., Li, X., Heron, D.E. and Huq, M.S. (2012) Dosimetric Effect of Intrafractional Tumor Motion in Phase Gated Lung Stereotactic Body Radiotherapy. Medical Physics, 39, 6629-6637. http://dx.doi.org/10.1118/1.4757916

- 3. Mampuya, W.A., Nakamura, M., Matsuo, Y., Ueki, N., Fujimoto, T., Yano, S., Monzen, H., Minozaki, H. and Hiraoka, M. (2013) Interfraction Variation in Lung Tumor Position with Abdominal Compression during Stereotactic Body Radiotherapy. Medical Physics, 40, Article ID: 091718. http://dx.doi.org/10.1118/1.4819940

- 4. Jin, J.-Y., Ajlouni, M., Ryu, S., Chen, Q., Li, S.D. and Movsas, B. (2007) A Technique of Quantitatively Monitoring both Respiratory and Nonrespiratory Motion in Patients Using External Body Markers. Medical Physics, 34, 2875. http://dx.doi.org/10.1118/1.2745237

- 5. Kim, H., Park, Y.-K., Kim, I.H., Lee, K. and Ye, K. (2014) Development of an Optical-Based Image Guidance System: Technique Detecting External Markers behind a Full Facemask. Medical Physics, 38, 3006-3012.

- 6. Vision RT Website. http://www.visionrt.com

- 7. Sentinel (ELEKTA) Website. http://www.elekta.com

- 8. Pallotta, S., Simontacchi, G., Marrazzo, L., Ceroti, M., Palar, F., Viti, G. and Bucciolini, M. (2013) Accuracy of a 3D Laser/Camera Surface Imaging System for Setup Verification of the Pelvic and Thoracic Regions in Radiotherapy Treatments. Medical Physics, 40, Article ID: 011710. http://dx.doi.org/10.1118/1.4769428

- 9. Wiersma, M., Tomarken, S.L., Grelewicz, Z., Belcher, Z. and Kang, H. (2013) Spatial and Temporal Performance of 3D Optical Surface Imaging for Real-Time Head Position Tracking. Medical Physics, 40, 111712.

- 10. Peng, J.L., Kahler, D., Li, J.G., Samant, S., Yan, G.H., Ambur, R. and Liu, C. (2010) Characterization of a Real-Time Surface Image-Guided Stereotactic Positioning System. Medical Physics, 37, 5421.

- 11. Kauweloa, K., Ruan, D., Park, J.C., Sandhu, A., Kim, G.Y., Pawlicki, T., Tyler Watkins, W., Song, B. and Song, W.Y. (2012) GateCT™ Surface Tracking System for Respiratory Signal Reconstruction in 4DCT Imaging. Medical Physics, 39, 492-502.

- 12. Microsoft Kinect for Windows. http://www.microsoft.com/en-us/kinectforwindows/

- 13. Helten, T., Müller, M., Seidel, H.-P. and Theobalt, C. (2013) Real-Time Body Tracking with One Depth Camera and Inertial Sensors. Proceedings of the 2013 IEEE International Conference on Computer Vision, Sydney, 1-8 December 2013, 1105-1112. http://dx.doi.org/10.1109/ICCV.2013.141

- 14. Tong, J., Zhou, J., Liu, L.G., Pan, Z.G. and Yan, H. (2012) Scanning 3D Full Human Bodies Using Kinects. IEEE Transactions on Visualization and Computer Graphics, 18, 643-650.

- 15. Takeda, Y., Huang, H.-H. and Kawagoe, K. (2013) In-Door Positioning for Supporting the Elderly Who Live Alone with Multiple Kinects. The 27th Annual Conference of Japanese Society for Artificial Intelligence, Toyama, 4-7 June 2013, 1-2.

- 16. Xia, J. and Siochi, R.A. (2012) A Real-Time Respiratory Motion Monitoring System Using KINECT: Proof of Concept. Medical Physics, 39, 2682-2685. http://dx.doi.org/10.1118/1.4704644

- 17. Xia, J., Timothy Waldron, R. and Kim, Y. (2014) A Real-Time Applicator Position System for Gynecologic Intracavitary Brachytherapy. Medical Physics, 41, Article ID: 011703. http://dx.doi.org/10.1118/1.4842555

- 18. Aoki, H., Miyazaki, M., Nakamura, H., Furukawa, R., Sagawa, R. and Kawasaki, H. (2012) Non-Contact Respiration Measurement Using Structured Light 3-D Sensor. SICE Annual Conference Proceedings, 1, 614-618.

- 19. Jochen, P., Christian, S., Joachim, H. and Torsten, K. (2008) Robust Real-Time 3D Respiratory Motion Detection Using Time-of-Flight Cameras. International Journal of Computer Assisted Radiology and Surgery, 3, 427-431. http://dx.doi.org/10.1007/s11548-008-0245-2

- 20. Heß, M., Büther, F., Gigengack, F., Dawood, M. and Schäfers, K.P. (2015) A Dual-Kinect Approach to Determine Torso Surface Motion for Respiratory Motion Correction in PET. Medical Physics, 42, 2276-2286.

- 21. Ueda, T. (2013) Transistor Technology. CQ Publishing Co., Ltd., Tokyo, 119-124.

- 22. Ueda, T. (2013) Interface. CQ Publishing Co., Ltd., Tokyo, 98-105.

- 23. Khoshelham, K. (2011) Accuracy Analysis of Kinect Depth Data. ISPRS Workshop, Calgary, 29-31 August 2011, 133-138.

- 24. Hunter, J.D. Matplotlib Website. http://matplotlib.org

- 25. NumPy Website. http://www.numpy.org

- 26. modusQA Website. http://modusqa.com/radiotherapy/phantoms/respiratory-motion