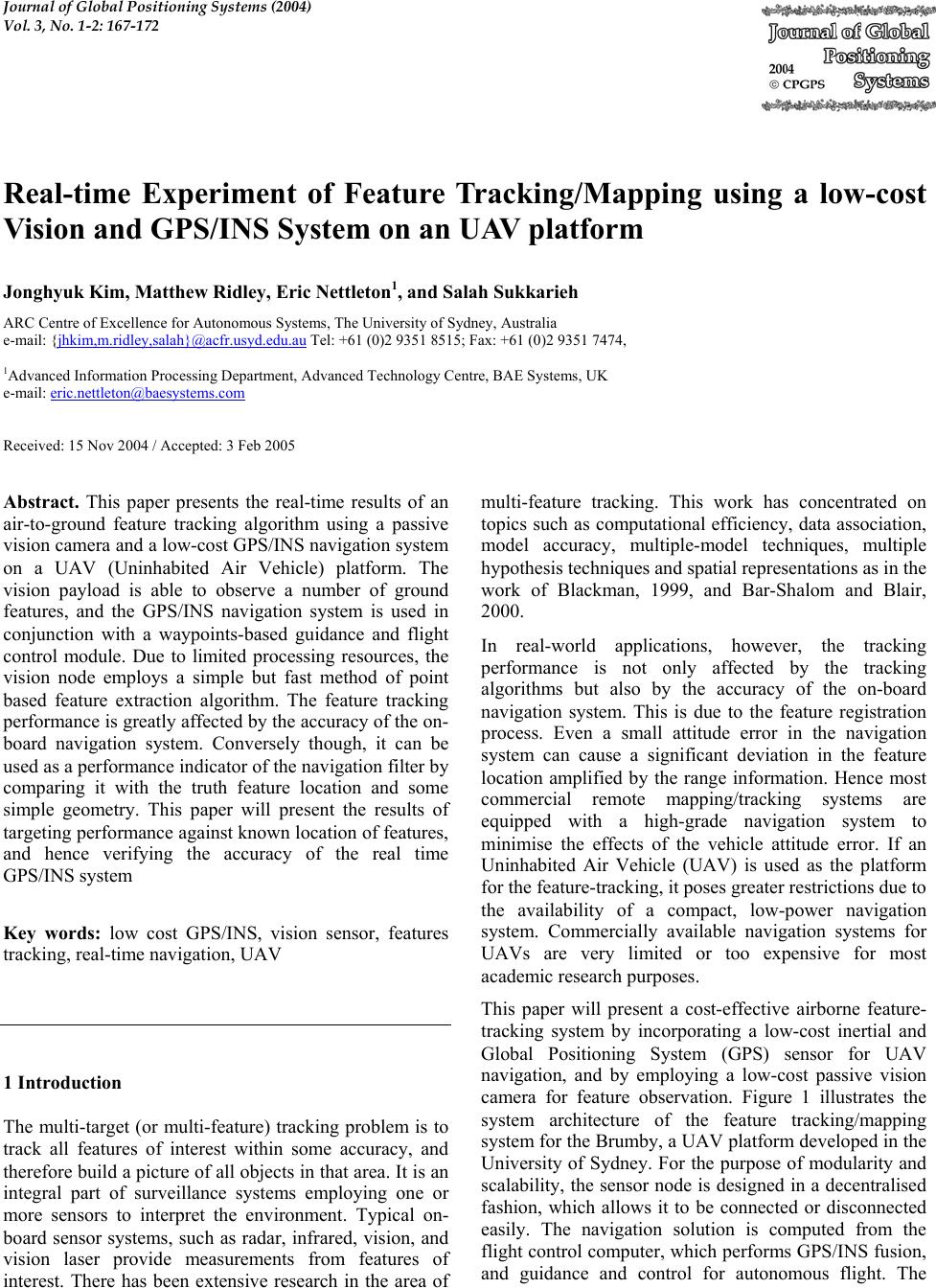

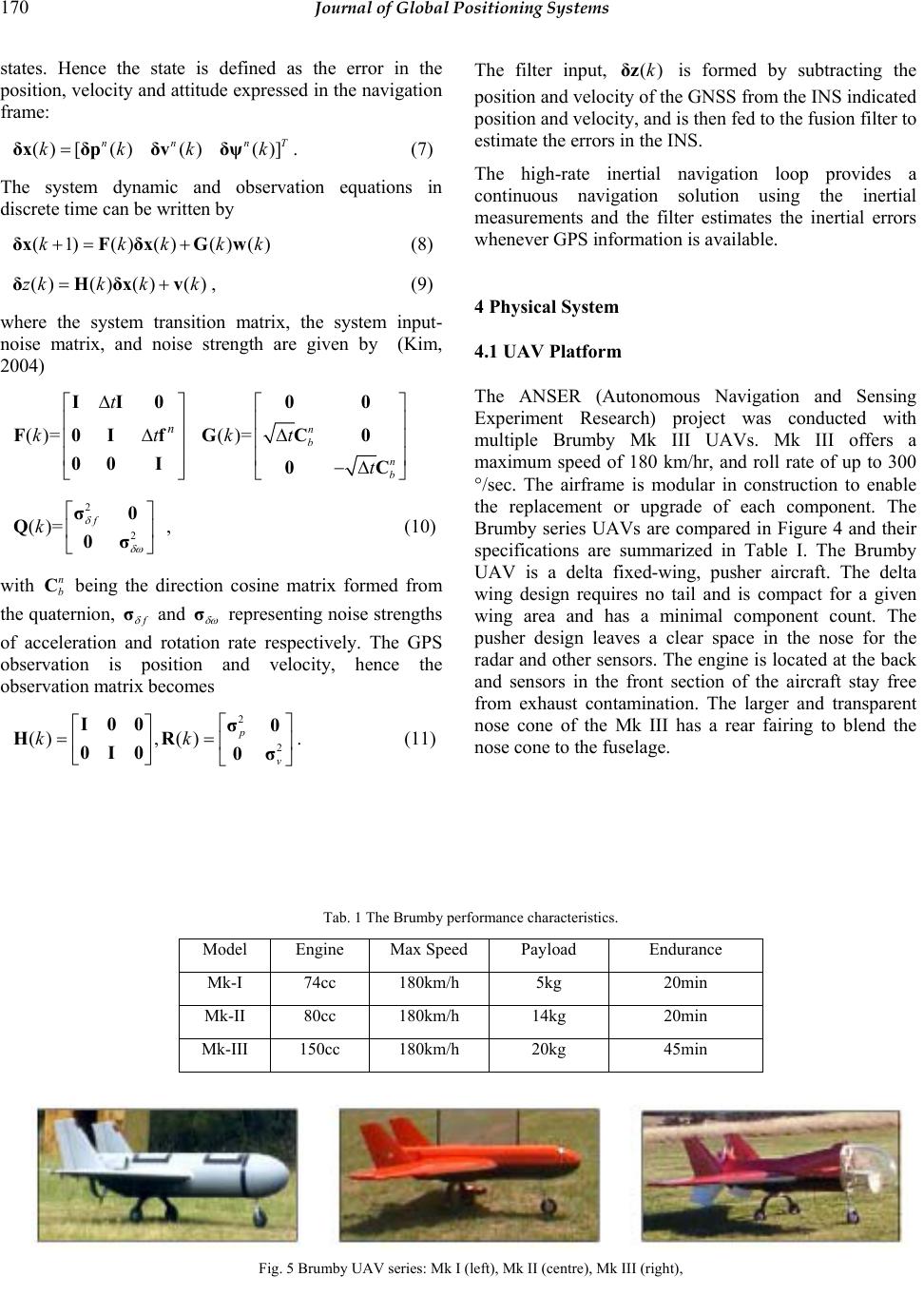

Journal of Global Positioning Systems (2004) Vol. 3, No. 1-2: 167-172

Real-time Experiment of Feature Tracking/Mapping using a low-cost Vision and GPS/INS System on an UAV platform

Jonghyuk Kim, Matthew Ridley, Eric Nettleton¹, and Salah Sukkarieh

ARC Centre of Excellence for Autonomous Systems, The University of Sydney, Australia
e-mail: {jhkim,m.ridley,salah}@acfr.usyd.edu.au Tel: +61 (0)2 9351 8515; Fax: +61 (0)2 9351 7474

¹Advanced Information Processing Department, Advanced Technology Centre, BAE Systems, UK
e-mail: eric.nettleton@baesystems.com

Received: 15 Nov 2004 / Accepted: 3 Feb 2005

Abstract. This paper presents the real-time results of an air-to-ground feature tracking algorithm using a passive vision camera and a low-cost GPS/INS navigation system on a UAV (Uninhabited Air Vehicle) platform. The vision payload is able to observe a number of ground features, and the GPS/INS navigation system is used in conjunction with a waypoint-based guidance and flight control module. Due to limited processing resources, the vision node employs a simple but fast point-based feature extraction algorithm. The feature tracking performance is greatly affected by the accuracy of the on-board navigation system. Conversely, the tracking performance can be used as an indicator of navigation filter performance by comparing the estimated feature locations with the true feature locations using some simple geometry. This paper presents the results of targeting performance against features at known locations, and hence verifies the accuracy of the real-time GPS/INS system.

Key words: low cost GPS/INS, vision sensor, features tracking, real-time navigation, UAV

1 Introduction

The multi-target (or multi-feature) tracking problem is to track all features of interest within some accuracy, and thereby build a picture of all objects in that area. It is an integral part of surveillance systems employing one or more sensors to interpret the environment.
Typical on-board sensor systems, such as radar, infrared, vision, and vision laser, provide measurements from features of interest.

There has been extensive research in the area of multi-feature tracking. This work has concentrated on topics such as computational efficiency, data association, model accuracy, multiple-model techniques, multiple hypothesis techniques and spatial representations (Blackman, 1999; Bar-Shalom and Blair, 2000). In real-world applications, however, the tracking performance is affected not only by the tracking algorithms but also by the accuracy of the on-board navigation system. This is due to the feature registration process: even a small attitude error in the navigation system can cause a significant deviation in the feature location, because the error is amplified by the range. Hence most commercial remote mapping/tracking systems are equipped with a high-grade navigation system to minimise the effects of the vehicle attitude error.

Using an Uninhabited Air Vehicle (UAV) as the feature-tracking platform imposes further restrictions, because the navigation system must be compact and low-power. Commercially available navigation systems for UAVs are very limited or too expensive for most academic research purposes.

This paper presents a cost-effective airborne feature-tracking system that incorporates a low-cost inertial and Global Positioning System (GPS) sensor for UAV navigation and a low-cost passive vision camera for feature observation. Figure 1 illustrates the system architecture of the feature tracking/mapping system for the Brumby, a UAV platform developed at the University of Sydney. For modularity and scalability, the sensor node is designed in a decentralised fashion, which allows it to be connected or disconnected easily.
The navigation solution is computed by the flight control computer, which performs GPS/INS fusion as well as guidance and control for autonomous flight. The sensor payloads connected to the vehicle bus use this navigation solution for feature tracking, radar gimbal control, and time synchronisation.

Fig. 1 Modular structure of the feature tracking and navigation system (blocks shown: GPS, IMU, air data system, GPS/INS, guidance, control, loggers, radar payload, vision system, vehicle bus)

Section 2 presents the vision system, including the sensor and tracking algorithm, and Section 3 describes the GPS/INS integration. Section 4 gives details of the flight vehicle and on-board system. Section 5 presents the real-time flight results from the Brumby UAV, and Section 6 provides conclusions and future work.

2 Vision System

2.1 Passive Vision Camera

An on-board vision sensor provides feature observations to the feature-tracking computer. The vision system uses a low-cost, lightweight, monochrome CCS-SONY-HR camera from Sony, shown in Figure 2. This imaging sensor has a resolution of 600 horizontal lines and uses a 12 V power source. Its composite video output gives images at up to 50 Hz when the odd and even lines are used to form separate images 20 ms apart, or at 25 Hz when the images are interlaced.

The vision sensor is mounted in the second payload bay of the Brumby Mk-III, immediately behind the forward bulkhead, pointing down as shown in Figure 2. Typical airborne images from this sensor are shown in Figure 3. Artificial landmarks were placed on the ground before flight. These are white plastic sheets, chosen for easier identification by the vision system.

Fig. 2 Vision camera used (top) and body and camera frames whose x-axis is aligned to point downward (bottom)

Fig. 3 Aerial images during flight test which show several white artificial features as well as some natural features such as road, dam, and trees.

Due to limited processing resources, a simple but fast method of point-based feature extraction is employed. All pixels above a threshold are converted into line segments. A range gate performs data association on these segments, and the centre of mass of the pixels is obtained. The mass, aspect ratio and density of each cluster of pixels are then used for feature identification, after which the bearing and elevation to the feature can be generated. Although the vision sensor does not provide range directly, an estimated value is generated from the known size of the features.

2.2 Feature Tracking Algorithm

The locations of the sensor and platform are provided by the GPS/INS system through the vehicle bus. This location information is used to convert all relative observations to a global Cartesian frame in which tracking takes place. The conversion is performed in the sensor pre-processing stage, which makes the filter observation model a simple linear model.

In global coordinates, the x and y position and velocity are modelled as an integrated Ornstein-Uhlenbeck process (Stone et al., 1999). This process models the velocity as Brownian motion, which can be bounded by an appropriate choice of the model parameter $\gamma$. The z position is modelled as a simple Brownian process. This can be expressed as:

$$\mathbf{x}(k+1) = \mathbf{F}(k)\mathbf{x}(k) + \mathbf{G}(k)\mathbf{w}(k) \tag{1}$$

$$\mathbf{z}(k) = \mathbf{H}(k)\mathbf{x}(k) + \mathbf{v}(k), \tag{2}$$

where the state vector is

$$\mathbf{x}(k) = \begin{bmatrix} x(k) & \dot{x}(k) & y(k) & \dot{y}(k) & z(k) \end{bmatrix}^T. \tag{3}$$

The state transition matrix, input noise matrix and noise strength matrix for this system are given by (Ridley et al., 2002)

$$
\mathbf{F}(k) = \begin{bmatrix} 1 & \Delta t & 0 & 0 & 0 \\ 0 & F_v & 0 & 0 & 0 \\ 0 & 0 & 1 & \Delta t & 0 \\ 0 & 0 & 0 & F_v & 0 \\ 0 & 0 & 0 & 0 & 1 \end{bmatrix}, \quad
\mathbf{G}(k) = \begin{bmatrix} 0 & 0 & 0 \\ \Delta t(1-F_v) & 0 & 0 \\ 0 & 0 & 0 \\ 0 & \Delta t(1-F_v) & 0 \\ 0 & 0 & 1 \end{bmatrix}, \quad
\mathbf{Q}(k) = \begin{bmatrix} \sigma_{vx}^2 & 0 & 0 \\ 0 & \sigma_{vy}^2 & 0 \\ 0 & 0 & \sigma_z^2 \end{bmatrix} \tag{4}
$$

with $F_v = e^{-\gamma \Delta t}$, where $\gamma$ is the Brownian motion parameter.

To simplify the filter observation model, the sensor observations in range, bearing and elevation are converted into Cartesian coordinates $[x \; y \; z]^T$ in a global reference frame during the sensor pre-processing stage. The observations are in the form $\mathbf{z}(k) = [x \; y \; z]^T$, hence the observation matrix and noise strength matrix are

$$
\mathbf{H}(k) = \begin{bmatrix} 1 & 0 & 0 & 0 & 0 \\ 0 & 0 & 1 & 0 & 0 \\ 0 & 0 & 0 & 0 & 1 \end{bmatrix}, \quad
\mathbf{R}(k) = \begin{bmatrix} \sigma_x^2 & \sigma_{xy} & \sigma_{xz} \\ \sigma_{yx} & \sigma_y^2 & \sigma_{yz} \\ \sigma_{zx} & \sigma_{zy} & \sigma_z^2 \end{bmatrix}. \tag{5}
$$

The noise strength matrix is computed using the Jacobians of the polar-to-Cartesian transformation function, hence it contains cross-correlation terms. Using this feature model and vision observation model, the tracking filter estimates the position and velocity of the features on the ground. Data association between observations and features is performed using the innovation gate method within the tracking filter.

3 Navigation System

3.1 Inertial Navigation

The inertial navigation algorithm is required to predict the high-dynamic vehicle motions using the Inertial Measurement Unit (IMU).
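In this implementation a quaternion-based strapdown INS algorithm formulated in an earth-fixed tangent frame is used (Kim, 2004):

$$
\begin{bmatrix} \mathbf{p}^n(k) \\ \mathbf{v}^n(k) \\ \mathbf{q}^n(k) \end{bmatrix} =
\begin{bmatrix}
\mathbf{p}^n(k-1) + \mathbf{v}^n(k-1)\Delta t \\
\mathbf{v}^n(k-1) + \left[ \left( \mathbf{q}^n(k-1) \otimes \mathbf{f}^b(k) \right) \otimes \mathbf{q}^{n*}(k-1) + \mathbf{g}^n \right] \Delta t \\
\mathbf{q}^n(k-1) \otimes \Delta\mathbf{q}^n(k-1)
\end{bmatrix}, \tag{6}
$$

where $\mathbf{p}^n(k)$, $\mathbf{v}^n(k)$, $\mathbf{q}^n(k)$ represent position, velocity, and quaternion respectively at discrete time $k$, $\Delta t$ is the position and velocity update interval, $\mathbf{f}^b(k)$ is the specific force measured in the body frame, $\mathbf{g}^n$ is gravity in the navigation frame, $\mathbf{q}^{n*}(k)$ is the quaternion conjugate used for the vector transformation, $\otimes$ represents quaternion multiplication, and $\Delta\mathbf{q}^n(k)$ is a delta quaternion computed from gyroscope readings during the attitude update interval.

3.2 GPS/INS Integration

In the complementary GPS/INS architecture, the fusion filter estimates the errors in the INS by observing vehicle states. Hence the state is defined as the error in the position, velocity and attitude expressed in the navigation frame:

$$\delta\mathbf{x}(k) = \begin{bmatrix} \delta\mathbf{p}^n(k) & \delta\mathbf{v}^n(k) & \delta\boldsymbol{\psi}^n(k) \end{bmatrix}^T. \tag{7}$$

The system dynamic and observation equations in discrete time can be written as

$$\delta\mathbf{x}(k+1) = \mathbf{F}(k)\delta\mathbf{x}(k) + \mathbf{G}(k)\mathbf{w}(k) \tag{8}$$

$$\delta\mathbf{z}(k) = \mathbf{H}(k)\delta\mathbf{x}(k) + \mathbf{v}(k), \tag{9}$$

where the system transition matrix, the system input-noise matrix, and the noise strength are given by (Kim, 2004)

$$
\mathbf{F}(k) = \begin{bmatrix} \mathbf{I} & \Delta t\,\mathbf{I} & \mathbf{0} \\ \mathbf{0} & \mathbf{I} & \Delta t\,\mathbf{f}^n \\ \mathbf{0} & \mathbf{0} & \mathbf{I} \end{bmatrix}, \quad
\mathbf{G}(k) = \begin{bmatrix} \mathbf{0} & \mathbf{0} \\ \Delta t\,\mathbf{C}_b^n & \mathbf{0} \\ \mathbf{0} & -\Delta t\,\mathbf{C}_b^n \end{bmatrix}, \quad
\mathbf{Q}(k) = \begin{bmatrix} \boldsymbol{\sigma}_{\delta f}^2 & \mathbf{0} \\ \mathbf{0} & \boldsymbol{\sigma}_{\delta\omega}^2 \end{bmatrix}, \tag{10}
$$

with $\mathbf{C}_b^n$ being the direction cosine matrix formed from the quaternion, and $\boldsymbol{\sigma}_{\delta f}$ and $\boldsymbol{\sigma}_{\delta\omega}$ representing the noise strengths of acceleration and rotation rate respectively. The GPS observation is position and velocity, hence the observation matrix and observation noise strength become

$$
\mathbf{H}(k) = \begin{bmatrix} \mathbf{I} & \mathbf{0} & \mathbf{0} \\ \mathbf{0} & \mathbf{I} & \mathbf{0} \end{bmatrix}, \quad
\mathbf{R}(k) = \begin{bmatrix} \boldsymbol{\sigma}_p^2 & \mathbf{0} \\ \mathbf{0} & \boldsymbol{\sigma}_v^2 \end{bmatrix}. \tag{11}
$$

The filter input $\delta\mathbf{z}(k)$ is formed by subtracting the GPS position and velocity from the INS-indicated position and velocity, and is then fed to the fusion filter to estimate the errors in the INS.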
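The strapdown update of Eq. (6) and the formation of the filter input can be sketched in a few lines of Python. This is a minimal illustration rather than the flight code: the function names (`ins_step`, `delta_quat`, `filter_input`), the scalar-first quaternion layout, the constant-rate assumption over one interval, and the z-down gravity vector used in the example are all assumptions made here for clarity.

```python
import math

def quat_mul(a, b):
    """Hamilton product of two quaternions stored as (w, x, y, z)."""
    aw, ax, ay, az = a
    bw, bx, by, bz = b
    return (
        aw * bw - ax * bx - ay * by - az * bz,
        aw * bx + ax * bw + ay * bz - az * by,
        aw * by - ax * bz + ay * bw + az * bx,
        aw * bz + ax * by - ay * bx + az * bw,
    )

def quat_conj(q):
    """Quaternion conjugate q*."""
    w, x, y, z = q
    return (w, -x, -y, -z)

def rotate(q, v):
    """Transform a body-frame vector to the navigation frame: q (x) v (x) q*."""
    _, x, y, z = quat_mul(quat_mul(q, (0.0, *v)), quat_conj(q))
    return (x, y, z)

def delta_quat(gyro, dt):
    """Delta quaternion for body rates (rad/s) assumed constant over dt."""
    rate = math.sqrt(sum(w * w for w in gyro))
    if rate * dt < 1e-12:
        return (1.0, 0.0, 0.0, 0.0)
    half = 0.5 * rate * dt
    s = math.sin(half) / rate
    return (math.cos(half), gyro[0] * s, gyro[1] * s, gyro[2] * s)

def ins_step(p, v, q, f_b, gyro, g_n, dt):
    """One position/velocity/attitude update following the structure of Eq. (6)."""
    f_n = rotate(q, f_b)  # specific force resolved in the navigation frame
    p_new = tuple(pi + vi * dt for pi, vi in zip(p, v))
    v_new = tuple(vi + (fi + gi) * dt for vi, fi, gi in zip(v, f_n, g_n))
    q_new = quat_mul(q, delta_quat(gyro, dt))
    return p_new, v_new, q_new

def filter_input(ins_p, ins_v, gps_p, gps_v):
    """Observed error: INS-indicated position/velocity minus GPS position/velocity."""
    return tuple(a - b for a, b in zip(ins_p + ins_v, gps_p + gps_v))
```

For a stationary, level vehicle the accelerometers sense the reaction to gravity, so calling `ins_step` repeatedly with `f_b = (0, 0, -9.81)` and `g_n = (0, 0, 9.81)` leaves position and velocity at zero. Any drift that appears with real, noisy sensor data is what the fusion filter estimates from `filter_input`.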
The high-rate inertial navigation loop provides a continuous navigation solution using the inertial measurements, and the filter estimates the inertial errors whenever GPS information is available.

4 Physical System

4.1 UAV Platform

The ANSER (Autonomous Navigation and Sensing Experiment Research) project was conducted with multiple Brumby Mk III UAVs. The Mk III offers a maximum speed of 180 km/h and a roll rate of up to 300°/s. The airframe is modular in construction to enable the replacement or upgrade of each component. The Brumby series UAVs are compared in Figure 4 and their specifications are summarised in Table 1.

The Brumby UAV is a delta fixed-wing, pusher aircraft. The delta wing design requires no tail, is compact for a given wing area and has a minimal component count. The pusher design leaves a clear space in the nose for the radar and other sensors. Because the engine is located at the back, the sensors in the front section of the aircraft stay free from exhaust contamination. The larger, transparent nose cone of the Mk III has a rear fairing to blend the nose cone into the fuselage.

Tab. 1 The Brumby performance characteristics.

| Model | Engine | Max Speed | Payload | Endurance |
|---|---|---|---|---|
| Mk-I | 74 cc | 180 km/h | 5 kg | 20 min |
| Mk-II | 80 cc | 180 km/h | 14 kg | 20 min |
| Mk-III | 150 cc | 180 km/h | 20 kg | 45 min |

Fig. 4 Brumby UAV series: Mk I (left), Mk II (centre), Mk III (right).

4.2 On-board Computing System

The hardware of the feature-tracking and navigation system is installed in the fuselage of the Brumby Mk III. An embedded PC104 platform is used as the flight control system, performing navigation, guidance and control by fusing data from the IMU, GPS receivers and two tilt sensors. The IMU, from Inertial Science Inc., is very light and has a small form factor, which makes it suitable for UAV applications.
Two CMC Allstar GPS receivers are stacked on the flight control computer, with the antennae installed on each of the wings. The vision camera is installed next to the IMU to minimise the lever-arm offset. The camera is connected to a secondary PC104 vision computer, which performs the feature extraction and tracking tasks. The computing nodes communicate over an Ethernet bus.

Fig. 5 Flight control system with IMU (top left), tilt sensor (bottom left), GPS (top right) and vision camera (centre).

5 Results

Intensive flight tests were performed to demonstrate the ANSER program at the test site. The results shown in this paper are from the real-time flight test in June 2002.

Figures 6 and 7 illustrate real-time feature observations, which are converted from range, bearing and elevation to Cartesian coordinates and then transformed to the navigation frame. The real-time GPS/INS navigation solution is used for the coordinate transformation. During level flight paths, the x and y positions of the observed features are fairly close to the true feature positions. This is due firstly to the high accuracy of the camera's bearing and elevation observations, which was 0.16° and 0.12° respectively, and secondly to the consistent accuracy of the navigation solution.

During banking, however, large horizontal errors are introduced, as can be seen clearly in Figure 8. This is due to the large range errors being reflected into the horizontal plane. The range information extracted from the size of the feature is of extremely poor quality, ranging from 20 m to 100 m. In addition, the GPS satellite coverage during the experiments was quite poor, with only 6 satellites in view. When the aircraft banked it often lost lock on some of these satellites, which degraded the height estimate and subsequently the estimated height of the features.
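A rough worked example shows why the same range error is far more damaging during banking. It assumes the camera boresight is fixed to the body, so the angle between the line of sight and the vertical grows with the roll angle. The function name and the numbers (a 50 m range error, 5° and 35° off-vertical angles) are illustrative values chosen here, not measurements from the flight.

```python
import math

def range_error_components(range_error_m, off_vertical_deg):
    """Split a line-of-sight range error into horizontal and vertical parts.

    off_vertical_deg is the angle between the line of sight and the local
    vertical (illustrative geometry: small in level flight, large in a bank).
    """
    theta = math.radians(off_vertical_deg)
    horizontal = range_error_m * math.sin(theta)
    vertical = range_error_m * math.cos(theta)
    return horizontal, vertical

# Level flight: the error is almost entirely vertical.
level_h, level_v = range_error_components(50.0, 5.0)
# Banked flight: a large share of the same error appears horizontally.
bank_h, bank_v = range_error_components(50.0, 35.0)
print(f"level: horizontal {level_h:.1f} m, vertical {level_v:.1f} m")
print(f"bank:  horizontal {bank_h:.1f} m, vertical {bank_v:.1f} m")
```

Under these assumptions the horizontal component grows from a few metres in level flight to tens of metres in a 35° bank, which is consistent with the qualitative behaviour reported for the banked segments.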
Any error in the platform state results in an error in the feature location, which highlights the importance of an accurate estimate of the platform state in feature tracking.

During steep banking, many spurious observations, such as water reflections, are detected. These can be seen in the left corner of the flight path, and they make the estimated ranges extremely noisy and unreliable while banking. Hence observations taken when the roll angle was greater than 30° are discarded in the tracking system. The results after this filtering still show several areas, particularly on the lower left side of the plot, where clusters of observations are seen away from features. Rather than being spurious observations, these are actually natural features such as patches of sand (Nettleton, 2003), which appear in the images very similar to the artificial white features. They are detected consistently during every flight.

Figures 8 and 9 show the tracked feature positions within the vision node during the flight test. As the targets are known to be stationary, the tracking process model is tuned to decay velocity to zero in the filter prediction. Therefore, the errors in the velocity states are essentially zero over the duration of the flight. The horizontal plot shows feature positions during the first three rounds. The estimated feature positions are close to the true positions, but some covariance ellipses fail to include the true position. The main reason is the poor range performance coupled with the INS error, especially in roll angle. With successive observations of the feature, the effect of the range error can be reduced. The final tracking result after nine rounds shows that most of the estimated feature positions are within the 2σ uncertainty boundary of the true position. These results show that the low-cost GPS/INS navigation system developed can be effectively used for airborne feature tracking.
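The behaviour described above (velocity decaying to zero in prediction, uncertainty shrinking with successive observations, and the 2σ consistency check) can be sketched for a single axis. This is a simplified illustration, not the filter flown on the vehicle: it reduces the model of Section 2.2 to one position/velocity pair with a scalar position observation, which assumes uncorrelated observation noise between axes. The function names, the tuning values and the observation list are hypothetical.

```python
import math

def predict(x, P, dt, gamma, sigma_v):
    """Propagate [position, velocity] with the integrated Ornstein-Uhlenbeck model."""
    fv = math.exp(-gamma * dt)   # F_v = exp(-gamma * dt): velocity decays toward zero
    g = dt * (1.0 - fv)          # velocity entry of the input noise matrix G
    x_new = [x[0] + dt * x[1], fv * x[1]]
    p00, p01, p11 = P[0][0], P[0][1], P[1][1]
    # P_new = F P F^T + G Q G^T with F = [[1, dt], [0, fv]] and G = [0, g]^T
    n00 = p00 + 2.0 * dt * p01 + dt * dt * p11
    n01 = fv * (p01 + dt * p11)
    n11 = fv * fv * p11 + g * g * sigma_v ** 2
    return x_new, [[n00, n01], [n01, n11]]

def update(x, P, z, r, gate=9.0):
    """Scalar position update with an innovation gate (normalised innovation squared)."""
    innov = z - x[0]
    s = P[0][0] + r
    if innov * innov / s > gate:
        return x, P              # observation rejected by the gate
    k0, k1 = P[0][0] / s, P[0][1] / s
    x_new = [x[0] + k0 * innov, x[1] + k1 * innov]
    p00 = (1.0 - k0) * P[0][0]
    p01 = (1.0 - k0) * P[0][1]
    p11 = P[1][1] - k1 * P[0][1]
    return x_new, [[p00, p01], [p01, p11]]

def track_axis(observations, r, dt=1.0, gamma=1.0, sigma_v=0.1):
    """Initialise from the first observation, then predict/update on the rest."""
    x = [observations[0], 0.0]
    P = [[r, 0.0], [0.0, 1.0]]
    for z in observations[1:]:
        x, P = predict(x, P, dt, gamma, sigma_v)
        x, P = update(x, P, z, r)
    return x, P

def within_two_sigma(estimate, variance, truth):
    """True if the true position lies inside the 2-sigma bound of the estimate."""
    return abs(estimate - truth) <= 2.0 * math.sqrt(variance)

# Hypothetical observations of a feature whose true coordinate is 100 m.
x, P = track_axis([104.0, 97.0, 101.5, 99.0, 100.5], r=25.0)
print(x[0], P[0][0], within_two_sigma(x[0], P[0][0], 100.0))
```

The position variance `P[0][0]` falls below the single-observation variance `r` as observations accumulate, mirroring the improvement seen between the first three rounds and the final result after nine rounds.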
6 Conclusions

This paper presented real-time results of an airborne feature-tracking system on a UAV platform. The system incorporated a cost-effective vision system and a GPS/INS navigation system. The vision system provides bearing and elevation observations, as well as range information based on the known feature size. The feature position is computed using the GPS/INS solution and is then used as the observation in the tracking filter. In spite of the large range error in the vision system and the low-cost sensors used, the tracked feature positions showed quite promising performance, with an accuracy of several metres. This also validates the accuracy of the on-board navigation system.

Fig. 6 Vision observations plotted in the navigation frame. Horizontal feature position shows good performance due to the accurate bearing and elevation observation.

Fig. 7 3D-view of the vision observations in the navigation frame. The vertical position shows large errors due to the poor range accuracy in vision system.

Fig. 8 Feature tracking result of final feature positions with 2σ uncertainty ellipsoids. Observations when the aircraft is banking at greater than 30° are ignored in the tracking filter (plots from Nettleton, 2003).

Fig. 9 Enhanced view of some features with 2σ uncertainty ellipsoids.

Acknowledgements

This work is supported in part by the ARC Centre of Excellence programme, funded by the Australian Research Council (ARC) and the New South Wales State Government. The Autonomous Navigation and Sensing Experimental Research (ANSER) Project is funded by BAE SYSTEMS UK and BAE SYSTEMS Australia.

References

Blackman S. (1986): Multiple-Target Tracking with Radar Applications, Artech House, Norwood.

Bar-Shalom Y.; Blair W. (2000): Multitarget-Multisensor Tracking: Applications and Advances, Volume 3, Artech House, Norwood.

Nettleton E. (2003): Decentralised Architectures for Tracking and Navigation with Multiple Flight Vehicles, PhD thesis, Australian Centre for Field Robotics, The University of Sydney.

Kim J. (2004): Autonomous Navigation for Airborne Applications, PhD thesis, Australian Centre for Field Robotics, The University of Sydney.

Ridley M.; Nettleton E.; Sukkarieh S.; Durrant-Whyte H. (2002): Tracking in Decentralised Air-Ground Sensing Networks, In International Conference on Information Fusion, July, Annapolis, Maryland, USA.

Stone D. L.; Barlow C. A.; Corwin T. L. (1999): Bayesian Multiple Target Tracking, Artech House.