Comparison between Neural Network and Adaptive Neuro-Fuzzy Inference System for

Forecasting Chaotic Traffic Volumes


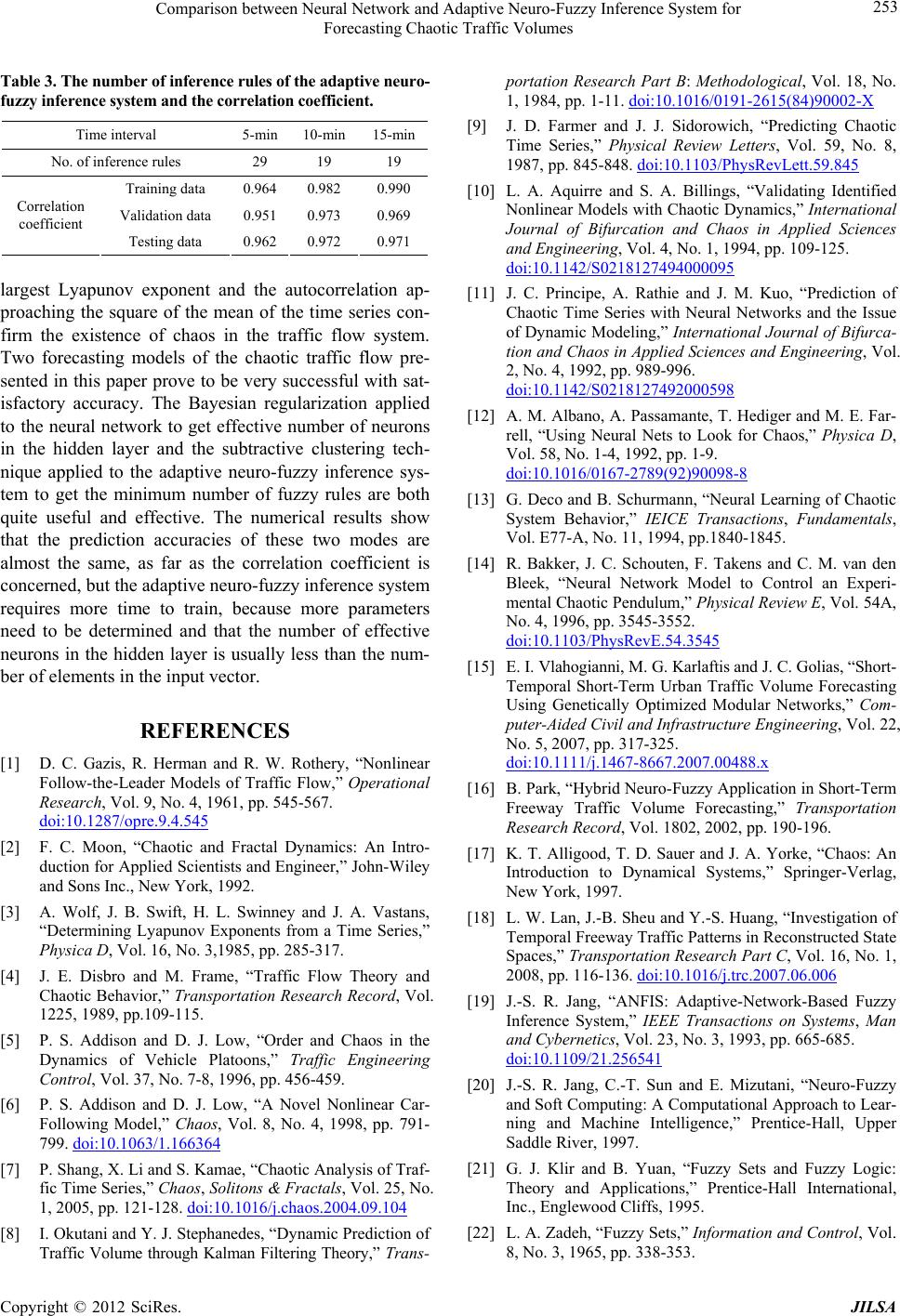

Table 3. The number of inference rules of the adaptive neuro-fuzzy inference system and the correlation coefficient.

| Time interval | 5-min | 10-min | 15-min |
|---|---|---|---|
| No. of inference rules | 29 | 19 | 19 |
| Correlation coefficient, training data | 0.964 | 0.982 | 0.990 |
| Correlation coefficient, validation data | 0.951 | 0.973 | 0.969 |
| Correlation coefficient, testing data | 0.962 | 0.972 | 0.971 |
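As an illustration of the accuracy measure reported in Table 3, the following minimal sketch computes a Pearson correlation coefficient between observed and predicted traffic volumes. It assumes the paper's correlation coefficient is the usual Pearson definition; the function name `correlation_coefficient` and the sample volumes are hypothetical and are not taken from the paper's data.

```python
import math


def correlation_coefficient(observed, predicted):
    """Pearson correlation between two equal-length numeric sequences."""
    if len(observed) != len(predicted) or len(observed) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    n = len(observed)
    mean_obs = sum(observed) / n
    mean_pred = sum(predicted) / n
    # Sum of cross-products and sums of squares of the centered series.
    cov = sum((o - mean_obs) * (p - mean_pred)
              for o, p in zip(observed, predicted))
    var_obs = sum((o - mean_obs) ** 2 for o in observed)
    var_pred = sum((p - mean_pred) ** 2 for p in predicted)
    if var_obs == 0 or var_pred == 0:
        raise ValueError("correlation is undefined for a constant series")
    return cov / math.sqrt(var_obs * var_pred)


# Hypothetical traffic volumes (vehicles per interval), for illustration only.
observed = [120.0, 135.0, 150.0, 142.0, 128.0]
predicted = [118.0, 137.0, 147.0, 145.0, 126.0]
r = correlation_coefficient(observed, predicted)
print(f"correlation coefficient: {r:.3f}")
```

Evaluating the same function separately on the training, validation, and testing subsets would give one value per row of the table for each time interval.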

largest Lyapunov exponent and the autocorrelation approaching the square of the mean of the time series confirm the existence of chaos in the traffic flow system. The two forecasting models of chaotic traffic flow presented in this paper both achieve satisfactory accuracy. Bayesian regularization, applied to the neural network to obtain the effective number of neurons in the hidden layer, and the subtractive clustering technique, applied to the adaptive neuro-fuzzy inference system to obtain the minimum number of fuzzy rules, are both useful and effective. The numerical results show that the prediction accuracies of the two models are almost the same as far as the correlation coefficient is concerned, but that the adaptive neuro-fuzzy inference system requires more time to train because more parameters need to be determined. They also show that the number of effective neurons in the hidden layer is usually less than the number of elements in the input vector.
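To make the role of subtractive clustering concrete, the sketch below shows a simplified version of the technique in plain Python. Each data point receives a potential based on how many neighbours lie close to it, the point with the highest potential becomes a cluster center, and the potential around that center is then subtracted before the next center is chosen. In the usual neuro-fuzzy setup each center seeds one fuzzy rule, so the number of centers returned corresponds to the number of rules. The function name, the parameter values (`radius`, `squash`, `stop_ratio`), and the sample points are assumptions made for illustration, and the single stopping threshold is a simplification of the accept/reject test in the full method; none of this is the configuration used in the paper.

```python
import math


def subtractive_clustering(points, radius=0.5, squash=1.5, stop_ratio=0.15):
    """Simplified subtractive clustering on points scaled to the unit box.

    Returns the list of points selected as cluster centers.
    """
    if not points:
        return []
    alpha = 4.0 / radius ** 2             # neighbourhood width for potentials
    beta = 4.0 / (squash * radius) ** 2   # wider width used when subtracting

    def sqdist(a, b):
        return sum((u - v) ** 2 for u, v in zip(a, b))

    # Initial potential: a density estimate at every data point.
    potential = [sum(math.exp(-alpha * sqdist(p, q)) for q in points)
                 for p in points]
    first_peak = max(potential)
    centers = []
    while True:
        idx = max(range(len(points)), key=potential.__getitem__)
        peak = potential[idx]
        # Simplified stopping rule (assumption): stop once the remaining
        # peak is small relative to the first one.
        if peak < stop_ratio * first_peak:
            break
        centers.append(points[idx])
        # Reduce the potential near the new center so that the next
        # center is chosen from a different region.
        for i, p in enumerate(points):
            potential[i] -= peak * math.exp(-beta * sqdist(p, points[idx]))
    return centers


# Hypothetical, already normalized 2-D samples forming two tight groups.
points = [(0.10, 0.10), (0.12, 0.09), (0.09, 0.13),
          (0.90, 0.90), (0.88, 0.91), (0.91, 0.87)]
centers = subtractive_clustering(points)
print(f"{len(centers)} cluster centers, i.e. {len(centers)} candidate rules")
```

A larger `radius` merges nearby groups and yields fewer centers, which is how the technique keeps the rule base small.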


Copyright © 2012 SciRes. JILSA