B. KUMPHON 419

[Equation (25): a likelihood equation, with sums over $i = 1, \dots, n$ of logarithmic terms in $x_i$.]

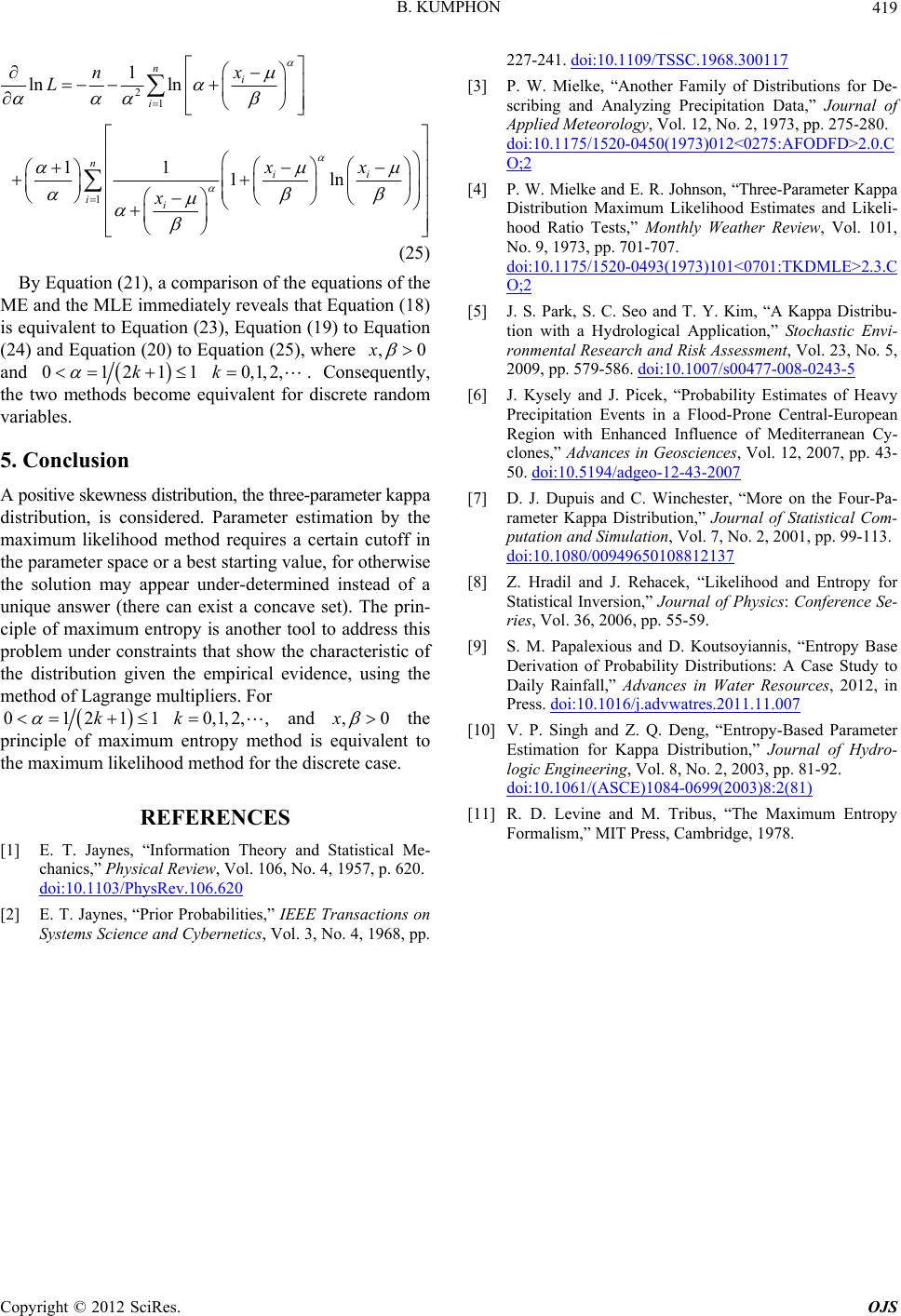

By Equation (21), comparing the ME equations with the MLE equations shows directly that Equation (18) is equivalent to Equation (23), Equation (19) to Equation (24), and Equation (20) to Equation (25), where [...] and [...] 0, 1, 2, [...]. Consequently, the two methods are equivalent for discrete random variables.
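The following generic sketch illustrates why an equivalence of this kind can arise. It is not the paper's own derivation: the constraint functions $g_j$, the multipliers $\lambda_j$ and the discrete support are assumptions introduced here for illustration. If the maximum entropy solution has the usual exponential form, then setting the derivative of the log-likelihood with respect to each multiplier to zero reproduces the moment constraints of the maximum entropy problem, with the empirical averages on the right-hand side.

```latex
% Assumed generic setting: discrete support, constraint functions g_j, multipliers \lambda_j.
\begin{align*}
p(x;\lambda) &= \exp\Bigl(-\lambda_0 - \sum_{j} \lambda_j g_j(x)\Bigr),
\qquad
\lambda_0 = \ln \sum_{x} \exp\Bigl(-\sum_{j} \lambda_j g_j(x)\Bigr), \\
\ln L(\lambda) &= -n\lambda_0 - \sum_{j} \lambda_j \sum_{i=1}^{n} g_j(x_i), \\
\frac{\partial \lambda_0}{\partial \lambda_j} &= -\mathrm{E}\bigl[g_j(X)\bigr], \\
\frac{\partial \ln L}{\partial \lambda_j}
 &= n\,\mathrm{E}\bigl[g_j(X)\bigr] - \sum_{i=1}^{n} g_j(x_i) = 0
 \;\Longleftrightarrow\;
 \mathrm{E}\bigl[g_j(X)\bigr] = \frac{1}{n}\sum_{i=1}^{n} g_j(x_i).
\end{align*}
```

The last line is the set of maximum entropy constraints evaluated at the sample averages, so in this assumed setting both methods solve the same system of equations.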

5. Conclusion

A positively skewed distribution, the three-parameter kappa distribution, is considered. Parameter estimation by the maximum likelihood method requires a certain cutoff in the parameter space or a good starting value; otherwise the solution may be under-determined rather than unique (a concave set can exist). The principle of maximum entropy is another tool for addressing this problem: using the method of Lagrange multipliers, the entropy is maximized under constraints that express the characteristics of the distribution given the empirical evidence. For [...] 0, 1, 2, [...], and [...], the maximum entropy method is equivalent to the maximum likelihood method in the discrete case.
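The dependence of maximum likelihood estimation on the starting value can be explored numerically. The sketch below is an illustration under stated assumptions, not the paper's procedure. It assumes the Mielke-Johnson form of the density, $f(x) = (\alpha\theta/\beta)(x/\beta)^{\theta-1}[\alpha + (x/\beta)^{\alpha\theta}]^{-(\alpha+1)/\alpha}$ for $x > 0$, which may differ from the parametrization used in the earlier sections. The function names (`kappa3_logpdf`, `kappa3_sample`, `neg_log_likelihood`, `pattern_search`) are hypothetical, and the simple pattern search is only a stand-in for whatever optimizer one would actually use.

```python
import math
import random


def kappa3_logpdf(x, alpha, beta, theta):
    """Log-density of the ASSUMED three-parameter kappa form (see lead-in)."""
    if min(x, alpha, beta, theta) <= 0.0:
        return -math.inf
    log_r = math.log(x / beta)
    z = alpha * theta * log_r
    # Numerically stable log(alpha + exp(z)).
    m = max(math.log(alpha), z)
    log_term = m + math.log(math.exp(math.log(alpha) - m) + math.exp(z - m))
    return (math.log(alpha * theta / beta) + (theta - 1.0) * log_r
            - (alpha + 1.0) / alpha * log_term)


def kappa3_sample(n, alpha, beta, theta, rng):
    """Inverse-CDF sampling from F(x) = [u / (alpha + u)]^(1/alpha),
    where u = (x / beta)^(alpha * theta)."""
    out = []
    for _ in range(n):
        q = rng.uniform(1e-12, 1.0 - 1e-12) ** alpha  # q = F(x)^alpha
        out.append(beta * (alpha * q / (1.0 - q)) ** (1.0 / (alpha * theta)))
    return out


def neg_log_likelihood(log_params, data):
    """Negative log-likelihood in log-parameters, so positivity is automatic."""
    if any(abs(v) > 50.0 for v in log_params):  # crude cutoff in parameter space
        return math.inf
    alpha, beta, theta = (math.exp(v) for v in log_params)
    return -sum(kappa3_logpdf(x, alpha, beta, theta) for x in data)


def pattern_search(f, start, step=0.5, tol=1e-4, max_iter=2000):
    """Simple coordinate pattern search (a stand-in local optimizer)."""
    x, fx = list(start), f(start)
    for _ in range(max_iter):
        improved = False
        for j in range(len(x)):
            for d in (step, -step):
                trial = x[:]
                trial[j] += d
                ft = f(trial)
                if ft < fx:
                    x, fx, improved = trial, ft, True
                    break
        if not improved:
            step *= 0.5  # shrink the step once no neighbour improves
            if step < tol:
                break
    return x, fx


if __name__ == "__main__":
    rng = random.Random(0)
    data = kappa3_sample(200, 1.0, 1.0, 2.0, rng)
    # Two different starting values; compare the fitted parameters and NLL.
    for start in ([1.0, 1.0, 1.0], [5.0, 0.2, 0.5]):
        log_fit, nll = pattern_search(
            lambda p: neg_log_likelihood(p, data),
            [math.log(s) for s in start],
        )
        print(start, [round(math.exp(v), 3) for v in log_fit], round(nll, 3))
```

Running the script with several starting values and comparing the fitted parameters and the attained negative log-likelihood gives a quick check of whether the likelihood surface has a well-defined optimum for a given sample. The bound of 50 on the log-parameters plays the role of a cutoff in the parameter space.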


Copyright © 2012 SciRes. OJS