F. PORZSOLT ET AL.

Acad.

teaching

and CME

Real world

outcomes

Clinical

guidelines

Health care

expenditure

Clinical

practice

Approval of

innovation

Clinical

research

Publication

of the results

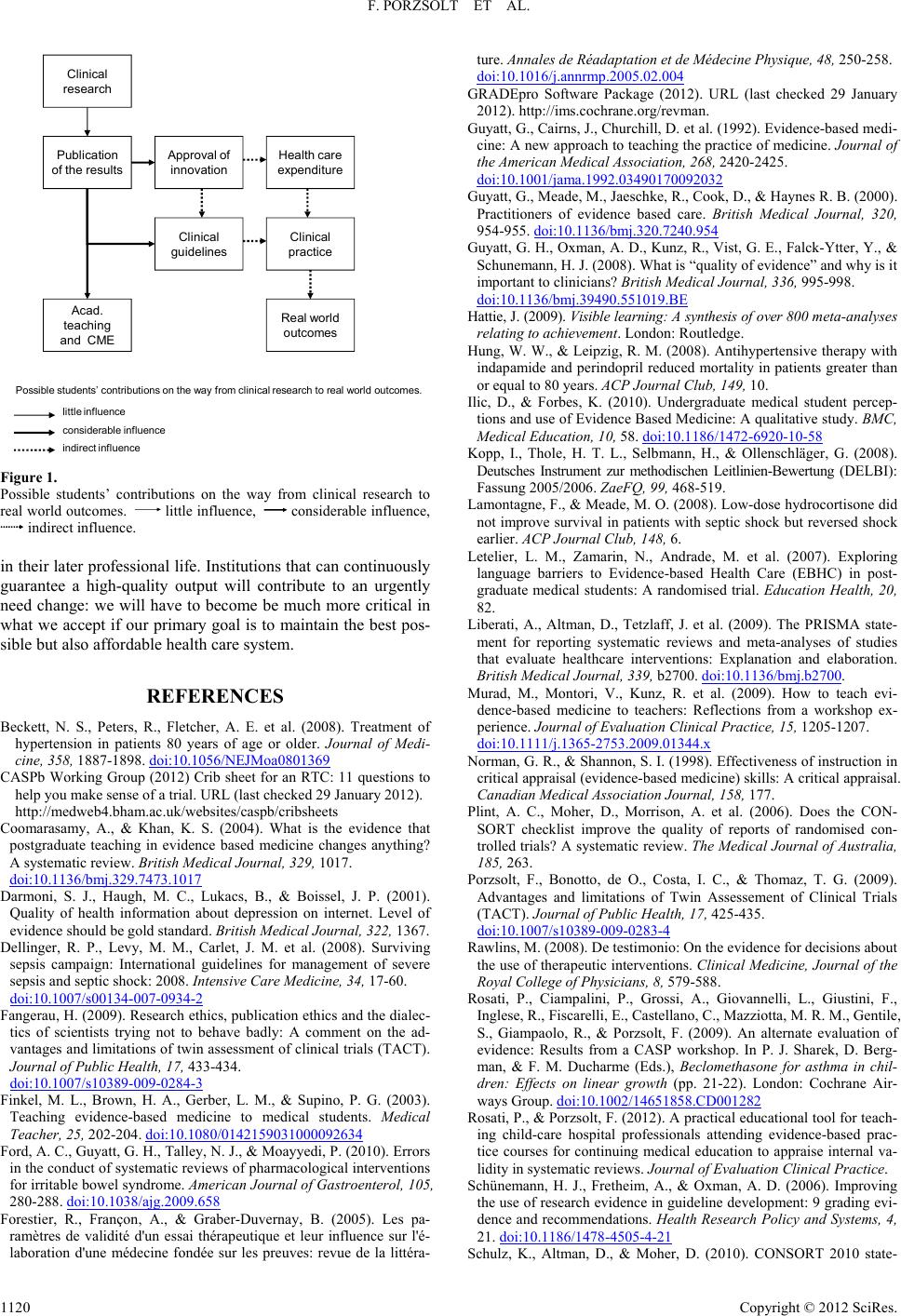

Possible students’ contributions on the way from clinical research to real world outcomes.

little influence

considerable influence

indirect influence

Figure 1.

Possible students’ contributions on the way from clinical research to

real world outcomes. little influence, considerable influence,

indirect influence.

in their later professional life. Institutions that can continuously guarantee a high-quality output will contribute to an urgently needed change: we will have to become much more critical in what we accept if our primary goal is to maintain the best possible but also affordable health care system.


Copyright © 2012 SciRes.
