Int'l J. of Communications, Network and System Sciences, Vol. 7 No. 8 (2014), Article ID: 48471, 14 pages

DOI: 10.4236/ijcns.2014.78029

QoE Assessment of Will Transmission Using Vision and Haptics in Networked Virtual Environment

Pingguo Huang¹, Yutaka Ishibashi²

¹Department of Management Science, Tokyo University of Science, Tokyo, Japan

²Department of Scientific and Engineering Simulation, Nagoya Institute of Technology, Nagoya, Japan

Email: huang@ms.kagu.tus.ac.jp, Ishibasi@nitech.ac.jp

Copyright © 2014 by authors and Scientific Research Publishing Inc.

This work is licensed under the Creative Commons Attribution International License (CC BY).

http://creativecommons.org/licenses/by/4.0/

Received 26 June 2014; revised 20 July 2014; accepted 30 July 2014

ABSTRACT

In this paper, we deal with collaborative work in which two users move an object together to eliminate a target in a 3-D virtual space. In the work, the users transmit their wills about the movement direction of the object to each other by haptics only and by haptics and vision (with or without an arrow drawn to indicate the direction of the force applied to the object by the other user). We carry out QoE (Quality of Experience) assessment subjectively and objectively to investigate the influence of network delay on will transmission. As a result, we clarify the effects of vision on the transmissibility of wills by haptics.

Keywords: Networked Virtual Environment, Vision, Haptics, Will Transmission, Network Delay, QoE

1. Introduction

In recent years, a number of researchers have been directing their attention to networked haptic environments. Since we can largely improve the efficiency of collaborative work and obtain highly realistic sensations by using haptic sensation together with other sensations such as visual and auditory sensations [1]-[3], haptic sensation is utilized in various fields such as medical, artistic, and educational fields [4]. In haptic collaborative work, users can feel the sense of force from each other interactively while doing the work. In previous studies, the effects of visual sensation on the sensitivity to elasticity [5] and virtual manipulation assistance by haptics [6] have been investigated. Also, the influences of network delay on the operability of haptic interface devices have been investigated [7]-[12]. In particular, the experimental results in [5] show that the accuracy of the threshold of sensitivity to elasticity is improved by visual sensation. However, very few studies focus on the transmission of wills (for example, an object’s movement direction) among users. In [7] through [12], since the role of each user, the master-slave relationship, and/or the direction in which each user wants to move an object (i.e., the user’s will) in collaborative work are determined in advance, it is not so important for the users to transmit their wills to each other. In [13], two users lift and move an object cooperatively while one of the two users instructs the direction of the object’s movement by voice. Also, the efficiency of the work with voice is compared with that of the work without voice, in which one of the two users is asked to follow the other’s movement.

However, the role of each user, the master-slave relationship, and/or the movement direction of the object are not always determined beforehand, and there exist many cases in which users stand on an equal footing. In these cases, it is necessary for the users to transmit their wills to each other. Traditionally, wills can be transmitted by audio and video. However, doing so takes much more time, and it may be difficult to transmit wills in delicate manipulation such as remote surgery training. In contrast, will transmission using haptics may reduce the transmission time, and it makes it possible to transmit wills in delicate manipulation work in which it is difficult to transmit wills only by audio and video. Therefore, it is very important to establish an efficient method to transmit wills by haptics for collaborative work, especially for delicate manipulation work.

This paper focuses on will transmission using haptics. We investigate how to transmit wills by haptics, what types of forces are more suitable for will transmission, and the influences of vision on will transmission using haptics. Specifically, we deal with collaborative work in which users stand on an equal footing and the object’s movement directions are not determined in advance. In the work, two users collaboratively lift and move an object, and each user tries to transmit his/her will about the object’s movement direction (i.e., the user’s will) to the other user by haptics (for example, when the user wants to move the object to the right, he/she applies a rightward force to the object). We deal with two types of will transmission methods in which the users transmit their wills to each other by haptics only and by haptics and vision (with or without an arrow drawn to indicate the direction of the force applied to the object by the other user). We carry out QoE (Quality of Experience) [14] assessment subjectively and objectively [15] to investigate the influences of network delay on will transmission.

The remainder of this paper is organized as follows. Section 2 describes the collaborative work and the methods of will transmission. Section 3 introduces our assessment system. Section 4 explains the force calculation of the assessment system, and Section 5 explains the assessment methods. Assessment results are presented in Section 6, and Section 7 concludes the paper.

2. Will Transmission

2.1. Collaborative Work

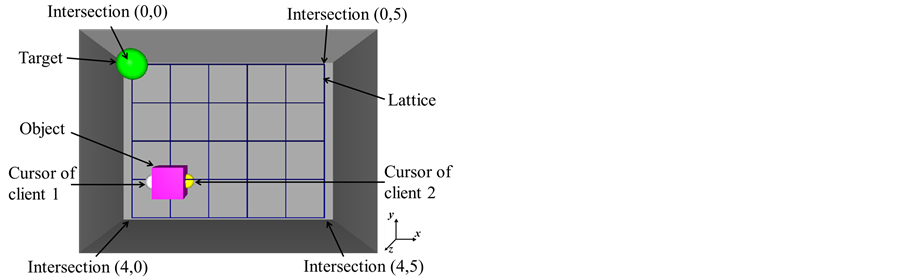

In the collaborative work, we employ a client-server model [13] which consists of a server and two clients (clients 1 and 2). In a 3-D virtual space (height: 120 mm, width: 160 mm, depth: 70 mm) shown in Figure 1, two users manipulate haptic interface devices, PHANToM Omnis [16] (called PHANToMs in this paper), which are connected to the two clients, and cooperatively lift and move an object (a rigid cube with a side of 20 mm and a mass of 500 g, which does not tilt) by putting the object between the two cursors (which denote the positions of the PHANToM styli’s tips in the virtual space) [13]. The static friction coefficient and dynamic friction coefficient between the cursors and the object are set to 0.6 and 0.4, respectively. Since the gravitational acceleration in the virtual space is set to 2.0 m/s²†1, the object drops onto the floor if it is not pushed from both sides with sufficient force. Furthermore, at a depth of 35 mm from the back of the virtual space, there is a lattice which consists of 20 squares (25 mm on a side). The two users cooperatively lift and move the object along the lines of the lattice to contain a target (a sphere with a radius of 20 mm) [13]. An intersection of the lattice in the i-th row (0 ≤ i ≤ 4) and the j-th column (0 ≤ j ≤ 5) is referred to as intersection (i, j) here (in Figure 1, the object is at intersection (3, 1)). When the target is contained by the object, it disappears and then appears at a randomly-selected intersection.
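To give a feel for how hard the object must be pushed from both sides, the following is a rough sketch based on a plain Coulomb friction model with two contact faces. This model is an assumption made for illustration and is not necessarily how the assessment system decides that the object drops; the function names are hypothetical, while the mass, gravitational acceleration, and static friction coefficient are the values given above.

```python
# Rough Coulomb-friction estimate (illustrative assumption, not the authors' engine).
MASS_KG = 0.5      # object mass from the text (500 g)
GRAVITY = 2.0      # gravitational acceleration in the virtual space, m/s^2
MU_STATIC = 0.6    # static friction coefficient between cursor and object


def min_grip_force(mass_kg=MASS_KG, g=GRAVITY, mu=MU_STATIC, contacts=2):
    """Smallest normal force per cursor (N) at which static friction
    on `contacts` faces can carry the object's weight."""
    weight = mass_kg * g
    return weight / (contacts * mu)


def holds(normal_force_n):
    """True if pushing with this force per cursor keeps the object from sliding."""
    return normal_force_n >= min_grip_force()


print(round(min_grip_force(), 3))  # weight of 1.0 N shared by two faces
```

Under these assumptions, each user would need to push with roughly 0.83 N or more; a lower gravitational acceleration lowers this threshold proportionally.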

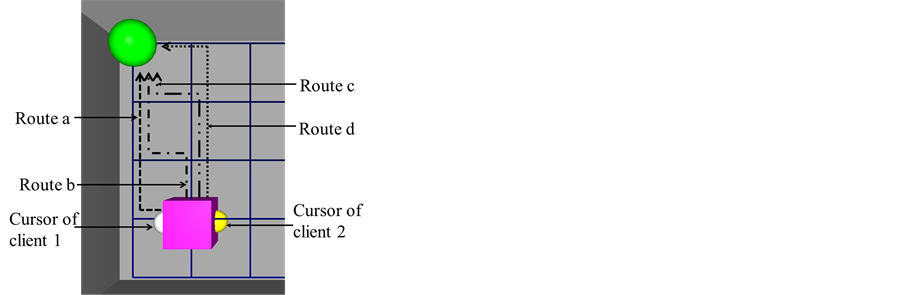

Figure 1. Displayed image of virtual space.

Figure 2. Example of shortest routes.

When the users move the object to contain the target, they are asked to move the object via one of the shortest routes. For example, when the target and object are at the positions shown in Figure 1, there exist multiple shortest routes (called routes a through d here) from the object to the target (see Figure 2). Therefore, at the intersections where the users need to determine the object’s movement direction (for example, at intersections (3, 1) and (2, 1) in route b, and at intersections (3, 1), (2, 1), and (1, 1) in routes c and d), they try to transmit their wills to each other by haptics. If one user can know the other’s will, he/she tries to follow the will. Otherwise, he/she moves the object based on his/her own will.
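The route example can be reproduced with a short enumeration. The sketch below lists all shortest lattice routes between two intersections and the intersections on each route where a direction still has to be chosen. The target position (0, 0) is an assumption inferred from the four routes and the decision intersections listed above; it is not stated in the text, and the function names are hypothetical.

```python
from itertools import permutations


def shortest_routes(start, goal):
    """Enumerate all shortest lattice routes as lists of (row, col) intersections."""
    dr = goal[0] - start[0]
    dc = goal[1] - start[1]
    row_step = (1 if dr > 0 else -1, 0)
    col_step = (0, 1 if dc > 0 else -1)
    steps = [row_step] * abs(dr) + [col_step] * abs(dc)
    routes = []
    # Each distinct ordering of the unit steps is one shortest route.
    for order in sorted(set(permutations(steps))):
        pos, route = start, [start]
        for s in order:
            pos = (pos[0] + s[0], pos[1] + s[1])
            route.append(pos)
        routes.append(route)
    return routes


def decision_points(route, goal):
    """Intersections on the route where both a row move and a column move
    toward the goal are still possible, so a direction must be chosen."""
    return [p for p in route[:-1] if p[0] != goal[0] and p[1] != goal[1]]


# Object at intersection (3, 1); target assumed at (0, 0).
for r in shortest_routes((3, 1), (0, 0)):
    print(r, decision_points(r, (0, 0)))
```

With these positions the enumeration yields four routes whose decision intersections are (3, 1) alone, (3, 1) and (2, 1), and, for the remaining two routes, (3, 1), (2, 1), and (1, 1).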

2.2. Will Transmission Methods

As described in Subsection 2.1, at the intersections where the users need to determine the object’s movement direction, they try to transmit their wills to each other. We deal with three cases (cases 1, 2, and 3) of will transmission. In case 1, the users transmit their wills to each other by haptics only. In case 2, they transmit their wills to each other by haptics and vision without an arrow drawn to indicate the direction of the force applied to the object by the other user [17], and in case 3, they do so by haptics and vision with the arrow drawn.

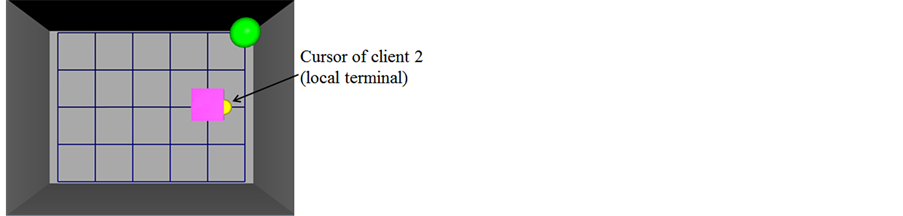

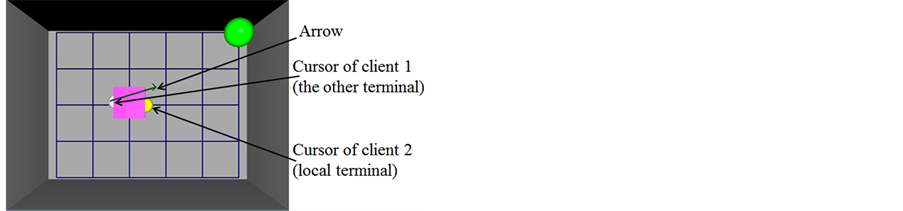

In case 1, for example, when the target and object are located at the positions shown in Figure 2, if the user at client 2 wants to move the object along route a, he/she needs to push the object strongly to the left. At this time, if the user at client 1 feels a strong leftward force through the object, he/she judges that the other user wants to move the object to the left. If the user at client 2 wants to move the object along one of the other routes (i.e., routes b, c, and d), that is, the user wants to move the object upward, he/she tries to push the object to the upper left. At this time, since the user at client 1 feels a slightly weaker leftward force through the object than in the previous situation, he/she judges that the other user wants to move the object upward. If the user at client 1 wants to move the object to the left, he/she applies a weak rightward force to the object so that the object does not fall down. At this time, the user at client 2 feels a weak rightward force and judges that the other user wants to move the object to the left. If the user at client 1 wants to move the object upward, he/she applies a stronger rightward force than when he/she wants to move the object to the left. At this time, the user at client 2 feels a strong rightward force and knows that the other user wants to move the object upward. In this case, their wills are transmitted by haptics only, and the other’s movement (i.e., the movement of the other’s cursor) cannot be seen at the local terminal (see Figure 3). In case 2, the user at each client can see the other’s movement (see Figure 1), and it may be possible for the user to know the other’s will from the movement of the other’s cursor. In case 3, at each client, we display the movement of the other’s cursor and draw an arrow to indicate the direction of the force applied to the object by the other user (see Figure 4). The arrow is drawn with its starting point at the front side of the center point of the other’s cursor. The direction of the arrow is the same as the direction of the force applied by the other user, and its length is proportional to the magnitude of the force.
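The arrow geometry in case 3 can be sketched as follows. The scale factor that converts force into arrow length is a hypothetical parameter (the text only states proportionality), and the function name is likewise an illustration rather than part of the assessment system.

```python
def arrow_endpoints(start, force, scale_mm_per_n=10.0):
    """Return (start, end) of an arrow that points along `force` and whose
    length is proportional to the force magnitude.

    start          -- arrow origin in the virtual space (mm), 3-D tuple
    force          -- force applied by the other user (N), 3-D tuple
    scale_mm_per_n -- hypothetical display scale, mm of arrow per newton
    """
    end = tuple(s + scale_mm_per_n * f for s, f in zip(start, force))
    return start, end


# A 1 N leftward-to-rightward push drawn from the cursor's center.
print(arrow_endpoints((0.0, 0.0, 0.0), (1.0, 0.0, 0.0)))
```

Because the end point is the start point plus a scaled copy of the force vector, doubling the force doubles the arrow length without changing its direction.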

3. Assessment System

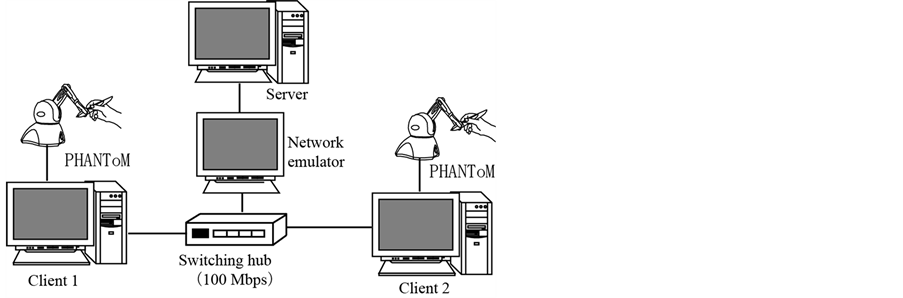

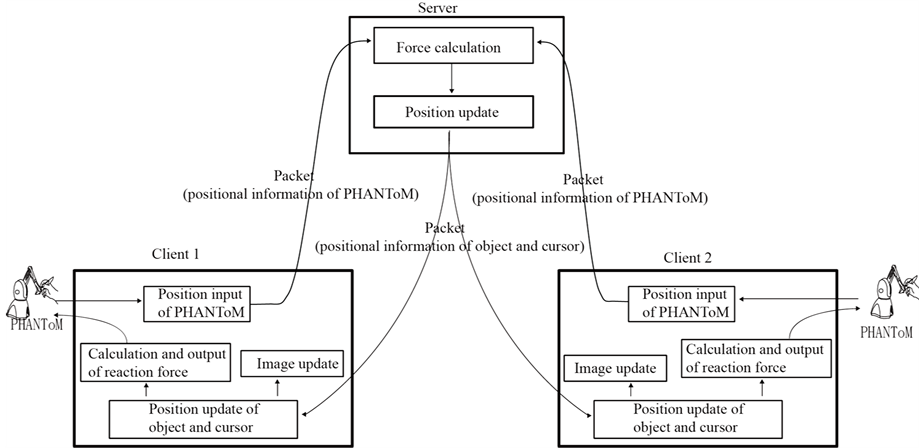

As shown in Figure 5, the assessment system consists of a server and two clients which are connected via a switching hub and a network emulator (NIST Net [18]) by using Ethernet cables (100 Mbps). The size of each packet of position information transmitted from the server to each client is 72 bytes, and that of each packet transmitted from each client to the server is 32 bytes. By using the network emulator, we generate a constant delay for each packet transmitted from each client to the server. We employ UDP as the transport protocol to transmit the packets. The functions of the server and clients are shown in Figure 6 and are explained in what follows.

Figure 3. Displayed image of virtual space (will transmission by only haptics).

Figure 4. Displayed image of virtual space (will transmission by haptics and vision (with drawing cursor and arrow)).

Figure 5. Configuration of assessment system.

Figure 6. Functions at server and clients.

Each client performs haptic simulation by repeating the servo loop [16] at a rate of 1 kHz, and the client inputs/outputs position information at this rate. The client adds a timestamp and a sequence number to the position information and transmits it to the server. When the server receives the position information from the two clients, it calculates the position of the object every millisecond by using the spring-damper model [16] and transmits the position information to the two clients every millisecond. When each client receives the position information, the client updates the position of the object and then calculates the reaction force applied to the user. The image is updated at a rate of about 60 Hz at each client.
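As an illustration of attaching a sequence number and a timestamp to position information, the sketch below packs and unpacks one update with Python's standard `struct` module. The field layout is entirely hypothetical: the paper does not describe the packet format, and this layout occupies 24 bytes, so it does not reproduce the 32-byte and 72-byte packets of the assessment system.

```python
import struct

# Hypothetical layout (little-endian, no padding):
#   uint32  sequence number
#   float64 timestamp in seconds
#   3 x float32 cursor position in mm
POSITION_FORMAT = "<Id3f"


def pack_position(seq, timestamp, position):
    """Serialize one position update into bytes for a UDP payload."""
    return struct.pack(POSITION_FORMAT, seq, timestamp, *position)


def unpack_position(payload):
    """Inverse of pack_position; returns (seq, timestamp, (x, y, z))."""
    seq, timestamp, x, y, z = struct.unpack(POSITION_FORMAT, payload)
    return seq, timestamp, (x, y, z)


payload = pack_position(7, 12.5, (1.5, -2.25, 30.0))
print(len(payload), unpack_position(payload))
```

A receiver could use the sequence number to detect lost or reordered UDP packets and the timestamp to estimate how old a position is when it arrives.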

4. Force Calculation

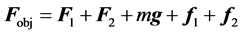

When the two users (the user at client i (i = 1, 2) is called user i) collaboratively lift and move the object, there are forces exerted by the two users, gravitation, and frictional force applied on the object. The resultant force Fobj applied to the object is calculated as follows:

$$F_{\mathrm{obj}} = \sum_{i=1}^{2} \left( F_i + f_i \right) + m g \qquad (1)$$

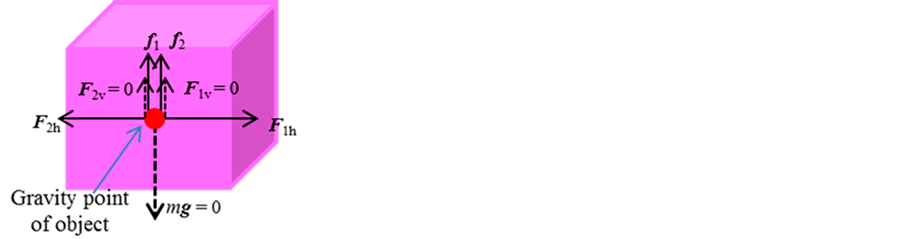

where Fi is the force that user i exerts on the object, fi is the frictional force between the object and the cursor of user i, m is the object’s mass, and g is the gravitational acceleration in the virtual space.
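As a minimal sketch, the resultant force can be computed as a plain vector sum of the quantities just defined. Treating Equation (1) as this vector sum is an assumption based on the sentence that introduces it, and the function name and sample values are hypothetical.

```python
def resultant_force(user_forces, friction_forces, mass_kg, gravity):
    """Vector sum of the users' forces, the frictional forces, and the weight.

    user_forces     -- list of 3-D force tuples F_i (N), one per user
    friction_forces -- list of 3-D friction tuples f_i (N), one per user
    mass_kg         -- object mass m (kg)
    gravity         -- gravitational acceleration vector g (m/s^2)
    """
    total = [mass_kg * g for g in gravity]          # weight m * g
    for force in list(user_forces) + list(friction_forces):
        total = [t + c for t, c in zip(total, force)]
    return tuple(total)


# Two users squeeze the object with equal and opposite forces while
# friction on both faces balances the weight (0.5 kg, 2.0 m/s^2 downward).
print(resultant_force(
    user_forces=[(1.0, 0.0, 0.0), (-1.0, 0.0, 0.0)],
    friction_forces=[(0.0, 0.5, 0.0), (0.0, 0.5, 0.0)],
    mass_kg=0.5,
    gravity=(0.0, -2.0, 0.0),
))
```

In this balanced example every component of the resultant is zero, so the object would stay where it is.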

When the two users lift the object by putting it between the two cursors, we set the gravity of the object and the vertical component of the force exerted by user i (Fiv) to 0 so that the users can lift and move the object easily (see Figure 7). This means that only the horizontal components of the forces (F1h, F2h) exerted by the two users and the frictional force are applied to the object at this time. The object is moved in the same direction as the resultant force. Either the static frictional force or the kinetic frictional force is applied to the object. The static frictional force is calculated by using the mass of the cursor (45 g) and the acceleration of the cursor. When the static frictional force is larger than the maximum static frictional force, which is calculated by multiplying the horizontal component of the force exerted by user i by the coefficient of static friction (0.6), the kinetic frictional force works instead. The kinetic frictional force is calculated by multiplying the horizontal component of the force exerted by user i by the coefficient of kinetic friction (0.4).
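The switch between static and kinetic friction described above can be sketched as a small function. It follows the rule in the text literally (cursor mass times cursor acceleration compared with the maximum static friction); working with magnitudes only and the function name are simplifying assumptions.

```python
CURSOR_MASS_KG = 0.045   # mass of the cursor from the text (45 g)
MU_STATIC = 0.6          # coefficient of static friction
MU_KINETIC = 0.4         # coefficient of kinetic friction


def friction_magnitude(cursor_accel_m_s2, horizontal_force_n):
    """Return (friction magnitude in N, regime) for one cursor.

    The static frictional force is cursor mass times cursor acceleration;
    if it exceeds mu_s times the horizontal force, kinetic friction
    (mu_k times the horizontal force) applies instead.
    """
    static_force = CURSOR_MASS_KG * abs(cursor_accel_m_s2)
    static_limit = MU_STATIC * abs(horizontal_force_n)
    if static_force > static_limit:
        return MU_KINETIC * abs(horizontal_force_n), "kinetic"
    return static_force, "static"


print(friction_magnitude(10.0, 1.0))  # below the 0.6 N limit: static
print(friction_magnitude(20.0, 1.0))  # above the limit: kinetic
```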

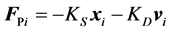

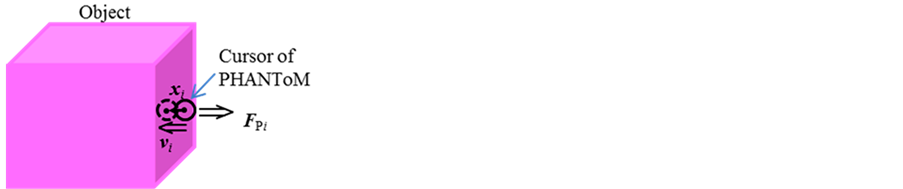

The reaction force applied to user i through PHANToM is calculated by using spring-damper model as follows:

(2)

where KS (0.8 N/mm) and KD (0.2 N∙ms/mm) are the spring coefficient and damper coefficient, respectively, xi is the vector of the depth to which the cursor of user i penetrates into the object, and vi is the velocity of the cursor relative to the object (see Figure 8).
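A minimal sketch of a spring-damper reaction force with the stated coefficients is given below. The exact form and sign convention of Equation (2) are not recoverable from the text, so this code assumes the common form in which the spring term and the damper term are added, with both vectors expressed so that a positive result pushes the cursor back out of the object. The function name is hypothetical.

```python
K_S = 0.8   # spring coefficient, N/mm
K_D = 0.2   # damper coefficient, N*ms/mm


def reaction_force(penetration_mm, rel_velocity_mm_per_ms):
    """Spring-damper force (N) per axis: K_S * x_i + K_D * v_i.

    penetration_mm         -- penetration depth vector x_i (mm)
    rel_velocity_mm_per_ms -- cursor velocity relative to the object v_i (mm/ms)
    Sign convention is an assumption, see the lead-in.
    """
    return tuple(
        K_S * x + K_D * v
        for x, v in zip(penetration_mm, rel_velocity_mm_per_ms)
    )


# 1 mm of penetration while still moving inward at 0.5 mm/ms.
print(reaction_force((1.0, 0.0, 0.0), (0.5, 0.0, 0.0)))
```

With these units, 1 mm of penetration contributes 0.8 N and a relative velocity of 0.5 mm/ms contributes 0.1 N.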

Figure 7. Force applied to object when users cooperatively lift and move object.

Figure 8. Force applied to user.

5. Assessment Methods

In the assessments, the two subjects of each pair are in separate rooms. The pair of subjects is asked to do the collaborative work described in Subsection 2.1 while transmitting their wills to each other. If one of the pair can know the other’s will, he/she tries to follow the other’s will. Otherwise, he/she moves the object based on his/her own will. In order to clarify whether the wills are transmitted accurately or not, after passing each intersection at which the subjects need to transmit their wills, each subject is asked to stop moving†2 and tell whether he/she can know the other’s will, the will if he/she can know it, and his/her own will. Each subject is asked to determine the movement direction as randomly as possible at each intersection at which the subject needs to transmit his/her will in order to avoid moving the object along the same type of route (for example, the routes which have the fewest intersections at which subjects need to transmit their wills) every time. We carry out QoE assessments subjectively and objectively to assess the influences on will transmission by changing the constant delay.

For subjective assessment, we enhance the single-stimulus method of ITU-R BT.500-12 [19]. Before the assessment, each pair of subjects is asked to practice under the condition that there is no constant delay. In the practice, in order to prevent the subjects from establishing a way of will transmission before the assessment, after the target is eliminated, it appears at a randomly-selected adjacent intersection. In this case, the object’s movement direction is already determined, and the subjects do not need to transmit their wills. After the practice, they are asked to do the collaborative work in case 3 once under the condition that there is no additional delay. The quality at this time is the standard in the assessment. Then, under the condition that there exist constant delays†3, the subjects are asked to give a score from 1 through 5 (see Table 1) according to the degree of deterioration for the operability of PHANToM (whether it is easy to operate the PHANToM stylus), the transmissibility of wills (whether their own wills can be transmitted to each other), and the comprehensive quality (a synthesis of the operability and transmissibility). From these scores, we obtain the mean opinion score (MOS) [15] in cases 1 through 3.
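For readers who want to reproduce the aggregation step, the sketch below computes a MOS and a 95% confidence interval from a list of 1-to-5 scores. The use of Student's t distribution and the critical value for 20 scores (19 degrees of freedom) are assumptions, since the text does not say how the intervals were obtained, and the sample scores are made up for illustration.

```python
from math import sqrt
from statistics import mean, stdev

# Two-sided 95% critical value of Student's t for 19 degrees of freedom
# (valid only for 20 scores; an assumption about the interval type).
T_CRIT_95_DF19 = 2.093


def mos_with_ci(scores, t_crit=T_CRIT_95_DF19):
    """Return (MOS, (lower, upper)) for a list of opinion scores."""
    m = mean(scores)
    half_width = t_crit * stdev(scores) / sqrt(len(scores))
    return m, (m - half_width, m + half_width)


# Hypothetical scores from 20 subjects for one delay and one case.
example_scores = [4] * 10 + [3] * 10
print(mos_with_ci(example_scores))
```

Comparing the lower bound of such an interval with a threshold such as 3.5 gives a more cautious reading than comparing the MOS alone.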

Objective assessment is carried out at the same time as the subjective assessment. As performance measures, we employ the average number of eliminated targets [13], the average number of dropping times, the average number of passed intersections at which the subjects needed to transmit their wills, the average number of intersections at which one thought that he/she knew the other’s will, the average number of intersections at which one could know the other’s will accurately, and the percentage of questions answered correctly (the percentage of the average number of intersections at which one could know the other’s will accurately to the average number of passed intersections at which the subjects needed to transmit their wills).
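The last measure is a simple ratio, sketched below. The function name and the sample numbers are hypothetical.

```python
def percent_correct(avg_known_accurately, avg_passed):
    """Percentage of questions answered correctly.

    avg_known_accurately -- average number of intersections at which one
                            could know the other's will accurately
    avg_passed           -- average number of passed intersections at which
                            the subjects needed to transmit their wills
    """
    if avg_passed == 0:
        raise ValueError("no intersections requiring will transmission were passed")
    return 100.0 * avg_known_accurately / avg_passed


print(percent_correct(3.0, 4.0))  # 75.0
```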

In order to investigate the influence of constant delay, the delay is changed from 0 ms to 25 ms at intervals of 5 ms. We select the constant delay and the cases in random order for each pair of subjects. In the assessments, each test is done for 80 seconds, and it takes about 50 minutes for each pair of subjects to finish all the tests. Twenty subjects, whose ages are between 21 and 30, took part in the assessments.
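The test conditions can be laid out with a short script. It assumes that each pair performs every combination of delay and case exactly once, which the text suggests but does not state outright; the function name and the seed are illustrative.

```python
import random

DELAYS_MS = list(range(0, 30, 5))   # 0, 5, 10, 15, 20, 25 ms
CASES = (1, 2, 3)
TEST_SECONDS = 80


def make_schedule(seed=None):
    """Return all (delay, case) conditions in random order for one pair."""
    rng = random.Random(seed)
    conditions = [(delay, case) for delay in DELAYS_MS for case in CASES]
    rng.shuffle(conditions)
    return conditions


schedule = make_schedule(seed=0)
running_minutes = len(schedule) * TEST_SECONDS / 60
print(len(schedule), running_minutes)
```

Under this assumption there are 18 tests and 24 minutes of pure task time per pair, which fits within the reported 50 minutes once the stops for answering questions and giving scores are included.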

6. Assessment Results

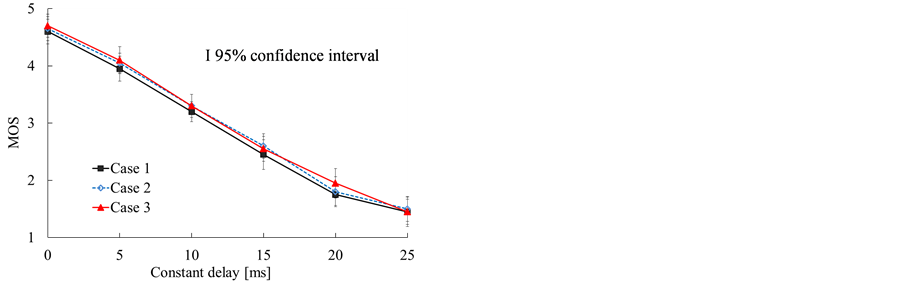

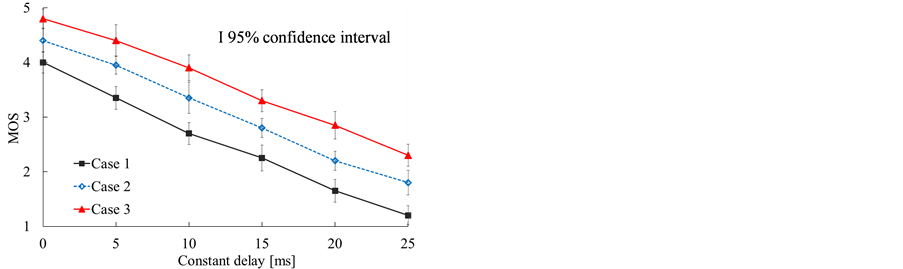

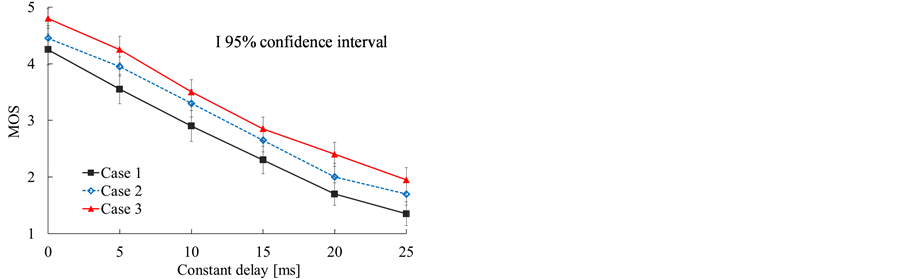

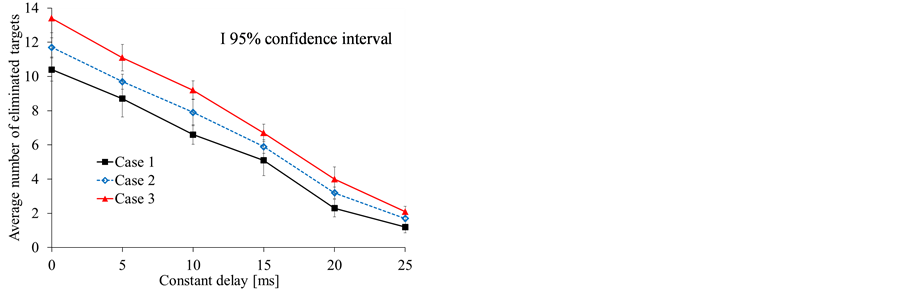

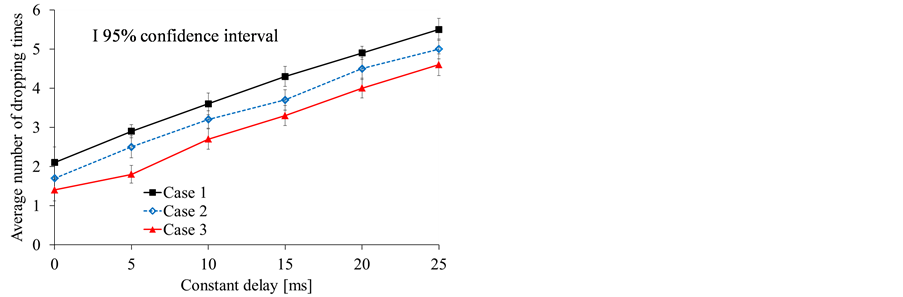

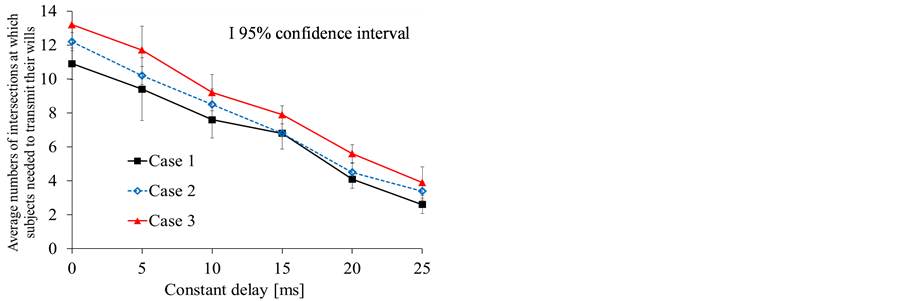

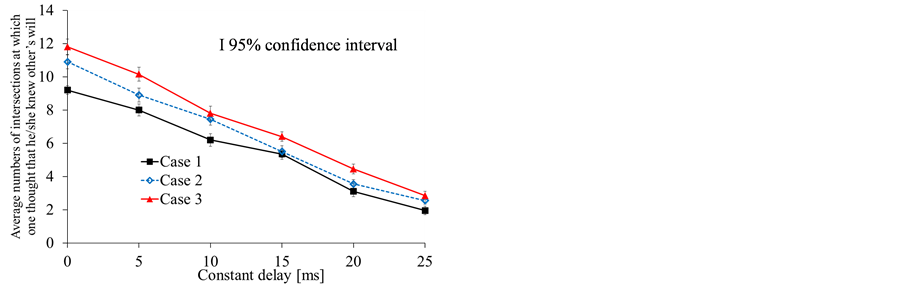

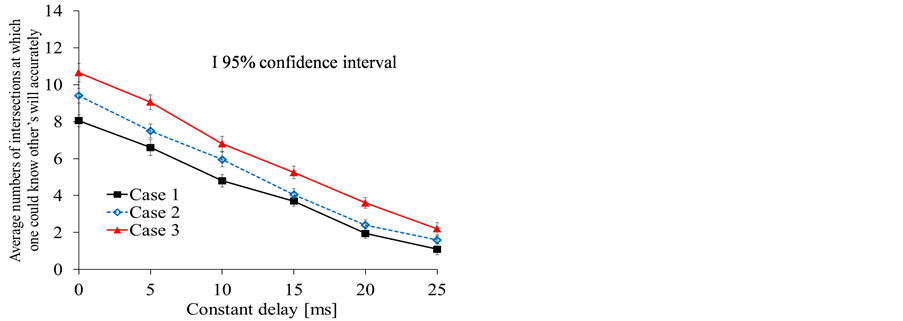

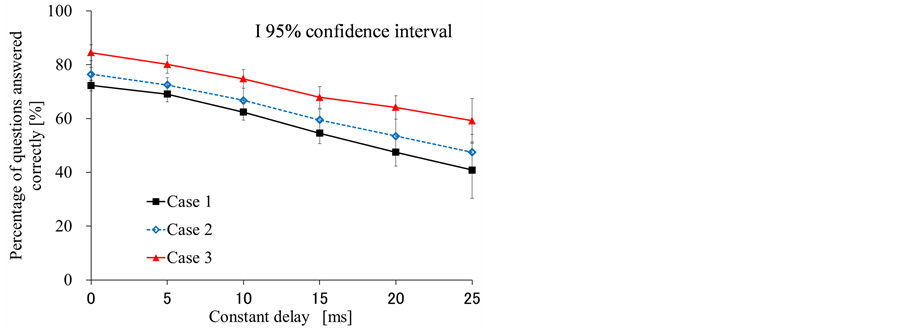

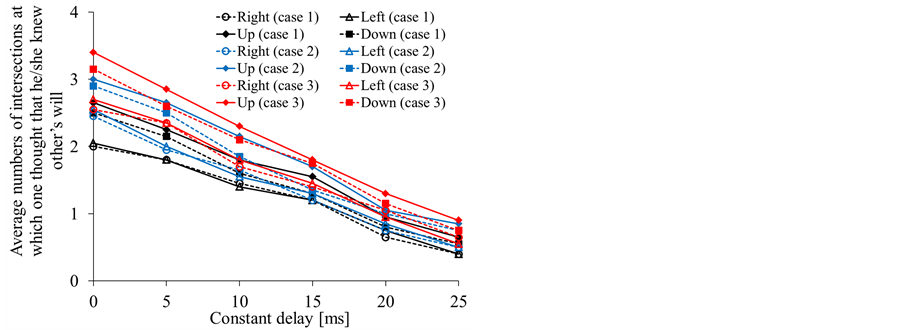

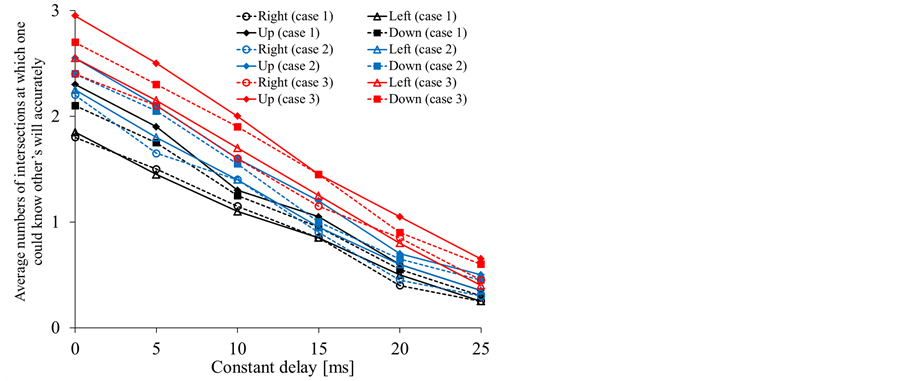

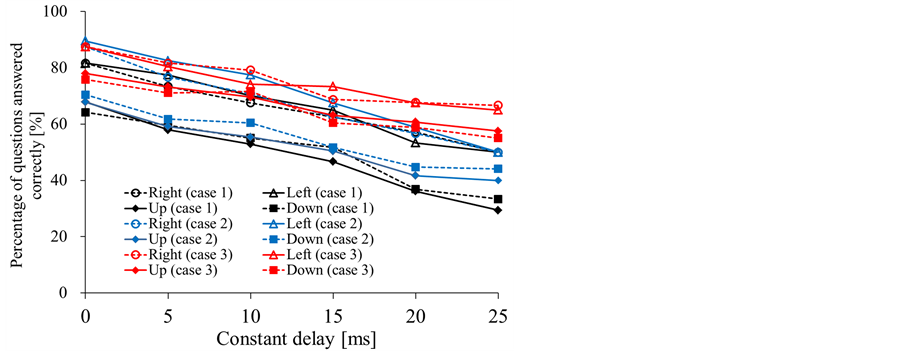

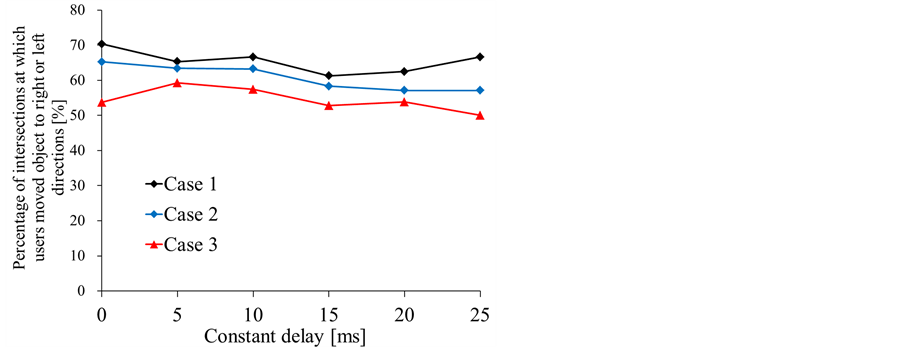

We show MOS values of the operability of PHANToM, transmissibility of wills, and comprehensive quality versus the constant delay in Figures 9-11, respectively. The average number of eliminated targets is shown in Figure 12, and Figure 13 shows the average number of dropping times. The average number of passed intersections at which the subjects needed to transmit their wills, the average number of intersections at which one thought that he/she knew the other’s will, and the average number of intersections at which one could know the other’s will accurately are shown in Figures 14-16, respectively. The percentage of questions answered correctly is shown in Figure 17. In the figures, we also plot the 95% confidence intervals.

From Figures 9-11, we see that the MOS values of the operability, transmissibility of wills, and comprehensive quality deteriorate as the constant delay increases. The tendency is almost the same as those of the results in [8] , [9] , and [13] , where the operability of haptic interface devices becomes worse as the network delay increases. Furthermore, we find in the figures that when the constant delay is smaller than about 10 ms, the MOS values of the operability of PHANToM are larger than 3.5 in the three cases; this means that although subjects can perceive the deterioration, they do not think that it is annoying, and the deterioration in QoE is allowable [22] . We also assume that it is allowable when the MOS value is larger than 3.5 in this paper. From the figures, we notice that the MOS values of the transmissibility of wills are larger than 3.5 when the constant delay is smaller than about 5 ms, 10 ms, and 15 ms in cases 1, 2, and 3, respectively. For the comprehensive quality, the MOS values are larger than 3.5 when the constant delay is smaller than about 5 ms in case 1 and smaller than about 10 ms in cases 2 and 3.

From Figure 9, we also find that the MOS values of the operability of PHANToM are almost the same in all the cases; that is, they do not depend on the cases. From Figure 10, we see that the MOS values in case 2 are larger than those in case 1, and the MOS values in case 3 are the largest. In order to confirm whether there are statistically significant differences among the results of the three cases, we carried out two-way ANOVA for the results shown in Figure 10 (factor A: network delay, factor B: cases). As a result, we found that the P-value of factor A is 1.50 × 10⁻¹⁰⁸, and that of factor B is 8.35 × 10⁻⁴⁴. That is, there are statistically significant differences among the network delays and among the three cases. This means that vision (the movement of the other’s cursor) affects haptic will transmission, and the transmissibility of wills is further improved by displaying the direction and magnitude of the other’s force. Therefore, we can say that vision affects haptic will transmission and improves the transmissibility of wills.
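For reference, the F ratios of a balanced two-way ANOVA with replication can be computed as in the sketch below. It is a textbook formulation written in plain Python, not the authors' analysis script; the interaction term is omitted from the output for brevity, and the tiny data set is synthetic and unrelated to the measured scores.

```python
from statistics import mean


def two_way_anova(cells):
    """Balanced two-way ANOVA with replication.

    cells[(a, b)] is the list of replicate scores for level a of factor A
    and level b of factor B. Returns the F ratios of the two main effects
    and the degrees of freedom (df_A, df_B, df_error).
    """
    a_levels = sorted({a for a, _ in cells})
    b_levels = sorted({b for _, b in cells})
    n = len(next(iter(cells.values())))          # replicates per cell
    grand = mean(x for v in cells.values() for x in v)
    a_mean = {a: mean(x for b in b_levels for x in cells[(a, b)]) for a in a_levels}
    b_mean = {b: mean(x for a in a_levels for x in cells[(a, b)]) for b in b_levels}
    ss_a = n * len(b_levels) * sum((a_mean[a] - grand) ** 2 for a in a_levels)
    ss_b = n * len(a_levels) * sum((b_mean[b] - grand) ** 2 for b in b_levels)
    # Error sum of squares: spread of replicates around their own cell mean.
    ss_e = sum((x - mean(v)) ** 2 for v in cells.values() for x in v)
    df_a, df_b = len(a_levels) - 1, len(b_levels) - 1
    df_e = len(a_levels) * len(b_levels) * (n - 1)
    ms_e = ss_e / df_e
    return {
        "F_A": (ss_a / df_a) / ms_e,
        "F_B": (ss_b / df_b) / ms_e,
        "df": (df_a, df_b, df_e),
    }


# Synthetic scores: factor A = delay (0 or 25 ms), factor B = case (1 or 3).
synthetic = {
    (0, 1): [4, 5], (0, 3): [5, 5],
    (25, 1): [1, 2], (25, 3): [3, 4],
}
print(two_way_anova(synthetic))
```

A P-value follows from each F ratio and its degrees of freedom through the upper tail of the F distribution, for instance with SciPy's `scipy.stats.f.sf` if that library is available.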

From Figure 12, we notice that as the constant delay increases, the average numbers of eliminated targets decrease. We also see that the average number in case 2 is larger than that in case 1, and the average number in case 3 is the largest. The tendency of the average number of eliminated targets is almost the same as that of the transmissibility of wills. From these results, we can also say that vision affects haptic will transmission, and the efficiency of the collaborative work is improved.

In Figure 13, we observe that the average number of dropping times in case 2 is less than that in case 1, and that in case 3 is the least. From this, we can also say that vision affects haptic will transmission and the efficiency of the collaborative work is improved, as in Figure 12.

Figure 9. MOS of operability of PHANToM.

Figure 10. MOS of transmissibility of wills.

Figure 11. MOS of comprehensive quality.

Figure 12. Average number of eliminated targets.

Figure 13. Average number of dropping times.

Figure 14. Average numbers of intersections at which subjects needed to transmit their wills.

Figure 15. Average numbers of intersections at which one thought that he/she knew other’s will.

From Figures 14-16, we find that the average number of passed intersections at which the subjects needed to transmit their wills, the average number of intersections at which one thought that he/she knew the other’s will, and the average number of intersections at which one could know the other’s will accurately in case 2 are larger than those in case 1, and those in case 3 are the largest. Furthermore, from Figure 17, we see that the percentage of questions answered correctly in case 2 is larger than that in case 1, and that in case 3 is the largest. From these results, we can also say that vision affects haptic will transmission and the accuracy of the collaborative work is improved.

Figure 16. Average numbers of intersections at which one could know other’s will accurately.

Figure 17. Percentage of questions answered correctly.

We also carried out two-way ANOVA for the results shown in Figures 10-17 to confirm whether there are statistically significant differences among the results in the three cases. As a result, we found that the P-values are smaller than 0.05. This means that there are statistically significant differences among the three cases.

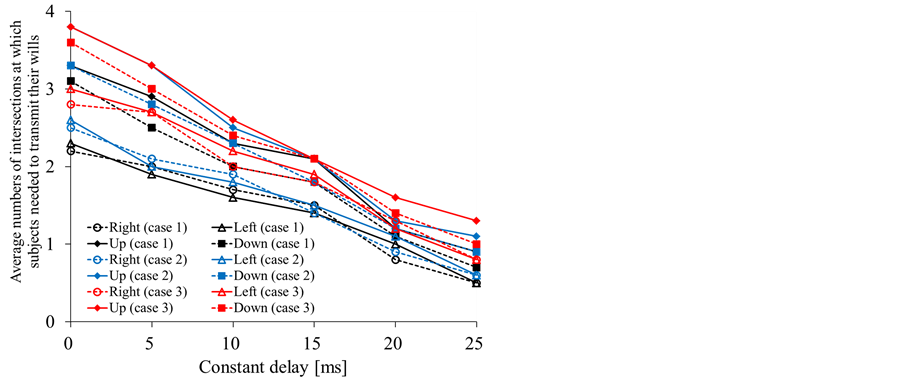

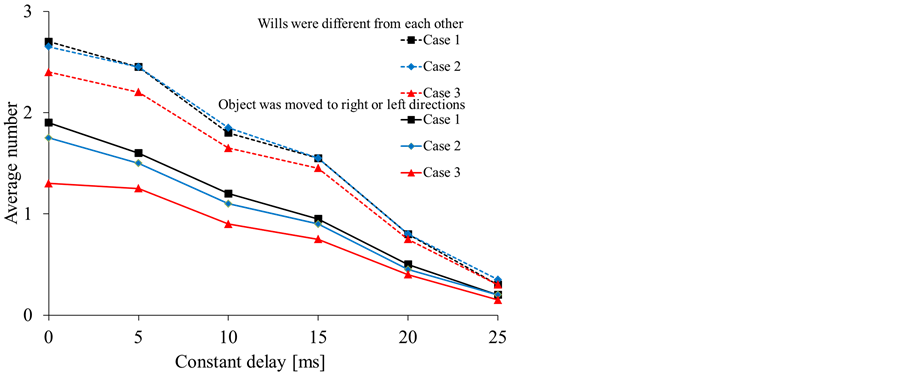

From the above results, we can say that vision affects haptic will transmission and improves the accuracy of will transmission. However, there are four movement directions (right, left, up, or down), and in the assessment the subjects need to choose a direction from “up or right,” “up or left,” “down or right,” or “down or left” at each intersection where they need to transmit their wills. Since the transmissibility of wills may differ depending on the direction, we divide the average number of passed intersections at which the subjects needed to transmit their wills, the average number of intersections at which one thought that he/she knew the other’s will, and the average number of intersections at which one could know the other’s will accurately on the basis of the directions (right or left, and up or down) and show the results in Figures 18-21. We also show the average number of intersections at which the two subjects’ wills were different from each other and that at which the subjects moved the object to the right or left in Figure 22. We further show the percentage of intersections at which the subjects moved the object to the right or left when the two subjects’ wills were different from each other in Figure 23.

From Figure 18, we see that the average number of intersections at which the subjects move the object upward or downward is larger than that of intersections at which the subjects move the object to the right or left. This is because it is more difficult to perceive the perpendicular force than the horizontal force in the three cases. In Figure 21, we find that the percentage of questions answered correctly is larger when the movement direction is right or left than when it is upward or downward. From Figures 19-21, we notice that the average number of intersections at which one thought that he/she knew the other’s will, the average number of intersections at which one could know the other’s will accurately, and the percentage of questions answered correctly in case 3 are the largest, and those in case 1 are the smallest.

Figure 18. Average numbers of intersections at which subjects needed to transmit their wills (right or left and up or down).

Figure 19. Average numbers of intersections at which one thought that he/she knew other’s will (right or left and up or down).

Figure 20. Average numbers of intersections at which one could know other’s will accurately (right or left and up or down).

Figure 21. Percentage of questions answered correctly (right or left and up or down).

Figure 22. Average number of intersections at which two users’ wills were different from each other and that at which users moved object to right or left directions.

Figure 23. Percentage of intersections at which users moved object to right or left directions when two users’ wills were different from each other.

Furthermore, we find in Figure 22 that when the two subjects’ wills were different from each other, the average number of intersections at which the movement direction is right or left is larger than that of intersections at which the movement direction is upward or downward. From Figure 23, we notice that the percentage of intersections at which the subjects moved the object to the right or left when the two subjects’ wills were different from each other is about 50% to 60% in case 3, which is the smallest among the three cases.

7. Conclusions

In this paper, we dealt with collaborative work in which two users stand on an equal footing and the role of each user, the master-slave relationship, and/or the movement direction of the object (i.e., the user’s will) are not determined in advance, and we investigated the effects of will transmission by using vision and haptics. In the experiment, we dealt with three cases of will transmission. In case 1, wills were transmitted by haptics only. In case 2, wills were transmitted by haptics and vision (without an arrow drawn to indicate the direction of the force applied to the object by the other user), and in case 3, wills were transmitted by haptics and vision (with the arrow drawn). As a result, we found that as the network delay increases, it becomes more difficult to transmit the wills accurately, and when the network delay is smaller than about 5 ms in case 1, about 10 ms in case 2, and about 15 ms in case 3, the MOS values of the transmissibility of wills are larger than 3.5 and the deterioration in QoE is allowable. We also noticed that vision affects haptic will transmission and the accuracy of the collaborative work is improved. Furthermore, we saw that when the will (the direction in which each user wants to move the object) is right or left, will transmission is more accurate than when the will is up or down.

As the next step of our research, we plan to clarify the process of consensus formation using haptics and study how to improve the transmissibility of wills. Also, we need to improve the transmissibility of the perpendicular force and investigate the influences of network delay jitter and packet loss on will transmission using haptics. We will further investigate the effects of will transmission using haptics in other directions.

Acknowledgements

The authors thank Prof. Hitoshi Watanabe and Prof. Norishige Fukushima for their valuable comments. They also thank Ms. Qi Zeng who was a student of Nagoya Institute of Technology for her help in QoE assessment.

References

[1] Srinivasan, M.A. and Basdogan, C. (1997) Haptics in Virtual Environments: Taxonomy, Research Status, and Challenges. Computers and Graphics, 21, 393-404. http://dx.doi.org/10.1016/S0097-8493(97)00030-7

[2] Kerwin, T., Shen, H. and Stredney, D. (2009) Enhancing Realism of Wet Surfaces in Temporal Bone Surgical Simulation. IEEE Transactions on Visualization and Computer Graphics, 15, 747-758. http://dx.doi.org/10.1109/TVCG.2009.31

[3] Steinbach, E., Hirche, S., Ernst, M., Brandi, F., Chaudhari, R., Kammerl, J. and Vittorias, I. (2012) Haptic Communications. IEEE Journals & Magazines, 100, 937-956.

[4] Huang, P. and Ishibashi, Y. (2013) QoS Control and QoE Assessment in Multi-Sensory Communications with Haptics. The IEICE Transactions on Communications, E96-B, 392-403.

[5] Hayashi, D., Ohnishi, H. and Nakamura, N. (2006) Understand the Effect of Visual Information and Delay on a Haptic Display. IEICE Technical Report, 7-10.

[6] Kamata, K., Inaba, G. and Fujita, K. (2010) Virtual Manipulation Assistance by Collision Enhancement Using Multi-Finger Force-Feedback Device. Transactions on VRSJ, 15, 653-661.

[7] Yap, K. and Marshal, A. (2010) Investigating Quality of Service Issues for Distributed Haptic Virtual Environments in IP Networks. Proceedings of the IEEE ICIE, 237-242.

[8] Watanabe, T., Ishibashi, Y. and Sugawara, S. (2010) A Comparison of Haptic Transmission Methods and Influences of Network Latency in a Remote Haptic Control System. Transactions on VRSJ, 15, 221-229.

[9] Huang, P., Ishibashi, Y., Fukushima, N. and Sugawara, S. (2012) QoE Assessment of Group Synchronization Control with Prediction in Work Using Haptic Media. IJCNS, 5, 321-331. http://dx.doi.org/10.4236/ijcns.2012.56042

[10] Hashimoto, T. and Ishibashi, Y. (2008) Effects of Inter-Destination Synchronization Control for Haptic Media in a Networked Real-Time Game with Collaborative Work. Transactions on VRSJ, 13, 3-13.

[11] Hikichi, K., Yasuda, Y., Fukuda, A. and Sezaki, K. (2006) The Effect of Network Delay on Remote Calligraphic Teaching with Haptic Interfaces. Proceedings of 5th ACM SIGCOMM Workshop on Network and System Support for Games, Singapore City, 30-31 October 2006.

[12] Nishino, H., Yamabiraki, S., Kwon, Y., Okada, Y. and Utsumiya, K. (2007) A Remote Instruction System Empowered by Tightly Shared Haptic Sensation. Proceedings of SPIE Optics East, Multimedia Systems and Applications X, 6777.

[13] Kameyama, S. and Ishibashi, Y. (2006) Influence of Network Latency of Voice and Haptic Media on Efficiency of Collaborative Work. Proceedings of International Workshop on Future Mobile and Ubiquitous Information Technologies, Nara, 9-12 May 2006, 173-176.

[14] ITU-T Rec. P.10/G.100 Amendment 1 (2007) New Appendix I: Definition of Quality of Experience (QoE).

[15] Brooks, P. and Hestnes, B. (2010) User Measures of Quality of Experience: Why Being Objective and Quantitative Is Important. IEEE Network, 24, 8-13. http://dx.doi.org/10.1109/MNET.2010.5430138

[16] SensAble Technologies, Inc. (2004) 3D Touch SDK OpenHaptics Toolkit Programmer’s Guide. Version 1.0.

[17] Huang, P., Zeng, Q. and Ishibashi, Y. (2013) QoE Assessment of Will Transmission Using Haptics: Influence of Network Delay. Proceedings of IEEE 2nd Global Conference on Consumer Electronics, Tokyo, 1-4 October 2013, 456-460.

[18] Carson, M. and Santay, D. (2003) NIST Net: A Linux-Based Network Emulation Tool. ACM SIGCOMM Computer Communication Review, 33, 111-126. http://dx.doi.org/10.1145/956993.957007

[19] ITU-R Rec. BT.500-12 (2009) Methodology for the Subjective Assessment of the Quality of Television Pictures.

[20] Huang, P., Zeng, Q., Ishibashi, Y. and Fukushima, N. (2013) Efficiency Improvement of Will Transmission Using Haptics in Networked Virtual Environment. 2013 Tokai-Section Joint Conference on Electrical and Related Engineering, 4-7.

[21] Yu, H., Huang, P., Ishibashi, Y. and Fukushima, N. (2013) Influence of Packet Loss on Will Transmission Using Haptics. 2013 Tokai-Section Joint Conference on Electrical and Related Engineering, 4-8.

[22] ITU-R BT.1359-1 (1998) Relative Timing of Sound and Vision for Broadcasting.

NOTES

†1 We selected these parameter values by carrying out a preliminary experiment in which the network delay was negligibly small, so that it is easy to do the collaborative work with the selected values. The other parameter values in this section were selected in the same way.

†2 The authors also dealt with the case in which each pair of subjects does not stop after passing each intersection at which the subjects need to transmit their wills. As a result, we found that the results are almost the same as those in this study.

†3 The authors also investigated the influence of packet loss on will transmission [21]. As a result, we saw that it becomes more difficult to transmit the wills accurately as the packet loss rate increases.