Advances in Linear Algebra & Matrix Theory

Vol.06 No.04(2016), Article ID:72331,12 pages

10.4236/alamt.2016.64011

A QMR-Type Algorithm for Drazin-Inverse Solution of Singular Nonsymmetric Linear Systems

Alireza Ataei

Department of Mathematics, Faculty of Science, Persian Gulf University, Bushehr, Iran

Copyright © 2016 by author and Scientific Research Publishing Inc.

This work is licensed under the Creative Commons Attribution International License (CC BY 4.0).

http://creativecommons.org/licenses/by/4.0/

Received: October 8, 2016; Accepted: November 26, 2016; Published: November 29, 2016

ABSTRACT

In this paper, we propose DQMR algorithm for the Drazin-inverse solution of consistent or inconsistent linear systems of the form  where

where  is a singular and in general non-hermitian matrix that has an arbitrary index. DQMR algorithm for singular systems is analogous to QMR algorithm for non-singular systems. We compare this algorithm with DGMRES by numerical experiments.

is a singular and in general non-hermitian matrix that has an arbitrary index. DQMR algorithm for singular systems is analogous to QMR algorithm for non-singular systems. We compare this algorithm with DGMRES by numerical experiments.

Keywords:

Singular Linear Systems, DGMRES Method, Quasi-Minimal Residual Methods, Drazin-Inverse Solution, Krylov Subspace Methods

1. Introduction

Consider the linear system

(1)

(1)

where  is a singular matrix and

is a singular matrix and  is arbitrary. Here

is arbitrary. Here , the index of A is the size of the largest Jordan block corresponding to the zero eigenvalue of A. We recall that the Drazin-inverse solution of (1) is the vector

, the index of A is the size of the largest Jordan block corresponding to the zero eigenvalue of A. We recall that the Drazin-inverse solution of (1) is the vector , where

, where  is the Drazin-inverse of the singular matrix A. For the Drazin-inverse and its properties, we can refer to [1] or [2] . In the important special case

is the Drazin-inverse of the singular matrix A. For the Drazin-inverse and its properties, we can refer to [1] or [2] . In the important special case , this matrix is called the group inverse of A and denoted by

, this matrix is called the group inverse of A and denoted by . The Drazin-inverse has various applications in the theory of finite Markov chains [2] , the study of singular differential and difference equations [2] , the investigation of Cesaro-Neumann iterations [3] , cryptography [4] , iterative methods in numerical analysis, [5] [6] , multibody system dynamics [7] and so forth. The problem of finding the solution of the form

. The Drazin-inverse has various applications in the theory of finite Markov chains [2] , the study of singular differential and difference equations [2] , the investigation of Cesaro-Neumann iterations [3] , cryptography [4] , iterative methods in numerical analysis, [5] [6] , multibody system dynamics [7] and so forth. The problem of finding the solution of the form  for (1) is very common in the literature and many different techniques have been developed in order to solve it.

for (1) is very common in the literature and many different techniques have been developed in order to solve it.

In [6] [8] [9] [10] [11] , authors presented some Krylov subspace methods [9] to solve singular linear system with some restriction. However, the treatment of singular linear inconsistent system by Krylov subspace has been proved extremely hard. In [12] , Sidi had not put any restrictions on the matrix A and the system (1). In his paper, the spectrum of A can have any shape and no restrictions are put on the linear system (1). The only assumption is that  is known. Although the

is known. Although the  of A is overestimated, the method is valid.

of A is overestimated, the method is valid.

In [12] , Sidi proposed a general approach to Krylov subspace methods to compute Drazin-inverse solution. In addition, he presented several Krylov subspace methods of Arnoldi, DGCR and Lancoze types. Furthermore, in [13] [14] , Sidi has continued to drive two Krylov subspace methods to compute . One is DGMRES method, which is the implementation of the DGCR method for singular systems which is analogues to GMRES for non-singular systems. Other is DBI-CG method which is Lanczos type algorithm [13] . DGMRES, like, GMRES method, is a stable numerically and economical computationally, which is a storage wise method. DBI-CG method, also like BI-CG for non-singular systems, is a fast algorithm, but when we need a high accuracy, the algo- rithm is invalid. DFOM algorithm is another implementation of the projection method for singular linear systems is analogues to Arnoldi for non-singular systems. DFOM algorithm may be less accurate but faster than DGMRES, and more precise and slower than DBI-CG [15] .

. One is DGMRES method, which is the implementation of the DGCR method for singular systems which is analogues to GMRES for non-singular systems. Other is DBI-CG method which is Lanczos type algorithm [13] . DGMRES, like, GMRES method, is a stable numerically and economical computationally, which is a storage wise method. DBI-CG method, also like BI-CG for non-singular systems, is a fast algorithm, but when we need a high accuracy, the algo- rithm is invalid. DFOM algorithm is another implementation of the projection method for singular linear systems is analogues to Arnoldi for non-singular systems. DFOM algorithm may be less accurate but faster than DGMRES, and more precise and slower than DBI-CG [15] .

In the present paper, the Drazin-Quasi-minimal residual algorithm (DQMR here- after) is another implementation of the projection method for singular linear systems is analogues to Lanczos algorithm for non-singular systems. DGMRES algorithm, in prac- tice, cannot afford to run the full algorithm and it is necessary to use restart. For dif- ficult problems, in most cases, this results in extremely slow convergence, While DQMR algorithm can be implemented using only short recurrences and hence it can be com- puted with little work and low storage requirements per iteration.

The outline of this paper is as follows. In Section 2, we will provide a brief of sum- mary of the review of the theorem and projection method in [12] which is relevant to us. We shall discuss the projection methods approach to solve (1) in general and DQMR particular. In Section 3, we will drive the DQMR method. We design DQMR when we set  throughout, DQMR reduces to QMR. In this sense, DQMR is an extension of QMR that archives the Drazin-inverse solution of singular systems. In Sec- tion 4, by numerical examples, we show that the computation time and iteration number of DQMR algorithm is substantially less than that of DGMRES algorithm. Section 5 is devoted to concluding remarks.

throughout, DQMR reduces to QMR. In this sense, DQMR is an extension of QMR that archives the Drazin-inverse solution of singular systems. In Sec- tion 4, by numerical examples, we show that the computation time and iteration number of DQMR algorithm is substantially less than that of DGMRES algorithm. Section 5 is devoted to concluding remarks.

2. Some Basic Theorem and Projection Methods for ADb

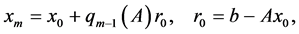

The method we are interested in starts with an arbitrary initial vector  and generate sequences of vector

and generate sequences of vector  according to

according to

(2)

(2)

where

Let us define

We call

Note that

The condition (6) is due to Eiermann et al. [5] .

For convenience we denote by

Thus, the polynomial

The projection methods of [12] are now defined by demanding that the vector

where

We see that unique solution for c exists provided det

As we choose different W, we have a different algorithm: for DGMRES, we choose

In this paper, for DQMR, we choose

We will mention several definitions and theorems, which have projection method converge below.

We will denote by

Definition 1 [12] . Let A be singular and

The following theorems will ensure the success of projection method.

Theorem 1 [12] .

The following result that is the justification of the above-mentioned projection approach is Theorem 4.2 in [12] .

Theorem 2 Let

Obviously, of Theorem 2 if

Theorem 3 [14] . The vector

3. DQMR Algorithm

In this section, we will introduce a different implementation of projection method. The algorithm is analogous to QMR algorithm. We must note that in spite of the analogy, DQMR seems to be quite different from QMR, which is for non-singular systems.

As

algorithm [16] generates two sequences of vectors

where they are clear that

respectively.

If we define that

then, we can write for

Therefore, it is obvious that we need to determine

where

Moreover, provided that

Note that

Consequently, provided

and since

If the column vectors of

as in GMRES. Therefore, a least, squares solution could be obtained from the krylove space

over

Similar to [14] ,

Let us define

where

Since

where, certainly,

Supposed that

From [14] , we have:

Equation (16) can be simplified as follows:

By using the Hadamard product Equation (18) is reduced. For this purpose, we first introduce the concepts of Hadamard matrix product.

Definition 2 Let A and B be

Let us denote

Now, we can be simplified (18) as follows

For solution system

We must reduce the band matrix,

Generally, if we define

where

where

Since if we assume

Consequently,

and

where

or

Thus,

This gives the following algorithm, which we call the Drazin-QMR for Drazin- inverse solution of singular nonsymmetric linear equations.

Algorithm 4.1 DQMR Algorithm

13. EndFor.

16. End Do.

4. Numerical Examples

In this section, we will compute the linear system

This linear system has also been computed by Sidi [14] for testing DGMRES algorithm. The problem has also been considered by Hank and Hochbruck [18] for testing the Chebyshev-type semi-iterative method. The numerical computations are performed in MATLAB (R213a) with double precision. The results were obtains by running the code on an Intel (R) Core (TM) i7-2600 CPU Processor running 3.40 GHz with 8 GB of RAM memory using Windows 7 professional 64-bit operating system. The initial vector

Let M be an odd integer, we discretize the Poisson equation on a uniform grid of mesh size

Here, I and 0 denote, respectively, the

The numerical experiment was performed for

It should be noted A is singular with 1D null space spanned by the vector

We first construct a consistent system with the known solution

Consequently the system

meter

In Table 1, Table 2, we give the number of iterations (Its), the CPU time (Time)

Table 1. Application of DQMR implementation to the consistent singular system.

Table 2. Application of DQMR implementation to the inconsistent singular system.

required for convergence, the relative error (RE), for the DGMRES and DQMR methods. As shown in Table 1, Table 2 the DQMR algorithm is effective and less expensive than the DGMRES algorithm.

5. Conclusion

In this paper, we presented a new method, called DQMR, for Drazin-inverse solution of singular nonsymmetric linear systems. The DQMR algorithm for singular systems is analogous to the QMR algorithm for non-singular systems. Numerical experiments indi- cate that the Drazin-inverse solution obtained by this method is reasonably accurate, and its computation time is less than that of solution obtained by the DGMRES method. Thus, we can conclude that the DQMR algorithm is a robust and efficient tool to com- pute the Drazin-inverse solution of singular linear systems.

Acknowledgements

First, we would like to thank professor F. Toutounian for her comments that improved our results. Second, we thank the editor and the referees for their carefully reading and useful comments.

Cite this paper

Ataei, A. (2016) A QMR-Type Algorithm for Drazin-Inverse So- lution of Singular Nonsymmetric Linear Sys- tems. Advances in Linear Algebra & Matrix Theory, 6, 104-115. http://dx.doi.org/10.4236/alamt.2016.64011

References

- 1. Ben-Israel, A. and Grevile, T.N.E. (2003) Generalized Inverses: Theory and Applications. 2nd Edition, Springer-Verlag, New York.

- 2. Campell, S.L. and Meyer, C.D. (1979) Generalized Inverses of Linear Transformations. Pitman (Advanced Publishing Program), Boston.

- 3. Hartwig, R.E. and Hall, F. (1982) Applications of the Drazin Inverse to Cesaro-Neumann Iterations. In: Campbell, S.L., Ed., Recent Applications of Generalized Inverses, 66, 145-195.

- 4. Hartwig, R.E. and Levine, J. (1981) Applications of the Drazin Inverse to the Hill Cryptographic system. Part III, Cryptologia, 5, 67-77.

https://doi.org/10.1080/0161-118191855850 - 5. Eiermann, M., Marek, I. and Niethammer, W. (1988) On the Solution of Singular Linear Systems of Algebric Equations by Semiiterative Methods. Numerische Mathematik, 53, 265-283.

https://doi.org/10.1007/BF01404464 - 6. Freund, R.W. and Hochbruck, M. (1994) On the Use of Two QMR Algorithms for Solving Singular Systems and Applications in Markov Chain Modeling. Numerical Linear Algebra with Applications, 1, 403-420.

https://doi.org/10.1002/nla.1680010406 - 7. Simeon, B., Fuhrer, C. and Rentrop, P. (1993) The Drazin Inverse in Multibody System Dynamics. Numerische Mathematik, 64, 521-539.

https://doi.org/10.1007/BF01388703 - 8. Brown, P.N. and Walker, H.F. (1997) GMRES on (Nearly) Singular Systems. SIAM Journal on Matrix Analysis and Applications, 18, 37-51.

https://doi.org/10.1137/S0895479894262339 - 9. Ipsen, I.F. and Meyer, C.D. (1998) The Idea behind Krylov Methods. The American Mathematical Monthly, 105, 889-899.

https://doi.org/10.2307/2589281 - 10. Smoch, L. (1999) Some Result about GMRES in the Singular Case. Numerical Algorithms, 22, 193-212.

https://doi.org/10.1023/A:1019162908926 - 11. Wei, Y. and Wu, H. (2000) Convergence Properties of Krylov Subspace Methods for Singular System with Arbitrary Index. Journal of Computational and Applied Mathematics, 114, 305-318.

https://doi.org/10.1016/S0377-0427(99)90237-6 - 12. Sidi, A. (1999) A Unified Approach to Krylov Subspace Methods for the Drazin-Inverse Solution of Singular Non-Symmetric Linear Systems. Linear Algebra and Its Applications, 298, 99-113.

https://doi.org/10.1016/S0024-3795(99)00153-6 - 13. Sidi, A. and Kluzner, V. (1999) A Bi-CG Type Iterative Method for Drazin Inverse Solution of Singular Inconsistent Non-Symmetric Linear Systems of Arbitrary Index. The Electronic Journal of Linear Algebra, 6, 72-94.

- 14. Sidi, A. (2001) DGMRES: A GMRES-Type Algorithm for Drazin-Inverse Solution of Singular Non-Symmetric Linear Systems. Linear Algebra and Its Applications, 335, 189-204.

https://doi.org/10.1016/S0024-3795(01)00289-0 - 15. Zhou, J. and Wei, Y. (2004) DFOM Algorithm and Error Analysis for Projection Methods for Solving Singular Linear System. Applied Mathematics and Computation, 157, 313-329.

https://doi.org/10.1016/j.amc.2003.08.036 - 16. Lanczos, C. (1950) An Iteration Method for the Solution of the Eigenvalue Problem of Linear Differential and Integral Operators. Journal of Research of the National Bureau of Standards, 45, 255-282.

https://doi.org/10.6028/jres.045.026 - 17. Saad, Y. (2003) Iterative Methods for Sparse Linear Systems. 2nd Edition, SIAM, Philadelphia.

https://doi.org/10.1137/1.9780898718003 - 18. Hank, M. and Hochbruck, M. (1993) A Chebyshev-Like Semiiteration for Inconsistent Linear Systems. Electronic Transactions on Numerical Analysis, 1, 315-339.