Paper Menu >>

Journal Menu >>

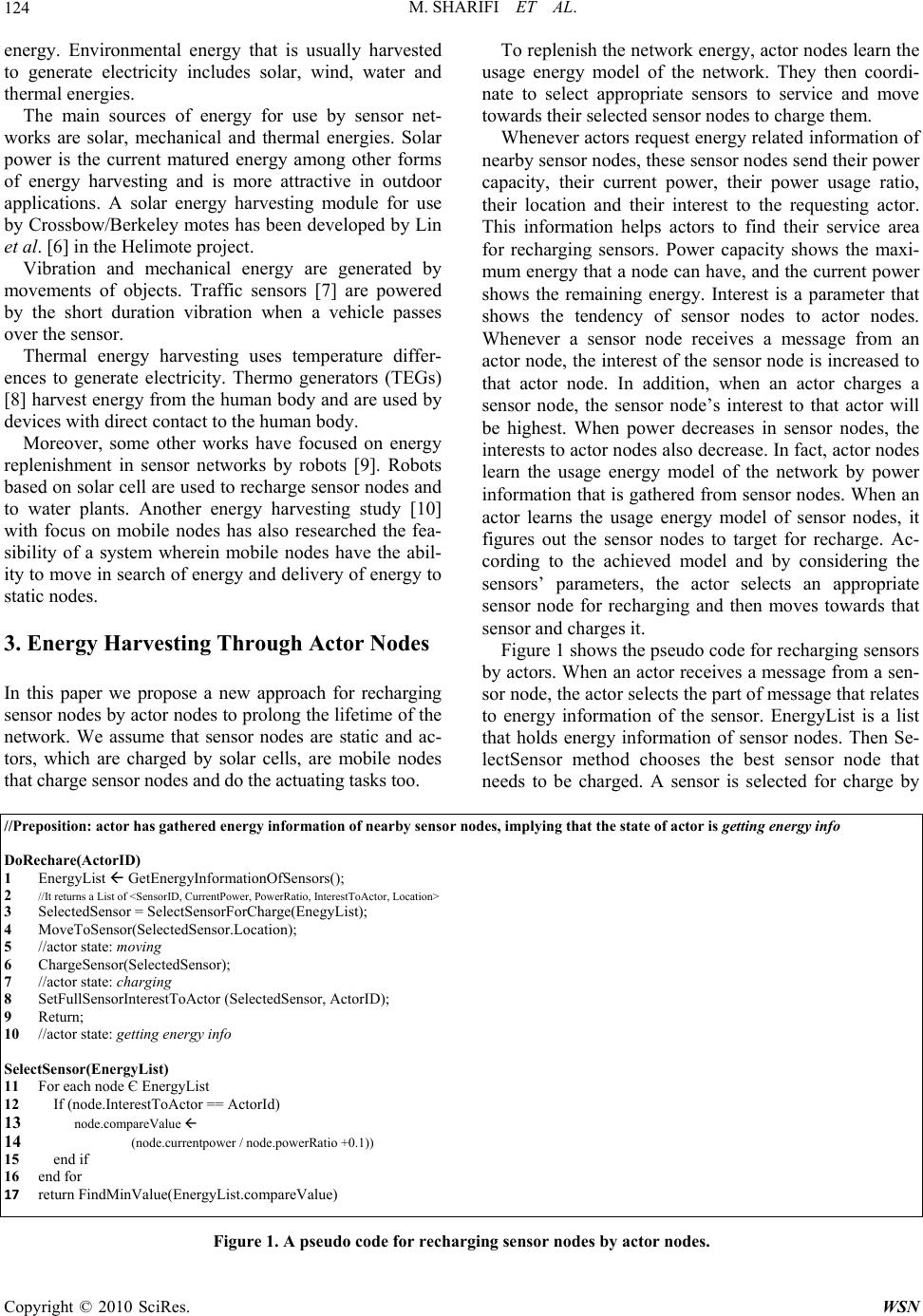

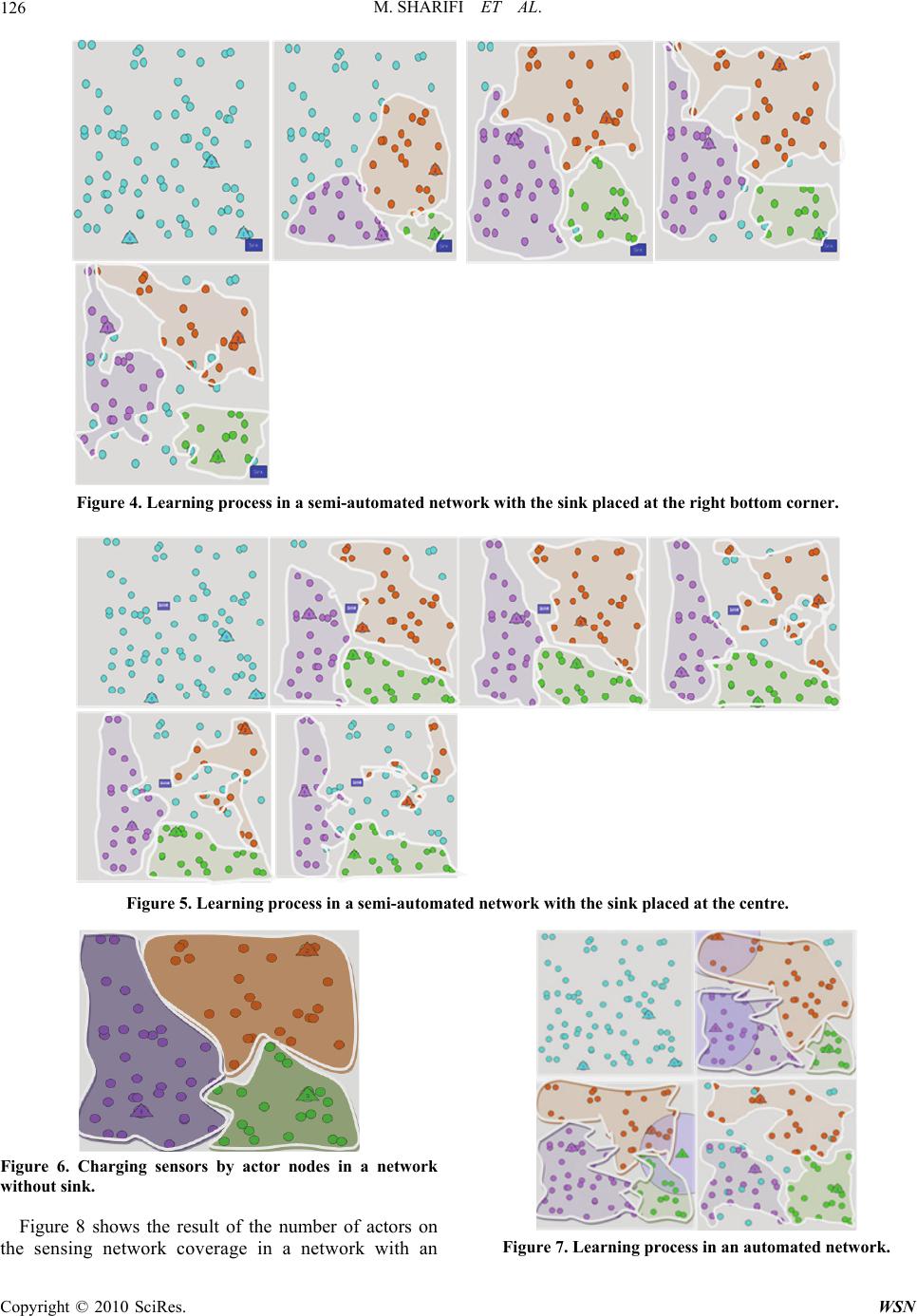

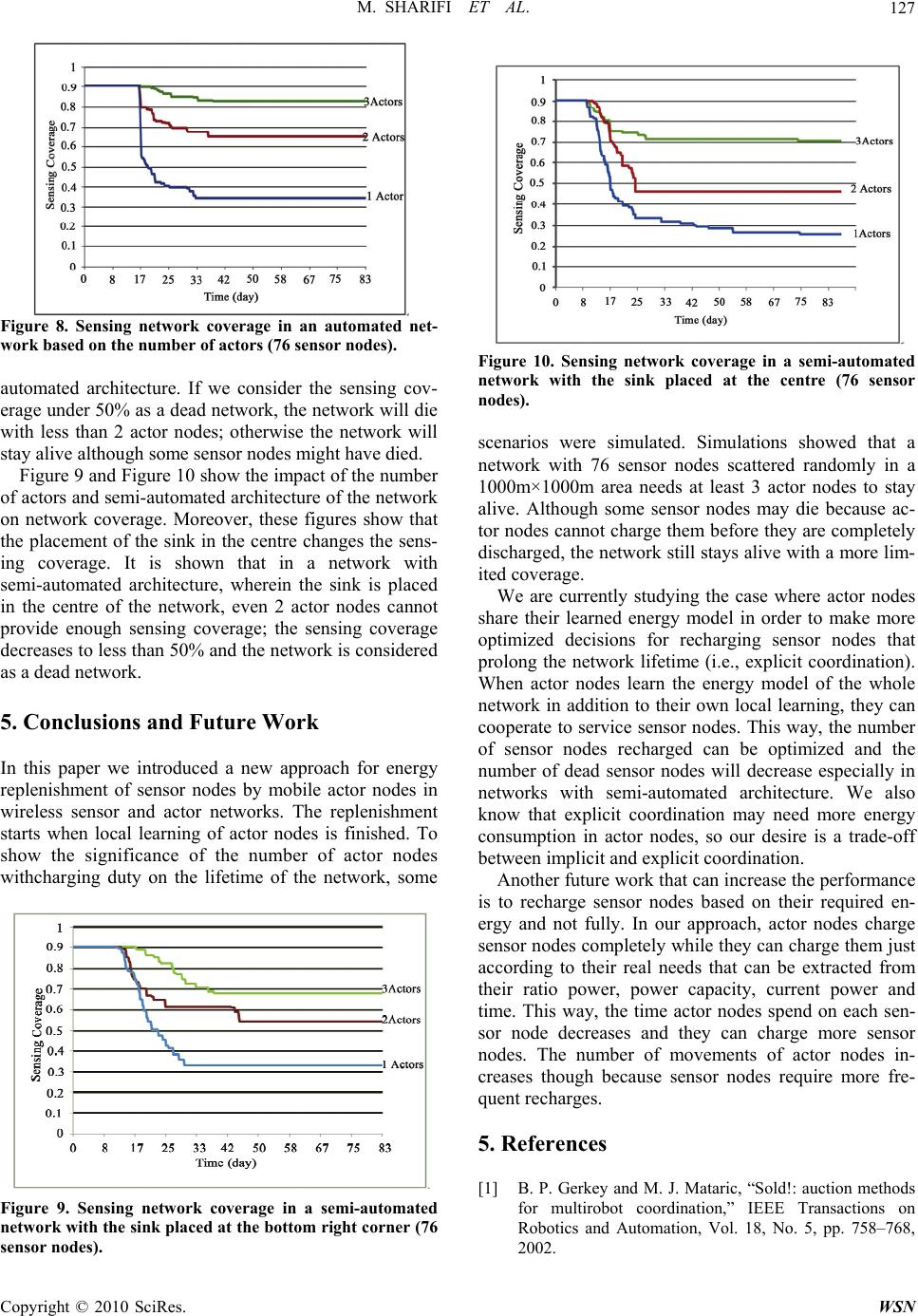

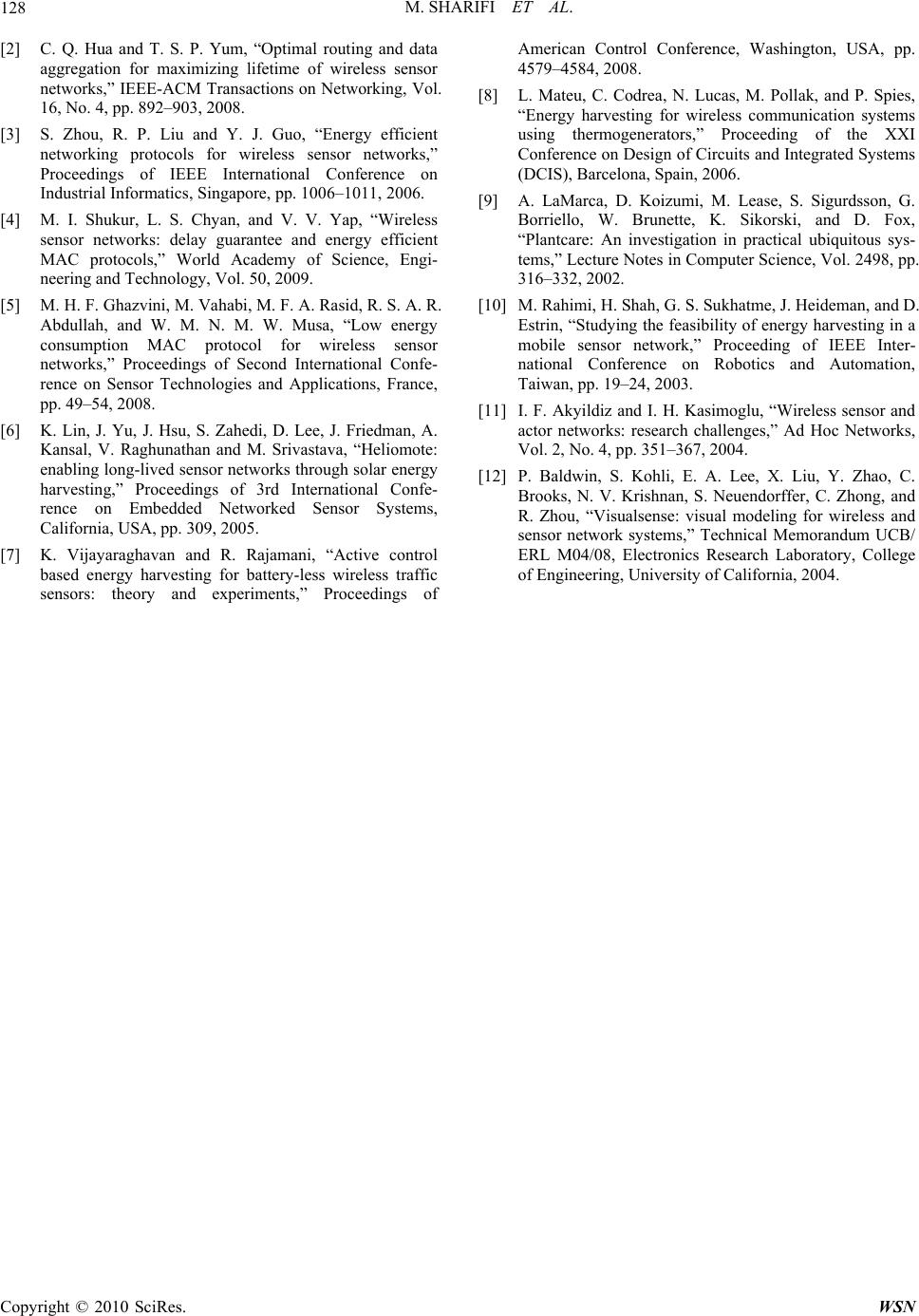

Wireless Sensor Network, 2010, 2, 123-128
doi:10.4236/wsn.2010.22017 Published Online February 2010 (http://www.SciRP.org/journal/wsn/)
Copyright © 2010 SciRes. WSN

Recharging Sensor Nodes Using Implicit Actor Coordination in Wireless Sensor Actor Networks

Mohsen Sharifi, Saeed Sedighian, Maryam Kamali
School of Computer Engineering, Iran University of Science and Technology, Tehran, Iran
E-mail: {msharifi, sedighian}@iust.ac.ir, m_kamali@comp.iust.ac.ir
Received November 25, 2009; revised December 9, 2009; accepted December 14, 2009

Abstract

Wireless sensor actor networks are composed of sensor and actor nodes wherein sensor nodes outnumber resource-rich actor nodes. Sensor nodes gather information and send it to a central node (sink) and/or to actors for proper actions. The short lifetime of energy-constrained sensor nodes can endanger the proper operation of the whole network when they run out of power and partition the network. Energy harvesting as well as minimizing sensor energy consumption have already been studied. We propose a different approach for recharging sensor nodes by mobile actor nodes that use only local information. Sensor nodes send their energy status along with their sensed information to actors in their coverage. Based on this energy information, actors coordinate implicitly to decide on the timings and the ordering of recharges of low energy sensor nodes. Coordination between actors is achieved by swarm intelligence, and the replenishment continues during local learning of actor nodes. The number of actors required to keep up such networks is identified through simulation using VisualSense. It is shown that defining the appropriate number of actor nodes is critical to the success of recharging strategies in prolonging the network lifetime.

Keywords: Wireless Sensor Actor Networks, Coordination, Energy Harvesting, Swarm Intelligence

1. Introduction

Wireless sensor and actor networks (WSANs) are made of two types of nodes called sensor nodes and actors. Sensor nodes are tiny, low-cost, low-power devices with limited sensing, computation, and wireless communication capabilities. Actors are usually resource-rich nodes with higher processing capabilities, higher transmission powers and longer lifetimes. In WSANs, a large number of sensor nodes, possibly in the order of hundreds or thousands, are randomly deployed in a target area to perform a coordinated sensing task. Such a dense deployment is usually not necessary for actor nodes because actors are sophisticated devices with higher capabilities that can act on wider areas. Actors collect and process sensor data and perform actions on the environment based on their gathered information. Common applications of WSANs include all types of surveillance, target tracking, attack detection, medical sensing, and environment monitoring. In many applications, actors should coordinate to maximize their overall task performance by sharing and processing the sensors' data, making decisions and taking appropriate actions [1].

One of the greatest concerns in WSANs is energy, especially for sensor nodes. A sensor node without energy cannot do its duties unless its energy source is recharged or replaced. Since such networks are usually deployed on large scales and for long periods of time, human intervention for replenishment of energy is not feasible.

The rest of the paper is organized as follows. Section 2 presents notable related work. Section 3 describes our energy harvesting approach in detail. Section 4 argues the validity of the approach and presents simulation results. Section 5 concludes the paper.

2. Related Work

Many studies on extending the lifetime of WSANs have focused on minimizing energy usage in different layers of WSANs, such as data aggregation to decrease data traffic [2], energy-efficient networking protocols [3], management of sleep cycles [4] and low-power MAC protocols [5]. Other approaches to the energy problem consider different ways of recharging or replacing the energy sources (mostly batteries) of sensor nodes.

One method to improve the battery lifetime of sensor nodes is to supplement the battery supply with environmental energy. Environmental energy that is usually harvested to generate electricity includes solar, wind, water and thermal energy. The main sources of energy for use by sensor networks are solar, mechanical and thermal energy. Solar power is currently the most mature form of energy harvesting and is especially attractive in outdoor applications. A solar energy harvesting module for Crossbow/Berkeley motes has been developed by Lin et al. [6] in the Heliomote project. Vibration and mechanical energy are generated by the movement of objects. Traffic sensors [7] are powered by the short-duration vibration produced when a vehicle passes over the sensor. Thermal energy harvesting uses temperature differences to generate electricity. Thermogenerators (TEGs) [8] harvest energy from the human body and are used by devices in direct contact with the human body.

Moreover, some other works have focused on energy replenishment in sensor networks by robots [9]. Robots based on solar cells are used to recharge sensor nodes and to water plants. Another energy harvesting study [10], focusing on mobile nodes, has also researched the feasibility of a system wherein mobile nodes can move in search of energy and deliver energy to static nodes.

3. Energy Harvesting Through Actor Nodes

In this paper we propose a new approach for recharging sensor nodes by actor nodes to prolong the lifetime of the network. We assume that sensor nodes are static and that actors, which are charged by solar cells, are mobile nodes that charge sensor nodes and also perform the actuating tasks. To replenish the network energy, actor nodes learn the energy usage model of the network. They then coordinate to select appropriate sensors to service and move towards their selected sensor nodes to charge them.

Whenever actors request energy-related information from nearby sensor nodes, these sensor nodes send their power capacity, their current power, their power usage ratio, their location and their interest to the requesting actor. This information helps actors to find their service area for recharging sensors. Power capacity shows the maximum energy that a node can hold, and current power shows its remaining energy. Interest is a parameter that shows the tendency of a sensor node towards actor nodes. Whenever a sensor node receives a message from an actor node, the sensor node's interest in that actor node increases. In addition, when an actor charges a sensor node, the sensor node's interest in that actor becomes the highest. When the power of a sensor node decreases, its interests in actor nodes also decrease.

In fact, actor nodes learn the energy usage model of the network from the power information that is gathered from sensor nodes. When an actor has learned the energy usage model of sensor nodes, it figures out which sensor nodes to target for recharge. According to the learned model and considering the sensors' parameters, the actor selects an appropriate sensor node for recharging, then moves towards that sensor and charges it.

Figure 1 shows the pseudo code for recharging sensors by actors. When an actor receives a message from a sensor node, the actor extracts the part of the message that relates to the energy information of the sensor.
In Figure 1, EnergyList is a list that holds the energy information of sensor nodes. The SelectSensor method then chooses the sensor node that most needs to be charged, based on the energy information of the sensor nodes in EnergyList. Afterwards, the location of the selected sensor node is passed to MoveToSensor, and the actor moves towards the selected sensor node and charges it.

    // Precondition: the actor has gathered the energy information of nearby
    // sensor nodes, i.e., the actor is in the "getting energy info" state.
    DoRecharge(ActorID)
        EnergyList ← GetEnergyInformationOfSensors()
        // returns a list of <SensorID, CurrentPower, PowerRatio, InterestToActor, Location>
        SelectedSensor ← SelectSensor(EnergyList)
        MoveToSensor(SelectedSensor.Location)
        // actor state: moving
        ChargeSensor(SelectedSensor)
        // actor state: charging
        SetFullSensorInterestToActor(SelectedSensor, ActorID)
        return
        // actor state: getting energy info

    SelectSensor(EnergyList)
        for each node ∈ EnergyList
            if (node.InterestToActor == ActorID)
                node.compareValue ← node.CurrentPower / (node.PowerRatio + 0.1)
            end if
        end for
        return FindMinValue(EnergyList.compareValue)

Figure 1. Pseudo code for recharging sensor nodes by actor nodes.

While actors are learning the energy usage model of sensors, the network is divided into parts. This division is formed by the energy information that is sent by sensors. If the network architecture is automated, there is no central controller (sink), and actor nodes process all incoming data and initiate appropriate actions [11]. In such a network, events may happen anywhere with uniform likelihood, and the power usage of each sensor node is considered approximately the same as that of the other nodes. In this scenario, the sensor node deployment has a random uniform distribution, so the divided parts are about the same size.
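The selection rule of Figure 1 can be sketched in Python as below. This is a hedged sketch under explicit assumptions: the compare value is taken as CurrentPower / (PowerRatio + 0.1), i.e. an estimate of the time left before the sensor is depleted, with 0.1 guarding against a zero ratio; and a sensor is a candidate only when its InterestToActor field names the selecting actor. The names EnergyInfo, select_sensor and do_recharge, and the move_to/charge callbacks standing in for MoveToSensor and ChargeSensor, are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class EnergyInfo:
    # One EnergyList entry: <SensorID, CurrentPower, PowerRatio,
    # InterestToActor, Location>.
    sensor_id: int
    current_power: float
    power_ratio: float
    interest_to_actor: int
    location: tuple[float, float]


def compare_value(node: EnergyInfo) -> float:
    # Assumed rule: estimated time to depletion; +0.1 avoids division by zero.
    return node.current_power / (node.power_ratio + 0.1)


def select_sensor(energy_list: list[EnergyInfo], actor_id: int) -> Optional[EnergyInfo]:
    # Only sensors whose interest points at this actor are candidates.
    candidates = [n for n in energy_list if n.interest_to_actor == actor_id]
    if not candidates:
        return None  # nothing to recharge; stay in "getting energy info"
    # The most urgent sensor has the smallest compare value.
    return min(candidates, key=compare_value)


def do_recharge(
    actor_id: int,
    energy_list: list[EnergyInfo],
    move_to: Callable[[tuple[float, float]], None],
    charge: Callable[[EnergyInfo], None],
) -> Optional[EnergyInfo]:
    selected = select_sensor(energy_list, actor_id)
    if selected is None:
        return None
    move_to(selected.location)  # actor state: moving
    charge(selected)            # actor state: charging
    # After charging, the sensor's interest points fully at this actor.
    selected.interest_to_actor = actor_id
    return selected             # actor state: getting energy info
```

Returning None when no sensor points at the actor is a design choice the pseudo code leaves open; it keeps the actor in its information-gathering state instead of moving to an arbitrary sensor.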
However, if the network architecture is semi-automated, the sink in a WSAN coordinates network activities [11], and nodes that are closer to the sink lose more power because they are more involved in communication than nodes farther from the sink. So in semi-automated networks, the power usage of a sensor differs from that of other sensor nodes, resulting in network partitions of different sizes. Because sensor nodes closer to the sink lose their power faster, actors have to recharge them more frequently. Therefore, the service areas of actors near the sink are smaller.

4. Experimental Validation

The proposed approach was simulated in VisualSense [12] with different numbers of actor nodes and 100 sensor nodes. Actors and sensor nodes were randomly placed in an area of 1000 m × 1000 m. The transmission ranges of each actor node and each sensor node were 100 m and 50 m, respectively. The model was run in different scenarios to evaluate its performance. We used a short range for actor nodes because our algorithm is fully localized.

In Figure 2, there are 3 actor nodes and 80 sensor nodes scattered randomly in the area. There is also a sink at the bottom right corner of the area; that is, the network is assumed to be semi-automated. As shown, the area that actor #1 has created is smaller than the areas of actors #2 and #3. In this scenario, since the energy of sensor nodes near the sink decreases at a higher rate, actor #1 has to act in a smaller area to be able to supply enough energy to its sensor nodes. Since there are few actor nodes and many sensor nodes in this scenario, some sensor nodes die out. But because there are many sensor nodes, sensing coverage does not decrease quickly.

Figure 2. Sensor nodes charged by actor nodes in a network wherein the sink is placed at the bottom right corner (circles represent sensor nodes and triangles represent actor nodes).

The learning steps of actors for the above scenario are shown in Figure 4. As depicted in the third part of Figure 4, sensor nodes at the top left corner need not be charged because they are not involved in relaying the communication of other nodes; they only use power when they need to send their own information.

Figure 3 shows the same scenario as the previous one, except that the sink is placed in the middle of the area. As Figure 3 shows, the network service areas differ from those shown in Figure 2. Furthermore, Figure 5 shows the evolutionary progress of the service area formation as a result of the learning process.

Figure 3. Charging sensors by actor nodes in a network with the sink placed at the centre.

Figure 4. Learning process in a semi-automated network with the sink placed at the bottom right corner.

Figure 5. Learning process in a semi-automated network with the sink placed at the centre.

Figure 6 shows a scenario for an automated architecture containing the same numbers of sensor and actor nodes as in the last two scenarios. Figure 7 shows the learning process in this scenario and illustrates how some sensor nodes die out because of the limited number of actor nodes.

Figure 6. Charging sensors by actor nodes in a network without a sink.

Figure 7. Learning process in an automated network.

In our proposed approach, the number of actors poses an important challenge. Parameters that affect the number of actors needed for the network include the number of sensor nodes, the area, the average recharge ratio, the local recharge ratio, the speed of actors, the charge time and the network architecture. If the number of actors does not match the network requirements, the sensing coverage of the network will decrease, leading to a dead network.

Figure 8 shows the effect of the number of actors on the sensing coverage of a network with an automated architecture. If we consider a sensing coverage under 50% as a dead network, the network will die with fewer than 2 actor nodes; otherwise the network will stay alive, although some sensor nodes might have died.

Figure 8. Sensing network coverage in an automated network based on the number of actors (76 sensor nodes).

Figure 9 and Figure 10 show the impact of the number of actors and of the semi-automated architecture on network coverage. Moreover, these figures show that placing the sink in the centre changes the sensing coverage. In a network with a semi-automated architecture wherein the sink is placed in the centre, even 2 actor nodes cannot provide enough sensing coverage; the sensing coverage decreases to less than 50% and the network is considered dead.

Figure 9. Sensing network coverage in a semi-automated network with the sink placed at the bottom right corner (76 sensor nodes).

Figure 10. Sensing network coverage in a semi-automated network with the sink placed at the centre (76 sensor nodes).

5. Conclusions and Future Work

In this paper we introduced a new approach for energy replenishment of sensor nodes by mobile actor nodes in wireless sensor and actor networks. The replenishment starts when local learning of actor nodes is finished. To show the significance of the number of actor nodes with charging duty for the lifetime of the network, several scenarios were simulated. Simulations showed that a network with 76 sensor nodes scattered randomly in a 1000 m × 1000 m area needs at least 3 actor nodes to stay alive. Although some sensor nodes may die because actor nodes cannot charge them before they are completely discharged, the network still stays alive with more limited coverage.
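The dead-network criterion used with Figures 8 to 10 (sensing coverage under 50%) can be made concrete with a grid-sampling estimate of coverage. The sketch below is an illustration under stated assumptions, not the VisualSense model used in the paper: it assumes each alive sensor covers a disk of a hypothetical sensing radius (the paper only gives 50 m as the sensor transmission range), places sensors uniformly at random in the 1000 m × 1000 m field, and estimates coverage as the fraction of grid cell centres that fall inside at least one disk. The function names, the grid step and the seed are hypothetical.

```python
from __future__ import annotations

import random


def deploy(n: int, side: float = 1000.0, seed: int = 0) -> list[tuple[float, float]]:
    # Uniform random placement in a side x side field (metres).
    rng = random.Random(seed)
    return [(rng.uniform(0, side), rng.uniform(0, side)) for _ in range(n)]


def sensing_coverage(
    alive: list[tuple[float, float]],
    sensing_radius: float,
    side: float = 1000.0,
    step: float = 10.0,
) -> float:
    # Fraction of grid cell centres within sensing_radius of an alive sensor.
    cells = int(side // step)
    r2 = sensing_radius ** 2
    covered = 0
    for i in range(cells):
        for j in range(cells):
            x, y = (i + 0.5) * step, (j + 0.5) * step
            if any((x - sx) ** 2 + (y - sy) ** 2 <= r2 for sx, sy in alive):
                covered += 1
    return covered / (cells * cells)


def is_dead_network(coverage: float, threshold: float = 0.5) -> bool:
    # The paper treats a sensing coverage under 50% as a dead network.
    return coverage < threshold
```

For example, `sensing_coverage(deploy(76), sensing_radius=50.0)` estimates the coverage of 76 randomly placed sensors if the sensing radius were assumed equal to the 50 m transmission range; removing dead sensors from the list and re-evaluating shows when the 50% threshold is crossed.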
We are currently studying the case where actor nodes share their learned energy models in order to make more optimized recharging decisions that prolong the network lifetime (i.e., explicit coordination). When actor nodes learn the energy model of the whole network in addition to their own local learning, they can cooperate to service sensor nodes. This way, the number of sensor nodes recharged can be optimized and the number of dead sensor nodes will decrease, especially in networks with a semi-automated architecture. Since explicit coordination may increase energy consumption in actor nodes, we aim for a trade-off between implicit and explicit coordination.

Another direction that can increase performance is to recharge sensor nodes according to their required energy rather than fully. In our approach, actor nodes charge sensor nodes completely, whereas they could charge them just according to their real needs, which can be derived from their power ratio, power capacity, current power and time. This way, the time actor nodes spend on each sensor node decreases and they can charge more sensor nodes. The number of actor movements increases, though, because sensor nodes require more frequent recharges.

6. References

[1] B. P. Gerkey and M. J. Mataric, “Sold!: auction methods for multirobot coordination,” IEEE Transactions on Robotics and Automation, Vol. 18, No. 5, pp. 758–768, 2002.
[2] C. Q. Hua and T. S. P. Yum, “Optimal routing and data aggregation for maximizing lifetime of wireless sensor networks,” IEEE/ACM Transactions on Networking, Vol. 16, No. 4, pp. 892–903, 2008.
[3] S. Zhou, R. P. Liu and Y. J. Guo, “Energy efficient networking protocols for wireless sensor networks,” Proceedings of IEEE International Conference on Industrial Informatics, Singapore, pp. 1006–1011, 2006.
[4] M. I. Shukur, L. S. Chyan, and V. V. Yap, “Wireless sensor networks: delay guarantee and energy efficient MAC protocols,” World Academy of Science, Engineering and Technology, Vol. 50, 2009.
[5] M. H. F. Ghazvini, M. Vahabi, M. F. A. Rasid, R. S. A. R. Abdullah, and W. M. N. M. W. Musa, “Low energy consumption MAC protocol for wireless sensor networks,” Proceedings of Second International Conference on Sensor Technologies and Applications, France, pp. 49–54, 2008.
[6] K. Lin, J. Yu, J. Hsu, S. Zahedi, D. Lee, J. Friedman, A. Kansal, V. Raghunathan and M. Srivastava, “Heliomote: enabling long-lived sensor networks through solar energy harvesting,” Proceedings of 3rd International Conference on Embedded Networked Sensor Systems, California, USA, pp. 309, 2005.
[7] K. Vijayaraghavan and R. Rajamani, “Active control based energy harvesting for battery-less wireless traffic sensors: theory and experiments,” Proceedings of American Control Conference, Washington, USA, pp. 4579–4584, 2008.
[8] L. Mateu, C. Codrea, N. Lucas, M. Pollak, and P. Spies, “Energy harvesting for wireless communication systems using thermogenerators,” Proceedings of the XXI Conference on Design of Circuits and Integrated Systems (DCIS), Barcelona, Spain, 2006.
[9] A. LaMarca, D. Koizumi, M. Lease, S. Sigurdsson, G. Borriello, W. Brunette, K. Sikorski, and D. Fox, “Plantcare: An investigation in practical ubiquitous systems,” Lecture Notes in Computer Science, Vol. 2498, pp. 316–332, 2002.
[10] M. Rahimi, H. Shah, G. S. Sukhatme, J. Heideman, and D. Estrin, “Studying the feasibility of energy harvesting in a mobile sensor network,” Proceedings of IEEE International Conference on Robotics and Automation, Taiwan, pp. 19–24, 2003.
[11] I. F. Akyildiz and I. H. Kasimoglu, “Wireless sensor and actor networks: research challenges,” Ad Hoc Networks, Vol. 2, No. 4, pp. 351–367, 2004.
[12] P. Baldwin, S. Kohli, E. A. Lee, X. Liu, Y. Zhao, C. Brooks, N. V. Krishnan, S. Neuendorffer, C. Zhong, and R. Zhou, “VisualSense: visual modeling for wireless and sensor network systems,” Technical Memorandum UCB/ERL M04/08, Electronics Research Laboratory, College of Engineering, University of California, 2004.