Journal of Software Engineering and Applications

Vol.07 No.05(2014), Article ID:46337,8 pages

10.4236/jsea.2014.75042

A recognition method of pedestrians’ running in the red light based on image

Min Zhang, Chao li Wang, Yun feng Ji

Department of Control Science and Engineering, University of Shanghai for Science and Technology, Shanghai, China

Email: mixue.2012@163.com, clwang@usst.edu.cn, jyf123456789@126.com

Copyright © 2014 by authors and Scientific Research Publishing Inc.

This work is licensed under the Creative Commons Attribution International License (CC BY).

http://creativecommons.org/licenses/by/4.0/

Received 4 April 2014; revised 4 May 2014; accepted 12 May 2014

ABSTRACT

It is dangerous for pedestrians to cross while the traffic signal shows a red light, yet some pedestrians still break the rule. It would be valuable if the jaywalking behavior of pedestrians at road crossings could be recognized through the monitoring cameras: drivers could then be informed of the situation in advance and take action to avoid an accident. Jaywalking behavior is unstructured, and furthermore, because of changes in sunlight, temperature and weather in the outdoor environment and the shaking of the cameras themselves, the background image changes over time, which makes recognizing jaywalking behavior especially difficult. In this paper, an adaptive background model based on a mixture of Gaussians is used to extract the moving objects in the video. Based on Histograms of Oriented Gradients (HOG) features, pedestrian images and car images from the MIT Library are used to train an SVM classifier, which then identifies the pedestrians in the video. The color histogram, position and movement of the pedestrians are then used to track them. Finally, according to the traffic signal and the allocated walking areas, we determine whether a pedestrian is running the red light. Experiments show that the proposed method is effective.

Keywords:

SVM classifier, Histogram, Background Modeling, Objects Tracking

1. Introduction

With the rapid development of urban road traffic, the numbers of pedestrians and vehicles are increasing continuously, and so is the number of pedestrians running red lights. This harms road traffic safety and frequently leads to traffic jams. To improve the efficiency of urban traffic and to protect people, we need to detect the pedestrians who run the red light and alert them. Doing so can raise people's awareness and give drivers an important reminder that helps to avoid traffic accidents. Using the surveillance video of the modern transportation network to detect pedestrians running the red light is an economical and effective approach.

Frame differencing can be used to extract moving objects in the video. Although subtracting two adjacent images gives good real-time performance, the method is too sensitive to environmental noise, and the selected threshold has a great impact on the detection results. For large moving objects of uniform color, the detected objects tend to contain internal voids, so it is hard to extract the complete moving targets. The optical-flow method estimates the motion field from the temporal and spatial gradients of the image sequence and can thus distinguish the moving objects from the background, but it is susceptible to the outside environment and requires complex mathematical calculation. Background subtraction takes the difference between the background image and the current image, so to extract the moving objects we first need to acquire the background. The median filter, the linear filter, the linear Kalman filter and Stauffer's mixture Gaussian model [1] are all adaptive background models.

At present, recognition based on gait characteristics is one method for identifying pedestrians. The human walking gait has a certain periodicity, so we can analyze the periodicity of the video sequence to distinguish pedestrians from other objects. Wohler [2] combined this feature with an adaptive time-delay neural network to recognize pedestrians. Dalal proposed combining the histograms of oriented gradients feature [3] [4] with the support vector machine to recognize pedestrians.

Multi-object tracking in video sequences can be based on motion detection [5] or on particle filters [6]. Color, texture and motion information can also be combined to track multiple objects [7], which has achieved good results in moving object tracking.

We choose the adaptive mixture Gaussian background model to characterize the background and then extract the moving objects from the foreground. HOG descriptors are chosen to describe the moving objects. A linear SVM classifier is trained and then used to classify the pedestrians. The training images are pedestrian images and car images from the MIT Library. For the extracted pedestrian targets, color histograms, location and trajectory are used to construct a matching matrix to track the objects. After the pedestrians are detected and tracked, we judge whether they are running the red light according to the traffic signal and the alarmed area.

The system has three main parts: extracting the moving objects, recognizing pedestrians, and tracking pedestrians with behavior judgment. The framework of the solution is shown in Figure 1.

2. Extract the moving objects

The background of the video images changes frequently as the outdoor environment changes, for example in light, temperature and wind. The shaking of the camera also contributes. We therefore choose the adaptive mixture Gaussian background model [1] to cope with the changing environmental conditions. We extract the foreground from the video sequence and binarize it. Then, using morphological processing, we find the connected areas and extract the moving objects from the foreground.

2.1. Adaptive background model of mixture Gaussian

We build K mixture Gaussian models for every pixel in an image. While a new picture of the video sequence comes, we do the matching processing for every pixel in it. If the pixel matched successfully, it will be classified into background. Otherwise it will be classified as foreground, and we use the information this pixel to update

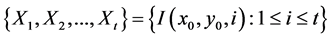

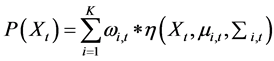

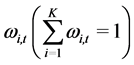

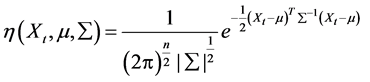

the mixture Gaussian models. The history values of pixel  is

is ,

,

where  represents the pixel value of the location

represents the pixel value of the location  at the ith frame of the video sequence.

at the ith frame of the video sequence.

Firstly, we build K mixture Gaussian models based on the history values of the pixels. We always arrange the K

Gaussian models as the priority  descending. The probability of the current pixel value as

descending. The probability of the current pixel value as  is:

is:

. (1)

. (1)

Figure 1. The framework of the system.

where K represents the number of Gaussian models, $\omega_{i,t}$ is the weight of the ith Gaussian model, and $\eta(X_t, \mu_{i,t}, \Sigma_{i,t})$ represents the probability density function of the ith Gaussian model:

$$\eta(X_t, \mu, \Sigma) = \frac{1}{(2\pi)^{n/2} |\Sigma|^{1/2}} \exp\left(-\frac{1}{2}(X_t - \mu)^T \Sigma^{-1} (X_t - \mu)\right) \quad (2)$$

where $n$ is the dimension of $X_t$.

For an image with RGB channels, we assume that the three channels are independent of each other and have the same variance $\sigma^2$, that is, $\sigma_R^2 = \sigma_G^2 = \sigma_B^2 = \sigma^2$. The mean value is $\mu = (\mu_R, \mu_G, \mu_B)^T$, and the covariance matrix is $\Sigma = \sigma^2 I$.
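As a minimal sketch of Equations (1) and (2) under the equal-variance assumption (covariance $\sigma^2 I$), the mixture probability of one pixel value can be evaluated as a weighted sum of isotropic Gaussian densities. The function names and the two-component example model are hypothetical and chosen only for illustration.

```python
import math

def gaussian_pdf(x, mean, var):
    """Isotropic Gaussian density, i.e. Eq. (2) with covariance var * I."""
    n = len(x)
    sq_dist = sum((xi - mi) ** 2 for xi, mi in zip(x, mean))
    # (2*pi)^(n/2) * |var * I|^(1/2) simplifies to (2*pi*var)^(n/2).
    norm = (2.0 * math.pi * var) ** (n / 2.0)
    return math.exp(-0.5 * sq_dist / var) / norm

def mixture_probability(x, weights, means, variances):
    """Weighted sum of the K component densities, as in Eq. (1)."""
    return sum(w * gaussian_pdf(x, m, v)
               for w, m, v in zip(weights, means, variances))

# Hypothetical two-component model for a single RGB pixel.
weights = [0.7, 0.3]
means = [(100.0, 100.0, 100.0), (200.0, 50.0, 50.0)]
variances = [25.0, 100.0]

p_near = mixture_probability((101.0, 99.0, 100.0), weights, means, variances)
p_far = mixture_probability((10.0, 240.0, 10.0), weights, means, variances)
```

A value close to one of the component means (`p_near`) receives a much higher probability than a value far from all of them (`p_far`).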

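Background modeling procedures are as follows:

1) Initializing the mixture Gaussian models (using median background modeling first)

Initialize a large variance for every Gaussian of the background mixture, and initialize the weight of every model.

2) Matching the background models

The K Gaussian models are sorted in descending order of the priority $\omega/\sigma$. Going through the sorted models, we do the matching for each newly arriving frame. A pixel value $X_t$ matches the ith model if it meets the condition that

$$|X_t - \mu_{i,t-1}| < D\,\sigma_{i,t-1},$$

where $D$ is a threshold factor (2.5 in [1]). We then set $M_{i,t} = 1$ for the matched model and $M_{i,t} = 0$ for the others. If no model matches, we change the mean value of the last Gaussian model to the current pixel value and initialize it with a large variance.

3) Update the mixture Gaussian models

If the model is matched (i.e., $M_{i,t} = 1$), its mean and variance are updated as in [1]:

$$\mu_{i,t} = (1 - \rho)\,\mu_{i,t-1} + \rho X_t, \qquad \sigma_{i,t}^2 = (1 - \rho)\,\sigma_{i,t-1}^2 + \rho\,(X_t - \mu_{i,t})^T (X_t - \mu_{i,t}),$$

where $\rho = \alpha\,\eta(X_t, \mu_{i,t-1}, \Sigma_{i,t-1})$.

For all the models, update their weights:

$$\omega_{i,t} = (1 - \alpha)\,\omega_{i,t-1} + \alpha M_{i,t},$$

where $\alpha$ is the learning rate, which determines the convergence speed of the adaptive background model.

4) Background generation

We choose the first B Gaussian models to generate the background model, which meet

$$B = \arg\min_b \left( \sum_{i=1}^{b} \omega_{i,t} > T \right),$$

where $T$ is the minimum portion of the data that should be accounted for by the background.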
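The four steps above can be sketched for a single grayscale pixel as follows. This is an illustration in the spirit of the Stauffer and Grimson model [1], not the authors' implementation: the parameter values (`alpha`, `match_sigmas`, `init_var`, `bg_threshold`), the dictionary layout and the simplification of using `alpha` directly as the mean and variance learning rate are all assumptions.

```python
import math

def update_pixel_model(model, x, alpha=0.01, match_sigmas=2.5,
                       init_var=900.0, bg_threshold=0.7):
    """Update one pixel's mixture in place; return True if x is background.

    model is a list of dicts with keys 'w' (weight), 'mu' (mean), 'var'.
    All default parameter values are illustrative assumptions.
    """
    # Matching: sort by priority w / sigma and take the first model that fits.
    model.sort(key=lambda g: g["w"] / math.sqrt(g["var"]), reverse=True)
    matched = None
    for k, g in enumerate(model):
        if abs(x - g["mu"]) < match_sigmas * math.sqrt(g["var"]):
            matched = k
            break

    # Background generation: the first B models whose cumulative weight
    # exceeds the threshold describe the background.
    cumulative, num_bg = 0.0, len(model)
    for k, g in enumerate(model):
        cumulative += g["w"]
        if cumulative > bg_threshold:
            num_bg = k + 1
            break
    is_background = matched is not None and matched < num_bg

    # Update: weights of all models, then mean and variance of the match.
    for k, g in enumerate(model):
        g["w"] = (1.0 - alpha) * g["w"] + alpha * (1.0 if k == matched else 0.0)
    if matched is not None:
        g = model[matched]
        rho = alpha  # simplification; [1] scales alpha by the Gaussian density
        g["mu"] = (1.0 - rho) * g["mu"] + rho * x
        g["var"] = (1.0 - rho) * g["var"] + rho * (x - g["mu"]) ** 2
    else:
        # No match: the lowest-priority model restarts at the current value.
        model[-1].update(mu=float(x), var=init_var)
    total = sum(g["w"] for g in model)
    for g in model:
        g["w"] /= total
    return is_background

def example_model():
    """Hypothetical two-component model: a stable background and a rare mode."""
    return [{"w": 0.8, "mu": 100.0, "var": 25.0},
            {"w": 0.2, "mu": 200.0, "var": 900.0}]
```

With this example model, a value near 100 is reported as background, while a value that matches only the low-weight component, or no component at all, is reported as foreground.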

2.2. Extracting the foreground moving objects

With the adaptive mixture Gaussian background model, we obtain the background and the foreground of the video sequence, as shown in Figure 2(b) and Figure 2(c). The foreground is a binary image: in Figure 2(c) the moving object is represented by 1 and the background by 0. We use morphological operations such as dilation and erosion to fill the holes in the foreground object and to remove small noise. We then extract the blobs of connected areas. The moving object extracted from the original image is shown in Figure 2(d).
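The clean-up of the binary foreground can be sketched in plain Python as follows. The 3 × 3 structuring element, the order of operations (closing, then opening) and the use of 4-connectivity are assumptions made for illustration; the text does not specify them.

```python
from collections import deque

def dilate(mask):
    """3x3 binary dilation: a pixel becomes 1 if any neighbour is 1."""
    h, w = len(mask), len(mask[0])
    return [[int(any(mask[j][i]
                     for j in range(max(0, y - 1), min(h, y + 2))
                     for i in range(max(0, x - 1), min(w, x + 2))))
             for x in range(w)] for y in range(h)]

def erode(mask):
    """3x3 binary erosion; pixels outside the image count as 0."""
    h, w = len(mask), len(mask[0])
    return [[int(all(0 <= j < h and 0 <= i < w and mask[j][i]
                     for j in range(y - 1, y + 2)
                     for i in range(x - 1, x + 2)))
             for x in range(w)] for y in range(h)]

def clean_foreground(mask):
    """Closing fills small holes, then opening removes small noise."""
    closed = erode(dilate(mask))
    return dilate(erode(closed))

def connected_blobs(mask):
    """Bounding boxes (x0, y0, x1, y1) of the 4-connected regions of 1s."""
    h, w = len(mask), len(mask[0])
    seen = [[False] * w for _ in range(h)]
    boxes = []
    for y in range(h):
        for x in range(w):
            if not mask[y][x] or seen[y][x]:
                continue
            queue, pixels = deque([(y, x)]), []
            seen[y][x] = True
            while queue:
                cy, cx = queue.popleft()
                pixels.append((cy, cx))
                for ny, nx in ((cy - 1, cx), (cy + 1, cx), (cy, cx - 1), (cy, cx + 1)):
                    if 0 <= ny < h and 0 <= nx < w and mask[ny][nx] and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            ys = [p[0] for p in pixels]
            xs = [p[1] for p in pixels]
            boxes.append((min(xs), min(ys), max(xs), max(ys)))
    return boxes

# Hypothetical 9x9 foreground: a 3x3 object with a hole, plus one noise pixel.
mask = [[0] * 9 for _ in range(9)]
for y in range(2, 5):
    for x in range(2, 5):
        mask[y][x] = 1
mask[3][3] = 0
mask[7][7] = 1
clean = clean_foreground(mask)
blobs = connected_blobs(clean)
```

In this toy mask the hole inside the object is filled and the isolated noise pixel disappears, leaving a single blob.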

3. The recognition of pedestrians

Histograms of oriented gradients (HOG) is a feature descriptor for object detection in computer vision and image processing. Dalal [4] showed that the combination of the HOG descriptor and a linear SVM classifier recognizes pedestrians effectively. So in our experiments we choose the HOG descriptor to extract the features of the moving objects and use the linear SVM classifier to distinguish pedestrians from other objects.

3.1. HOG descriptor

The appearance and shape of a local area of an object can be characterized by the local distribution of intensity gradients and their directions. The histograms of oriented gradients descriptor is based on this idea: it is a statistic of the intensity gradients and directions over a local area of the image.

Figure 2. Campus playground: (a) the 40th frame. (b) The background by the mixture Gaussian models. (c) The binary foreground model. (d) The extracted moving object.

In our experiments, we compute the HOG descriptors with the parameters suggested in [4] to recognize pedestrians. An image of 64 × 128 pixels is divided into small cells of 8 × 8 pixels, and four adjacent cells are combined into one block. The block slides by one cell at a time, and the entire image is scanned to get the HOG descriptors. The blocks and cells are shown in Figure 3. For the gradients, the 180° range is divided equally into 9 directions (bins), and the histogram of a cell over these 9 directions is a 9-dimensional feature vector. The four cells of each block are combined into one descriptor of 36 dimensions. Because the block slides by one cell at a time, we get 112 blocks in this 64 × 128 image. All the block descriptors are combined to get the final HOG descriptor of 112 × 36 dimensions.
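The layout arithmetic can be checked with a short sketch. It assumes the standard scan of [4]: no padding and a one-cell stride in both directions. Under these assumptions the count is 7 × 15 = 105 block positions rather than the 112 quoted above; 112 = 7 × 16 would need one additional row of block positions, so the authors' scan may differ from the assumption made here. The function name is hypothetical.

```python
def hog_layout(width=64, height=128, cell=8, block_cells=2,
               stride_cells=1, bins=9):
    """Return (blocks_x, blocks_y, block_dim, descriptor_dim) without padding."""
    cells_x, cells_y = width // cell, height // cell
    blocks_x = (cells_x - block_cells) // stride_cells + 1
    blocks_y = (cells_y - block_cells) // stride_cells + 1
    block_dim = block_cells * block_cells * bins
    return blocks_x, blocks_y, block_dim, blocks_x * blocks_y * block_dim

print(hog_layout())  # (7, 15, 36, 3780)
```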

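To eliminate the effects of illumination and similar factors, we normalize the descriptor of every block before combining all the block descriptors. We adopt the Lowe-style clipped L2 norm (L2-Hys in [4]). Firstly, the L2-norm is applied:

$$v \leftarrow \frac{v}{\sqrt{\|v\|_2^2 + \varepsilon^2}},$$

where $\|v\|_2$ is the 2-norm of the vector $v$ and $\varepsilon$ is a small constant. Then the values of $v$ are clipped at 0.2 and the vector is renormalized, as in [4].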
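A minimal sketch of the clipped L2 normalization of one block descriptor follows. The clipping value 0.2 is the one given in [4]; the value of `eps` and the function name are assumptions.

```python
import math

def l2_hys(v, clip=0.2, eps=1e-6):
    """L2-normalize, clip every component at `clip`, then renormalize."""
    def l2(u):
        norm = math.sqrt(sum(a * a for a in u) + eps * eps)
        return [a / norm for a in u]
    return l2([min(a, clip) for a in l2(v)])

# Hypothetical two-component "descriptor" to keep the arithmetic visible.
normalized = l2_hys([3.0, 4.0])
```

Both components of `[3.0, 4.0]` exceed 0.2 after the first normalization, so they are clipped to the same value and end up equal after renormalization. Clipping limits the influence of a few very large gradients in this way.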

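Figure 3. 64 × 128 pixel image, cell (8 × 8) and block (16 × 16), block stride one cell once.

The gradient histogram of each cell is calculated by weighted voting. Divide 0° - 180° into 9 bins {(0 - 20), (20 - 40), (40 - 60), (60 - 80), … (160 - 180)}; the center points of the bins are 10°, 30°, …, 170°. A gradient whose direction $\theta$ lies between the centers $c_i$ and $c_{i+1}$ of two adjacent bins votes for both the ith and the (i + 1)th bin. With gradient magnitude $m$, the ith bin is increased by $m\,(c_{i+1} - \theta)/20$ and the (i + 1)th bin by $m\,(\theta - c_i)/20$.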
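The voting for one cell can be sketched as linear interpolation between the two nearest bin centers. Wrapping directions below 10° or above 170° around to the opposite end of the histogram is an assumption, as is the function name.

```python
import math

def cell_histogram(magnitudes, angles, bins=9, span=180.0):
    """Vote each gradient into its two nearest orientation bins."""
    width = span / bins                # 20 degrees per bin
    hist = [0.0] * bins
    for m, theta in zip(magnitudes, angles):
        pos = (theta % span) / width - 0.5   # position relative to bin centers
        lo = int(math.floor(pos))
        frac = pos - lo
        hist[lo % bins] += m * (1.0 - frac)  # share for the lower bin
        hist[(lo + 1) % bins] += m * frac    # share for the upper bin
    return hist

on_center = cell_histogram([1.0], [10.0])   # exactly on the first bin center
halfway = cell_histogram([2.0], [20.0])     # midway between bins 0 and 1
```

A gradient exactly on a bin center puts its whole magnitude into that bin, while one halfway between two centers is split equally.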

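The gradient is calculated with the template $[-1, 0, 1]$:

$$G_x(x, y) = I(x+1, y) - I(x-1, y), \qquad G_y(x, y) = I(x, y+1) - I(x, y-1),$$

where $I(x, y)$ is the pixel value at $(x, y)$.

The intensity and direction of the gradient are

$$G(x, y) = \sqrt{G_x(x, y)^2 + G_y(x, y)^2}, \qquad \theta(x, y) = \arctan\frac{G_y(x, y)}{G_x(x, y)}.$$

For an image with RGB channels, we calculate the gradients for every channel and choose the gradient vector with the largest intensity as the gradient of the pixel.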
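The per-channel gradient and the selection of the strongest channel can be sketched as follows. The centered difference mask [-1, 0, 1] follows the recommendation of [4]; the image layout (`image[y][x]` as an RGB tuple), the unsigned angle range and the function name are assumptions.

```python
import math

def pixel_gradient(image, x, y):
    """Return (magnitude, angle in [0, 180)) at an interior pixel.

    image[y][x] is an (R, G, B) tuple; the channel with the largest
    gradient magnitude determines the result.
    """
    best = (0.0, 0.0)
    for c in range(3):
        gx = image[y][x + 1][c] - image[y][x - 1][c]
        gy = image[y + 1][x][c] - image[y - 1][x][c]
        mag = math.hypot(gx, gy)
        if mag > best[0]:
            best = (mag, math.degrees(math.atan2(gy, gx)) % 180.0)
    return best

# Hypothetical 3x3 image: red grows along x, blue grows more slowly along y.
image = [[(10 * x, 0, 5 * y) for x in range(3)] for y in range(3)]
magnitude, angle = pixel_gradient(image, 1, 1)
```

In the example the red channel has the larger gradient, so its magnitude and direction are kept.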

3.2. Support vector machine classifier (SVM)

The support vector machine (SVM) classifier is a statistical classification method. We choose the penalty parameter C = 0.01 for the linear SVM. At urban traffic junctions, the moving objects are mainly pedestrians and cars; other types of moving objects are much less common. So we choose the pedestrian pictures in the MIT library as positive training samples and the car pictures in the MIT library as negative training samples. Here 924 pedestrian pictures and 516 car pictures were used.

The trained SVM classifier is used to identify the moving objects extracted from the video sequence, distinguishing the pedestrians from the other moving objects; the recognition results are used in the next procedure. Figure 4 shows the recognition results. The red outlines mark the moving objects extracted by the adaptive mixture Gaussian background model. The green rectangles mark the moving objects identified as pedestrians.
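To illustrate what training a linear SVM with C = 0.01 involves, the sketch below minimizes the soft-margin objective $\frac{1}{2}\|w\|^2 + C \sum_i \max(0, 1 - y_i (w \cdot x_i + b))$ by plain subgradient descent. The solver the authors used is not stated, so this training loop is a stand-in; the learning rate, the number of epochs and the two-dimensional toy features (in place of real HOG vectors) are assumptions.

```python
def train_linear_svm(samples, labels, C=0.01, lr=0.1, epochs=500):
    """Subgradient descent on the soft-margin linear SVM objective."""
    dim = len(samples[0])
    w, b = [0.0] * dim, 0.0
    for _ in range(epochs):
        grad_w, grad_b = list(w), 0.0        # gradient of 0.5 * ||w||^2
        for x, y in zip(samples, labels):
            margin = y * (sum(wi * xi for wi, xi in zip(w, x)) + b)
            if margin < 1.0:                 # hinge loss is active
                for k in range(dim):
                    grad_w[k] -= C * y * x[k]
                grad_b -= C * y
        w = [wi - lr * gi for wi, gi in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b

def predict(w, b, x):
    """+1 for the positive class (pedestrian), -1 for the negative class."""
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) + b >= 0.0 else -1

# Hypothetical toy features standing in for HOG descriptors.
X = [(2.0, 2.0), (3.0, 3.0), (2.0, 3.0),
     (-2.0, -2.0), (-3.0, -3.0), (-2.0, -3.0)]
y = [1, 1, 1, -1, -1, -1]
w, b = train_linear_svm(X, y)
```

In practice each extracted moving object would be resized to 64 × 128 pixels, converted to its HOG descriptor and passed to `predict`.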

4. Pedestrians tracking and behavior judgment

Figure 4. The recognition result of the video sequence.

After distinguishing pedestrians from other moving objects, we need to track them to get their location and direction of movement, and then judge whether each pedestrian is running the red light. The color of a pedestrian's clothes is almost fixed while crossing the road, so the color histogram is a very good feature for distinguishing different moving objects. The motion of a pedestrian is continuous in trajectory and space, so we choose the location and trajectory as the motion features of the pedestrian. We combine the color histogram, location and trajectory of the pedestrian to track the moving pedestrian.

4.1. The introduction of tracking features

The color histogram [7] is a statistical feature of the whole image. It uses the global color distribution to describe a picture and represents the image globally. A pedestrian is a non-rigid moving object whose shape and size change unpredictably between frames, but whose clothes color is almost constant, and the color histogram represents a pedestrian's clothes color very well. The RGB color space does not match the way colors are perceived in the real world and is easily influenced by sunlight, whereas the HSV color space is based on the perception of human eyes and captures the object's color information. So in our experiment, the first step is to convert the RGB color image to the HSV color space, and we then use only the color histogram of the H channel. The distance between the color histograms of two objects is calculated as the Euclidean distance

$$d_C(i, j) = \sqrt{\sum_{k} \left(h_i(k) - h_j(k)\right)^2},$$

where $h_i$ and $h_j$ are the H-channel histograms of the two objects and $k$ is the bin index.
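The color feature can be sketched with Python's standard `colorsys` module. The number of bins (16) and the normalization of the histogram to unit sum are assumptions; the text only states that the H channel and the Euclidean distance are used.

```python
import colorsys
import math

def hue_histogram(pixels, bins=16):
    """Normalized histogram of the HSV hue of (R, G, B) pixels in 0..255."""
    hist = [0.0] * bins
    for r, g, b in pixels:
        h, _, _ = colorsys.rgb_to_hsv(r / 255.0, g / 255.0, b / 255.0)
        hist[min(int(h * bins), bins - 1)] += 1.0
    total = sum(hist)
    return [v / total for v in hist] if total else hist

def histogram_distance(h1, h2):
    """Euclidean distance between two histograms with the same bins."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(h1, h2)))

# Hypothetical patches: bright red, darker red, and blue clothing.
red = hue_histogram([(255, 0, 0)] * 4)
dark_red = hue_histogram([(200, 0, 0)] * 4)
blue = hue_histogram([(0, 0, 255)] * 4)
```

The bright and dark red patches share the same hue, so their distance is zero, which is the robustness to brightness that motivates using the H channel.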

Location means the center coordinate $(x, y)$ of the moving object, and the location distance between two objects is the distance between their center coordinates.

Trajectory information can be described by the smoothness of direction and velocity. The smoothness of the ith object is computed from its displacements between consecutive frames and is the sum of two parts: the first part is the smoothness of direction and the second part is the smoothness of velocity. We treat them equally, so they are added without weights. The trajectory distance in the distance matrix is set from this smoothness.
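The two motion features can be sketched as follows. The Euclidean form of the location distance and, in particular, the smoothness formula are assumptions: the formula shown is one common path-coherence form (the cosine of the turning angle plus a ratio that equals 1 when the speed is unchanged), added without weights as the text describes, and is not necessarily the authors' exact expression.

```python
import math

def location_distance(c1, c2):
    """Euclidean distance between two object centers (assumed metric)."""
    return math.hypot(c1[0] - c2[0], c1[1] - c2[1])

def smoothness(p_prev, p_curr, p_next):
    """Direction term plus speed term for three consecutive centers.

    Higher values mean a smoother trajectory; the maximum is 2.0.
    """
    v1 = (p_curr[0] - p_prev[0], p_curr[1] - p_prev[1])
    v2 = (p_next[0] - p_curr[0], p_next[1] - p_curr[1])
    n1, n2 = math.hypot(*v1), math.hypot(*v2)
    if n1 == 0.0 or n2 == 0.0:
        return 0.0  # assumption: a stationary step gives no smoothness evidence
    direction = (v1[0] * v2[0] + v1[1] * v2[1]) / (n1 * n2)
    speed = 2.0 * math.sqrt(n1 * n2) / (n1 + n2)
    return direction + speed

straight = smoothness((0, 0), (1, 0), (2, 0))   # constant velocity
turn = smoothness((0, 0), (1, 0), (1, 1))       # right-angle turn, same speed
```

A candidate match that continues the previous motion scores higher than one that requires a sharp turn or a sudden change of speed.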

4.2. Tracking and matching method

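We construct a distance matrix $D = (d_{ij})$ between the tracked objects and the objects detected in the new frame. Following [8], we construct the correspondence matrix $C$ of the distance matrix $D$, with all elements initially zero.

The matching steps are as follows:

1) For every row of the matrix $D$, find the minimum element; the corresponding element of the correspondence matrix is increased by one.

2) For every column of the matrix $D$, find the minimum element; the corresponding element of the correspondence matrix is increased by one.

3) If the element $c_{ij}$ in the matrix $C$ equals 2, the ith tracked object and the jth object in the new frame are the same object and are matched successfully. The objects that have not been matched are either new objects coming into the image or objects that have left the image.

The element $d_{ij}$ of the distance matrix is the distance between the ith tracked pedestrian and the jth pedestrian in the new frame. It contains the color histogram distance, the location distance and the trajectory smoothness information. Each component distance is normalized (the distances between the jth object in the new frame and all the tracked objects are calculated and normalized).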
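The matching steps can be sketched as a search for mutual minima in the distance matrix: a pair is accepted when it is the smallest entry of both its row and its column, so its correspondence count reaches 2. The function name and the example distances are hypothetical.

```python
def match_objects(dist):
    """dist[i][j]: distance between tracked object i and new detection j.

    Returns the (i, j) pairs whose correspondence count equals 2.
    """
    if not dist or not dist[0]:
        return []
    rows, cols = len(dist), len(dist[0])
    corr = [[0] * cols for _ in range(rows)]
    for i in range(rows):                       # step 1: row minima
        j = min(range(cols), key=lambda c: dist[i][c])
        corr[i][j] += 1
    for j in range(cols):                       # step 2: column minima
        i = min(range(rows), key=lambda r: dist[r][j])
        corr[i][j] += 1
    return [(i, j) for i in range(rows) for j in range(cols)
            if corr[i][j] == 2]                 # step 3: mutual minima

# Hypothetical distances: two tracked pedestrians, three detections.
dist = [[0.1, 0.9, 0.8],
        [0.7, 0.2, 0.9]]
matches = match_objects(dist)
```

Here the third detection is matched to no tracked object, so it would be treated as a new object entering the image.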

4.3. Detect the pedestrians who run the red light

After the objects are detected and tracked, we mark every pedestrian, as shown in Figure 5. We first set an alarmed area. While the red light is on, if a tracked pedestrian enters the alarmed area, we flag the pedestrian as running the red light and store the pictures of the pedestrian. Meanwhile, we can give the pedestrians some advice, educating them to be careful and not to run red lights.

Figure 5. Four pedestrians marked out for walking into the alarmed area.
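The decision rule can be sketched as a simple test of tracked centers against the alarmed area while the signal is red. The rectangular shape of the area, the dictionary of track identifiers and the function names are assumptions; the text does not specify how the area or the signal state is represented.

```python
def in_alarm_area(point, area):
    """area is a rectangle (x_min, y_min, x_max, y_max) in image coordinates."""
    x, y = point
    x_min, y_min, x_max, y_max = area
    return x_min <= x <= x_max and y_min <= y <= y_max

def red_light_violations(tracks, area, red_light_on):
    """Return the ids of tracked pedestrians inside the area during red.

    tracks maps a track id to the current center (x, y) of that pedestrian.
    """
    if not red_light_on:
        return []
    return sorted(tid for tid, center in tracks.items()
                  if in_alarm_area(center, area))

# Hypothetical frame: three tracked pedestrians and one alarmed area.
tracks = {1: (50, 60), 2: (200, 60), 3: (55, 10)}
area = (40, 40, 120, 100)
violators = red_light_violations(tracks, area, red_light_on=True)
```

Only the pedestrian whose center lies inside the rectangle is flagged, and nobody is flagged while the light is not red.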

We have done our experiments in a school playground and at traffic junctions. The accuracy of detecting and tracking pedestrians in the school playground is above 80%. We then applied the method at traffic junctions, using 6 video sequences taken at 3 traffic junctions; at every junction we recorded two 15-minute surveillance video sequences. The accuracy of detecting pedestrians who run the red light is above 60%.

5. Conclusion

We apply pedestrian recognition in video sequences to road safety by detecting the moving pedestrians and tracking them properly. While the red light is on, if we detect pedestrians entering the alarmed area, we send this information as an alarm to the drivers and the pedestrians to improve road traffic safety. The system works well to some extent on the real traffic video that we have analyzed, so it is feasible to apply pedestrian recognition in video sequences to improve road safety. In the future we will focus on improving the accuracy of detection and tracking. We also plan to add a prediction module to the system: before a person runs the red light, it would use certain conditions to judge whether the pedestrian will run the red light, or give the probability that the pedestrian will do so.

Acknowledgements

This paper was partially supported by The National Natural Science Foundation (61374040), Key Discipline of Shanghai (S30501), Scientific Innovation program (13ZZ115), Graduate Innovation program of Shanghai (54-13-302-102).

References

- Stauffer, C. and Grimson, W.E.L. (1999) Adaptive Background Mixture Models for Real-Time Tracking. IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Fort Collins, 23-25 June 1999.

- Wohler, C., Kressel, U. and Anlauf, J.K. (2000) Pedestrian Recognition by Classification of Image Sequences: Global Approaches vs. Local Spatio-Temporal Processing. 15th International Conference on Pattern Recognition, Barcelona, 3-7 September 2000, 540-544.

- Gavrila, D.M. (2000) Pedestrian Detection from a Moving Vehicle. In: Vernon, D., Ed., Computer Vision—ECCV 2000, Springer, Berlin, 37-49. http://dx.doi.org/10.1007/3-540-45053-X_3

- Dalal, N. and Triggs, B. (2005) Histograms of Oriented Gradients for Human Detection. IEEE Computer Society Conference on Computer Vision and Pattern Recognition, San Diego, 20-25 June 2005, 886-893.

- Chang, F.L., Ma, L. and Qiao, Y.Z. (2007) Human Oriented Multi-Target Tracking Algorithm in Video Sequence. Control and Decision, 22, 418-422.

- Liu, G.C. and Wang, Y.J. (2009) An Algorithm of Multi-Target Tracking Based on Improved Particle Filter. Control and Decision, 22, 317-320.

- Takala, V. and Pietikainen, M. (2007) Multi-Object Tracking Using Color, Texture and Motion. IEEE Conference on Computer Vision and Pattern Recognition, Minneapolis, 17-22 June 2007, 1-7.

- Yang, T., Pan, Q. and Li, J. (2005) Real-Time Multiple Objects Tracking with Occlusion Handling in Dynamic Scenes. IEEE Conference on Computer Vision and Pattern Recognition, 20-25 June 2005, 970-975.