Psychology

Vol. 8 No. 9 (2017), Article ID: 77842, 11 pages

10.4236/psych.2017.89092

Down Syndrome Cognitive Constraints to Recognize Negative Emotion Face Information: Eye Tracking Correlates

Ernesto Octavio Lopez-Ramirez1, Guadalupe Elizabeth Morales-Martinez2, Yanko Norberto Mezquita-Hoyos3, Daniel Velasco Moreno2

1Cognitive Science Laboratory, Department of Psychology, Nuevo Leon Autonomous University (UANL), Monterrey, Mexico

2Cognitive Science Laboratory, Institute of Research on the University and Education, National Autonomous University of Mexico (UNAM; IISUE), Mexico City, Mexico

3Department of Psychology, Yucatan Autonomous University (UADY), Merida, Mexico

Copyright © 2017 by authors and Scientific Research Publishing Inc.

This work is licensed under the Creative Commons Attribution International License (CC BY 4.0).

http://creativecommons.org/licenses/by/4.0/

Received: June 20, 2017; Accepted: July 21, 2017; Published: July 24, 2017

ABSTRACT

Eye-gaze correlates of emotional face recognition were obtained from a sample of individuals with Down syndrome (DS) and from a sample of typical individuals in order to look for differences in gaze patterns between the two groups. The goal was to determine whether face-scanning patterns might be related to different styles of automatic cognitive processing of emotional face information. First, after IQ screening, participants completed an affective priming study. This emotion recognition study made it possible to select DS participants showing the typical difficulty in recognizing negative faces. Both samples then took part in a formative eye-tracking study to identify the gaze correlates characterizing each group. Results showed that participants with DS have atypical eye-fixation patterns when recognizing emotional faces. In particular, they seem to intentionally avoid fixating on the eyes in the photographs of emotional faces presented to them. This face-scanning pattern might contribute to their difficulty in recognizing negative facial information. It is argued that this kind of cognitive processing of emotional facial information reflects an acquired affective style.

Keywords:

Down Syndrome, Emotional Facial Recognition, Eye Tracking Correlates, Affective Priming Paradigm

1. Introduction

Our ability to recognize facial expressions of emotion, such as a happy or an angry face, and to discriminate them from neutral expressions begins to develop early in life (around 4 to 9 months of age; Williams, Wishart, Pitcairn, & Willis, 2005). Growing expertise in facial recognition seems to be related to general aspects of cognitive and perceptual development (Mondloch, Maurer, & Ahola, 2006). In recent years, interest has therefore emerged in exploring the effects of atypical cognitive development on facial recognition abilities (see Morales & Lopez, 2013). For example, several studies have shown that most people with Down syndrome (PWDS; see Wishart & Pitcairn, 2000; Pitcairn & Wishart, 2000), autism (for reviews, see Turk & Cornish, 1998; Uljarevic & Hamilton, 2012; Weigelt, Koldewyn, & Kanwisher, 2012) or Williams syndrome (e.g., Porter, Coltheart, & Langdon, 2007; Plesa-Skwerer, Faja, Schofield, Verbalis, & Tager-Flusberg, 2006) have difficulty recognizing some kinds of emotional facial expressions.

Since recognizing emotional faces has a relevant influence on establishing and maintaining social relationships (Williams et al., 2005), low accuracy in this ability prevents people from taking up opportunities to establish prosocial interactions and from avoiding potential social dangers (e.g., Marsh, Kozak, & Ambady, 2007). Persons who have difficulty recognizing negative emotions linked to social disapproval (e.g., fear, anger) may experience segregation or discrimination. For instance, many PWDS have difficulty keeping a proper social distance from others (they frequently come too close; e.g., Porter et al., 2007), which makes them prone to social discrimination. This behavior has been related to difficulties in recognizing facial information. For example, in emotional face recognition studies, children with DS obtain lower recognition accuracy scores than typical children and than children and adolescents with intellectual disabilities of the same mental age (Williams et al., 2005; Wishart, Cebula, Willis, & Pitcairn, 2007). These difficulties arise in particular with the emotions of fear and surprise (Wishart & Pitcairn, 2000). Complementary research suggests the possibility of a DS-specific cognitive information processing style tuned to discriminate negative faces from other emotional faces (Conrad, Schmidt, Niccols, Polak, Riniolo, & Burack, 2007; Morales & Lopez, 2010; Morales, Lopez, Castro, Charles, & Mezquita, 2014).

Recently, a set of affective priming studies was carried out to explore the affective style PWDS use to recognize emotional face information. First, participants' abilities to identify and categorize emotions were assessed (Morales & Lopez, 2010; Morales et al., 2014); then, familiarity effects on emotional face recognition were explored (Morales & Lopez, 2010). Finally, their affective style for recognizing emotional face information (configural vs. analytic) was determined (Morales, 2010). Generally speaking, these results showed that: a) as is the case with typical individuals, DS study participants showed different styles of emotional face recognition. Interestingly, difficulty in recognizing negative faces was not a characteristic of all DS participants. Furthermore, the capacity shown by some PWDS to correctly categorize negative facial emotions did not extend to the whole spectrum of negative information: some DS participants found it harder to recognize anger and fear (especially in female faces), whereas others found it harder to categorize faces showing sadness (Morales et al., 2014); b) PWDS seem to process familiar positive faces differently from unfamiliar positive faces; and c) a configural face recognition style characterizes most DS study samples, as is the case with typical adults (e.g., Shimamura, Ross, & Bennett, 2006).

In a set of neurocomputational face recognition studies using faces of people with DS and faces from the typical population (Morales & Lopez, 2011), implicit emotional face information supported about 80% accurate recognition of typical faces and around 70% of DS emotional faces. This raised the question of whether DS difficulties in recognizing negative faces might be related to specific gaze patterns over implicit face information. A follow-up eye-tracking study, added to the emotional face recognition reaction-time studies mentioned above, could therefore help answer this open question. Eye-tracking studies characterizing the gaze patterns of people with intellectual and developmental disabilities have already provided insightful information. For example, Hedley, Young, and Brewer (2012) found that people with autism present different difficulties when processing explicit versus implicit emotional face information. It is not known whether similar results can be found in a DS population.

This study aims to explore implicit affective facial recognition using eye-tracking correlates, on the hypothesis that a specific pattern of scanning facial information distinguishes PWDS from the typical population. Schurgin and colleagues (2014) have shown that typical individuals pay more attention to salient facial features (such as the eyes, nose and mouth) than to other facial regions, but spend more time looking at the mouth when recognizing happy faces and more time on the eyes for angry, sad and fearful faces. Whether this emotional face recognition pattern applies to PWDS remains unknown. Moreover, it is assumed that DS scanning patterns for negative faces should be consistent with a failure to detect the distinctive characteristics of negative face information (e.g., no fixation time on the eyes and eyebrows). To explore this, the following experimental procedures were implemented.

2. Method

Since a robust body of evidence suggests that persons with DS have some difficulties recognizing facial emotions (for a review, see Morales & Lopez, 2013), a formative eye-tracking study is expected to readily detect gaze patterns characteristic of this population. Thus, this study seeks an immediate qualitative identification of face recognition differences between DS and typical participants rather than a summative, inferential analysis. However, as discussed later, finding empirical evidence of gaze patterns that characterize DS negative face recognition is expected to yield relevant theoretical insights into DS emotional face recognition difficulties.

To accomplish this research goal, both samples completed an affective priming study and an eye-tracking study. The affective priming study served as a control to ensure that typical participants were capable of differential automatic processing of valenced face stimuli (Musch & Klauer, 2003), whereas DS participants were not capable of automatic cognitive processing of negative face stimuli (Morales et al., 2014).

2.1. Participants

According to Pernice and Nielsen (2009), 30 to 32 participants are needed to produce a "stable" heat map that represents the gaze behavior of all users in a study. However, as Bojko (2013) has pointed out, whenever the average likelihood of detecting a problem in a formative study (problem discoverability) is high, the required sample size is low. Table 1 shows the relation between sample size and the probability of detecting the problem being searched for (Sauro & Lewis, 2012).

Thus, based on Table 1, we expected at least a 90% chance of finding gaze patterns specific to Down syndrome, and we included an initial sample of ten young PWDS. However, after IQ screening, only two of them were retained for the affective priming study and the eye-tracking study. The final comparison of both samples in the formative eye-tracking study therefore involved two typical female participants (out of eight), aged 21 to 22 years, and two PWDS (out of ten): a 17-year-old female and a 25-year-old male.
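The relation summarized in Table 1 follows the cumulative binomial reasoning used by Sauro and Lewis (2012): if a pattern shows up in any single participant with probability p, the chance of observing it at least once among n participants is 1 - (1 - p)^n. The sketch below, a minimal illustration rather than the authors' own procedure, finds the smallest n that reaches a target detection probability. The discoverability values in the example are hypothetical, since the entries of Table 1 are not reproduced here.

```python
def detection_probability(p: float, n: int) -> float:
    """Probability that an issue with per-participant discoverability p
    is observed at least once among n participants: 1 - (1 - p)**n."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be in [0, 1]")
    return 1.0 - (1.0 - p) ** n


def required_sample_size(p: float, target: float = 0.90, max_n: int = 1000) -> int:
    """Smallest n whose detection probability reaches `target`.

    Iterates instead of using ceil(log(1 - target) / log(1 - p)) to avoid
    floating-point surprises at exact boundaries.
    """
    if not 0.0 < p <= 1.0:
        raise ValueError("p must be in (0, 1]")
    for n in range(1, max_n + 1):
        if detection_probability(p, n) >= target:
            return n
    raise ValueError("target not reached within max_n participants")


if __name__ == "__main__":
    # Hypothetical discoverability values, for illustration only.
    for p in (0.2, 0.3, 0.5):
        print(p, required_sample_size(p, target=0.90))
```

For instance, with a hypothetical discoverability of 0.2, ten participants give 1 - 0.8^10 ≈ 0.89, just short of 90%, while eleven reach it; with 0.5, four participants suffice.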

Psychometric Control

Wechsler scales (WAIS-IV) were used to assess participants' IQ. In addition, an instrument was developed (Multidimensional Assessment of Emotion-I: MAE-1) to capture demographic information (age, gender, health, etc.), mood history (possible emotional disorders, current mood states, most frequent mood state), and emotion dimensions (conceptualization, experience, self-regulation and perceived emotion, as well as face recognition capacities such as emotion naming, emotion identification and emotion discrimination).

Table 1. Sample size specification according to problem discoverability (based on Sauro & Lewis, 2012).

The DS male had an IQ score of 60, whereas the DS female had a score of 61. Several checks were carried out to verify that both had sufficient attentional capacity for the two studies. As for the typical participants, the first obtained an IQ score of 120, whereas the second obtained a score of 104.

2.2. Instruments and Stimuli

2.2.1. The Affective Priming Study

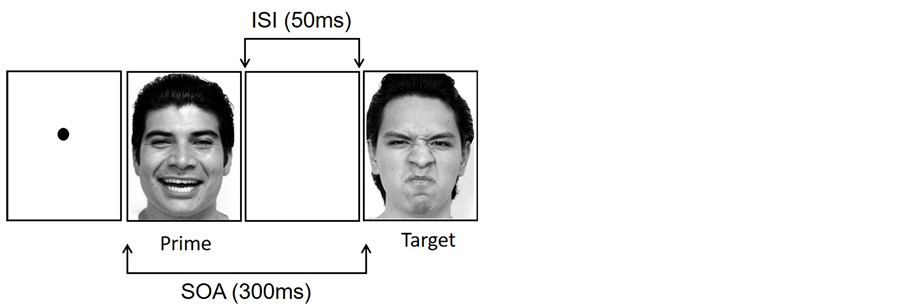

The selected affective face stimuli were arranged in prime-target pairs to create 135 experimental trials. Each trial presented the two faces consecutively, and the stimulus onset asynchrony (SOA; the interval from the onset of the first stimulus to the onset of the second stimulus) was controlled. The inter-stimulus interval (ISI; the blank time between the two stimuli) was also controlled. Manipulating the ISI and the SOA induces either automatic or controlled cognitive processing; in this study, both temporal parameters were set to elicit automatic processing. The experimental trials were presented on a computer using SuperLab Pro 5. Figure 1 illustrates this experimental manipulation.
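The two timing parameters are linked: when the prime disappears before the target appears, SOA = prime duration + ISI. The paper does not report the values it used, so the sketch below works with hypothetical durations. The 300 ms cutoff for "automatic" processing is likewise an assumption for illustration; affective priming studies commonly rely on short SOAs of a few hundred milliseconds or less to limit strategic processing of the prime.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PrimingTrial:
    """One prime-target trial; all durations in milliseconds (hypothetical)."""
    prime_ms: int  # how long the prime face stays on screen
    isi_ms: int    # blank interval between prime offset and target onset

    @property
    def soa_ms(self) -> int:
        # SOA runs from prime onset to target onset = prime duration + ISI.
        return self.prime_ms + self.isi_ms

    def target_onset_ms(self, trial_start_ms: int = 0) -> int:
        """Absolute target onset, given when the prime appeared."""
        return trial_start_ms + self.soa_ms


def favors_automatic_processing(trial: PrimingTrial, max_soa_ms: int = 300) -> bool:
    """Hypothetical rule of thumb: treat SOAs at or below max_soa_ms as short
    enough to limit controlled (strategic) processing of the prime."""
    return trial.soa_ms <= max_soa_ms


if __name__ == "__main__":
    short_trial = PrimingTrial(prime_ms=200, isi_ms=50)
    long_trial = PrimingTrial(prime_ms=200, isi_ms=800)
    print(short_trial.soa_ms, favors_automatic_processing(short_trial))
    print(long_trial.soa_ms, favors_automatic_processing(long_trial))
```

Keeping SOA as a derived property rather than a third stored field means prime duration, ISI and SOA cannot drift out of agreement when one of them is changed.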

2.2.2. The Eye Tracking Study

To record eye movements, we used an SMI (SensoMotoric Instruments) RED 500 Hz eye-tracking system. Both eyes were recorded. For the control group (typical individuals), only eye data from correctly recognized emotions were analyzed, whereas for the experimental group the experimental task was treated only as a gaze-capture mechanism. The distance from the floor to participants' eyes ranged from 43.30 to 55.11 inches, with a standalone RED eye tracker (20-degree visual angle) placed 15 inches from a 21-inch widescreen monitor. Before the eye-tracking experiment began, participants completed a calibration procedure using the SensoMotoric Instruments iView 2.8 system to ensure that the eye tracker was adequately tracking gaze. In this procedure, all participants were asked to follow a flashing dot as it appeared at five locations. If calibration was unsuccessful, the monitor and chair were adjusted until proper calibration was achieved.
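Stimulus and tracking geometry are usually expressed in degrees of visual angle, θ = 2·arctan(s / (2d)), where s is the size of an object on screen and d the viewing distance, both in the same units. The helper below is a generic sketch of that formula and its inverse; the example values are hypothetical and do not describe this study's setup.

```python
import math


def visual_angle_deg(size: float, distance: float) -> float:
    """Visual angle (degrees) subtended by an object of `size` viewed
    from `distance`; both arguments must use the same unit."""
    if size < 0 or distance <= 0:
        raise ValueError("size must be >= 0 and distance > 0")
    return math.degrees(2.0 * math.atan(size / (2.0 * distance)))


def size_for_angle(angle_deg: float, distance: float) -> float:
    """Inverse: on-screen size needed to subtend `angle_deg` at `distance`."""
    return 2.0 * distance * math.tan(math.radians(angle_deg) / 2.0)


if __name__ == "__main__":
    # Hypothetical: a 6-inch-wide face photograph viewed from 24 inches.
    print(round(visual_angle_deg(6.0, 24.0), 2))
    # Hypothetical: width that spans 20 degrees at 15 inches.
    print(round(size_for_angle(20.0, 15.0), 2))
```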

Figure 1. Illustration of an affective priming experimental trial.

Following calibration, participants were presented with a practice block of 10 emotional face images (five female and five male faces). Then five experimental blocks, each containing twenty different female and male emotional faces displaying happy, fearful, angry, surprised and neutral expressions, were presented once in randomized order. Each face was shown for 4500 ms, followed by a 350 ms inter-stimulus interval during which the screen was blank white. Eye-tracking methods of this kind have proved reliable for cognitive specification (Duchowski, 2007; Luna, Marek, Larsen, Tervo-Clemmens, & Chahal, 2015; Eckstein, Guerra, Singley, & Bunge, 2016).
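The presentation schedule described above (five blocks of twenty faces, each face shown for 4500 ms and followed by a 350 ms blank screen) can be sketched as follows. The stimulus names and the four-faces-per-emotion split are hypothetical placeholders, and shuffling both block order and face order within blocks is an assumption about how the randomization was done; the text specifies only the timings and that order was randomized.

```python
import random
from typing import List, Tuple

FACE_MS = 4500  # each face on screen (from the text)
BLANK_MS = 350  # blank white inter-stimulus interval (from the text)


def build_schedule(blocks: List[List[str]], seed: int = 0) -> List[Tuple[int, int, str]]:
    """Return (onset_ms, block_index, stimulus) tuples.

    Assumption: both block order and face order within each block are
    shuffled; onsets are measured from the start of the first block and
    advance by FACE_MS + BLANK_MS per face, with no breaks between blocks.
    """
    rng = random.Random(seed)  # seeded so a given schedule can be reproduced
    block_order = list(range(len(blocks)))
    rng.shuffle(block_order)
    schedule: List[Tuple[int, int, str]] = []
    t = 0
    for b in block_order:
        faces = list(blocks[b])
        rng.shuffle(faces)
        for face in faces:
            schedule.append((t, b, face))
            t += FACE_MS + BLANK_MS
    return schedule


if __name__ == "__main__":
    emotions = ["happy", "fearful", "angry", "surprise", "neutral"]
    # Hypothetical stimulus IDs: 20 faces per block, 4 per emotion.
    blocks = [[f"b{b}_{e}_{i}" for e in emotions for i in range(4)] for b in range(5)]
    sched = build_schedule(blocks, seed=42)
    last_onset = sched[-1][0]
    print(len(sched), (last_onset + FACE_MS) / 60000)  # faces, minutes
```

Under these assumptions, the last of the 100 faces goes off at 99 × 4850 + 4500 = 484,650 ms, roughly eight minutes of presentation, excluding the practice block and any breaks.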

3. Results

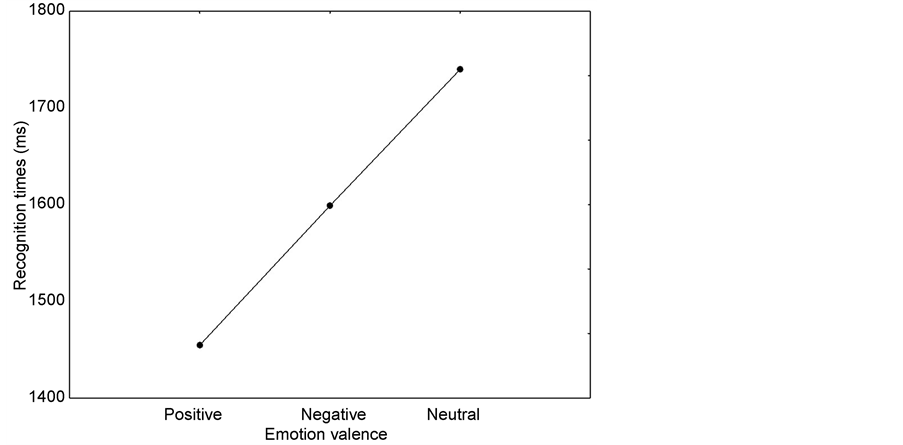

For typical participants' data to be included in the analysis, they had to reach at least 90% correct hits. A within-subjects ANOVA was carried out on data from three experimental conditions (positive words, negative words and neutral faces). As expected, a significant main effect of stimulus valence was obtained, F(2, 2) = 65.29, p = 0.01 (see Figure 2).
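For readers who want to reproduce this kind of test, the sketch below computes a one-way within-subjects (repeated-measures) ANOVA from a participants × conditions table. It is a generic textbook computation, not the authors' analysis script, and the data in the example are hypothetical. With n participants and k conditions the degrees of freedom are k - 1 and (k - 1)(n - 1), so two participants and three conditions give F(2, 2). To stay dependency-free, the p-value uses the closed-form tail that exists when the numerator has 2 degrees of freedom, P(F > f) = (1 + 2f/df2)^(-df2/2); other designs would need a general F-distribution routine.

```python
from typing import List, Tuple


def rm_anova(data: List[List[float]]) -> Tuple[float, int, int, float]:
    """One-way repeated-measures (within-subjects) ANOVA.

    data[i][j] is the score of participant i in condition j.
    Returns (F, df_conditions, df_error, p). The p-value uses the closed-form
    F survival function, which is only valid when df_conditions == 2.
    """
    n = len(data)                     # participants
    k = len(data[0]) if data else 0   # conditions
    if n < 2 or k < 2 or any(len(row) != k for row in data):
        raise ValueError("need a complete table with >= 2 participants and >= 2 conditions")

    grand = sum(sum(row) for row in data) / (n * k)
    cond_means = [sum(row[j] for row in data) / n for j in range(k)]
    subj_means = [sum(row) / k for row in data]

    ss_total = sum((x - grand) ** 2 for row in data for x in row)
    ss_cond = n * sum((m - grand) ** 2 for m in cond_means)
    ss_subj = k * sum((m - grand) ** 2 for m in subj_means)
    ss_error = ss_total - ss_cond - ss_subj  # condition x participant interaction
    if ss_error <= 0:
        raise ValueError("zero error variance: F is undefined")

    df_cond = k - 1
    df_error = (k - 1) * (n - 1)
    f = (ss_cond / df_cond) / (ss_error / df_error)

    if df_cond != 2:
        raise NotImplementedError("closed-form p-value implemented only for df_conditions == 2")
    # For F(2, d2): P(F > f) = (1 + 2f / d2) ** (-d2 / 2)
    p = (1.0 + 2.0 * f / df_error) ** (-df_error / 2.0)
    return f, df_cond, df_error, p


if __name__ == "__main__":
    # Hypothetical scores: rows = participants; columns = positive, negative, neutral.
    f, df1, df2, p = rm_anova([[1, 2, 6], [2, 4, 6]])
    print(f"F({df1}, {df2}) = {f:.2f}, p = {p:.3f}")  # F(2, 2) = 21.00, p = 0.045
```

With df = (2, 2) the tail formula reduces to p = 1 / (1 + F); for the reported F = 65.29 this gives p ≈ 0.015.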

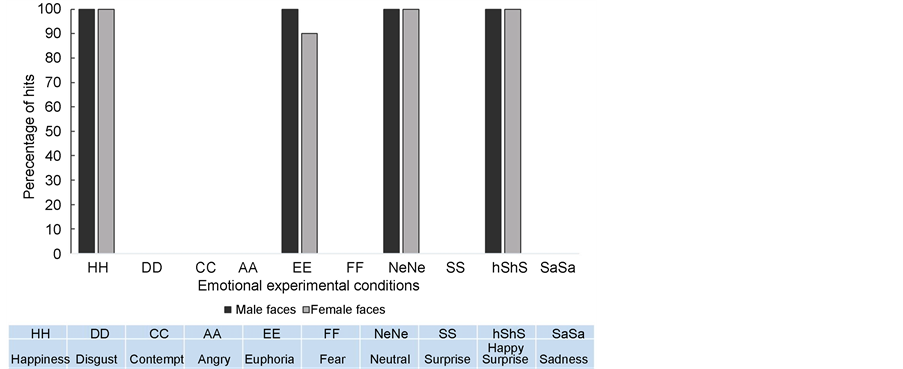

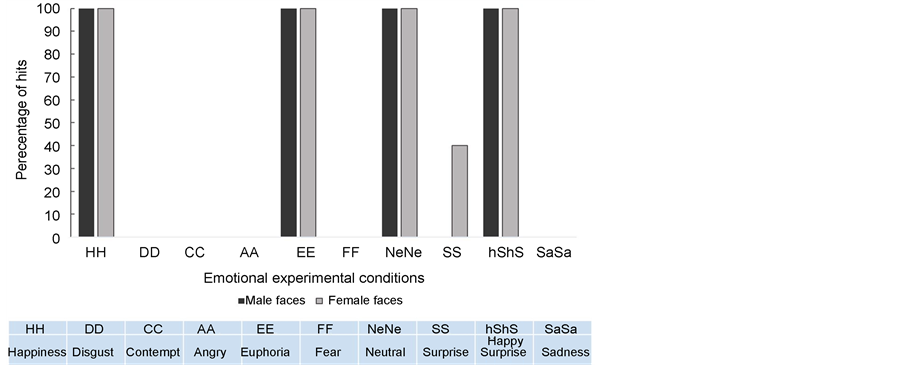

Also as expected, DS participants had difficulty recognizing negative facial information. Figure 3 shows this for the DS female participant.

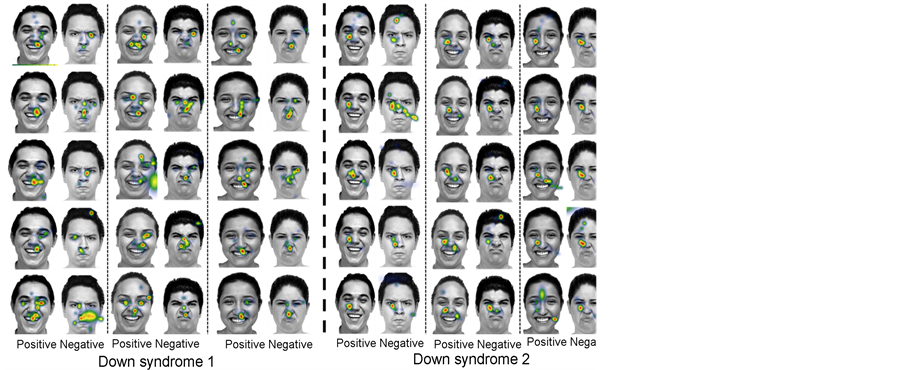

Figure 4 and Figure 5 show the eye-fixation times of both samples as heat maps. Rather than presenting metrics averaged across facial stimuli, both figures show selected positive and negative faces from the experimental blocks to support qualitative scrutiny.
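Fixation heat maps of this kind are commonly built by placing a Gaussian kernel at each fixation, weighting it by the fixation's duration, and summing over a pixel grid. The sketch below shows that idea on a coarse grid; the kernel width, grid size and fixation list are hypothetical, and the heat maps in the figures were not necessarily produced this way (the paper does not describe the eye-tracking software's algorithm).

```python
import math
from typing import List, Tuple

Fixation = Tuple[float, float, float]  # (x, y, duration_ms) in grid units


def fixation_heatmap(fixations: List[Fixation], width: int, height: int,
                     sigma: float = 2.0) -> List[List[float]]:
    """Duration-weighted Gaussian heat map on a width x height grid.

    Each fixation adds duration * exp(-d^2 / (2 sigma^2)) to every cell,
    where d is the distance from the cell center to the fixation point.
    The result is normalized so its maximum is 1.0 when any fixation
    has positive duration.
    """
    if width <= 0 or height <= 0:
        raise ValueError("grid must be non-empty")
    grid = [[0.0] * width for _ in range(height)]
    for fx, fy, dur in fixations:
        for row in range(height):
            for col in range(width):
                d2 = (col + 0.5 - fx) ** 2 + (row + 0.5 - fy) ** 2
                grid[row][col] += dur * math.exp(-d2 / (2.0 * sigma ** 2))
    peak = max(max(r) for r in grid)
    if peak > 0:
        grid = [[v / peak for v in r] for r in grid]
    return grid


if __name__ == "__main__":
    # Hypothetical fixations on an 11 x 16 grid: a long one near the eyes,
    # a shorter one near the mouth.
    hm = fixation_heatmap([(5.5, 3.5, 600), (5.5, 12.5, 200)], 11, 16, sigma=1.5)
    shades = " .:-=+*#%@"
    for r in hm:
        print("".join(shades[min(int(v * len(shades)), len(shades) - 1)] for v in r))
```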

Notice in Figure 5 that, compared with the typical participants, DS eye fixations on average did not concentrate on the salient facial features associated with negative emotions (such as the eyes or eyebrows). Instead, they seemed to focus on facial movement around the nose (the upper lip area, the nasolabial folds and the nose itself). This is especially true for DS2.
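The qualitative comparison above could be complemented by a simple area-of-interest (AOI) summary: the share of total fixation time falling inside rectangles drawn around the eyes, nose and mouth. The sketch below is illustrative only; the AOI coordinates and fixations are hypothetical, and in practice AOIs would be defined separately for each stimulus image.

```python
from typing import Dict, List, Tuple

Rect = Tuple[float, float, float, float]  # (left, top, right, bottom) in pixels
Fixation = Tuple[float, float, float]     # (x, y, duration_ms)


def dwell_proportions(fixations: List[Fixation], aois: Dict[str, Rect]) -> Dict[str, float]:
    """Share of total fixation time inside each AOI.

    Fixations outside every AOI are counted under "other". If AOIs
    overlap, a fixation is credited to the first matching AOI in dict order.
    """
    totals = {name: 0.0 for name in aois}
    totals["other"] = 0.0
    for x, y, dur in fixations:
        for name, (left, top, right, bottom) in aois.items():
            if left <= x <= right and top <= y <= bottom:
                totals[name] += dur
                break
        else:  # no AOI matched this fixation
            totals["other"] += dur
    grand = sum(totals.values())
    return {name: (t / grand if grand else 0.0) for name, t in totals.items()}


if __name__ == "__main__":
    # Hypothetical AOIs for a 400 x 500 face image.
    aois = {
        "eyes": (80, 140, 320, 210),
        "nose": (160, 210, 240, 300),
        "mouth": (130, 320, 270, 390),
    }
    # Hypothetical fixations (x, y, duration in ms).
    fixations = [(200, 250, 400), (190, 330, 300), (120, 170, 100), (50, 450, 200)]
    print(dwell_proportions(fixations, aois))
```

Comparing such proportions across positive and negative faces would give a numeric counterpart to the observation that DS fixations cluster around the nose rather than the eyes.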

Figure 2. Typical study participants showed a significant main effect of stimulus valence on the recognition of emotional face information.

Figure 3. Negative face recognition difficulty presented by a DS female participant.

Figure 4. Typical participants' fixation on salient facial features (such as the eyes, nose and mouth). When fixation times are averaged, typical participants spend more time looking at the mouth when recognizing happy faces and more time on the eyes for angry, sad and fearful faces.

Figure 5. Eye-fixation times of the male DS participant (DS1) compared with the eye-fixation pattern of the female DS participant (DS2) across different emotional face stimuli.

Figure 6. In contrast to the female DS participant, this DS male individual recognized negatively valenced surprise.

Overall, these results show distinctive eye-fixation patterns in the two samples. No distinctive or significant differences in DS eye fixations between positive and negative faces could be observed.

4. Discussion

In this study we sought to explore the eye-gaze patterns that distinguish DS emotional face recognition from that of typical individuals. In accordance with previous affective priming studies of automatic processing of emotional face information (Morales et al., 2014; Morales & Lopez, 2013; Morales & Lopez, 2010), DS participants were less accurate than typical individuals in categorizing negative face information. Furthermore, the two samples (DS vs. typical) showed different gaze patterns for static photographs of emotional faces.

Overall, the experimental group concentrated their fixations on the nasolabial folds and the regions near the nose. This gaze pattern is similar to the one reported by Farzin and colleagues (Farzin, Rivera, & Hessl, 2009), who conducted an eye-tracking study of the capacity of individuals with Fragile X Syndrome (FXS) to recognize emotional face stimuli. Their participants exhibited atypical fixation patterns on pictures of emotional faces; in particular, they avoided looking at the eyes. The authors suggested that these effects might extend to gaze avoidance in real-life situations. The same speculation can be derived from the current results, since DS participants seemed to deliberately avoid looking at the eyes in static photographs of faces.

Furthermore, the DS male behaved rather differently from the DS female participant. He recognized some negatively valenced information in the affective priming study, and his eye fixations also differed from those of his female counterpart. This opens the way to exploring DS gender preferences for emotional faces, or to determining whether female and male emotion recognition depends on particular kinds of affective processing.

It is worth noting that, even though DS gaze patterns for positive and negative face information are atypical, DS participants had no trouble recognizing positive face stimuli. They seem to achieve positive face recognition by fixating on the upper lip area and the nasolabial folds (see Figure 5 and Figure 6). Negative face recognition difficulties might not extend to the recognition of happiness, since the physiology of the two kinds of emotion seems to differ (Blair, Morris, Frith, Perrett, & Dolan, 1999).

This formative study illustrates how eye-tracking studies can help explore the cognitive-emotional processing of people with intellectual disabilities. Eye-gaze metrics may deepen our understanding of what distinguishes PWDS who recognize negative face information from those who do not. If so, it would become possible to explore whether some PWDS develop a cognitive filter that avoids eye contact in favor of a positive bias toward others. A cognitive specification of such an emotional style is needed, and procedures similar to those used in this paper can serve this purpose (see also Luna et al., 2015; Eckstein et al., 2016).

Acknowledgements

This research was funded by the Consejo Nacional de Ciencia y Tecnología (CONACYT).

Cite this paper

Lopez-Ramirez, E. O., Morales-Martinez, G. E., Mezquita-Hoyos, Y. N., & Moreno, D. V. (2017). Down Syndrome Cognitive Constraints to Recognize Negative Emotion Face Information: Eye Tracking Correlates. Psychology, 8, 1403-1413. https://doi.org/10.4236/psych.2017.89092

References

1. Blair, R. J. R., Morris, J. S., Frith, C. D., Perrett, D. I., & Dolan, R. J. (1999). Dissociable Neural Responses to Facial Expressions of Sadness and Anger. Brain, 122, 883-893. https://doi.org/10.1093/brain/122.5.883

2. Bojko, A. (2013). Eye Tracking the User Experience: A Practical Guide to Research. Brooklyn, NY: Rosenfeld Media.

3. Conrad, N. J., Schmidt, L. A., Niccols, A., Polak, C. P., Riniolo, T. C., & Burack, J. A. (2007). Frontal Electroencephalogram Asymmetry during Affective Processing in Children with Down Syndrome: A Pilot Study. Journal of Intellectual Disability Research, 51, 988-995. https://doi.org/10.1111/j.1365-2788.2007.01010.x

4. Duchowski, A. T. (2007). Eye Tracking Methodology: Theory and Practice (2nd ed.). London: Springer.

5. Eckstein, M. K., Guerra, C. B., Singley, M. A. T., & Bunge, S. A. (2016). Beyond Eye Gaze: What Else Can Eye Tracking Reveal about Cognition and Cognitive Development? Developmental Cognitive Neuroscience. http://www.sciencedirect.com/science/article/pii/S1878929316300846

6. Farzin, F., Rivera, S. M., & Hessl, D. (2009). Visual Processing of Faces in Individuals with Fragile X Syndrome: An Eye Tracking Study. Journal of Autism and Developmental Disorders, 39, 946-952. https://doi.org/10.1007/s10803-009-0744-1

7. Hedley, D., Young, R., & Brewer, N. (2012). Using Eye Movements as an Index of Implicit Face Recognition in Autism Spectrum Disorder. Autism Research, 5, 363-379. https://doi.org/10.1002/aur.1246

8. Luna, B., Marek, S., Larsen, B., Tervo-Clemmens, B., & Chahal, R. (2015). An Integrative Model of the Maturation of Cognitive Control. Annual Review of Neuroscience, 38, 151-170. https://doi.org/10.1146/annurev-neuro-071714-034054

9. Marsh, A. A., Kozak, M. N., & Ambady, N. (2007). Accurate Identification of Fear Facial Expressions Predicts Prosocial Behavior. Emotion, 7, 239-251. https://doi.org/10.1037/1528-3542.7.2.239

10. Mondloch, C. J., Maurer, D., & Ahola, S. (2006). Becoming a Face Expert. Psychological Science, 17, 930-934. https://doi.org/10.1111/j.1467-9280.2006.01806.x

11. Morales, G. E., Lopez, E. O., Castro, C., Charles, D. J., & Mezquita, Y. N. (2014). Contributions to the Cognitive Study of Facial Recognition on Down Syndrome: A New Approximation to Exploring Facial Emotion Processing Style. Journal of Intellectual Disability - Diagnosis and Treatment, 2, 124-132. https://doi.org/10.6000/2292-2598.2014.02.02.6

12. Morales, M. G. E., & Lopez, R. E. O. (2010). Down Syndrome and Automatic Processing of Familiar and Unfamiliar Emotional Faces. International Journal of Special Education, 25, 17-23. http://www.internationaljournalofspecialed.com/issues.php

13. Morales, M. G. E., & Lopez, R. E. O. (2011). Down Syndrome Emotion Face Recognition: A Connectionist Approach. Revista Mexicana de Psicología Educativa, 1, 41-53. http://www.psicol.unam.mx/silviamacotela/Pdfs/RMPE_2(1)_075_087.pdf

14. Morales, M. G. E., & Lopez, R. E. O. (2013). Down Syndrome, beyond the Intellectual Disability: People with Their Own Emotional World. New York, NY: Nova Science Publishers.

15. Musch, J., & Klauer, K. C. (Eds.) (2003). The Psychology of Evaluation: Affective Processes in Cognition and Emotion. Mahwah, NJ: Lawrence Erlbaum Associates.

16. Pernice, K., & Nielsen, J. (2009). How to Conduct Eyetracking Studies. Nielsen Norman Group. https://media.nngroup.com/media/reports/free/How_to_Conduct_Eyetracking_Studies.pdf

17. Pitcairn, T. K., & Wishart, J. G. (2000). Face Processing in Children with Down Syndrome. In D. Weeks, R. Chua, & D. Elliott (Eds.), Perceptual-Motor Behavior in Down Syndrome (pp. 123-147). Champaign, IL: Human Kinetics.

18. Plesa-Skwerer, D., Faja, S., Schofield, C., Verbalis, A., & Tager-Flusberg, H. (2006). Perceiving Facial and Vocal Expressions of Emotion in Individuals with Williams Syndrome. American Journal on Mental Retardation, 111, 15-26. https://doi.org/10.1352/0895-8017(2006)111[15:PFAVEO]2.0.CO;2

19. Porter, M., Coltheart, M., & Langdon, R. (2007). The Neuropsychological Basis of Hypersociability in Williams and Down Syndrome. Neuropsychologia, 45, 2839-2849.

20. Sauro, J., & Lewis, J. R. (2012). Quantifying the User Experience: Practical Statistics for User Research. Amsterdam: Elsevier.

21. Schurgin, M. W., Nelson, J., Iida, S., Ohira, H., Chiao, J. Y., & Franconeri, S. L. (2014). Eye Movements during Emotion Recognition in Faces. Journal of Vision, 14, 1-16. https://doi.org/10.1167/14.13.14

22. Shimamura, A. P., Ross, J. G., & Bennett, H. D. (2006). Memory for Facial Expressions: The Power of a Smile. Psychonomic Bulletin & Review, 13, 217-222. https://doi.org/10.3758/BF03193833

23. Turk, J., & Cornish, K. (1998). Face Recognition and Emotion Perception in Boys with Fragile X Syndrome. Journal of Intellectual Disability Research, 42, 490-499. https://doi.org/10.1046/j.1365-2788.1998.4260490.x

24. Uljarevic, M., & Hamilton, A. (2012). Recognition of Emotions in Autism: A Formal Meta-Analysis. Journal of Autism and Developmental Disorders, 43, 1517-1526. https://doi.org/10.1007/s10803-012-1695-5

25. Weigelt, S., Koldewyn, K., & Kanwisher, N. (2012). Face Identity Recognition in Autism Spectrum Disorders: A Review of Behavioral Studies. Neuroscience and Biobehavioral Reviews, 36, 1060-1084.

26. Williams, R. K., Wishart, J. G., Pitcairn, T. K., & Willis, D. S. (2005). Emotion Recognition by Children with Down Syndrome: Investigation of Specific Impairments and Error Patterns. American Journal on Mental Retardation, 110, 378-392. https://doi.org/10.1352/0895-8017(2005)110[378:ERBCWD]2.0.CO;2

27. Wishart, J. G., Cebula, K. R., Willis, D. S., & Pitcairn, T. K. (2007). Understanding of Facial Expressions of Emotion by Children with Intellectual Disabilities of Differing Aetiology. Journal of Intellectual Disability Research, 51, 551-563. https://doi.org/10.1111/j.1365-2788.2006.00947.x

28. Wishart, J., & Pitcairn, T. (2000). Recognition of Identity and Expression in Faces by Children with Down Syndrome. American Journal on Mental Retardation, 105, 466-479. https://doi.org/10.1352/0895-8017(2000)105<0466:ROIAEI>2.0.CO;2