Journal of Signal and Information Processing

Vol.4 No.3(2013), Article ID:35871,9 pages DOI:10.4236/jsip.2013.43041

Depth Based View Synthesis Using Graph Cuts for 3DTV


Graduate School of Engineering, Hiroshima University, Higashihiroshima, Japan.

Email: d103714@hiroshima-u.ac.jp, hrd@hiroshima-u.ac.jp

Copyright © 2013 Anh Tu Tran, Koichi Harada. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Received April 12th, 2013; revised May 12th, 2013; accepted June 12th, 2013

Keywords: View Synthesis; Depth Image Based Rendering (DIBR); Free-Viewpoint TV; Graph Cuts

ABSTRACT

In three-dimensional television (3DTV), interactive free-viewpoint selection has received increasing attention. This paper presents a novel method that synthesizes a free-viewpoint image from multiple texture images and depth maps in a multi-view camera configuration. The method solves the problem of cracks and holes caused by the sampling rate by performing inverse warping to retrieve the texture images, which allows a simple and accurate resampling of synthetic pixels. To enforce spatial color consistency and to remove pixels warped incorrectly because of inaccurate depth maps, we propose several processing steps. The warped depth and texture images are used to classify pixels as stable, unstable or disoccluded. The stable pixels are used to create an initial new view by weighted interpolation. To refine the new view, graph cuts are used to select the best candidate for each unstable pixel. Finally, the remaining disoccluded regions are filled by our inpainting method, which is based on depth information and the texture values of neighboring pixels. Experiments on several multi-view data sets give encouraging subjective and objective results. Furthermore, our method can flexibly use more than two views of a multi-view system to create a new view of higher quality.

1. Introduction

Recently, 3DTV applications and systems have been growing rapidly. With the increasing capability of capture devices, multi-view capture systems with dense or sparse camera arrays can be built with ease, and free-viewpoint television (FTV) [1] has attracted increasing attention. In an FTV system, users can freely select the viewpoint from which they watch a dynamic real-world scene. The viewpoint can be chosen not only among the available camera views but also anywhere between these cameras. Such a system requires a view synthesis algorithm that can render arbitrary free viewpoints. To render a high-quality image at an arbitrary viewpoint, three main challenges have to be managed, as pointed out in [2]. First, empty pixels and holes caused by the sampling of the reference image have to be closed. Second, pixels at the borders of high depth discontinuities cause contour artifacts. Third, disocclusions that remain after blending the projected images (regions that are invisible from all surrounding cameras) have to be inpainted. In [3] it is shown that rendering quality can be improved by using the geometry of the scene. When depth information is used, a well-known rendering technique is Depth Image Based Rendering (DIBR), which involves 3D projection or 2D warping from one viewpoint into another.

Here we briefly review recent work on free-viewpoint DIBR algorithms. In [2], the authors developed a free-viewpoint rendering algorithm based on a layered representation. For texture mapping, 3D meshes are created, and rendering is implemented on a Graphics Processing Unit (GPU). Although the results look good, the method is complex and requires a considerable amount of pre- and post-processing. This work is extended in [4], where the depth map is decomposed into three layers that are warped separately and then merged. To deal with artifacts, three post-processing algorithms are introduced. In [5], a new viewpoint is rendered in several steps. First, the depth maps of the reference cameras are warped to the new viewpoint. Then, the empty pixels are filled with a median filter, and the depth maps are processed with a bilateral filter. Next, the textures are retrieved by performing inverse warping from the projected depth maps back to the reference cameras. Ghost contours are removed by dilating the disocclusions. Finally, the texture images are blended, and the remaining disocclusions are inpainted using the method proposed by Telea [6]. Although the results look good, this method still has some issues: the median filter does not remove all holes, and some pixels in disoccluded regions are assigned nonzero values. This work is improved in [7] by three enhancement techniques. First, resampling artifacts are filled by a combination of median filtering and inverse warping. Second, contour artifacts are handled by omitting the warping of edges at high discontinuities. Third, disoccluded regions are inpainted using depth information. The quality of this method is higher than that of [5], but it still has disadvantages. For example, the labels of pixels at high discontinuities have to be defined, and color consistency is not verified during blending, so jagged edges can appear along straight lines after blending. The work in [8] combines depth-based hole filling and inpainting to restore disoccluded pixels more accurately than inpainting without depth information. However, this method produces notable blur and can be computationally inefficient when the disoccluded regions in the new view are large.

In this paper, we introduce a new free-viewpoint rendering algorithm that uses multiple color and depth images. First, the depth maps for the virtual view are created by warping the depth maps of the reference cameras. We process the warped depth maps with a median filter; because depth maps consist of smooth regions with sharp edges, median filtering does not degrade their quality. Then, the textures are retrieved by performing inverse warping from the warped depth maps to the reference cameras, which allows a simple and accurate resampling of synthetic pixels. After that, all warped depth and texture images are used to classify pixels into stable, unstable and disoccluded regions. An initial virtual view is created by weighted interpolation of the stable pixels. To refine the synthetic view, the best candidates for unstable pixels are selected by graph cuts. By defining the pixel types and using graph cuts, the colors remain consistent and pixels warped incorrectly because of inaccurate depth maps are removed from the refined view. The remaining disoccluded pixels are inpainted by using the depth and texture values of neighboring pixels. Because depth information is taken into account during inpainting, blurring between foreground and background textures is reduced.

The rest of this paper is organized as follows: Section 2 presents the proposed view synthesis algorithm. Section 3 shows experimental results; and, finally, Section 4 concludes this paper.

2. Proposed Synthesis Method

The proposed method, shown in Figure 1, consists of six steps, which are explained below.

2.1. 3D Warping the Depth Maps

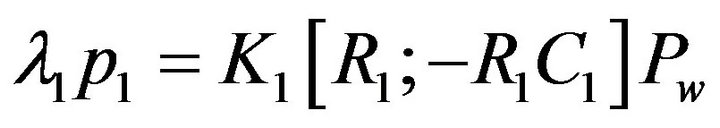

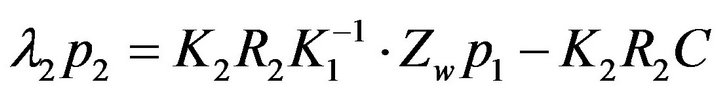

3D warping enables to synthesize a new view from the reference view as following.

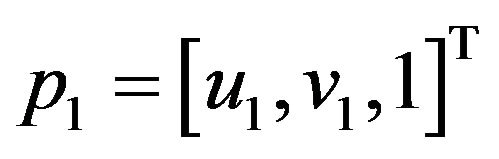

Let  be the world point;

be the world point;

and

and  be its projection onto reference and synthetic image planes, respectively.

be its projection onto reference and synthetic image planes, respectively.

,

,  and

and  are related by the camera perspective projection (1) and (2).

are related by the camera perspective projection (1) and (2).

(1)

(1)

(2)

(2)

where,  is a

is a  upper triangular matrix representing the inner structure of the camera

upper triangular matrix representing the inner structure of the camera  and is called the intrinsic matrix. The

and is called the intrinsic matrix. The  orthogonal matrix

orthogonal matrix  represents the orientation and

represents the orientation and  represents the position. The matrix

represents the position. The matrix  is called the extrinsic matrix and it indicates the relationship between world coordinates and the camera coordinates.

is called the extrinsic matrix and it indicates the relationship between world coordinates and the camera coordinates.

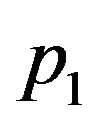

Rearranging (1) we can derive 3D coordinate of the scene point :

:

(3)

(3)

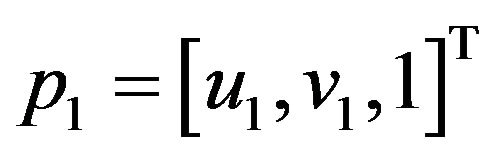

Substituting (3) into (2) we obtain the synthetic pixel position :

:

(4)

(4)

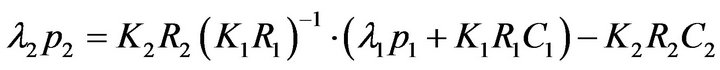

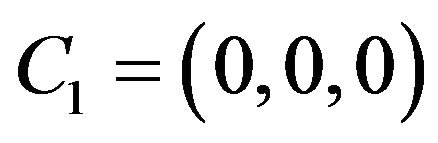

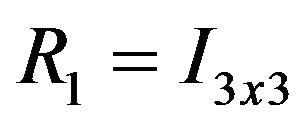

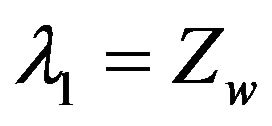

Assuming that the world coordinate system is the same as the reference camera coordinate system and looks at along , i.e.,

, i.e.,  ,

,  and

and , Equation (4) can rewrite as following:

, Equation (4) can rewrite as following:

(5)

(5)

where,  is defined by the pixel value at coordinate point

is defined by the pixel value at coordinate point  in the reference image.

in the reference image.

Applying (5) for a point  from the reference image we can calculate a point

from the reference image we can calculate a point  on the synthesis image. The problem that several points can be projected to the same point in virtual image is solved by using simple

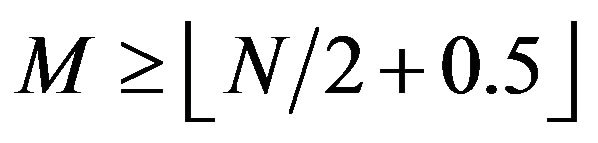

on the synthesis image. The problem that several points can be projected to the same point in virtual image is solved by using simple  technique. Another issue of this process is that a pixel

technique. Another issue of this process is that a pixel  of reference view is not usually projected on to a point

of reference view is not usually projected on to a point  at integer pixel position. To obtain an integer pixel position, we map the sub-pixel

at integer pixel position. To obtain an integer pixel position, we map the sub-pixel  to the nearest integer pixel

to the nearest integer pixel  as follows equation:

as follows equation:

(6)

(6)

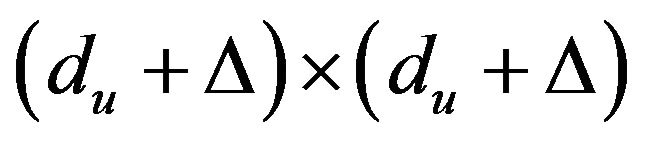

In our method, only depth maps of reference cameras are projected to virtual image plane. The warping is specified by:

Figure 1. Proposed new view synthesis algorithm.

(7)

(7)

where,  is depth map of a reference camera,

is depth map of a reference camera,

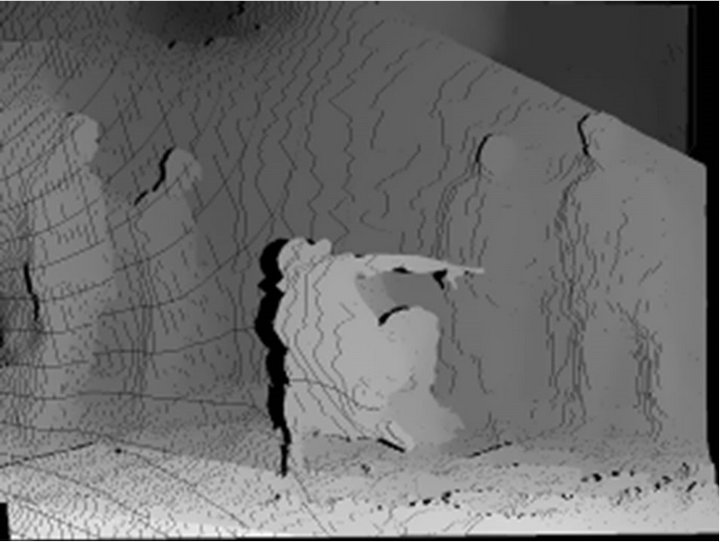

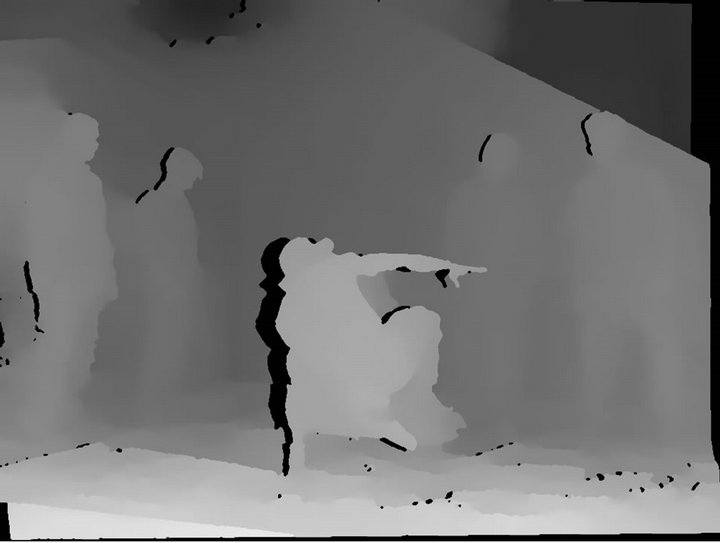

is warping operation as above describing. The projected depth maps from two reference cameras for an arbitrary scene are shown in Figure 2.

is warping operation as above describing. The projected depth maps from two reference cameras for an arbitrary scene are shown in Figure 2.

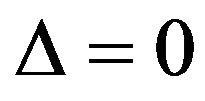
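The forward warping of Equations (5) to (7) can be sketched in Python with NumPy as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the world frame coincides with the reference camera (as in (5)), the depth map stores metric depth $Z$ with 0 marking empty pixels (8-bit depth maps would first have to be converted to $Z$), and $u$ is the column index while $v$ is the row index. The function name `forward_warp_depth` is hypothetical.

```python
import numpy as np


def forward_warp_depth(depth_ref, A_ref, A_virt, R_virt, t_virt):
    """Warp a reference depth map into the virtual view (hypothetical helper).

    Uses Eq. (5) with R = I, t = 0 for the reference camera, nearest-integer
    rounding as in Eq. (6), and a Z-buffer for pixels that collide.
    depth_ref holds metric Z > 0; 0 marks an empty pixel (assumption).
    """
    h, w = depth_ref.shape
    warped = np.zeros((h, w))                      # 0 = no sample (crack or hole)
    v, u = np.nonzero(depth_ref > 0)               # valid reference pixels
    Z = depth_ref[v, u].astype(float)
    m = np.stack([u, v, np.ones_like(u)]).astype(float)   # 3 x K homogeneous pixels
    # Eq. (5): lambda' m' = Z A' R' A^{-1} m + A' t'
    p = Z * (A_virt @ R_virt @ np.linalg.inv(A_ref) @ m) + (A_virt @ t_virt).reshape(3, 1)
    lam = p[2]
    front = lam > 0                                # keep points in front of the virtual camera
    u2 = np.floor(p[0, front] / lam[front] + 0.5).astype(int)   # Eq. (6)
    v2 = np.floor(p[1, front] / lam[front] + 0.5).astype(int)
    lam = lam[front]
    inside = (u2 >= 0) & (u2 < w) & (v2 >= 0) & (v2 < h)
    for x, y, z in zip(u2[inside], v2[inside], lam[inside]):
        # Z-buffer: keep the sample closest to the virtual camera
        if warped[y, x] == 0 or z < warped[y, x]:
            warped[y, x] = z
    return warped
```

For example, with identical intrinsics (focal length 100), `R_virt = np.eye(3)` and `t_virt = np.array([0.02, 0.0, 0.0])`, a pixel at depth $Z = 2$ moves by $100 \cdot 0.02 / 2 = 1$ pixel to the right. The explicit loop keeps the Z-buffer logic readable; a vectorized version would sort samples by depth first.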

2.2. Median Filter the Warped Depth Map

In this step, we consider the blank points that appeared in projected depth map. The reasons for the appearance of these blank points are round off errors of the image coordinate by (6) and depth discontinuities. It can cause one pixel wide blank region to appear. This blank region can be filled by median filter with a window of  pixels. Depth maps consist of smooth regions with sharp edges, so filtering with a median will not degrade the quality.

pixels. Depth maps consist of smooth regions with sharp edges, so filtering with a median will not degrade the quality.

This step can describe as:

(8)

(8)

where,  is a median filter with a window

is a median filter with a window  pixels,

pixels,  is output of median filter. The image in

is output of median filter. The image in

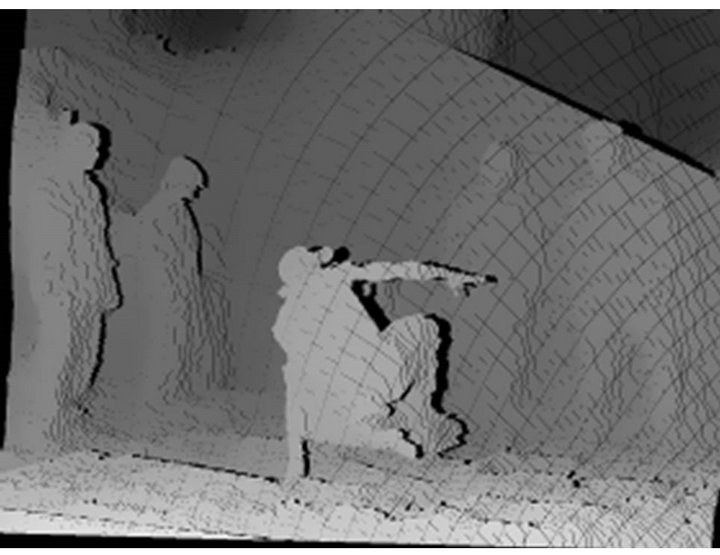

Figure 2 can be processed by using median filter to obtained images in Figure 3.
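The median filtering of Equation (8) can be illustrated with a small NumPy sketch. The default window of 3 × 3 pixels is our assumption (the smallest window that closes one-pixel-wide cracks), edge padding at the image border is also assumed, and `median_filter_depth` is a hypothetical helper; in practice a library routine such as `scipy.ndimage.median_filter(depth, size=3)` performs the same operation.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def median_filter_depth(warped, size=3):
    """Median-filter a warped depth map with a size x size window (Eq. 8 sketch)."""
    r = size // 2
    padded = np.pad(warped, r, mode="edge")               # replicate border values (assumption)
    windows = sliding_window_view(padded, (size, size))   # H x W x size x size views
    return np.median(windows, axis=(-2, -1))
```

A one-pixel-wide crack inside a smooth region is closed, because at most one third of the samples in each 3 × 3 window fall on the crack.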

2.3. Retrieve Texture Image by Inverse Warping

In this step, the textures are retrieved by performing inverse warping from the filtered projected depth maps back to the reference cameras.

Figure 2. The projected depth maps from two reference cameras (from the left side and from the right side).

For each pixel $m' = (u', v', 1)^{T}$ of the filtered projected depth image, a 3D world point $M$ is calculated based on (3), applied with the parameters of the virtual camera; the depth is given by the value at coordinate $(u', v')$ in the filtered projected depth image. The calculated 3D point $M$ is then projected onto the respective reference texture image by employing (5) in the reverse direction (from the virtual view to the reference view), so that the color of the synthetic destination pixel $m'$ is interpolated from the pixels surrounding $m$ in the reference color image. Figure 4 illustrates the image rendering process using inverse warping.

This step can be specified by

$$I_i = \mathcal{W}_i^{-1}\left( D_i, T_i \right) \tag{9}$$

where $T_i$ is the texture image of reference camera $i$, $D_i$ is the filtered warped depth map, and $I_i$ is the texture retrieved in the virtual view.

The advantage of an inverse warping operation is that all pixels of the destination image are correctly defined and the color disoccluded pixels can be inferred by back

Figure 3. Median filter depth maps.

Figure 4. Image synthesis process using inverse warping.

projected  point

point  onto multiple source image planes, covering all regions of video scene.

onto multiple source image planes, covering all regions of video scene.

Figure 5 shows the retrieved color images by inverse warping using depth maps in Figure 3.

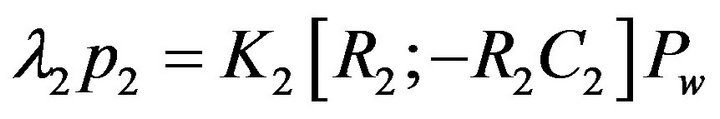

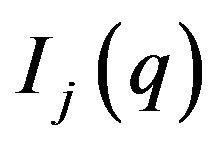

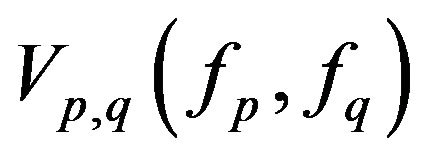

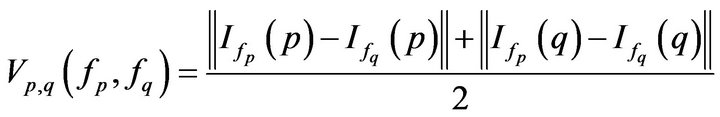
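Inverse warping can be sketched as follows, again as a minimal illustration rather than the authors' code. Assumptions: the world frame coincides with the reference camera frame, the filtered warped depth stores the depth of each pixel in the virtual camera frame (0 = empty), and "interpolated from the surrounding pixels" is realized as bilinear interpolation. The helper names `bilinear_sample` and `inverse_warp_texture` are hypothetical.

```python
import numpy as np


def bilinear_sample(img, u, v):
    """Bilinearly interpolate img (H x W x C) at float coordinates u (column), v (row)."""
    h, w = img.shape[:2]
    u0 = np.clip(np.floor(u).astype(int), 0, w - 2)
    v0 = np.clip(np.floor(v).astype(int), 0, h - 2)
    a = (u - u0)[:, None]
    b = (v - v0)[:, None]
    return ((1 - a) * (1 - b) * img[v0, u0] + a * (1 - b) * img[v0, u0 + 1]
            + (1 - a) * b * img[v0 + 1, u0] + a * b * img[v0 + 1, u0 + 1])


def inverse_warp_texture(depth_virt, tex_ref, A_ref, A_virt, R_virt, t_virt, eps=1e-6):
    """Retrieve the virtual-view texture from one reference camera (Eq. 9 sketch)."""
    h, w = depth_virt.shape
    out = np.zeros((h, w, tex_ref.shape[2]))
    v, u = np.nonzero(depth_virt > 0)
    Z = depth_virt[v, u].astype(float)
    m = np.stack([u, v, np.ones_like(u)]).astype(float)
    # Eq. (3) with the virtual camera: M = R'^T (Z A'^{-1} m' - t')
    M = R_virt.T @ (Z * (np.linalg.inv(A_virt) @ m) - t_virt.reshape(3, 1))
    p = A_ref @ M                                  # project into the reference camera
    ok = p[2] > 0
    ur, vr = p[0, ok] / p[2, ok], p[1, ok] / p[2, ok]
    hr, wr = tex_ref.shape[:2]
    inside = (ur > -eps) & (ur < wr - 1 + eps) & (vr > -eps) & (vr < hr - 1 + eps)
    ur = np.clip(ur[inside], 0, wr - 1)
    vr = np.clip(vr[inside], 0, hr - 1)
    out[v[ok][inside], u[ok][inside]] = bilinear_sample(tex_ref.astype(float), ur, vr)
    return out
```

Pixels with no depth sample, or whose back-projection falls outside the reference image, stay at 0; they are handled by the classification and inpainting steps.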

2.4. Pixel Classification and Initial New View Creation

Formally, suppose that we have a set of  texture images

texture images  and

and depth images

depth images

. Let

. Let  and

and  be the color and depth value at position of

be the color and depth value at position of  image.

image.

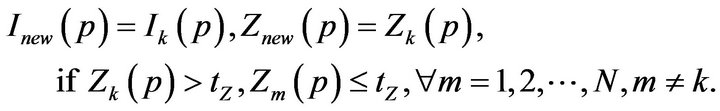

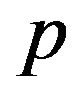

In this step, we determine the type of each pixel in the synthetic view. We go through each pixel $p$ of all $N$ input images and classify it as a stable, unstable or disoccluded pixel. To determine the pixel type, we set a depth threshold $T_d$ and a color threshold $T_c$ and examine the color and depth values of pixel $p$. The color threshold $T_c$ is applied to each color channel and is set to 15 in our case. The depth threshold is a brightness level in the depth map; in our experiments, $T_d$ is set to 5 for 8-bit depth quantization.

A pixel is classified as follows.

If the depth value of pixel $p$ is less than the depth threshold $T_d$ in all $N$ input depth images, we classify $p$ as a disoccluded pixel. Its color and depth values in the synthetic view are temporarily set to zero:

$$I_v(p) = 0, \quad D_v(p) = 0, \qquad \text{if } D_i(p) < T_d \ \text{for all } i \in \{1, \dots, N\} \tag{10}$$

If the depth value of pixel $p$ is higher than $T_d$ in exactly one input image $k$ and less than $T_d$ in all the remaining $N - 1$ images, we classify $p$ as a stable pixel. In this case, $p$ is visible in only one view, and its values in the synthetic view are simply copied from that view:

$$I_v(p) = I_k(p), \quad D_v(p) = D_k(p) \tag{11}$$

Figure 5. Color images obtained by inverse warping.

If the depth value of pixel $p$ is higher than the depth threshold $T_d$ in more than one view, we examine both the color and depth values of $p$ to determine its type.

First, for each view $i$, $i \in \{1, \dots, N\}$, we examine pixel $p$. If $D_i(p) > T_d$, we check the other views $j$, $j \in \{1, \dots, N\}$, $j \neq i$. If view $j$ has both $D_j(p) > T_d$ and a color similar to that of view $i$ at $p$, then $I_i$ and $I_j$ are said to have consistent color at $p$. The color similarity at pixel $p$ of two input images $I_i$ and $I_j$ is defined based on the absolute color differences between $I_i(p)$ and $I_j(p)$ in the R, G and B channels:

$$\left| I_i^{c}(p) - I_j^{c}(p) \right| < T_c, \qquad c \in \{R, G, B\}$$

We count the total number of views $j$, $j \neq i$, that have consistent color with view $i$ at pixel $p$ and denote this number by $n_i$.

Second, we find the largest of these counts, $n_{\max} = \max_i n_i$.

If the agreement measured by $n_{\max}$ is sufficient, we classify $p$ as a stable pixel. Otherwise, $p$ is classified as an unstable pixel. The value of an unstable pixel can be set to −1 so that it can be easily identified.
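The classification rules above can be sketched in Python as follows. The thresholds $T_d = 5$ and $T_c = 15$ come from the text; the stability test `best_n >= min_agree` with `min_agree = 1` (that is, at least two visible views agree in color) is our assumption, as are the function name `classify_pixels` and the integer codes for the three pixel types. For each stable pixel, the function also returns the set of consistent views to be blended.

```python
import numpy as np

DISOCCLUDED, STABLE, UNSTABLE = 0, 1, 2   # hypothetical integer codes


def classify_pixels(I, D, T_d=5, T_c=15, min_agree=1):
    """Classify virtual-view pixels as disoccluded, stable or unstable.

    I: N x H x W x 3 warped textures; D: N x H x W warped 8-bit depth maps.
    min_agree is an assumed stand-in for the stability condition on n_max.
    Returns the label map and an N x H x W mask of the views to blend.
    """
    N, H, W = D.shape
    labels = np.full((H, W), UNSTABLE)
    blend = np.zeros((N, H, W), dtype=bool)
    I = I.astype(int)                          # avoid uint8 wrap-around in differences
    for y in range(H):
        for x in range(W):
            vis = D[:, y, x] > T_d
            if not vis.any():                  # Eq. (10): seen by no view
                labels[y, x] = DISOCCLUDED
            elif vis.sum() == 1:               # Eq. (11): seen by exactly one view
                labels[y, x] = STABLE
                blend[:, y, x] = vis
            else:
                best_i, best_n, best_set = -1, -1, None
                for i in np.flatnonzero(vis):
                    diff = np.abs(I[:, y, x] - I[i, y, x])        # N x 3
                    consistent = vis & np.all(diff < T_c, axis=1)
                    consistent[i] = False                        # count only j != i
                    n_i = int(consistent.sum())
                    if n_i > best_n:
                        best_i, best_n, best_set = i, n_i, consistent
                if best_n >= min_agree:
                    labels[y, x] = STABLE
                    blend[:, y, x] = best_set
                    blend[best_i, y, x] = True
    return labels, blend
```

The per-pixel loops keep the rules close to the text; for full-resolution frames, a vectorized or compiled version would be needed.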

The color and depth values of stable pixel  at synthetic view are rendered by blending

at synthetic view are rendered by blending  pixels as following weighted interpolation:

pixels as following weighted interpolation:

(12)

(12)

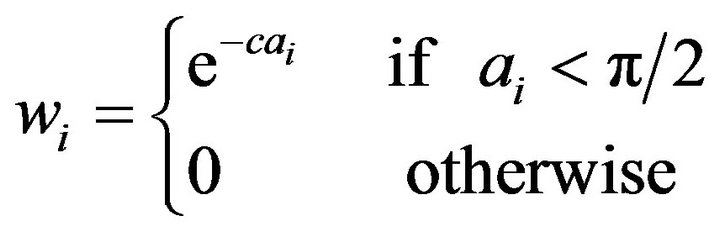

where,  is the weight factor assigned to view

is the weight factor assigned to view ,

,  and

and  are color value and depth value of pixel

are color value and depth value of pixel  at view

at view . The weight assigned to each view should reflect its proximity with the view being synthesized. The views that are closer to the synthetic view should have a bigger weight. In general, case, the weight

. The weight assigned to each view should reflect its proximity with the view being synthesized. The views that are closer to the synthetic view should have a bigger weight. In general, case, the weight

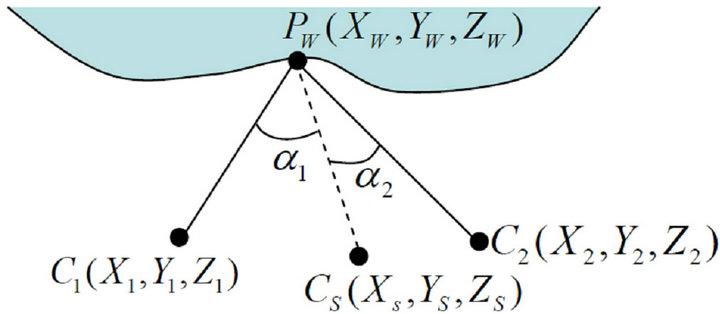

can be set based on baseline spacing. However, for more precise weighting, we use the angle distance determined by the point in

can be set based on baseline spacing. However, for more precise weighting, we use the angle distance determined by the point in  and camera positions as shown in Figure 6. The weight factor

and camera positions as shown in Figure 6. The weight factor  is calculated by

is calculated by

(13)

(13)

where,  is view index,

is view index,  is the angular distance of view

is the angular distance of view  and

and  is weight for the view at that pixel. The constant

is weight for the view at that pixel. The constant  controls the fall off as the angular distance increases. Input views for which

controls the fall off as the angular distance increases. Input views for which  are eli-

are eli-

Figure 6. Weighted interpolation based on angular distances.

minated as they view the scene the other side. In practice,  has been found to work well.

has been found to work well.

The initial new view is specified by

$$\left( I_v^{0}, D_v^{0} \right) = \mathcal{C}\left( I_1, \dots, I_N, D_1, \dots, D_N \right) \tag{14}$$

where $\mathcal{C}$ is the pixel classification and initial new view creation procedure described above.
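The angular weighting and the blend of Equation (12) can be sketched as follows. The angular distance $\theta_i$ is computed as the angle at the 3D point between the rays toward camera $i$ and toward the virtual camera, as in Figure 6. The exponential fall-off `exp(-theta / sigma)`, the value of `sigma`, and the 90° cut-off `max_angle` are assumptions standing in for Equation (13), and the function names are hypothetical.

```python
import numpy as np


def angular_distance(M, C_i, C_v):
    """Angle (radians) at 3D point M between the rays to camera centers C_i and C_v."""
    a, b = np.asarray(C_i, float) - M, np.asarray(C_v, float) - M
    cos = a @ b / (np.linalg.norm(a) * np.linalg.norm(b))
    return float(np.arccos(np.clip(cos, -1.0, 1.0)))


def blend_stable_pixel(colors, depths, thetas, sigma=0.1, max_angle=np.pi / 2):
    """Eq. (12): normalized weighted average over the consistent views of one pixel."""
    thetas = np.asarray(thetas, dtype=float)
    w = np.exp(-thetas / sigma)        # assumed fall-off, stand-in for Eq. (13)
    w[thetas > max_angle] = 0.0        # drop views that see the other side (assumed cut-off)
    if w.sum() == 0:
        raise ValueError("no usable view for this pixel")
    w = w / w.sum()
    return w @ np.asarray(colors, dtype=float), float(w @ np.asarray(depths, dtype=float))
```

Any weight that decreases monotonically with $\theta_i$ gives closer views more influence, which is the property the text requires.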

2.5. Find the Best Candidate for Unstable Pixel by Graph Cuts

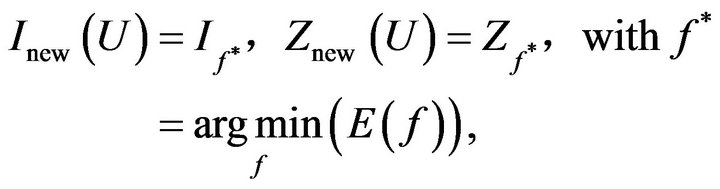

In this step, we focus on refining initial synthetic view with unstable pixels. Unstable pixels have multiple pixel candidates and we want to predict the best candidate that minimizes the energy function described in following part.

We denote  as labeling space with

as labeling space with

, representing the image index and let

, representing the image index and let  be the set of unstable pixels. Let

be the set of unstable pixels. Let  be the label of unstable pixel

be the label of unstable pixel  and

and . A labeling

. A labeling  is to assign a particular label

is to assign a particular label  to a pixel

to a pixel . With this definition, our problem is to find the labeling

. With this definition, our problem is to find the labeling  to fill the unstable region, such that the labeling

to fill the unstable region, such that the labeling  has minimum cost.

has minimum cost.

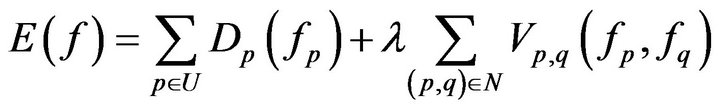

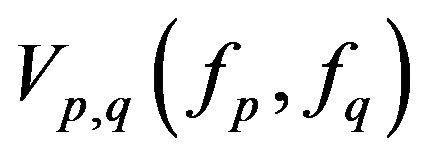

We define our energy function based on the Markov Random Field (MRF) formulation:

$$E(f) = \sum_{p \in \mathcal{P}} U_p(f_p) + \gamma \sum_{(p, q) \in \mathcal{N}} V_{p,q}(f_p, f_q) \tag{15}$$

where $f$ is the labeling field, $\mathcal{P}$ is the set of unstable pixels, and $\mathcal{N}$ is the pixel neighborhood system. $U_p(f_p)$ is called the data term; it defines the cost of assigning label $f_p$ to pixel $p$. $V_{p,q}(f_p, f_q)$ denotes the smoothness term, which evaluates the cost of disagreement between neighboring pixels $p$ and $q$ that are assigned labels $f_p$ and $f_q$, respectively. $\gamma$ is a parameter that weighs the importance of these two terms.

Data term: $U_p(f_p)$ is defined by

(16)

where $\mathcal{N}_p$ is the set of neighboring pixels of $p$, $D_{f_p}(p)$ is the depth value of pixel $p$ in candidate image $f_p$, and $I_v(q)$ and $o_q$ (0 or 1) are the color value and the disocclusion indicator of the neighboring pixel $q$ in the initial synthetic view, respectively. The term contains two weight factors, and $I_i(p)$ denotes the color value of pixel $p$ in input image $i$. Color differences are measured as the sum of absolute differences over the R, G and B channels.

The first part of the data term forces the selected candidate pixel to agree with its neighboring pixels, while disoccluded neighboring pixels do not influence the candidate selection. In addition, a candidate pixel with a smaller depth value $D_{f_p}(p)$ receives a smaller penalty, because the pixel with the smallest depth value is closer to the camera and is therefore more likely to define the color of the synthetic pixel $p$.

The second part of (16) is a stationary cost, defined based on the color similarity at pixel $p$ across all input images: the more input images in which pixel $p$ has a similar color, the smaller the stationary cost.

Smoothness term: $V_{p,q}(f_p, f_q)$ measures the penalty for two neighboring pixels $p$ and $q$ having different labels and is defined as follows:

(17)

where $\|\cdot\|$ denotes the Euclidean distance in RGB color space. The smoothness term gives a higher cost when the candidate images $I_{f_p}$ and $I_{f_q}$ do not match well, so incorporating it makes the synthetic image visually smooth.

We minimize the energy function $E(f)$ with the publicly available graph cuts optimization in [9]. More details about energy minimization with graph cuts can be found in [10,11].

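This step is specified by

$$\left( I_v^{1}, D_v^{1} \right) = \mathcal{G}\left( I_v^{0}, D_v^{0} \right) \tag{18}$$

where $\mathcal{G}$ denotes the graph cuts refinement and $I_v^{0}$, $D_v^{0}$ are the initial synthetic color and depth images. Figure 8 shows the refinement of the image in Figure 7 obtained by using graph cuts to select the best candidate for each unstable pixel.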
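When only two candidate views are available (for example, views 3 and 5 in Section 3), every unstable pixel has a binary label, and a single s-t minimum cut minimizes an energy of the form (15) exactly, provided the pairwise cost is non-negative and charged only when neighboring labels differ. The sketch below illustrates that binary case with a plain Edmonds-Karp max-flow. It is not the library of [9]; the per-pixel costs `unary` and the symmetric pairwise weights `w_pq` are hypothetical inputs standing in for the data term (16) and smoothness term (17).

```python
from collections import deque


def min_cut_binary_labeling(unary, pairs, gamma=1.0):
    """Exactly minimize E(f) = sum_p U_p(f_p) + gamma * sum_(p,q) w_pq [f_p != f_q]
    over labels {0, 1} with an s-t minimum cut (Edmonds-Karp max flow).

    unary: list of (U_p(0), U_p(1)), non-negative; pairs: list of (p, q, w_pq), w_pq >= 0.
    """
    n = len(unary)
    s, t = n, n + 1
    cap = [dict() for _ in range(n + 2)]

    def add_edge(a, b, c):
        cap[a][b] = cap[a].get(b, 0.0) + c
        cap[b].setdefault(a, 0.0)               # residual reverse edge

    for p, (cost0, cost1) in enumerate(unary):
        add_edge(s, p, cost1)   # cut when p lands on the sink side (label 1)
        add_edge(p, t, cost0)   # cut when p stays on the source side (label 0)
    for p, q, w in pairs:
        add_edge(p, q, gamma * w)
        add_edge(q, p, gamma * w)

    while True:                                 # augment along shortest residual paths
        parent = {s: None}
        queue = deque([s])
        while queue and t not in parent:
            a = queue.popleft()
            for b, c in cap[a].items():
                if c > 1e-12 and b not in parent:
                    parent[b] = a
                    queue.append(b)
        if t not in parent:
            break
        path, b = [], t
        while parent[b] is not None:
            path.append((parent[b], b))
            b = parent[b]
        flow = min(cap[a][b] for a, b in path)
        for a, b in path:
            cap[a][b] -= flow
            cap[b][a] += flow
    # Nodes still reachable from s in the residual graph take label 0.
    return [0 if p in parent else 1 for p in range(n)]
```

With more than two candidate views, the same binary min-cut would typically be called repeatedly inside expansion moves, as described in [10,11].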

2.6. Inpainting Disocclusion Pixels Based on the Depth and Color Values of Neighboring Pixels

Up to this step, only the disoccluded regions remain. To handle these disoccluded pixels, many papers, such as [5,8], have developed algorithms based on the inpainting method proposed by Telea [6]. Inpainting is the process of reconstructing lost or corrupted parts of images using the values of neighboring pixels. Although these algorithms work sufficiently well, the inpainted regions contain notable blur because background and foreground colors are mixed at the edges of the disoccluded regions. In this paper, we develop an inpainting technique that uses depth information. We assume that disoccluded pixels belong only to the background, and we use depth information to select background pixels accurately at the edges of disoccluded regions, so that blur is avoided. Our method consists of the following steps.

Figure 7. Initial synthesized view with 3 types of pixels. (The white pixels are unstable pixels, the red pixels are disoccluded pixels, and the remaining pixels are stable pixels.)

Figure 8. Refinement of the initial synthesized image (image in Figure 7) by using graph cuts (the red pixels are disoccluded pixels).

First, to reduce processing time, small disoccluded regions are handled directly. For each disoccluded pixel $p$, we define a small window centered at $p$ and count the visible pixels inside it. If the visible pixels make up more than 50% of the window, the disoccluded pixel is inpainted by a weighted interpolation from the visible pixels, specified by

(19)

where $K$ is the number of visible pixels inside the window, $\Omega$ is the disoccluded region, $d(p, q_k)$ is the distance from the disoccluded pixel $p$ to the visible pixel $q_k$, and $I(q_k)$ and $D(q_k)$ are the color and depth values of the visible pixel $q_k$.
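The first inpainting pass can be sketched as follows. The window half-size `r = 2` and the inverse-distance weights are assumptions (the text only states that the interpolation is weighted by the distance $d(p, q_k)$), and `inpaint_small_holes` is a hypothetical helper. Visibility is always taken from the original hole mask, so pixels filled in this pass do not feed further fills.

```python
import numpy as np


def inpaint_small_holes(color, depth, hole, r=2):
    """Fill disoccluded pixels whose (2r+1) x (2r+1) window is more than 50% visible.

    color: H x W x 3, depth: H x W, hole: boolean H x W disocclusion mask.
    Inverse-distance weighting is an assumed form of Eq. (19).
    Returns the filled color, filled depth, and the mask of pixels left for pass two.
    """
    out_c, out_d = color.astype(float).copy(), depth.astype(float).copy()
    remaining = hole.copy()
    H, W = hole.shape
    for y, x in zip(*np.nonzero(hole)):
        y0, y1 = max(0, y - r), min(H, y + r + 1)
        x0, x1 = max(0, x - r), min(W, x + r + 1)
        vy, vx = np.nonzero(~hole[y0:y1, x0:x1])          # visible pixels in the window
        if len(vy) <= 0.5 * (y1 - y0) * (x1 - x0):
            continue                                        # too large: left for pass two
        vy, vx = vy + y0, vx + x0
        wts = 1.0 / np.hypot(vy - y, vx - x)                # visible pixels are never at distance 0
        wts /= wts.sum()
        out_c[y, x] = wts @ color[vy, vx].astype(float)
        out_d[y, x] = wts @ depth[vy, vx].astype(float)
        remaining[y, x] = False
    return out_c, out_d, remaining
```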

Second, for each pixel $p$ in the remaining disoccluded regions, we search in eight directions to find the pixel $q$ at the edge of the disoccluded region that has the smallest depth value $D(q)$, together with the distance $d$ from this point to $p$. We then define a square window whose size is controlled by a parameter, starting from an initial value, and we count the visible pixels in it whose depth values are consistent with $D(q)$. If fewer than 50% of the pixels in the window are such visible pixels, we enlarge the window by increasing the parameter. Finally, the disoccluded pixels are inpainted by a weighted interpolation from the visible pixels according to (19).
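The eight-direction search can be sketched as follows: from the disoccluded pixel, the sketch walks along each of the eight directions until it leaves the hole, and, following the text, keeps the edge pixel with the smallest depth value together with its distance. The function name `background_edge_pixel` is hypothetical, and pixels are indexed as (row, column).

```python
import numpy as np

DIRECTIONS = [(-1, -1), (-1, 0), (-1, 1), (0, -1), (0, 1), (1, -1), (1, 0), (1, 1)]


def background_edge_pixel(depth, hole, y, x):
    """Search eight directions from disoccluded pixel (y, x) for the first visible pixel.

    Returns ((row, col), distance) of the edge pixel with the smallest depth value,
    or None if no direction reaches a visible pixel inside the image.
    """
    H, W = hole.shape
    best = None
    for dy, dx in DIRECTIONS:
        cy, cx = y + dy, x + dx
        while 0 <= cy < H and 0 <= cx < W and hole[cy, cx]:
            cy, cx = cy + dy, cx + dx
        if 0 <= cy < H and 0 <= cx < W:            # reached a visible edge pixel
            dist = np.hypot(cy - y, cx - x)
            if best is None or depth[cy, cx] < depth[best[0]]:
                best = ((cy, cx), dist)
    return best
```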

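With the inpainting procedure described above, this step can be summarized by

$$\left( I_v, D_v \right) = \mathcal{H}\left( I_v^{1}, D_v^{1} \right) \tag{20}$$

where $I_v^{1}$ and $D_v^{1}$ are the color and depth images refined by graph cuts, $\mathcal{H}$ denotes the depth-based inpainting, and $I_v$ is the final synthetic view.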

3. Experimental Results

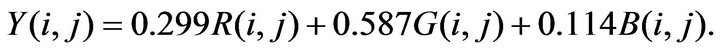

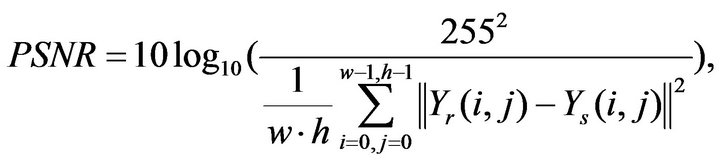

We quantify the proposal method performance based on Peak Signal Noise Ratio ( ) and the structural similarity (SSIM) index between a reference image

) and the structural similarity (SSIM) index between a reference image  and a synthetic image

and a synthetic image . SSIM index is a method for measuring the similarity between two images [12]. The SSIM index value 1 is only reachable when two images are identical and the higher PSNR normally indicates that it is higher quality synthetic image. Before computing

. SSIM index is a method for measuring the similarity between two images [12]. The SSIM index value 1 is only reachable when two images are identical and the higher PSNR normally indicates that it is higher quality synthetic image. Before computing , the images are converted from RGB color space to YUV color space, and Y channel is used for calculation. Y channel is defined by

, the images are converted from RGB color space to YUV color space, and Y channel is used for calculation. Y channel is defined by

(21)

(21)

The  can be calculated by

can be calculated by

(22)

(22)

where,  and

and  are the image width and height.

are the image width and height.  and

and  are the channels of reference image and synthetic image, respectively.

are the channels of reference image and synthetic image, respectively.
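The evaluation metric can be sketched as a short NumPy function. It assumes 8-bit RGB inputs (peak value 255) and the BT.601 luma weights of Equation (21); `psnr_y` is a hypothetical name, and identical images are reported as infinite PSNR.

```python
import numpy as np


def psnr_y(ref_rgb, syn_rgb):
    """PSNR in dB on the Y channel of two 8-bit RGB images (Eqs. 21 and 22)."""
    coeffs = np.array([0.299, 0.587, 0.114])        # Y = 0.299 R + 0.587 G + 0.114 B
    y_ref = ref_rgb.astype(float) @ coeffs
    y_syn = syn_rgb.astype(float) @ coeffs
    mse = np.mean((y_ref - y_syn) ** 2)
    return float("inf") if mse == 0 else float(10.0 * np.log10(255.0 ** 2 / mse))
```

For instance, a uniform error of 10 grey levels in every channel gives an MSE of about 100 and a PSNR of about 28.1 dB.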

The proposed view synthesis method has been tested on the “Break-dancer” and “Ballet” sequences, which were generated and distributed by the Interactive Visual Media Group at Microsoft Research [13]. Each data set includes a sequence of 100 images of $1024 \times 768$ pixels captured from 8 cameras, together with the calibration parameters. Figure 9 shows the camera arrangement of these two sequences. Depth maps for each view are also provided; for details of how these depth maps were generated, please refer to [2].

In our experiments, the synthetic view is placed at the position of an actual camera: views 3 and 5, together with their depth maps, are used to synthesize view 4. Figure 10 shows an example of the view synthesis results. On average, the proposed method achieves over 34 dB in PSNR and an SSIM index of 0.93 on the two sequences “Break-dancer” and “Ballet”.

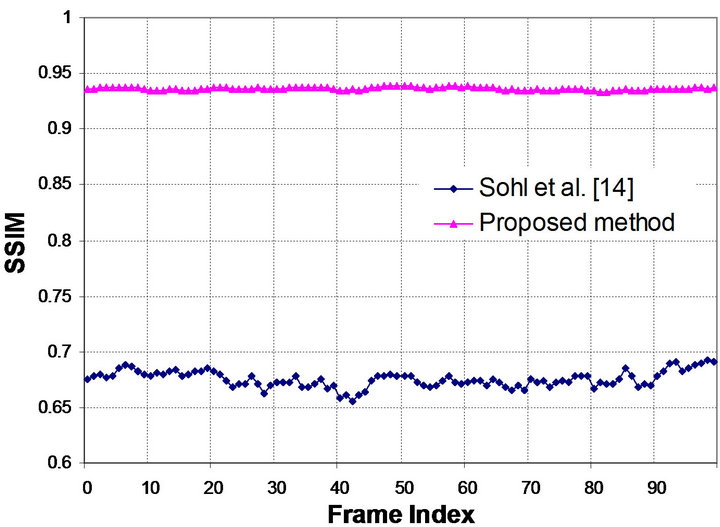

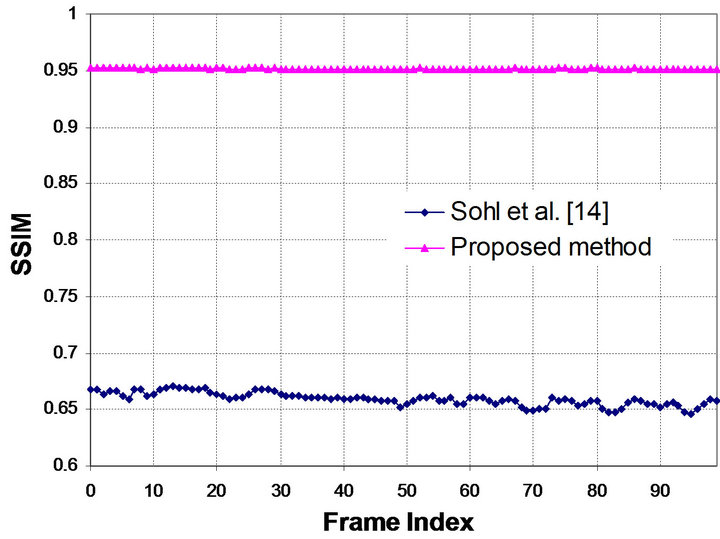

Figure 11 shows our PSNR and SSIM comparison with those of Sohl et al. [14] over 100 frames for the “Break-dancer” and “Ballet” sequences.

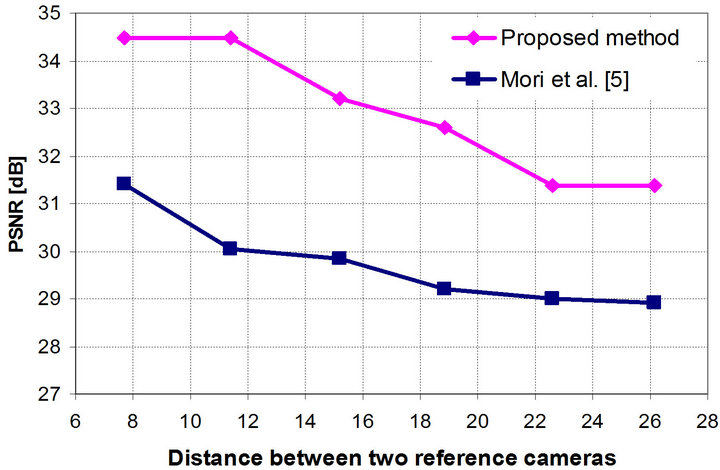

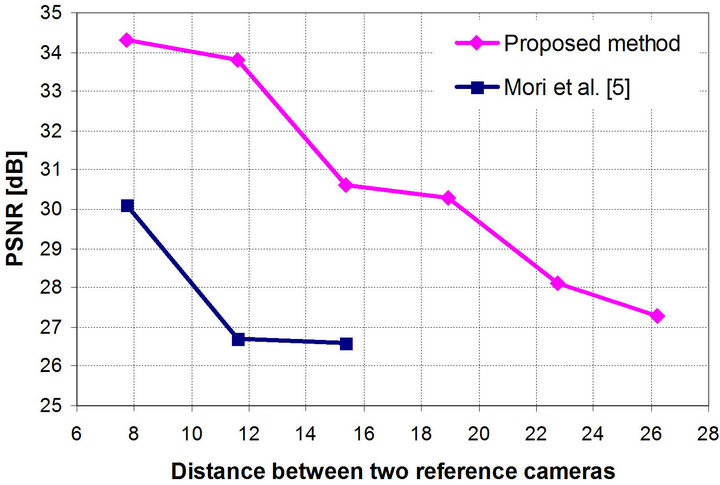

Because the number of cameras is usually limited, the camera arrangement is very important for obtaining a synthesized view of good quality. Figure 12 shows the synthesis quality of our method, compared with the method of Mori et al. [5], as the distance between the two reference cameras varies; our measurements correspond to an average over 100 frames.

Figure 9. A configuration of “Break-dancer” and “Ballet” sequences with 8 cameras [2].

Figure 10. Example of the synthetic view. (a1) Original view image; (b1) Synthesized image (PSNR = 34.7 dB; SSIM = 0.94); (a2) Original view image; (b2) Synthesized image (PSNR = 34.6 dB; SSIM = 0.95).

Figure 11. PSNR and SSIM comparison: (a) PSNR for “Break-dancer”, (b) SSIM for “Break-dancer”, (c) PSNR for “Ballet”, (d) SSIM for “Ballet”.

The measured synthesis qualities are compared with those of other methods and summarized in Table 1. From the results, the average PSNR of our method is higher than that of other methods, such as Mori et al. [5] and Solh et al. [14], with a gain of 3.0 dB. The structural similarity (SSIM) of our method is also higher than that of the method of Solh et al.

Figure 12. PSNR versus distance between cameras for (a) “Break-dancer” sequence, (b) “Ballet” sequence.

Table 1. Experimental results comparison.

Moreover, a multi-view configuration has several cameras that capture the scene from different positions; in our experiments, there are 8 cameras. Thus, instead of using only the two neighboring views, as the conventional methods above do, we can use more than two images to synthesize a new view, and our method supports this directly. Our experiments show that using four reference views (two on each side of the virtual view) yields a PSNR about 0.5 to 1 dB higher, and a higher SSIM, than using two reference views.
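Blending more than two reference views can be sketched as a weighted average that skips holes. This is a minimal illustration, not the authors' weighting scheme: it assumes every reference view has already been warped to the virtual viewpoint and flattened to a list (holes marked `None`), and it weights each view by the inverse of its distance from the virtual camera along the baseline, using the camera index as a hypothetical stand-in for position. The names `blend_views`, `cam_positions` and `virtual_position` are illustrative only.

```python
from typing import List, Optional, Sequence

# One warped reference view, flattened to a list of intensities; None marks a hole
# (no source pixel was warped to that position).
WarpedView = Sequence[Optional[float]]


def blend_views(warped: Sequence[WarpedView],
                cam_positions: Sequence[float],
                virtual_position: float) -> List[Optional[float]]:
    """Inverse-distance weighted blend of N warped views (hypothetical weighting)."""
    if len(warped) != len(cam_positions):
        raise ValueError("need one camera position per warped view")
    weights = []
    for position in cam_positions:
        distance = abs(position - virtual_position)
        if distance == 0:
            raise ValueError("virtual view coincides with a reference camera")
        weights.append(1.0 / distance)  # closer cameras contribute more
    blended: List[Optional[float]] = []
    for p in range(len(warped[0])):
        numerator = 0.0
        denominator = 0.0
        for view, weight in zip(warped, weights):
            if view[p] is not None:  # skip views with a hole at this pixel
                numerator += weight * view[p]
                denominator += weight
        # A pixel that no view can see stays a hole for later inpainting.
        blended.append(numerator / denominator if denominator > 0 else None)
    return blended
```

With views 2, 3, 5 and 6 around a virtual view 4, the two nearest views get weight 1 and the outer two get weight 0.5, so pixel values 100, 110, 130 and 140 blend to 120. A pixel seen by only one view simply takes that view's value, which is one reason extra views can shrink the disoccluded regions.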


4. Conclusions

In this paper, we propose a novel synthesis method that renders a free viewpoint from multiple existing cameras. The proposed method addresses the main problems of depth-based synthesis by classifying pixels, generating an initial new view from stable pixels, and using Graph cuts to select the best candidate for each unstable pixel. Defining pixel types and applying Graph cuts keeps colors consistent and removes pixels that were warped incorrectly because of inaccurate depth maps. The remaining disoccluded pixels are inpainted using depth information and neighboring texture values. Because depth information is considered during inpainting, blurring between foreground and background textures is reduced. Experimental results show that the proposed method is effective at reducing artifacts, and our smoothness term makes the result visually smooth. Objective evaluation shows that our method achieves a significant gain in PSNR and SSIM compared with several existing methods. Another advantage of our method is that it can use a set of unrectified images in a multi-view system to create a new view of higher quality.
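The three pixel types can be illustrated with a per-pixel rule. This section does not give the actual classification criteria, so the sketch below assumes one plausible rule: a pixel is disoccluded when no warped view covers it, stable when at least two warped views agree in both depth and intensity within tolerances, and unstable otherwise. The thresholds `depth_tol` and `color_tol`, and the function `classify_pixel`, are hypothetical.

```python
from enum import Enum
from typing import Optional, Sequence, Tuple


class PixelClass(Enum):
    STABLE = "stable"
    UNSTABLE = "unstable"
    DISOCCLUDED = "disoccluded"


# (warped depth, warped intensity) from one reference view, or None if that view
# leaves a hole at this pixel of the virtual view.
Sample = Optional[Tuple[float, float]]


def classify_pixel(samples: Sequence[Sample],
                   depth_tol: float = 5.0,
                   color_tol: float = 20.0) -> PixelClass:
    """Hypothetical stable/unstable/disoccluded rule for one virtual-view pixel."""
    valid = [s for s in samples if s is not None]
    if not valid:
        return PixelClass.DISOCCLUDED  # no reference view sees this point
    depths = [d for d, _ in valid]
    colors = [c for _, c in valid]
    agree = (max(depths) - min(depths) <= depth_tol
             and max(colors) - min(colors) <= color_tol)
    # Assumed: at least two views must agree for a pixel to count as stable;
    # a pixel seen by a single view cannot be cross-checked, so it is unstable.
    if len(valid) >= 2 and agree:
        return PixelClass.STABLE
    return PixelClass.UNSTABLE
```

Under this rule, stable pixels feed the weighted interpolation, unstable pixels go to candidate selection, and disoccluded pixels are left for depth-based inpainting.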

The main drawback of our method is the computational cost of Graph Cuts. However, we apply Graph Cuts only to unstable pixels, which make up a small fraction of the whole image, so the time spent on Graph Cuts is reduced.
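Restricting the graph to unstable pixels keeps it small. The sketch below shows the idea for the simplest case of two candidates per unstable pixel (for example, one from the left view and one from the right view) with a Potts smoothness term; such an energy is submodular, so one s-t minimum cut gives the exact optimum [9]. It is an illustration under stated assumptions, not the authors' implementation: the data costs are hypothetical inputs, Edmonds-Karp is used for brevity instead of the Boykov-Kolmogorov max-flow algorithm [11], and with more than two candidates an α-expansion scheme [10] would solve a sequence of binary cuts like this one. The names `select_candidates` and `max_flow_min_cut` are hypothetical.

```python
import math
from collections import defaultdict, deque
from typing import Dict, Hashable, Iterable, Set, Tuple

SOURCE = "__source__"  # terminal for label 0 (e.g. candidate from the left view)
SINK = "__sink__"      # terminal for label 1 (e.g. candidate from the right view)


def max_flow_min_cut(capacity: Dict[Hashable, Dict[Hashable, float]],
                     source: Hashable, sink: Hashable) -> Tuple[float, Set[Hashable]]:
    """Edmonds-Karp max-flow; returns (flow value, source side of a min cut)."""
    residual = defaultdict(lambda: defaultdict(float))
    for u, neighbors in capacity.items():
        for v, cap in neighbors.items():
            residual[u][v] += cap
            residual[v][u] += 0  # make sure the reverse residual edge exists
    flow = 0
    while True:
        # Breadth-first search for a shortest augmenting path.
        parent = {source: None}
        queue = deque([source])
        while queue and sink not in parent:
            u = queue.popleft()
            for v, cap in residual[u].items():
                if cap > 0 and v not in parent:
                    parent[v] = u
                    queue.append(v)
        if sink not in parent:
            # No augmenting path left: the reached nodes form the source side.
            return flow, set(parent)
        bottleneck = math.inf
        v = sink
        while parent[v] is not None:
            bottleneck = min(bottleneck, residual[parent[v]][v])
            v = parent[v]
        v = sink
        while parent[v] is not None:  # push the bottleneck flow along the path
            u = parent[v]
            residual[u][v] -= bottleneck
            residual[v][u] += bottleneck
            v = u
        flow += bottleneck


def select_candidates(pixels: Iterable[Hashable],
                      data_cost: Dict[Hashable, Tuple[float, float]],
                      neighbors: Iterable[Tuple[Hashable, Hashable]],
                      smooth_weight: float) -> Tuple[Dict[Hashable, int], float]:
    """Binary labeling of unstable pixels by one s-t min cut (Potts smoothness)."""
    pixels = list(pixels)
    capacity: Dict[Hashable, Dict[Hashable, float]] = defaultdict(dict)
    for p in pixels:
        cost0, cost1 = data_cost[p]
        capacity[SOURCE][p] = cost1  # cut when p ends on the sink side (label 1)
        capacity[p][SINK] = cost0    # cut when p stays on the source side (label 0)
    for p, q in neighbors:  # neighboring pixels with different labels pay smooth_weight
        capacity[p][q] = capacity[p].get(q, 0) + smooth_weight
        capacity[q][p] = capacity[q].get(p, 0) + smooth_weight
    energy, source_side = max_flow_min_cut(capacity, SOURCE, SINK)
    labels = {p: 0 if p in source_side else 1 for p in pixels}
    return labels, energy
```

For three unstable pixels in a row with costs `{"a": (0, 10), "b": (6, 5), "c": (10, 0)}`, neighbors `[("a", "b"), ("b", "c")]` and `smooth_weight=3`, the cut assigns `a` to label 0 and `b`, `c` to label 1, with total energy 8. Stable pixels never enter the graph, which is where the time saving comes from.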

Future work will focus on further improving synthesis quality by utilizing temporal information in successive video frames.

5. Acknowledgements

We would like to thank Interactive Visual Media Group, Microsoft Research for distributing multi-camera video data and the anonymous reviewers for their comments.

REFERENCES

- M. Tanimoto, “Overview of FTV (Free-Viewpoint Television),” Proceedings of the 2009 IEEE International Conference on Multimedia and Expo, New York, 28 June-3 July 2009, pp. 1552-1553. doi:10.1109/ICME.2009.5202803

- C. L. Zitnick, S. B. Kang, M. Uyttendaele, S. Winder and R. Szeliski, “High-Quality Video View Interpolation Using a Layered Representation,” ACM Transactions on Graphics, Vol. 23, No. 3, 2004, pp. 600-608. doi:10.1145/1015706.1015766

- K. Pulli, M. Cohen, T. Duchamp, H. Hoppe, L. G. Shapiro and W. Stuetzle, “View-Based Rendering: Visualizing Real Objects from Scanned Range and Color Data,” Proceedings of the Eurographics Workshop on Rendering Techniques ’97, St. Etienne, 16-18 June 1997, pp. 23-24.

- K. Müller, K. Dix, P. Merkle, P. Kauff and T. Wiegand, “Intermediate View Interpolation Based on Multiview Video plus Depth for Advanced 3D Video Systems,” 15th IEEE International Conference on Image Processing (ICIP), San Diego, 12-15 October 2008, pp. 2448-2451.

- Y. Mori, N. Fukushima, T. Yendo, T. Fujii and M. Tanimoto, “View Generation with 3D Warping Using Depth Information for FTV,” Signal Processing-Image Communication, Vol. 24, No. 1-2, 2009, pp. 65-72. doi:10.1016/j.image.2008.10.013

- A. C. Telea, “An Image Inpainting Technique Based on the Fast Marching Method,” Journal of Graphics Tools, Vol. 9, No. 1, 2004, pp. 25-36. doi:10.1080/10867651.2004.10487596

- S. Zinger, L. Do and P. H. N. de With, “Free-Viewpoint Depth Image Based Rendering,” Journal of Visual Communication and Image Representation, Vol. 21, No. 5-6, 2010, pp. 533-541. doi:10.1016/j.jvcir.2010.01.004

- K.-J. Oh, S. Yea and Y.-S. Ho, “Hole Filling Method Using Depth Based In-Painting for View Synthesis in Free Viewpoint Television and 3-D Video,” Picture Coding Symposium, Chicago, 6-8 May 2009, pp. 1-4.

- V. Kolmogorov and R. Zabih, “What Energy Functions Can Be Minimized via Graph Cuts?” IEEE Transactions on Pattern Analysis and Machine Intelligence, Vol. 26, No. 2, 2004, pp. 147-159. doi:10.1109/TPAMI.2004.1262177

- Y. Boykov, O. Veksler and R. Zabih, “Fast Approximate Energy Minimization via Graph Cuts,” IEEE Transactions on Pattern Analysis and Machine Intelligence, Vol. 23, No. 11, 2001, pp. 1222-1239. doi:10.1109/34.969114

- Y. Boykov and V. Kolmogorov, “An Experimental Comparison of Min-Cut/Max-Flow Algorithms for Energy Minimization in Vision,” IEEE Transactions on Pattern Analysis and Machine Intelligence, Vol. 26, No. 9, 2004, pp. 1124-1137. doi:10.1109/TPAMI.2004.60

- Z. Wang, A. C. Bovik and H. R. Sheikh, “Image Quality Assessment: From Error Measurement to Structural Similarity,” IEEE Transactions on Image Processing, Vol. 13, No. 4, 2004, pp. 600-612. doi:10.1109/TIP.2003.819861

- S. M. Rhee, Y. J. Yoon, I. K. Shin, Y. G. Kim, Y. J. Choi and S. M. Choi, “Stereo Image Synthesis by View Morphing with Stereo Consistency,” Applied Mathematics & Information Sciences, Vol. 6, 2012, pp. 195-200.

- M. Solh and G. AlRegib, “Hierarchical Hole-Filling for Depth-Based View Synthesis in FTV and 3D Video,” IEEE Journal of Selected Topics in Signal Processing, Vol. 6, No. 5, 2012, pp. 495-504. doi:10.1109/JSTSP.2012.2204723