Creative Education

Vol.5 No.13(2014), Article ID:48109,16 pages

DOI:10.4236/ce.2014.513135

An Asynchronous, Personalized Learning Platform―Guided Learning Pathways (GLP)

Cole Shaw1, Richard Larson2, Soheil Sibdari3

1Office of Digital Learning, Massachusetts Institute of Technology, Cambridge, MA, USA

2Engineering Systems Division, Massachusetts Institute of Technology, Cambridge, MA, USA

3Charlton College of Business, University of Massachusetts, Dartmouth, MA, USA

Email: rclarson@mit.edu

Copyright © 2014 by authors and Scientific Research Publishing Inc.

This work is licensed under the Creative Commons Attribution International License (CC BY).

http://creativecommons.org/licenses/by/4.0/

Received 3 June 2014; revised 5 July 2014; accepted 13 July 2014

ABSTRACT

The authors propose that personalized learning can be brought to traditional and non-traditional learners through an asynchronous learning platform that recommends to each learner the learning materials best suited to him or her. Such a platform would allow learners to advance towards individual learning goals at their own pace, with learning materials tailored to each learner’s interests and motivations. This paper describes the authors’ vision and design for a modular, personalized learning platform called Guided Learning Pathways (GLP), along with its characteristics and features. We provide detailed descriptions of, and propose frameworks for, critical modules such as the Content Map, Learning Nuggets, and Recommendation Algorithms. A threaded user scenario is provided for each module to help the reader visualize different aspects of GLP. We also discuss work done at MIT to support such a platform.

Keywords: Online Learning, Asynchronous Learning, Personalized Learning, Learning Styles, Pathways, Content Map, Instructional Technology, Recommendation Algorithm

1. Introduction

We live in a period of customization, with laser-fitted body-tight jeans, personally configured computers, and less welcome, highly targeted online advertising. We can customize our personal technology ecosystem, from computers to music, entertainment, and TV. Our fitness programs are tailored to our individual needs and goals.

But what about education? The dominant form of education today is “one size fits all”. All students proceed through a sequence of learning exercises at the same speed and with the same pedagogical model. This industrial-style paradigm has been the leading form of teaching for more than a century. Think of Taylorism, made famous by Henry Ford’s mass production of the Model T in the 1910s. Students in K-12 can be visualized as in-process widgets proceeding down a production line with 13 manufacturing steps, each corresponding to a school grade level. Concerning any customization of the output, Henry Ford said, “Yes, they can have any color, as long as it is black”.

Perhaps the greatest disruptive force in education today is Moore’s Law, which has made feasible customized learning environments, “laser fitted” for each student. Which is more important: customized jeans or customized learning environments? Yet we have the first and not yet the second. This paper offers a framework for the design of a computer-based system that could provide the second: a rich learning environment built by contributors from around the world, an environment that learns a student’s strengths, weaknesses, and learning style preferences, and one that allows each student to move at her preferred pace and through different learning paths. We call it “Guided Learning Pathways”.

Education is experiencing many shifts; Clayton Christensen says that it is being “disrupted” by the potential of online learning (Christensen, Johnson, & Horn, 2008). Picciano et al. (Picciano, Seaman, & Allen, 2010) note some barriers to a true transformation of education, such as changes in education policy, the adoption of blended learning, and higher education institutions not embracing online learning. Since they published their analysis in 2010, many new trends and technologies have emerged in the education field. Khan Academy® (Khan Academy Homepage) has enabled widespread blended learning in K-12, and prestigious universities have adopted online education through MOOCs (Massive Open Online Courses).

However, some of the more popular MOOCs still use the industrial model of education with a “pre-defined course”, where thousands of students must try to learn the same topics at the same pace during a given time period. This emphasis on seat time instead of topic-based mastery learning causes many students to drop out of the courses: they may have the ability to learn the material but struggle with the time constraints (Belanger, 2012). Given the current state of technology, asynchronous learning can support flexible and personalized learning, so that each learner focuses on mastering the topics rather than keeping to an externally imposed pace.

An asynchronous, personalized learning platform based on atomistic topics would also cater to the needs of individual learners, allowing them to learn topics based on their interests and background, with material suited to each person’s ability. For example, learners could prove their content mastery and start at the topic of hashing in lecture 9 of the edX 6.00x syllabus, instead of having to re-learn the course’s prior topics that they already understand (edX, 2012). The goal of using technology to achieve personalized learning stems from Bloom’s 1984 work on the “Two Sigma Problem”, which showed that one-to-one tutoring coupled with mastery learning raised student performance two standard deviations above that of a traditional classroom (Bloom, 1984). More recent research in traditional classrooms has also shown the benefits of letting students learn at their own pace and focus on topics that interest them (Rose & Meyer, 2012; Tullis & Benjamin, 2011).

The goal of technology-enabled personalized learning has been reinvigorated in recent years. The Department of Education’s National Education Technology Plan defines personalized education as “paced to learning needs, tailored to learning preferences, and tailored to the specific interests of different learners”, and a platform supporting personalized education is one of the plan’s Grand Challenges (US Department of Education, 2010). Researchers and startup companies have begun exploring adaptive technologies to support personalized learning in classrooms, although a comprehensive solution has not yet appeared (see Dede & Richards, 2012; Knewton, n.d.; Siemens et al., 2011; Time To Know, n.d.; Vander Ark, 2012).

We believe that an asynchronous, service-oriented platform can provide these features to hundreds of thousands of traditional and non-traditional students across the globe. While it would require significant upfront investment, the added cost for each additional learner would be negligible. Researchers and companies would be able to plug new and improved modules into the platform so that it continues to serve learners well. To achieve this goal, we propose an online, personalized learning platform called Guided Learning Pathways (GLP).

Many learners turn to asynchronous information sources on the Internet, such as MOOCs, MIT OpenCourseWare® (MIT), or Khan Academy (Khan Academy Homepage). However, they often have to sift through these sources to find useful, targeted material appropriate for their specific situations; furthermore, they may need to chain together multiple sources to cover all of their learning needs. For example, a newly graduated engineer at an aerospace firm may need to improve her knowledge of neural networks for a project. Yet she does not have the time or resources to take a traditional class at the local university or community college, and a textbook seems too abstract for her needs. Searching online may yield great information about neural networks, and existing OER (Open Educational Resources) search tools could help her find resources on the subject. But she needs to review some fundamental material, and she has to keep searching and reviewing additional sites just to define her “known unknowns”.

We believe that Guided Learning Pathways (GLP) can provide this type of “pre-requisite” information (her personalized pathway) using an expert-defined Content Map, and can automatically assess which topics she needs to master to achieve her overall learning goal. Furthermore, GLP will help learners achieve this goal at their own pace, through personalized learning and personalized content; for instance, examples of neural network usage in aerospace could be served to our example learner. Using data collected from a multitude of learners, GLP can provide targeted learning recommendations to maximize learners’ understanding and engagement, much as consumer Internet services and merchants provide content recommendations to their users.

This paper presents an overview of Guided Learning Pathways with additional detail on the modules that comprise the platform. A threaded example that continues through each module provides details on how the modules could interact with an example learner. Finally, we discuss some work at MIT that could support a platform like GLP.

2. Overview of GLP

Recent publications put forth ideas for personalized education platforms in classrooms (US Department of Education, 2010; Dede & Richards, 2012; Vander Ark, 2012). Some companies have started selling such platforms to schools. However, these platforms require a teacher or in-person facilitator to guide the students or to teach the content, which restricts them to physical classroom experiences and limits their reach and impact. Typically, the teachers help students with specific difficulties when they get stuck and provide guidance on which lessons, topics, or materials should be followed and in what sequence. As an alternative, GLP provides a large-scale, asynchronous platform where domain experts could encode their guidance, which could then be accessed by large numbers of learners.

GLP is envisioned to be a modular platform: as new learning technologies and improvements to existing technologies emerge, new modules could be integrated into the platform. Different learners or educators could select which type of module they wish to use; one may wish to use a crowd-sourced and “approved” Content Map, while another may wish to use a custom version created by a high-school teacher in Ohio. As another example, researchers are currently working to improve intelligent tutors by detecting learner emotions (learners’ affective state), and as this technology matures and proves useful, it could be incorporated into GLP as one intelligent tutor option (D’Mello et al., 2010). A service-oriented architecture underlying GLP would make this possible.

We list some commonly used terminology in Table 1; more detailed explanations are given in later sections.

As learners make their way through their individual pathways, each has a personalized experience, which might include a graphical representation of her pathway. GLP will access data repositories of Learning Nuggets, such as MIT’s Core Concept Catalog (Merriman, n.d.), and recommend to each learner the Nuggets most suitable for her. GLP will make this determination based on each learner’s learning style preferences, personal interests, and other learners’ success with each Nugget; however, learners will be free to choose among the available resources. Even though it has not been proven that each individual has a single, unique learning style (Pashler, McDaniel, Rohrer, & Bjork, 2008), learners may set a preference as a default and then select whatever seems more engaging to them at any given moment. As more learners use the system and GLP gathers better data on Nuggets, GLP can filter more effectively on Nugget quality and discard poorly performing Nuggets to maintain a strong learning environment.

GLP can also match learners to create learning communities. Previous research shows that such communities help learners master content (Lenning & Ebbers, 1999). GLP will be able to use its data on learner history and mastery to form virtual learning communities where members can help each other learn.
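The paper leaves the grouping method open. As one hypothetical illustration, the Python sketch below groups learners who are currently studying the same topic and adds one learner who has already mastered that topic as a peer helper. The `form_communities` helper, the group size, and the learner names are assumptions, not part of any GLP specification.

```python
from collections import defaultdict


def form_communities(current_topic, mastered, size=4):
    """Group learners by the topic they are currently studying.

    current_topic: learner name -> topic being studied
    mastered: learner name -> set of topics already mastered
    size: maximum group size including one peer helper (assumed >= 2)
    Returns a list of (topic, members) pairs.
    """
    by_topic = defaultdict(list)
    for name, topic in sorted(current_topic.items()):
        by_topic[topic].append(name)

    groups = []
    for topic, learners in sorted(by_topic.items()):
        # Learners who have already mastered this topic can act as helpers.
        helpers = sorted(n for n, done in mastered.items() if topic in done)
        step = size - 1  # leave one seat per group for a helper
        for i in range(0, len(learners), step):
            members = learners[i:i + step]
            if helpers:
                # Rotate through helpers so no single learner is overloaded.
                members.append(helpers[(i // step) % len(helpers)])
            groups.append((topic, members))
    return groups


current = {"Mary": "Functions", "Mark": "Functions", "Ana": "Derivatives"}
done = {"Mary": set(), "Mark": set(), "Ana": {"Functions"}, "Raj": {"Functions", "Derivatives"}}
for topic, members in form_communities(current, done):
    print(topic, members)
```

Grouping by current topic is only one choice; a real module might also weigh shared non-academic interests or time zones.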

The GLP platform will be modular, so that different organizations or individuals can plug in their own modules. Envisioned modules could include (but are not limited to) the following: 1) Content Map, 2) Data Repository, 3) Intelligent Tutors, 4) Learning Communities, 5) Learning Nuggets, 6) Recommendation Algorithms, and 7) User Interfaces. Several of these are described in more detail in the rest of this paper. This document presents a fluid and evolving description of GLP, and the examples described within represent possible GLP implementations; readers should not interpret them as the only implementations possible.

3. Learners

3.1. Description

We begin by defining the types of learners that use GLP. As mentioned in the Overview of GLP, we envision that both traditional and non-traditional learners will use the GLP platform. We define traditional learners as those in age-appropriate learning environments with access to a qualified teacher, while non-traditional learners may include youth in rural areas, people in developing countries, or lifelong learners with specific application needs. Regardless of each individual’s situation, GLP will personalize her learning experience based on her needs and goals.

Each learner embodies a set of inherent attributes that GLP uses to improve her learning; these attributes define her needs and the context of her learning. Some of the attributes may include non-academic interests, which help tailor the learning material and better engage the learner. If she is a basketball fan, she may get more basketball-related Nuggets. Similarly, her explicit educational learning goals (e.g. introductory biology math) and interests (e.g. computational biology) help GLP focus the types of material and topics presented to her. A learner also starts using GLP with a body of knowledge different from that of other users, and by determining her starting point, GLP ensures that the material presented to her is at the right difficulty level.

A learner may also have learning preferences that change over time. Parameters like her preferred learning style (visual, textual, or auditory) or even her preferred interface style (e.g. node-based or virtual world) could be adapted to better engage the learner and improve her learning.

Some of these attributes can be determined by GLP upon learner registration, either through a direct questionnaire or a basic assessment test. GLP feeds this information into its Recommendation Algorithms to rank the Nuggets for each learner. As GLP gathers more information from a large number of learners and learns more about each individual’s learning patterns, its recommendations should improve and help learners master the topics more readily. For example, a learner may claim a preference for visual materials, but GLP may notice that she actually performs better when using auditory materials and adjust her preferences automatically. More detail on this is provided in the Nugget Recommendation Algorithms section.
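One hedged way to realize the automatic adjustment described above is sketched below in Python. It assumes GLP can measure a mastery gain after each Nugget (for example, the change in a mastery estimate); the `StylePreference` class, the `min_samples` threshold, and the mean-gain rule are illustrative assumptions, not the authors’ algorithm.

```python
from collections import defaultdict

STYLES = ("visual", "textual", "auditory")


class StylePreference:
    """Hypothetical tracker that revises a learner's declared learning style."""

    def __init__(self, declared, min_samples=5):
        if declared not in STYLES:
            raise ValueError(f"unknown style: {declared}")
        self.declared = declared
        self.min_samples = min_samples  # assumed evidence needed before trusting a style's mean
        self.gains = defaultdict(list)  # style -> observed mastery gains

    def record(self, style, gain):
        """Log the mastery gain observed after the learner used a Nugget of this style."""
        self.gains[style].append(gain)

    def current(self):
        """Return the style with the best mean gain among styles with enough samples;
        fall back to the declared style until there is enough evidence."""
        best, best_mean = self.declared, None
        for style, values in self.gains.items():
            if len(values) < self.min_samples:
                continue
            mean = sum(values) / len(values)
            if best_mean is None or mean > best_mean:
                best, best_mean = style, mean
        return best


pref = StylePreference("visual", min_samples=3)
for g in (0.05, 0.10, 0.08):
    pref.record("visual", g)
for g in (0.20, 0.25, 0.18):
    pref.record("auditory", g)
print(pref.current())  # auditory
```

The declared style remains the default until enough evidence accumulates, which mirrors the text’s idea of an initial preference that GLP later refines.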

3.2. Threaded Example

Mary Smith wants to be a biology major and is entering her freshman year at State University, where Mr. Mathlet coordinates introductory courses. It is the start of the school year, and he has almost ten thousand new students to assign courses to. He sees that Mary has an interest in biology, so he assigns her to take the State University Biology Calculus pathway to complete in the first academic year.

After Mary gets the registration e-mail in her university e-mail account, she navigates to the GLP website. She registers and is presented with several different learning materials about trees. One is a visual resource that shows her a short video and some graphics, another is a text passage describing the same information, and a third is an audio recording of a botanist in the field describing a rain forest. Mary selects the visual category as her preferred option. GLP records this and uses it as her initial learning style preference; GLP will initially recommend more visual Nuggets to her, but it may adjust the recommendations as it learns more about her learning habits.

Mary also has a chance to list her non-academic interests. This information will help customize the problem sets and Nuggets that GLP recommends to her, and it could be used to match her with an on- or off-campus learning community. She imports her interests from a social media site; they include jazz music, baseball, and action movies.

4. User Interface

4.1. Description

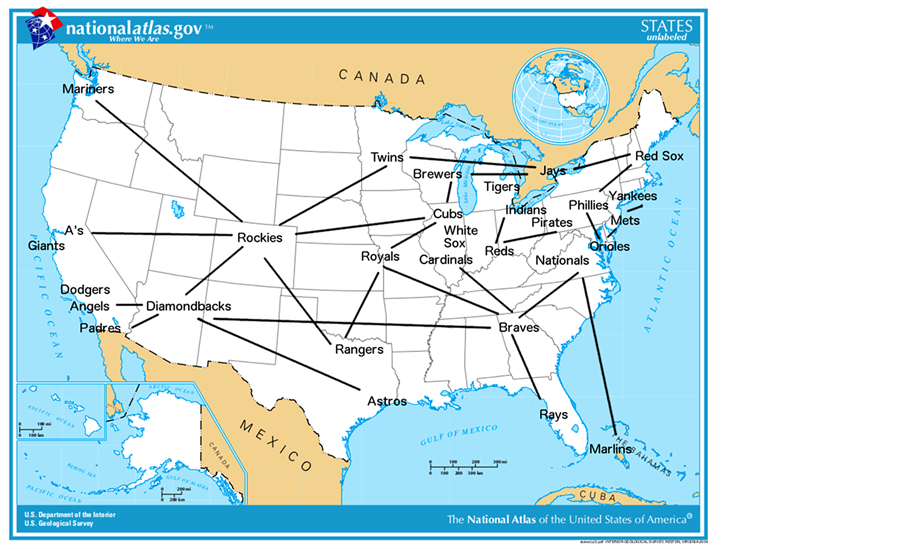

The User Interface allows each learner to navigate her pathway in GLP through a visualization method that may be more intuitive for her (pathways are described more in the Content Map section). One can imagine that these interfaces are further personalized with overlays that cater to each learner’s personal interests—for example, John and Mary might both prefer geographic interfaces, but John likes soccer and Mary likes baseball, so John’s Content Topics are mapped to soccer stadiums while Mary’s are mapped to baseball stadiums.

Furthermore, two different levels of interface could be presented in GLP, one for the Content Map and another for the Nuggets. For example, for geographic interfaces, a Content Map might be overlaid onto the United States with cities representing each topic, but Nuggets might be mapped to rooms inside a building within the topic city. In this case, Cleveland might be where learners study Derivatives, and the Terminal Tower might house all of the Nuggets classified as Lecture Notes, with different floors representing different majors (odd floors contain Biology notes, while even floors contain Engineering notes).

4.2. Threaded Example

After importing her interests, Mary then has a chance to pick an interface style. GLP offers some pre-defined categories, including node-based or graph-theoretic (shown in Figure 2 and Figure 3), geographic or map-based (Figure 1), and 3D virtual world. Since Mary enjoys geography, she selects the geographic option. GLP knows that she has an interest in baseball, so it uses a baseball overlay. GLP then starts her off with a trip around the USA and asks her to visit all the Major League Baseball stadiums with a general East-to-West direction of travel. She sees the initial map from Figure 1, which shows different topics in Biology Calculus overlaid onto baseball stadium locations.

Earlier in the afternoon, Mary had chatted with a new friend, Mark, who was also in the class. Mark prefers simple interfaces when he works on the computer, to minimize distractions, and he selected a node-based interface when he registered. Mary appreciates that she selected an interface that would be more dynamic and engaging for her.

5. Content Map

5.1. Description

After a learner registers, she is introduced to the GLP Content Map. The Content Map takes the traditional, high-level idea of a subject (like calculus) and breaks it down into topic-based maps. Instead of every topic being linearly connected as in a standard course syllabus or textbook, topics are connected to related topics, and such topic strings can be learned in parallel. For example, learning Fractions does not depend on knowledge associated with Exponents, so the two concepts could be learned in parallel; conversely, Addition and Subtraction need to be learned before Multiplication and Division, so these topics must be learned sequentially (Khan Academy Dashboard, n.d.). Thus, embedded within each topic is a list of pre-requisite topics that a learner must master before starting that topic.

Figure 1. Example of geographic user interface (original image courtesy of National Atlas (National Atlas of the United States, 2003)).
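Because each topic stores its pre-requisites, the Content Map forms a directed acyclic graph, and the topics that can be learned in parallel can be read off level by level. The Python sketch below uses a layered form of Kahn’s topological sort; the `learning_levels` helper and the arithmetic topic graph are hypothetical examples modeled on the text, not actual Khan Academy data.

```python
from collections import defaultdict


def learning_levels(prereqs):
    """Group topics into levels so that every topic's pre-requisites lie in
    earlier levels; topics within one level can be studied in parallel.

    prereqs: topic -> set of direct pre-requisite topics
    Raises ValueError if the pre-requisite graph contains a cycle.
    """
    topics = set(prereqs) | {p for ps in prereqs.values() for p in ps}
    indegree = {t: len(prereqs.get(t, set())) for t in topics}
    dependents = defaultdict(set)
    for topic, ps in prereqs.items():
        for p in ps:
            dependents[p].add(topic)

    level = {t for t in topics if indegree[t] == 0}
    levels = []
    while level:
        levels.append(level)
        next_level = set()
        for t in level:
            for d in dependents[t]:
                indegree[d] -= 1
                if indegree[d] == 0:  # all pre-requisites now covered
                    next_level.add(d)
        level = next_level

    if sum(len(lvl) for lvl in levels) != len(topics):
        raise ValueError("pre-requisite graph contains a cycle")
    return levels


# Hypothetical arithmetic Content Map (topic -> direct pre-requisites).
arithmetic = {
    "Addition": set(),
    "Subtraction": set(),
    "Multiplication": {"Addition", "Subtraction"},
    "Division": {"Addition", "Subtraction"},
    "Exponents": {"Multiplication"},
    "Fractions": {"Multiplication", "Division"},
}
for i, lvl in enumerate(learning_levels(arithmetic), start=1):
    print(i, sorted(lvl))
```

In this toy graph, Fractions and Exponents land in the same level, matching the text’s point that they can be learned in parallel once their shared pre-requisites are mastered.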

When she registers, the learner can take an assessment test to determine her placement on the Content Map and reveal which topics she may already have mastered. Given the pre-requisite relationships within GLP, it will be assumed that if a learner tests out of a topic, she also has mastery of its pre-requisite topics. If it is later discovered that she is weak in a specific area, GLP can add topics to the learner’s pathway to reinforce her knowledge. As the learner uses GLP, she can also opt to test out of additional topics by proving her mastery through an assessment test. Ways to prove mastery might include correctly answering several questions in a row, or achieving a 95% probability of mastery in a Bayesian Knowledge Tracing model (Corbett & Anderson, 1994).
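To make the 95% criterion concrete, the Python sketch below implements the standard Bayesian Knowledge Tracing update (Corbett & Anderson, 1994): the mastery estimate is conditioned on each observed answer and then adjusted for the chance of learning at that step. The parameter values (prior, learn, guess, slip) are assumptions chosen for illustration; in practice they would be fitted per topic.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass(frozen=True)
class BKTParams:
    p_init: float = 0.20     # P(L0): topic already mastered before any practice
    p_transit: float = 0.15  # P(T): learner acquires the skill at a practice step
    p_guess: float = 0.20    # P(G): correct answer without mastery
    p_slip: float = 0.10     # P(S): wrong answer despite mastery


def bkt_update(p_mastery: float, correct: bool, prm: BKTParams) -> float:
    """Condition the mastery estimate on one answer, then apply the learning transition."""
    if correct:
        evidence = p_mastery * (1 - prm.p_slip)
        total = evidence + (1 - p_mastery) * prm.p_guess
    else:
        evidence = p_mastery * prm.p_slip
        total = evidence + (1 - p_mastery) * (1 - prm.p_guess)
    posterior = evidence / total
    return posterior + (1 - posterior) * prm.p_transit


def mastery_estimate(answers: Iterable[bool], prm: Optional[BKTParams] = None) -> float:
    """Run BKT over a sequence of answers, starting from the prior P(L0)."""
    if prm is None:
        prm = BKTParams()
    p = prm.p_init
    for correct in answers:
        p = bkt_update(p, correct, prm)
    return p


def has_mastered(answers: Iterable[bool], threshold: float = 0.95) -> bool:
    return mastery_estimate(answers) >= threshold


# With these assumed parameters, two correct answers are not enough; three are.
print(round(mastery_estimate([True, True]), 3))  # 0.89
print(has_mastered([True, True, True]))          # True
```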

By focusing the Content Map on topics instead of “subjects”, GLP enables more efficient learning of core topics across disciplines. For example, Physics and Calculus might share many of the same topics, but in a Content Map the shared topics could be merged and learners would not have to re-learn the concepts at different times.

However, the Content Maps could also be customized for different majors and interests. Tailoring subjects like mathematics to engineering has been shown to improve student engagement and retention at several universities in the USA (Lord, 2012), so GLP will extend the concept to all majors. The National Research Council’s (NRC) BIO2010 report also supports the idea of specialized math; in it, the NRC outlined the specific mathematics requirements for an undergraduate biology curriculum (National Research Council, 2010). Thus, in GLP, biology majors could learn what is relevant to their interests, with examples and topics oriented towards their field, and an engineering student might learn Derivatives differently than a biology major. An extension of this idea is that different majors might require different levels of mastery for the same topic: in a theoretical case, biology majors might need to master Derivatives at only 60%, whereas engineers might need to master it at 95%. Topics themselves could also be categorized according to rigor; for example, there could be Introductory Statistics as well as Graduate Statistics.
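Per-major mastery requirements could be stored as an attribute of each topic. The Python sketch below is hypothetical: the `ContentTopic` fields and the 0.95 fallback are assumptions and are not meant to reproduce Table 2, while the 60% and 95% thresholds are the theoretical values from the text.

```python
from dataclasses import dataclass, field


@dataclass
class ContentTopic:
    name: str
    rigor: str                                             # e.g. "Introductory", "Graduate"
    prerequisites: set = field(default_factory=set)        # names of pre-requisite topics
    mastery_threshold: dict = field(default_factory=dict)  # major -> required P(mastery)
    default_threshold: float = 0.95                        # assumed fallback requirement

    def required_mastery(self, major):
        return self.mastery_threshold.get(major, self.default_threshold)

    def is_mastered(self, major, p_mastery):
        """Compare a mastery estimate (e.g. from a knowledge-tracing model) to the major's requirement."""
        return p_mastery >= self.required_mastery(major)


derivatives = ContentTopic(
    name="Derivatives",
    rigor="Introductory",
    prerequisites={"Functions", "Limits"},
    mastery_threshold={"Biology": 0.60, "Engineering": 0.95},  # theoretical values from the text
)
print(derivatives.is_mastered("Biology", 0.70))      # True
print(derivatives.is_mastered("Engineering", 0.70))  # False
```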

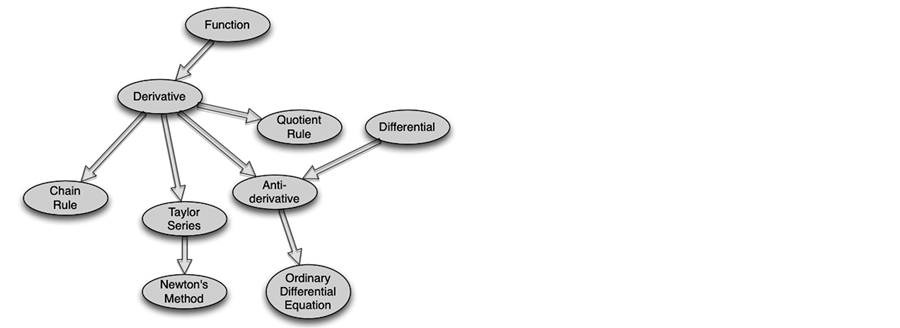

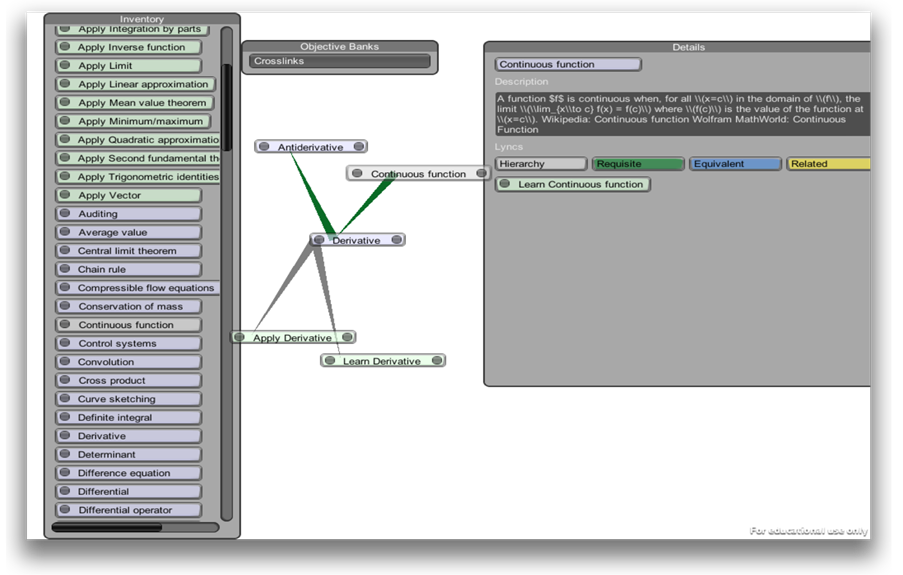

The idea of a Content Map is similar to the learning trajectories used in youth math education and research, or to the ASSISTments® Skill Diagram (Daro, Mosher, & Corcoran, 2011; Heffernan, Heffernan, & Brest, n.d.). The GLP map could initially be designed by domain experts and then refined over time with GLP user data. As learners use Nuggets and note areas of confusion or add external resources to help clarify a topic, other learners can comment on the usefulness of these resources. Topics can then be broken down into sub-topics to create a more detailed Content Map. At the university level, MIT Crosslinks provides one example of an expert-generated Content Map that includes university-level Calculus topics (MIT Crosslinks). A portion of the Crosslinks data is shown in Figure 2.

Another way to modify the map could be indirectly, through learner success. Research has shown that children learn to read through different pathways (Fischer, Rose, & Rose, 2006), and trying to teach a child with an incorrect framework or map can be ineffective. One can imagine that sequences of topics are chained together, but different learners might learn each sequence in a different order, depending on their personal cognitive state. However, this will need to be better defined through more research; GLP could enable this by including a framework that allows learners to try different sequences of topics.

Table 2 summarizes the Content Topic Attributes that GLP will use to guide learners. Each topic in the Content Map will need to have this information.

5.2. Pathways

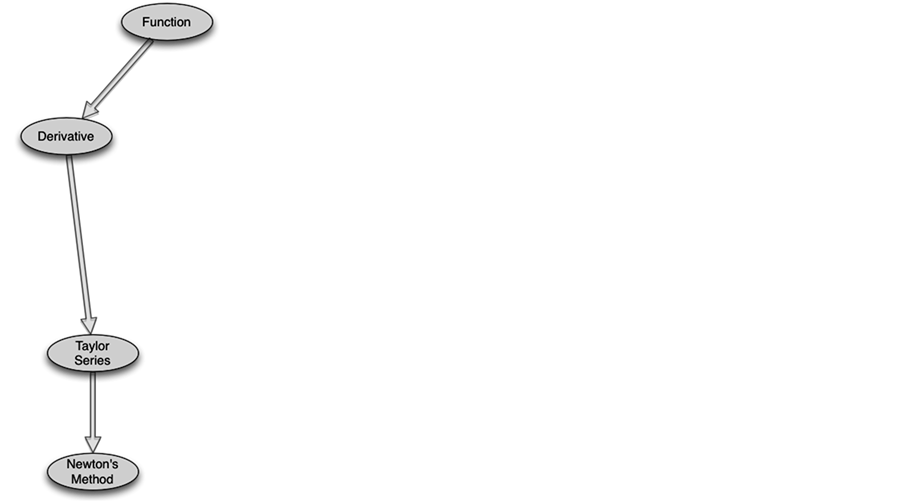

As defined above, pathways are sub-sections of the Content Map that help achieve specific learning goals. These pathways could be pre-defined by domain experts or determined by GLP based on aggregated Learner history. Figure 3 shows an example pathway to learn Newton’s Method:

To determine which pathway a learner should follow, GLP uses her learning goal and major field of study to search for a pre-defined pathway. This pre-defined pathway could be crowd-sourced from other GLP users and act as a modifiable template for each individual. A learner’s overall pathway should include her explicit learning goal, if she picked a specific topic (e.g. Derivatives), or all of the topics related to her field of study (e.g. introductory biology calculus). All pre-requisite topics supporting her learning goal are included in the pathway by default, and she must demonstrate mastery of these topics through an assessment test or GLP activities.
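The pathway construction described here, together with the earlier assumption that testing out of a topic implies mastery of its pre-requisites, amounts to taking transitive closures over the pre-requisite graph. The Python sketch below assumes a simple dictionary from each topic to its direct pre-requisites; the helper names and the Newton’s Method topic graph are hypothetical and are not the data behind Figure 3.

```python
def all_prerequisites(topic, prereqs):
    """Return every direct and indirect pre-requisite of `topic` (iterative DFS)."""
    seen = set()
    stack = list(prereqs.get(topic, ()))
    while stack:
        t = stack.pop()
        if t not in seen:
            seen.add(t)
            stack.extend(prereqs.get(t, ()))
    return seen


def build_pathway(goals, prereqs, tested_out=()):
    """Goal topics plus all their pre-requisites, minus anything already mastered.

    Testing out of a topic is treated as mastery of that topic and of all
    of its pre-requisites.
    """
    required = set(goals)
    for goal in goals:
        required |= all_prerequisites(goal, prereqs)
    mastered = set(tested_out)
    for topic in tested_out:
        mastered |= all_prerequisites(topic, prereqs)
    return required - mastered


# Hypothetical pre-requisite graph (topic -> direct pre-requisites).
calculus = {
    "Newton's Method": {"Derivatives", "Linear Approximation"},
    "Linear Approximation": {"Derivatives"},
    "Derivatives": {"Limits", "Functions"},
    "Limits": {"Functions"},
    "Functions": set(),
}
print(sorted(build_pathway(["Newton's Method"], calculus, tested_out={"Limits"})))
# ['Derivatives', 'Linear Approximation', "Newton's Method"]
```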

When a learner registers, GLP places her at the appropriate starting point of her pathway, which could be at the very beginning or halfway through. The exact location depends on the learner’s prior mastery and could differ for each individual student. As the learner uses GLP and masters additional topics, she moves along the pathway towards her learning goal. A learner may follow a different sequence of topics within her pathway than other learners do to reach the same goal (Fischer, Rose, & Rose, 2006). Thus, as GLP evaluates her individual performance, it can offer the learner different pathway options.

Figure 2. Subset of MIT Crosslinks data (MIT).

Table 2. Content topic attributes.

Figure 3. Example pathway to learn Newton’s method (MIT).

5.3. Threaded Example

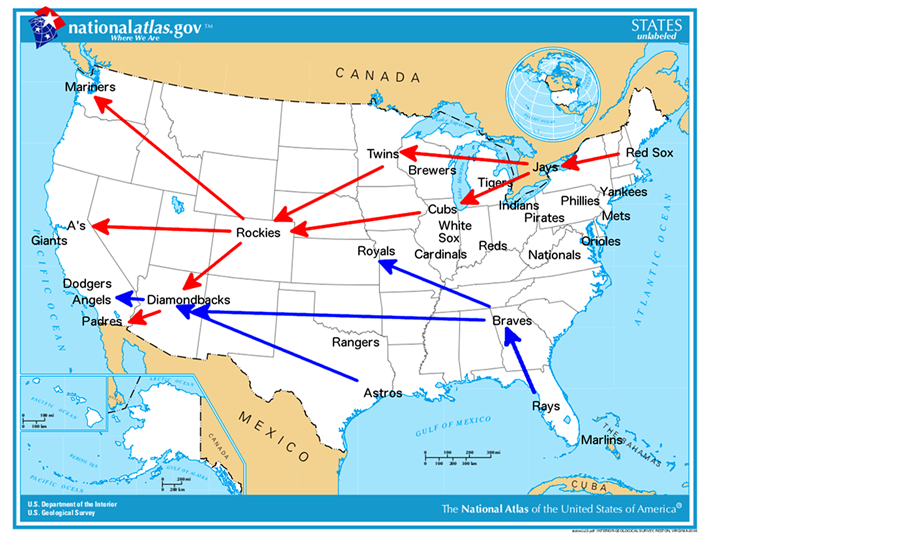

GLP analyzed the learning goals that Mr. Mathlet assigned to Mary. It has determined that she needs to master a set of topics from the Calculus Content Map—the blue and red arrows in Figure 4 represent two different pathways between topics within Biology Calculus that both allow her to achieve her learning goals. Table 3 shows one possible topic mapping for the blue arrows, using data from MIT Crosslinks (MIT Crosslinks). Mary can select the pathway that seems best suited for her, with some guidance from GLP. Based on the popularity rating of the blue pathway, she chooses to follow it first—if it does not seem to be working, she can always change later on.

Mary then gets a short assessment test to determine where on the blue pathway she should start. GLP finds that in some topics, Mary is actually at an intermediate level, while in others she is at a basic level. GLP records the topics that she already knows and places her at the start of the pathway—Mary needs to review some topics.

6. Content Recommendation Algorithms

6.1. Description

Two “levels” of recommendation algorithms will be used by GLP: for the Content Topics and the Nuggets. It can be imagined that different types of algorithms are tested at each level or adapted to individual learners and backgrounds, so that learners receive the most useful recommendations for them. Here we describe the Content Topic recommendation algorithms.

The Content Topic recommendation algorithm identifies the Content Topics that remain on the learner’s pathway and that he or she has not yet mastered. As noted above in the Content Map section, different learners could actually have different pathways to reach the same learning goal, which requires additional research. In the Pathways section we described how a learner’s pathway includes all of the topics required for her to achieve mastery of her learning goal. Once GLP knows this set of topics, it recommends a portion of them to the learner for her to study. These recommended topics are those whose pre-requisites the learner has all mastered, or topics with no pre-requisites. By showing mastery of all pre-requisite topics (either through the initial assessment or through GLP usage), the learner demonstrates that she is ready to proceed to any of these topics. The learner can also follow her own interests and choose to study topics not presented by GLP, provided she has mastered the pre-requisites for her desired topic.

Figure 4. Example geographic GLP interface (original image courtesy of National Atlas (National Atlas of the United States, 2003)).
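The selection rule above (recommend unmastered pathway topics whose pre-requisites are all mastered) can be sketched in a few lines of Python. The topic graph is hypothetical and only loosely follows Mary’s example; `recommend_topics` is an illustrative helper, not part of a published GLP interface.

```python
def recommend_topics(pathway, prereqs, mastered):
    """Unmastered topics on the pathway whose pre-requisites are all mastered.

    Topics with no pre-requisites qualify automatically, since the empty set
    is a subset of any mastered set.
    """
    mastered = set(mastered)
    return sorted(
        topic for topic in pathway
        if topic not in mastered and set(prereqs.get(topic, ())) <= mastered
    )


# Hypothetical slice of a Biology Calculus pathway (topic -> direct pre-requisites).
prereqs = {
    "Functions": set(),
    "Differential": set(),
    "Derivatives": {"Functions"},
    "Chain Rule": {"Derivatives", "Differential"},
}
pathway = set(prereqs)
print(recommend_topics(pathway, prereqs, mastered=set()))          # ['Differential', 'Functions']
print(recommend_topics(pathway, prereqs, mastered={"Functions"}))  # ['Derivatives', 'Differential']
```

With nothing mastered, only the topics without pre-requisites are offered, which is the situation Mary faces in the threaded example.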

6.2. Threaded Example

Since Mary selected the blue pathway, GLP calculates the topics for which she has shown sufficient mastery of all the pre-requisites. However, during her assessment test, Mary did not achieve mastery in any topic, even though she did demonstrate knowledge in some of the basic topics like Derivatives and Functions. As a result, GLP searches for topics with no pre-requisites that Mary can start with.

GLP finds two topics along the blue pathway with no pre-requisites—Functions in Tampa Bay, and Differential in Houston. It presents both options to Mary. She still remembers some of the concepts in Functions from her high school class, so she decides to visit the Tampa Bay Rays.

7. Learning Nuggets

7.1. Description

Once a learner selects a topic, GLP uses statistical methods to infer her learning preferences and skills in order to recommend the Learning Nuggets best suited to her (described in the Nugget Recommendation Algorithms section). These Nuggets include lecture notes, media (video, audio, etc.), assignments, and assessment tools that are crowd-sourced from public contributors as Open Educational Resources. Nuggets will have an associated level of rigor to suit different learners and could range from elementary school to postgraduate level. Each Nugget will also receive a dynamic rating that reflects its effectiveness in helping learners master its topic.

The learner studies as many Nuggets as she wants, before choosing to take a topic assessment to test her mastery level. If she proves her mastery of the topic, she can move on and select another topic to study. If the learner has not mastered the topic, she will be presented with a re-ranked list of Nuggets for the same topic.
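The paper does not specify how the list is re-ranked after a failed assessment. One simple possibility, sketched below in Python under that assumption, is to down-weight the Nuggets the learner has already studied so that untried Nuggets rise while strong, already-seen ones remain available. The `rerank_after_failure` helper, the `penalty` factor, and the scores are hypothetical.

```python
def rerank_after_failure(scores, studied, penalty=0.5):
    """Re-rank a topic's Nuggets after a failed topic assessment.

    scores: Nugget id -> current recommendation score (higher is better)
    studied: ids of Nuggets the learner used before the failed assessment
    penalty: assumed multiplier (< 1) applied to already-studied Nuggets
    """
    adjusted = {nid: s * (penalty if nid in studied else 1.0) for nid, s in scores.items()}
    return sorted(adjusted, key=adjusted.get, reverse=True)


scores = {"video-a": 0.9, "notes-b": 0.7, "applet-c": 0.5}
print(rerank_after_failure(scores, studied={"video-a"}))  # ['notes-b', 'applet-c', 'video-a']
```

A multiplicative penalty, rather than removing studied Nuggets outright, keeps a very effective Nugget near the top if nothing else scores close to it.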

To help match Nuggets to learners, Nuggets need to be tagged with metadata. Some of these tags have been mentioned above, such as rigor. Others might include learning style, media type (e.g. videos, lectures, or activities), or non-academic themes. This requires that GLP include an initial way to identify a learner’s preferred learning style. Learning styles could be defined as visual, textual, or auditory; note that this means a video-based resource could be tagged with a visual, textual, or auditory learning style, depending on the characteristics of the video.
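A minimal, hypothetical metadata record for a Nugget is sketched below in Python. The field names are assumptions chosen to mirror the tags mentioned here (rigor, learning style, media type, non-academic themes), not the contents of Table 5. The sketch also shows that two videos can carry different learning-style tags, and how hard constraints such as topic and rigor might narrow the candidate set before any ranking.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Nugget:
    nugget_id: str
    topic: str
    rigor: str                       # e.g. "Undergraduate", "Graduate"
    style: str                       # "visual", "textual", or "auditory"
    media: str                       # e.g. "video", "lecture notes", "applet"
    themes: frozenset = frozenset()  # non-academic themes, e.g. {"baseball"}


def candidates(nuggets, topic, rigor):
    """Keep only Nuggets for the requested topic at the requested rigor level."""
    return [n for n in nuggets if n.topic == topic and n.rigor == rigor]


library = [
    Nugget("n1", "Functions", "Undergraduate", "visual", "video", frozenset({"baseball"})),
    Nugget("n2", "Functions", "Undergraduate", "auditory", "video"),  # narrated, few visuals
    Nugget("n3", "Functions", "Graduate", "textual", "lecture notes"),
    Nugget("n4", "Derivatives", "Undergraduate", "visual", "applet"),
]
print([n.nugget_id for n in candidates(library, "Functions", "Undergraduate")])  # ['n1', 'n2']
```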

Nuggets will also be categorized into different types, each of which represents a different pedagogical tool. Some example categories are shown in Table 4.

Over time, a learner’s preferred learning style could be refined from her initial selection by analyzing her behavior in GLP and which Nuggets prove most effective in advancing her mastery. While research has not definitively shown that learners have a single learning style that helps them learn most effectively (Pashler, McDaniel, Rohrer, & Bjork, 2008), providing multiple options that engage learners in different ways is an alternative approach, incorporated into the Universal Design for Learning (UDL) framework (CAST, n.d.). By providing different types of Nuggets, GLP merges both approaches: it offers the flexibility of UDL and learner choice while also providing structure for learners who have a preferred learning modality most of the time. As Pashler et al. (Pashler, McDaniel, Rohrer, & Bjork, 2008) mention, there is anecdotal evidence of individual learners “getting” a topic using a specific learning style when a different style had not worked for them before or had not worked for other learners, so providing multiple options is essential (and limiting learners to a single style may be unwise).

As noted above, Nuggets could be crowdsourced from high-quality, existing OER or created specifically for GLP. A quality-review process will ensure that Nuggets meet GLP’s criteria for inclusion in the platform. Such crowdsourcing of content has proven successful on other Internet platforms, such as Wikipedia, and in open-source software such as Linux.

GLP will constantly review and evaluate the Nuggets after a learner uses them. Over time, if a Nugget proves more useful for a subset of learners, GLP will recommend that Nugget more often for other learners with similar backgrounds. However, if a Nugget proves less useful or detrimental to a subset of learners, GLP will either remove the Nugget for that subset of learners or remove it completely from the Data Repository. Table 5 summarizes Learning Nugget Attributes.
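The review loop just described can be sketched as a tracker that counts, for each Nugget and each group of similar learners, how often use of the Nugget was followed by mastery. This is a minimal sketch under stated assumptions: the class and method names, the `min_uses` and `remove_below` thresholds, and the notion of a “segment” label for learners with similar backgrounds are all hypothetical. It also simplifies attribution by crediting mastery to every Nugget used before a passed assessment.

```python
from collections import defaultdict


class NuggetEfficacyTracker:
    """Hypothetical tracker of Nugget outcomes per learner segment.

    A 'segment' stands for "learners with similar backgrounds"; how GLP would
    form segments is not specified, so it is just a string label here.
    """

    def __init__(self, min_uses=30, remove_below=0.2):
        self.min_uses = min_uses          # minimum evidence before judging a Nugget
        self.remove_below = remove_below  # mastery rate below which a Nugget is hidden
        self.uses = defaultdict(int)      # (nugget_id, segment) -> number of uses
        self.mastered = defaultdict(int)  # (nugget_id, segment) -> uses followed by mastery

    def record(self, nugget_id, segment, mastered):
        key = (nugget_id, segment)
        self.uses[key] += 1
        self.mastered[key] += int(mastered)

    def mastery_rate(self, nugget_id, segment):
        """Share of uses followed by mastery, or None if the segment never used it."""
        key = (nugget_id, segment)
        uses = self.uses.get(key, 0)  # .get avoids inserting empty keys
        return self.mastered.get(key, 0) / uses if uses else None

    def hidden_for(self, nugget_id, segment):
        """True once there is enough evidence that the Nugget fails this segment."""
        rate = self.mastery_rate(nugget_id, segment)
        return (rate is not None
                and self.uses[(nugget_id, segment)] >= self.min_uses
                and rate < self.remove_below)

    def remove_globally(self, nugget_id):
        """True if the Nugget is hidden for every segment that has used it."""
        segments = [seg for (nid, seg) in self.uses if nid == nugget_id]
        return bool(segments) and all(self.hidden_for(nugget_id, s) for s in segments)
```

`hidden_for` corresponds to removing a Nugget for one subset of learners, and `remove_globally` to removing it from the Data Repository; the higher mastery rates recorded here would feed the “recommend more often” side through the ranking score.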

GLP combines the Nugget attributes listed in Table 5 with learner attributes, such as major field of study, non-academic interests, and previous knowledge, to create personalized rankings of Nuggets for each learner. The most highly recommended Nuggets are those that GLP estimates can best help the learner master a specific Content Topic. We provide more detail in the Nugget Recommendation Algorithms section.

7.2. Threaded Example

Mary chose to visit the Tampa Bay Rays and Tropicana Field®10 first, where she will study Functions. Entering the stadium, she sees that different sections contain different rigor levels and types of Nuggets. The Box Suites hold Undergraduate Interactive Applets, the Lower Deck, First Base seats hold Graduate Lecture Notes, and the Upper Deck, Third Base seats hold Undergraduate Case Studies. There appears to be one Nugget assigned per seat, so she has a wide variety of options to choose from. As she wanders through the Lower Deck, Third Base seats, metal placards on each seat flash at her. Each placard contains a phrase or keyword, and each seat seems to have at least four placards attached. Mary stops at one seat and sees: “Creator: John Smith”, “population growth”, “video”, “visual”, “4.2”.

Table 5. Learning nugget attributes.

8. Nugget Recommendation Algorithms

8.1. Description

As mentioned above, GLP will include a more detailed Recommendation Algorithm at the Nugget level. Nadolski et al. have used simulators to test personalized recommendation algorithms for lifelong learners and self-organized learning networks, matching learners with learning actions (similar to our Learning Nuggets) (Nadolski et al., 2009). Other researchers have investigated recommendation algorithms using collaborative filtering, preference-based, neighbor-interest-based, and other data mining techniques (Recker, Walker, & Lawless, 2003; Romero, Ventura, Delgado, & De Bra, 2007; Tsai, Chiu, Lee, & Wang, 2006).

The Nugget recommendation algorithm identifies and ranks the Nuggets that will help a learner master the topic most efficiently (e.g., in the least time, most intuitively, and with the least frustration). Our method assumes that a large dataset of Learning Nuggets exists within GLP, along with a large number of learners using the platform. There are many ways to recommend Nuggets under these conditions. One envisioned method would use the learner’s preferred learning style (e.g., visual Nuggets), her personal interests (e.g., baseball), and historical data about each Nugget or sequence of Nuggets (e.g., 40% of learners with similar profiles who used Nugget X mastered the topic, versus 70% of learners with similar profiles who used Nugget Y). Nugget efficacy can be measured as the marginal improvement in mastery among similar learners. To find the relationship among Nugget efficacy, Nugget attributes, and learner attributes, we can combine statistical tools such as regression, predictive modeling, clustering, and classification with an understanding of the learner’s preferences.
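As an illustration of the envisioned method, the sketch below scores one Nugget for one learner as a weighted sum of three signals: a learning-style match, overlap with the learner’s interests, and marginal improvement, taken here as the Nugget’s mastery rate among similar learners minus the topic’s baseline rate for those learners. This is a hypothetical linear score, not GLP’s algorithm: the function name, the dictionary keys, and the fixed weights are assumptions. In practice, the weights are exactly what the regression or predictive models mentioned above would be used to estimate.

```python
def nugget_score(nugget, learner, history, baseline_rate,
                 w_style=0.2, w_interest=0.2, w_history=0.6):
    """Hypothetical linear score for one Nugget and one learner.

    nugget:   dict with 'id', 'style', and 'themes' (a set)
    learner:  dict with 'style' and 'interests' (a set)
    history:  dict nugget_id -> (learners_who_mastered, learners_who_used),
              counted only over learners with profiles similar to `learner`
    baseline_rate: topic mastery rate for similar learners regardless of Nugget
    The weights are illustrative assumptions, not values from GLP.
    """
    style_match = 1.0 if nugget["style"] == learner["style"] else 0.0
    interests = learner["interests"]
    interest_match = len(nugget["themes"] & interests) / len(interests) if interests else 0.0
    mastered, used = history.get(nugget["id"], (0, 0))
    # Marginal improvement: how much better than baseline similar learners did
    # after using this Nugget; a Nugget with no history contributes nothing.
    marginal = (mastered / used - baseline_rate) if used else 0.0
    return w_style * style_match + w_interest * interest_match + w_history * marginal
```

With the example figures from the text (Nugget X: 40 of 100 similar learners mastered the topic; Nugget Y: 70 of 100) and an assumed baseline of 50%, two Nuggets that otherwise match the learner equally would score 0.34 and 0.52 respectively, so Y would rank above X.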

More sophisticated versions of this algorithm could be imagined:

• Different Nuggets may be more useful at the start of a learning sequence (when topic mastery is inherently low) and others at the end (when topic mastery is very high, and only some details are unclear).

• A sequence of Nuggets may be more useful than a single Nugget.

After scoring all Nuggets for the learner’s selected topic, GLP presents them in descending order of score, much like a search engine’s results page. New (or “unranked”) Nuggets could be strategically inserted near the top of the list so that learners try them and help them build a usage history. As with a search engine, this list will differ between individual learners. From the list, the learner can study as many Nuggets as desired, in any order. She can choose whether to follow GLP’s recommendations: she can select and use highly ranked Nuggets that do not match her preferred learning style or major but may still be useful given their quality and efficacy with other learners. When she feels ready to test her knowledge, the learner can take an assessment to measure her mastery level. If she reaches the required mastery level, she moves on and selects another topic to study. If she has not achieved sufficient mastery, the Nuggets are re-scored using updated information from all learners, and she can select new Nuggets to use.
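The list-building step can be sketched as follows: sort scored Nuggets best-first, then place a few unranked Nuggets at reserved positions near the top so that they accumulate usage history. This is a minimal sketch under our own assumptions: the function name and the `explore_slots` parameter (which positions are reserved) are hypothetical, since the text only says new Nuggets would be inserted strategically near the top.

```python
import random


def build_recommendation_list(scores, unranked, explore_slots=(1, 4), rng=None):
    """Hypothetical ranking step: sort scored Nuggets best-first, then place a few
    new ('unranked') Nuggets at fixed slots near the top so they gain a history.

    scores:        dict nugget_id -> score
    unranked:      list of nugget_ids with no usage history yet
    explore_slots: 0-based positions in the final list reserved for new Nuggets;
                   the choice of slots is an assumption, not part of the GLP design
    """
    rng = rng or random.Random()
    ranked = sorted(scores, key=lambda nid: scores[nid], reverse=True)
    pool = list(unranked)
    rng.shuffle(pool)  # rotate which new Nuggets get the prominent slots
    result = list(ranked)
    for slot in sorted(explore_slots):
        if not pool:
            break
        result.insert(min(slot, len(result)), pool.pop())
    # New Nuggets that did not get a reserved slot go to the end of the list.
    result.extend(pool)
    return result
```

After a failed assessment, re-scoring amounts to calling the same function again with `scores` recomputed from the updated history, which is why the learner sees a different list.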

8.2. Threaded Example

Mary stops her random exploration of Tropicana Field and pulls up GLP’s Recommended Nugget list. She sees that there are over ten pages of Function Nuggets available in the stadium; the first page includes a mixture of Nuggets from the Right Field bleacher seats, the Lower Deck, First Base side, Lower Deck behind home plate, and the Box Suites. She is free to explore these in any order, or even to skip to later pages on the list. However, she knows that GLP produced this list just for her, based on her interests, background, and other learners’ usage of the Nuggets.

Mary wanders over to the Right Field bleacher seats to read some Undergraduate Lecture Notes from MIT, then heads over to the Upper Deck, Third Base Side to analyze an Undergraduate level Case Study from Stanford. Finally, she plays with some Undergraduate level Interactive Applets in the Box Suites made by Marine Biologist 123, a practicing biologist. Mary loves exploring Tropicana Field and seeing the parts of the stadium while learning more about Functions!

Mary feels like she has a good grasp of the concept of Functions, so she returns to the Ticket Office and asks for an Assessment test. She starts working on the first problem, but doesn’t understand how to get past the second step. She requests a hint, and GLP records this action. Mary gets past her mental block and finishes the first problem. She works on the other problems and also uses some hints to get through them. She barely fails the assessment at the end, and the Ticket Office asks Mary to return to the stadium and try some more Nuggets.

Mary reopens the GLP recommendation page and sees a new list of Nuggets to try; the list has been updated with additional information from other learners and her own history with Functions. GLP follows a mastery learning philosophy and expects all students to master each topic before moving on to subsequent topics. Since Functions is a fundamental concept for the rest of Mary’s pathway, GLP expects her to achieve at least a 95% mastery level, as estimated by its internal model of her knowledge. The system also notes internally that Mary failed her assessment after using the three Nuggets and adjusts their ratings accordingly. In the future it will keep recommending these Nuggets to other learners to see how much they help; if the Nuggets prove unhelpful, their ratings will decrease, and eventually they may be removed from the GLP data repository.
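The paper does not specify GLP’s internal model of a learner’s knowledge. One well-known option, listed in the references, is Bayesian Knowledge Tracing (Corbett & Anderson, 1994), which updates the probability that a skill is mastered after each observed answer, using slip, guess, and learning-rate parameters. The sketch below implements that standard update with illustrative parameter values (our assumption, not fitted to any data) and checks the result against the 95% threshold from the threaded example. The function names are hypothetical.

```python
def bkt_update(p_know, correct, p_slip=0.1, p_guess=0.2, p_learn=0.15):
    """One Bayesian Knowledge Tracing step (Corbett & Anderson, 1994).

    p_know:  current probability that the learner has mastered the skill
    correct: whether the learner answered the item correctly
    The slip, guess, and learn parameters are illustrative values only.
    """
    if correct:
        evidence = p_know * (1 - p_slip)
        posterior = evidence / (evidence + (1 - p_know) * p_guess)
    else:
        evidence = p_know * p_slip
        posterior = evidence / (evidence + (1 - p_know) * (1 - p_guess))
    # The learner may also acquire the skill while working on this item.
    return posterior + (1 - posterior) * p_learn


MASTERY_THRESHOLD = 0.95  # Functions threshold from the threaded example


def trace(p_init, responses, **params):
    """Apply bkt_update over a sequence of correct/incorrect responses."""
    p = p_init
    for correct in responses:
        p = bkt_update(p, correct, **params)
    return p
```

The threaded example also has GLP record Mary’s hint requests; one convention, assumed here rather than taken from the paper, is to score a hinted response as incorrect before passing it to `bkt_update`, which would explain how Mary could finish every problem yet still fall short of the threshold.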

This time Mary selects a Khan Academy video Nugget from the Lower Deck, Third Base Side that is also highly recommended, even though it does not match her textual learning style. After watching the video, she returns to the Ticket Office and asks for another Assessment. This time she does better and passes. Internally, GLP notes this in Mary’s learner record and adjusts the Khan Academy Nugget’s rating accordingly. Because GLP’s internal model now estimates that Mary has reached 95% mastery, it marks the topic of Functions as “completed” in her records. She receives a ticket out of Tampa Bay.

Mary then returns to the GLP main page and sees the node map with her pathway. Tampa Bay appears green, and the lines connecting Tampa Bay to Atlanta, Kansas City, and Phoenix are now bright, showing her the other stadiums that she can now visit. These correspond to Derivatives, Quotient Rule, and Antiderivative, which have Functions as their prerequisite. Since Kansas City and Phoenix have additional prerequisites, they are not available yet, and Mary can choose to visit Houston (Differential) or Atlanta (Derivatives) as her next stop.
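The unlocking rule in this step reduces to a set check on the Content Map: a topic becomes available once every one of its prerequisites is marked completed. The sketch below encodes a hypothetical excerpt of Mary’s map. Only the edges from Functions come from the text; the remaining edges (Derivatives as an extra prerequisite of Quotient Rule and Antiderivative, and no prerequisites for Differential) are assumptions chosen so that the example reproduces the two choices Mary is offered.

```python
# Hypothetical excerpt of Mary's Content Map: topic -> set of prerequisite topics.
# Only "Functions precedes Derivatives, Quotient Rule, and Antiderivative" comes
# from the text; the other edges are assumptions for illustration.
CONTENT_MAP = {
    "Functions": set(),
    "Differential": set(),
    "Derivatives": {"Functions"},
    "Quotient Rule": {"Functions", "Derivatives"},
    "Antiderivative": {"Functions", "Derivatives"},
}


def available_topics(content_map, completed):
    """Topics the learner may start next: not yet completed, with every
    prerequisite already marked "completed" in her learner record."""
    return {topic for topic, prereqs in content_map.items()
            if topic not in completed and prereqs <= completed}
```

With `completed = {"Functions"}`, the function returns Differential and Derivatives (Houston and Atlanta); Quotient Rule and Antiderivative appear only after Derivatives is also completed.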

9. Current Work at MIT

MIT Core Concept Catalog

The MIT Core Concept Catalog (MC3) is a learning objective service and asset repository on which developers can build applications, providing a core component of personalized learning systems like GLP. MC3 provides a central place for MIT faculty to store learning objectives, concepts, digital assets, and the relationships linking all of these items together. The Office of Digital Learning (ODL) at MIT has implemented and deployed MC3 and built several example applications on top of it. Each application accesses the same set of data but presents it in different ways, much as GLP envisions different users interacting with learning topics and Nuggets in their own ways. As more applications are built and concepts are linked across the Institute, we can imagine MIT students learning “modules” of information that cater to their goals and interests (in effect, GLP Nuggets) instead of semester-long courses.

One example application, Salam (Figure 5), has been built on a 3D gaming engine and presents MC3 learning data in a graphical format. This allows users to see relationships between learning objectives that may not be obvious in a purely textual format.

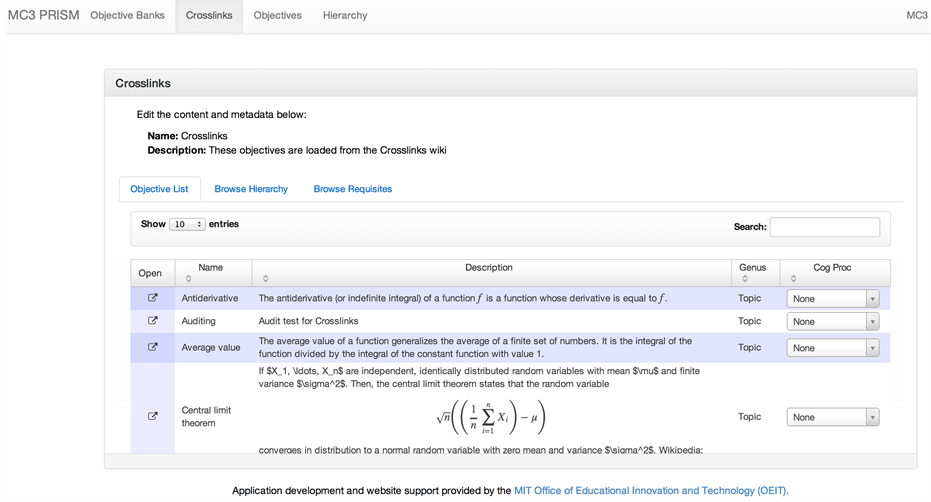

An administrative application, Prism (Figure 6), presents the same MC3 data in a more textual form, which allows interaction at a more granular level.

As with other service-oriented web applications, we have created a learning service where the same set of educational data can be viewed and manipulated in different ways. As applications are deployed on top of MC3 and used at MIT by faculty and students, we expect that a rich ecosystem of learning experiences, much like GLP, will emerge.
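The essence of this service-oriented design is that applications share one data source and differ only in presentation. The sketch below is not the MC3 API, which we do not reproduce here. It is a hypothetical, in-memory illustration: `ConceptStore` stands in for the shared learning-objective service, and two view functions loosely mirror a Prism-like textual listing and a Salam-like graph that a rendering engine could draw. All names and the relationship label are assumptions.

```python
from dataclasses import dataclass, field


@dataclass
class ConceptStore:
    """Hypothetical stand-in for an MC3-style learning-objective service.
    Not the MC3 API: names and structure are illustrative assumptions."""
    objectives: dict = field(default_factory=dict)  # objective id -> display name
    relations: list = field(default_factory=list)   # (source_id, relation, target_id)

    def add_objective(self, oid, name):
        self.objectives[oid] = name

    def relate(self, src, relation, dst):
        self.relations.append((src, relation, dst))


def text_view(store):
    """Prism-like textual rendering: one line per relationship."""
    return [f"{store.objectives[s]} --{r}--> {store.objectives[d]}"
            for s, r, d in store.relations]


def graph_view(store):
    """Salam-like input for a graphical client: sorted node ids and edge pairs."""
    nodes = sorted(store.objectives)
    edges = [(s, d) for s, _, d in store.relations]
    return {"nodes": nodes, "edges": edges}
```

Because both views read the same `ConceptStore`, a relationship added once (for example, Functions as a prerequisite of Derivatives) appears in every application built on the store.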

10. Conclusion

In this paper we present Guided Learning Pathways (GLP), an asynchronous, personalized learning platform for non-traditional learners. GLP emphasizes content-based mastery and provides learners with recommended learning materials (Nuggets) that help them achieve this mastery. We describe a general framework for GLP and provide details on five modules: User Interface, Content Map, Content Topic Recommendation Algorithm, Learning Nuggets, and Learning Nugget Recommendation Algorithm. In the future, other modules could be developed and integrated into the platform. Examples of each module are provided to give readers a sense of the envisioned capabilities of GLP, though one can imagine additional system functionalities. A threaded example describes how each module would interact with a single learner. We finish with a view of an MIT project that provides a service-oriented architecture and could enable a GLP experience.

Figure 5. Salam app, using MC3 data.

Figure 6. MC3 Prism app uses a text-based representation of MC3 data.

Acknowledgements

The authors would like to thank Mac Hird, Professor Navid Ghaffarzadegan, Yi Xue, Abby Horn, Peter Wilkins, Brandon Muramatsu, Jeff Merriman, Scott Thorne, Dr. Jun Wang, Dr. Kanji Uchino, Professor Robert Hampshire, and Professor Chris Dede for their comments and support during many discussions about GLP. The research leading to this paper was supported by Fujitsu Laboratories of America under a contract with the Massachusetts Institute of Technology titled “Towards Intelligent Societies: What Motivates Students to Study Science and Math? How Can We Provide Flexible Learning Pathways?” The opinions expressed in this paper represent those of the authors and do not necessarily represent the opinions of Fujitsu Laboratories of America or the Massachusetts Institute of Technology.

About the Authors

Cole Shaw is a software developer in the Office of Digital Learning at MIT. He served as a Technology Transfer Peace Corps Volunteer in Mexico and has worked at Sandia National Laboratories. He also works with Mexican university students on innovative product design and entrepreneurship workshops. He holds an M.S.E. and B.S.E. in Electrical Engineering from the University of Michigan, Ann Arbor, and an S.M. in Technology and Policy from MIT.

Dr. Larson is MIT Mitsui Professor in the Engineering Systems Division. He is the founding Director of the Center for Engineering Systems Fundamentals as well as of LINC, the Learning International Networks Consortium. He has focused his research on services industries, including technology-enabled education. He is Past President of INFORMS, the Institute for Operations Research and the Management Sciences. From 1999 through 2004, Dr. Larson served as founding co-director of the Forum the Internet and the University. He is a member of the National Academy of Engineering.

Dr. Sibdari is an associate professor of operations management in the Charlton College of Business at the University of Massachusetts Dartmouth, where he teaches courses in statistics and operations management. He received his undergraduate degree in computer science from Shahid Beheshti University in Iran, and a master’s degree in economics (2001) and a PhD in industrial engineering (2005), both from Virginia Tech. His research interests include retail and service operations management, pricing and inventory policies, and empirical operations management. He has published in leading journals of the field, including the European Journal of Operational Research, System Dynamics Review, and the International Journal of Revenue Management.

References

- Belanger, Y. (2012). Evaluating the MITx Experience. http://today.duke.edu/node/80777

- Bloom, B. S. (1984). The 2 Sigma Problem: The Search for Methods of Group Instruction as Effective as One-to-One Tutoring. Educational Researcher, 13, 4-16. http://dx.doi.org/10.3102/0013189X013006004

- CAST (n.d.). About UDL. http://www.cast.org/udl/

- Christensen, C., Johnson, C., & Horn, M. (2008). Disrupting Class: How Disruptive Innovation Will Change the Way the World Learns. New York, NY: McGraw-Hill.

- Corbett, A., & Anderson, J. (1994). Knowledge Tracing: Modeling the Acquisition of Procedural Knowledge. User Modeling and User-Adapted Interaction, 4, 253-278. http://dx.doi.org/10.1007/BF01099821

- D’Mello, S., Lehman, B., Sullins, J., Daigle, R., Combs, R., Vogt, K. et al. (2010). A Time for Emoting: When Affect-Sensitivity Is and Isn’t Effective at Promoting Deep Learning. Lecture Notes in Computer Science 2010: Intelligent Tutoring Systems, 6094, 245-254.

- Daro, P., Mosher, F., & Corcoran, T. (2011). Learning Trajectories in Mathematics: A Foundation for Standards, Curriculum, Assessment, and Instruction. Consortium for Policy Research in Education.

- Dede, C., & Richards, J. (Eds.) (2012). Digital Teaching Platforms: Customizing Classroom Learning for Each Student. New York, NY: Teachers College Press, Columbia University.

- EdReNe (2011). Current State of Educational Repositories—National Overview: United Kingdom. http://edrene.org/results/currentState/uk.html

- edX (2012). 6.00x Syllabus. https://www.edx.org/static/content-mit-600x~2012_Fall/handouts/6.00x_syllabus.5c9cae040ec5.pdf

- Fischer, K., Rose, L., & Rose, S. (2006). Growth Cycles of Mind and Brain: Analyzing Developmental Pathways of Learning Disorders. In K. Fischer, J. Bernstein, & M. Immordino-Yang (Eds.), Mind, Brain, and Education in Reading Disorders (pp.101-123). Cambridge, U.K.: Cambridge University Press.

- Heffernan, N., Heffernan, C., & Brest, A. (n.d.). ASSISTment Skill Diagram. http://teacherwiki.assistements.org/wiki/images/2/29/Assistment_Skill_Diagram_Poster_PDF.pdf

- Khan Academy Dashboard (n.d.). Exercise Dashboard. http://www.khanacademy.org/exercisedashboard

- Khan Academy Homepage (n.d.). http://www.khanacademy.org/

- Knewton (n.d.). Personalized Education for the World. http://www.knewton.com/about/

- Lenning, O., & Ebbers, L. (1999). The Powerful Potential of Learning Communities: Improving Education for the Future. Washington, DC: The George Washington University, Graduate School of Education and Human Development.

- Lord, M. (2012). Teaching Toolbox. http://www.asee.org/papers-and-publications/blogs-and-newsletters/connections/2012October.html#teaching

- Merriman, J. (n.d.). Core Concept Catalog. http://oeit.mit.edu/gallery/projects/core-concept-catalog-mc3

- MIT Crosslinks (n.d.). Crosslinks. http://Crosslinks.mit.edu/Crosslinks/index.php/Main_Page

- MIT OpenCourseWare (n.d.). http://ocw.mit.edu/index.htm

- Nadolski, R., van den Berg, B., Berlanga, A., Drachsler, H., Hummel, H., Koper, R. et al. (2009). Simulating Light-Weight Personalised Recommender Systems in Learning Networks: A Case for Pedagogy-Oriented and Rating-Based Hybrid Recommendation Strategies. Journal of Artificial Societies and Social Simulation, 12.

- National Atlas of the United States (2003). Printable States Map (Unlabeled). http://www.nationalatlas.gov/printable/images/pdf/outline/states%28u%29.pdf

- National Research Council (2010). BIO 2010: Transforming Undergraduate Education for Future Research Biologists. Washington, DC: National Academies Press.

- Pashler, H., McDaniel, M., Rohrer, D., & Bjork, R. (2008). Learning Styles: Concepts and Evidence. Psychological Science in the Public Interest, 9, 105-119.

- Picciano, A., Seaman, J., & Allen, I. (2010). Educational Transformation through Online Learning: To Be or Not to Be. Journal of Asynchronous Learning Networks, 14, 17-35.

- Recker, M., Walker, A., & Lawless, K. (2003). What Do You Recommend? Implementation and Analysis of Collaborative Filtering of Web Resources for Education. Instructional Science, 31, 299-316. http://dx.doi.org/10.1023/A:1024686010318

- Romero, C., Ventura, S., Delgado, J., & De Bra, P. (2007). Personalized Links Recommendation Based on Data Mining in Adaptive Educational Hypermedia Systems. Lecture Notes in Computer Science, 4753, 292-306. http://dx.doi.org/10.1007/978-3-540-75195-3_21

- Rose, D., & Meyer, A. (2012). Teaching Every Student in the Digital Age: Universal Design for Learning. Association for Supervision and Curriculum Development.

- Siemens, G., Gasevic, D., Haythornthwaite, C., Dawson, S., Shum, S., Ferguson, R. et al. (2011). Open Learning Analytics: An Integrated & Modularized Platform. Society for Learning Analytics Research.

- Time To Know (n.d.). Overview. http://www.timetoknow.com/company

- Tsai, K., Chiu, T., Lee, M., & Wang, T. (2006). A Learning Objects Recommendation Model Based on the Preference and Ontological Approaches. Sixth International Conference on Advanced Learning Technologies, Kerkrade, 5-7 July 2006, 36-40. http://dx.doi.org/10.1109/ICALT.2006.1652359

- Tullis, J., & Benjamin, A. (2011). On the Effectiveness of Self-Paced Learning. Journal of Memory and Language, 64, 109-118. http://dx.doi.org/10.1016/j.jml.2010.11.002

- US Department of Education (2010). Transforming American Education: Learning Powered by Technology. Washington, DC: US Department of Education, Office of Educational Technology.

- Vander Ark, T. (2012). Getting Smart: How Digital Learning Is Changing the World. San Francisco, CA: Jossey-Bass.

NOTES

1Khan Academy, Inc., http://www.khanacademy.org.

2Massachusetts Institute of Technology, http://ocw.mit.edu/index.htm.

3Carnegie Mellon University, http://www.cmu.edu.

4Tampa Bay Rays Baseball Ltd., http://tampabay.rays.mlb.com.

5Atlanta National League Baseball Club, Inc., http://atlanta.braves.mlb.com.

6Kansas City Royals Baseball Corporation, http://kansascity.royals.mlb.com.

7Houston McClane Company, Inc., http://houston.astros.mlb.com.

8AZPB Limited Partnership, http://arizona.diamondbacks.mlb.com.

9Anaheim Angels, L.P., http://losangeles.angels.mlb.com.

10Tropicana Products, Inc., http://www.tropicana.com.