Open Journal of Statistics

Vol. 4 No. 3 (2014), Article ID: 44323, 5 pages. DOI: 10.4236/ojs.2014.43016

On Minimizing the Standard Error of the Slope in Simple Linear Regression

Steven M. Crunk, Xinh Huynh

Department of Mathematics and Statistics, San Jose State University, San Jose, USA

Email: steven.crunk@sjsu.edu, xinh.huynh@gmail.com

Copyright © 2014 by authors and Scientific Research Publishing Inc.

This work is licensed under the Creative Commons Attribution International License (CC BY).

http://creativecommons.org/licenses/by/4.0/

Received 8 February 2014; revised 8 March 2014; accepted 15 March 2014

ABSTRACT

A common homework problem in texts covering calculus-based simple linear regression is to find a set of values of the independent variable which minimize the standard error of the estimated slope. All discussions the authors have heard regarding this problem, as well as all texts with which the authors are familiar and which include this problem, provide no solution, a partial solution, or an outline of a solution without theoretical proof, and the solution commonly provided for odd sample sizes is incorrect. Going back to first principles, we provide the complete correct solution to this problem.

Keywords: Minimization; Variance; Coefficient; Beliefs about Statistics; Statistical Literacy

1. Introduction

A homework question, occurring in several oft cited best-selling introductory texts covering calculus-based simple linear regression, goes something like this:

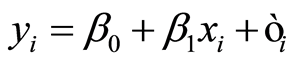

Suppose we are to collect data and fit a straight-line simple linear regression, . The errors are assumed to have mean zero, unknown variance

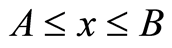

. The errors are assumed to have mean zero, unknown variance  and to be uncorrelated with one another. Further suppose that in this designed experiment, the region of interest for x is

and to be uncorrelated with one another. Further suppose that in this designed experiment, the region of interest for x is ,

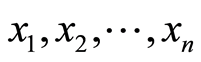

,  , and that the primary goal is to make the standard error of the estimate of the slope as small as possible. For a given sample size n, at what values of the independent variable should the observations be taken? That is, how should

, and that the primary goal is to make the standard error of the estimate of the slope as small as possible. For a given sample size n, at what values of the independent variable should the observations be taken? That is, how should  be chosen so as to minimize the standard error of the estimate of

be chosen so as to minimize the standard error of the estimate of .

.

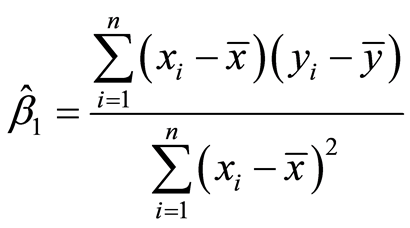

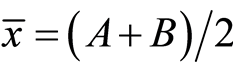

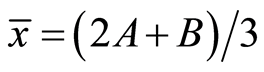

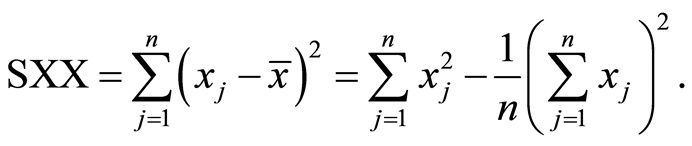

From [1] , which does not include the above noted problem, and virtually any other text covering simple linear regression, we know the following: the estimate of the slope is

which has standard deviation

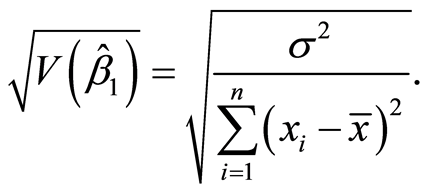

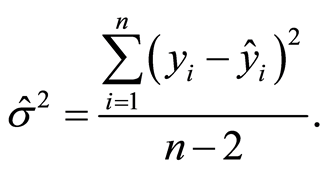

The estimated standard deviation, or standard error, is found by replacing  by its estimate

by its estimate

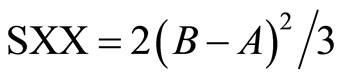

The error variance  is an unknown constant and its estimator cannot be formed until data are collected. Thus in the case of either the theoretical standard deviation or the estimated standard error, the numerator under the radical is unknown and not under the control of the experimenter in the question. Consequently the minimization of the standard deviation or the standard error is achieved by maximizing the quantity

is an unknown constant and its estimator cannot be formed until data are collected. Thus in the case of either the theoretical standard deviation or the estimated standard error, the numerator under the radical is unknown and not under the control of the experimenter in the question. Consequently the minimization of the standard deviation or the standard error is achieved by maximizing the quantity

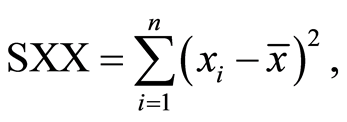

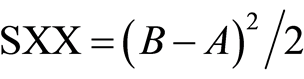

the corrected sum of squares of the x’s.

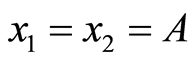

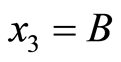

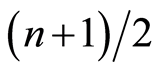

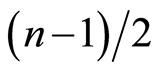

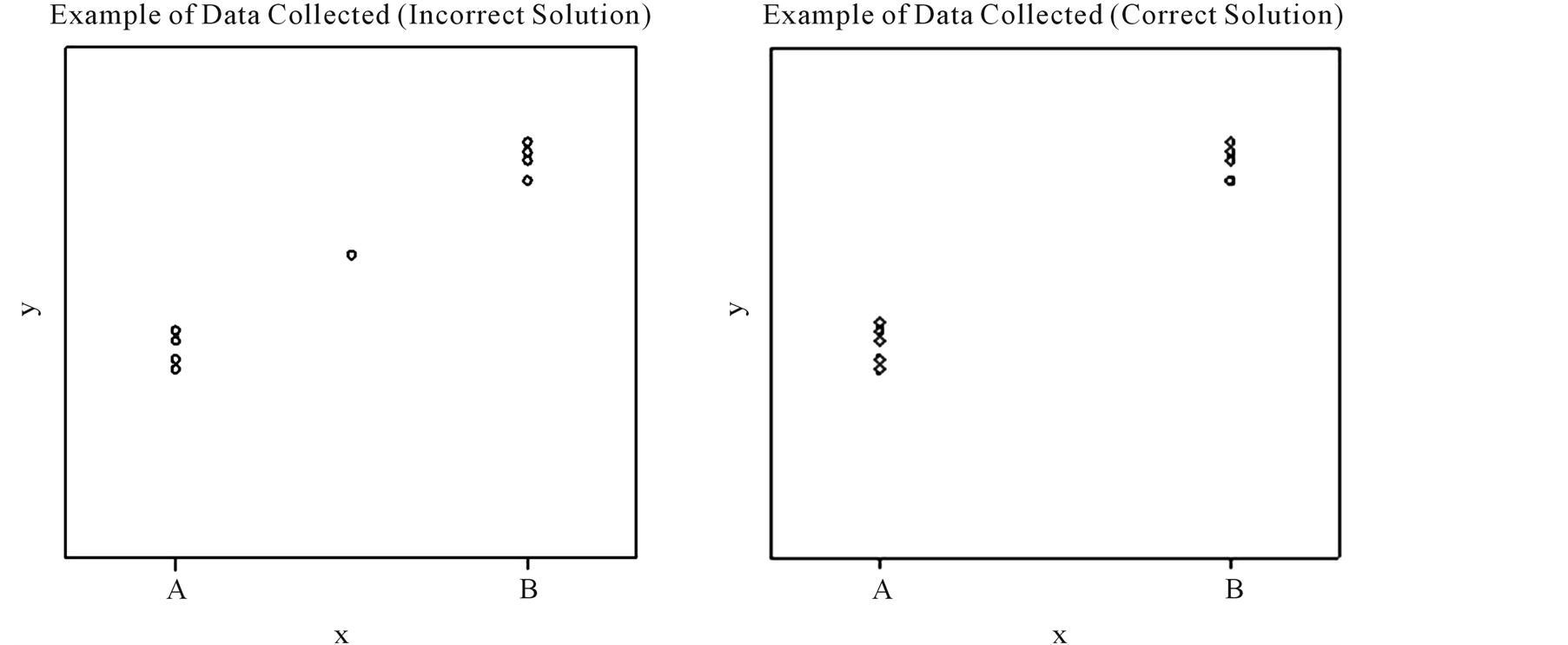
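To make the dependence of the slope's variability on the design concrete, the following is a minimal sketch that evaluates the theoretical standard deviation $\sigma / \sqrt{SXX}$ for two designs. The function names `sxx` and `slope_sd`, the interval [0, 1], the sample size n = 4, and the value $\sigma = 1$ are assumptions chosen here for illustration; none of them comes from the texts under discussion.

```python
import math


def sxx(xs):
    """Corrected sum of squares of the design points."""
    mean = sum(xs) / len(xs)
    return sum((x - mean) ** 2 for x in xs)


def slope_sd(xs, sigma):
    """Theoretical standard deviation of the least-squares slope: sigma / sqrt(SXX)."""
    return sigma / math.sqrt(sxx(xs))


# Two hypothetical designs on [0, 1] with n = 4 and an assumed sigma of 1.
spread = [0.0, 0.0, 1.0, 1.0]      # points pushed to the ends of the interval
clustered = [0.4, 0.5, 0.5, 0.6]   # points bunched near the middle

print(slope_sd(spread, 1.0), slope_sd(clustered, 1.0))
```

The spread design has the larger SXX and hence the smaller standard deviation of the slope, which is the quantity the problem asks us to minimize.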

Many texts which include this problem provide no solution. Every discussion that the authors have heard, and every solutions manual that the authors have seen, suggests without proof that in order to maximize SXX when n is even, half of the observations should be taken at A and half at B. Many texts that include a solution ignore the possibility that n is odd, even though no condition on n was provided in the question. When a solution is provided for n odd, every solution we have seen suggests without proof that $(n-1)/2$ observations should be taken at each of A and B, with the remaining single observation being taken half way between these values, at $(A+B)/2$. That this solution is incorrect can be seen with a simple example where $n = 3$. The result using the “usual” solution outlined above is to take $x_1 = A$, $x_2 = (A+B)/2$, and $x_3 = B$, from whence $\bar{x} = (A+B)/2$ and $SXX = (B-A)^2/2$. Alternatively, if we take $x_1 = A$ and $x_2 = x_3 = B$, we have $\bar{x} = (A+2B)/3$ and $SXX = 2(B-A)^2/3$, which is larger than the value obtained using the “usual” solution, showing that the usual solution is not correct. We suppose that the desire for symmetry led to the belief in the incorrect solution; however, symmetry was neither mentioned nor required in the problem under discussion.
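A comparison of this kind is easy to check by direct computation. The sketch below assumes n = 3 and hypothetical endpoints A = 0 and B = 1 (values picked here for illustration, not taken from any of the texts), and uses exact rational arithmetic so that the two values of SXX can be compared without rounding.

```python
from fractions import Fraction


def sxx(xs):
    """Corrected sum of squares of the design points."""
    mean = sum(xs) / len(xs)
    return sum((x - mean) ** 2 for x in xs)


# Hypothetical endpoints of the region of interest (an assumption for this sketch).
A, B = Fraction(0), Fraction(1)

usual = [A, (A + B) / 2, B]   # one point at each end, one in the middle
alternative = [A, B, B]       # one point at A, two points at B

print(sxx(usual), sxx(alternative))  # prints: 1/2 2/3
```

With these endpoints the asymmetric design gives SXX = 2/3, against 1/2 for the symmetric design with a midpoint observation.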

In the sequel we show that for n even, the “usual” solution of choosing half of the observations to be taken at A and the other half to be taken at B is correct. For n odd we show that in order to minimize the standard error,  observations should be taken at one end of the interval (either at A or at B) and the remaining

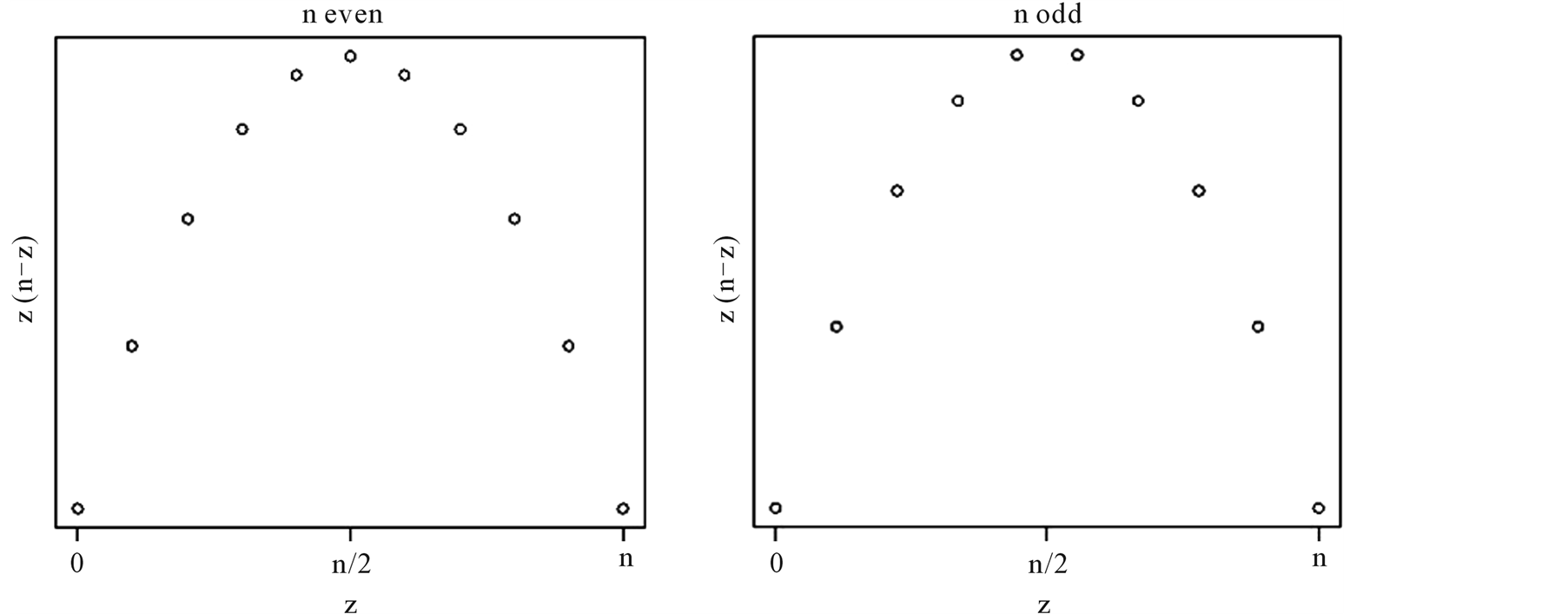

observations should be taken at one end of the interval (either at A or at B) and the remaining  observations should be taken at the other end of the interval. An example of this result is given in Figure 1. Throughout we will assume that the sample size n is a given constant.

observations should be taken at the other end of the interval. An example of this result is given in Figure 1. Throughout we will assume that the sample size n is a given constant.

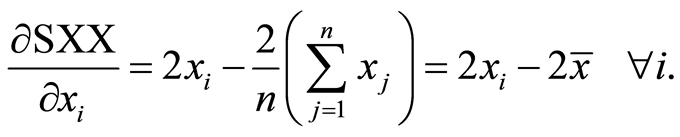

2. The Objective Function; Sum of Squares

Our goal is to find the set of $x_1, x_2, \ldots, x_n$ which maximize

$$SXX = \sum_{i=1}^{n} (x_i - \bar{x})^2.$$

Since the $x_i$ are continuous variables (not in the statistical sense but rather in the algebraic sense) on the interval $[A, B]$, we may use techniques of calculus in order to find the values that maximize this function (see, e.g., [2]). We have

$$\frac{\partial\, SXX}{\partial x_i} = 2(x_i - \bar{x}).$$

Setting this equal to zero we have $x_i = \bar{x}$ being stationary points. Of course our variables exist on a closed interval so we must also investigate the endpoints. As a result it must be true that $x_i \in \{A, \bar{x}, B\}$ for each i.

Figure 1. The graphs above represent n (an odd number) data points collected according to two plans for minimizing the standard error of the slope in simple linear regression. The graph on the left represents the common but incorrect solution whereby one observation is taken in the middle of the interval. In the graph on the right, the numbers of observations taken at the two ends of the interval differ by one. Although lacking symmetry, this is the correct solution for minimizing the standard error of the slope.

If  then SXX = 0, which is the smallest possible value of SXX, i.e., choosing

then SXX = 0, which is the smallest possible value of SXX, i.e., choosing  leads to a minimum rather than a maximum. The same is true if observations are taken either all at A or all at B. We would then say it is obvious that at least one observation must be taken at A and at least one observation must be taken at B, but authors saying “it is obvious that...” is what led to this note in the first place. Consider the case where some observations are taken at

leads to a minimum rather than a maximum. The same is true if observations are taken either all at A or all at B. We would then say it is obvious that at least one observation must be taken at A and at least one observation must be taken at B, but authors saying “it is obvious that...” is what led to this note in the first place. Consider the case where some observations are taken at  and the rest at

and the rest at  distinct from A; this is a contradiction as the mean would then not be at

distinct from A; this is a contradiction as the mean would then not be at . Similarly, it is impossible to have some observations at B and the rest at

. Similarly, it is impossible to have some observations at B and the rest at . Accordingly it must be true that at least one observation must be taken at each of A and B.

. Accordingly it must be true that at least one observation must be taken at each of A and B.

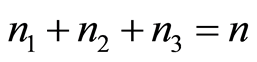

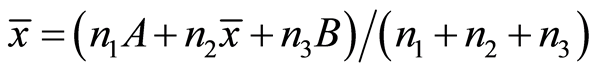

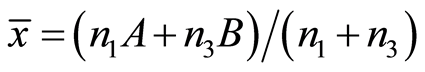

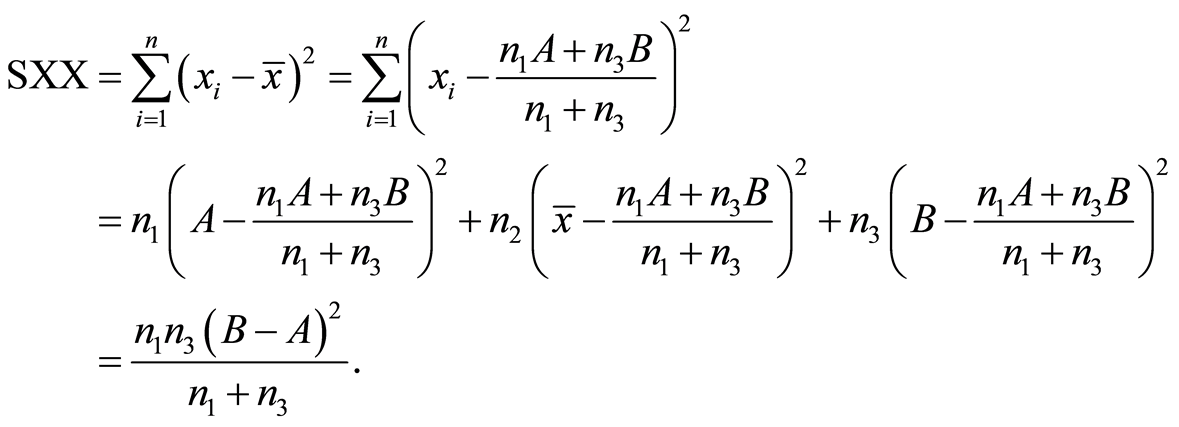

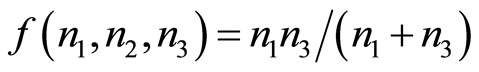

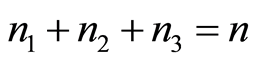

Let  be the number of observations taken at A, n2 be the number of observations taken at

be the number of observations taken at A, n2 be the number of observations taken at , and

, and  be the number of observations taken at B. From the argument in the previous paragraph we have

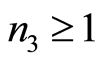

be the number of observations taken at B. From the argument in the previous paragraph we have  for

for ,

,  , all integers, and

, all integers, and , a given constant. Then

, a given constant. Then , the simplification of which leads to

, the simplification of which leads to . Consequently, substituting these values, we have

. Consequently, substituting these values, we have
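The substitution can be sanity-checked numerically. The sketch below assumes the closed form $SXX = (B-A)^2 n_1 n_3/(n_1+n_3)$, which is what substituting $\bar{x} = (n_1 A + n_3 B)/(n_1+n_3)$ into the sum of squares yields, and compares it with SXX computed directly from the design points. The helper names and the endpoints A = -2, B = 5 are hypothetical choices made for this illustration.

```python
from fractions import Fraction


def sxx(xs):
    """Corrected sum of squares of the design points."""
    mean = sum(xs) / len(xs)
    return sum((x - mean) ** 2 for x in xs)


def three_point_design(a, b, n1, n2, n3):
    """n1 points at a, n3 points at b, and n2 points at the resulting mean."""
    mean = (n1 * a + n3 * b) / (n1 + n3)
    return [a] * n1 + [mean] * n2 + [b] * n3


def sxx_closed_form(a, b, n1, n3):
    """Closed form (b - a)^2 * n1 * n3 / (n1 + n3); note that n2 does not appear."""
    return (b - a) ** 2 * n1 * n3 / (n1 + n3)


# Hypothetical endpoints; exact rational arithmetic avoids rounding issues.
a, b = Fraction(-2), Fraction(5)
checks = [
    sxx(three_point_design(a, b, n1, n2, n3)) == sxx_closed_form(a, b, n1, n3)
    for n1 in range(1, 5)
    for n2 in range(0, 4)
    for n3 in range(1, 5)
]
print(all(checks), len(checks))  # prints: True 64
```

Observations placed at the mean contribute nothing to SXX, which is why $n_2$ is absent from the closed form.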

The quantity $(B - A)^2$ is an arbitrary non-negative constant. Different texts give different numerical values for A and B in their examples. The choice of A and B, as seen in the final formula for SXX, has no bearing on the solutions for $n_1$, $n_2$ and $n_3$ which maximize SXX. Thus we shall simply attempt to find parameters $n_1$, $n_2$ and $n_3$ that maximize $n_1 n_3/(n_1 + n_3)$ with the constraints imposed previously that $n_1$, $n_2$ and $n_3$ are non-negative integers, $n_1 \ge 1$, $n_3 \ge 1$, and $n_1 + n_2 + n_3 = n$, a known/given constant.

3. Optimization

The function with constraints given in the previous paragraph may be maximized in any number of ways. Possibilities considered by the authors include the following: taking the variables of interest to be continuous and maximizing the function through the use of calculus, hoping for integer values which would then be the optimal solution [3]; using integer programming [4]; and other possibilities. However, it seems that a simple algebraic manipulation may be the most elegant solution.

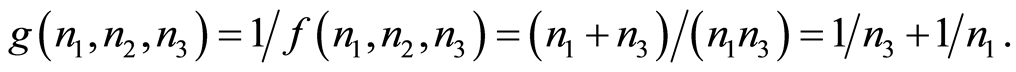

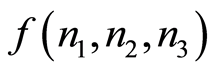

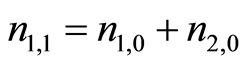

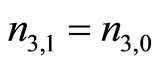

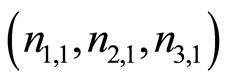

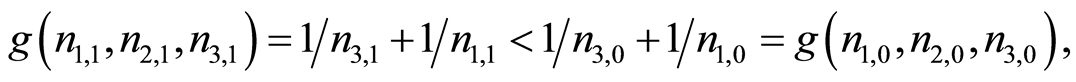

Let

Thus maximizing  is equivalent to minimizing

is equivalent to minimizing . We now show that n2 must be zero. Assume that

. We now show that n2 must be zero. Assume that  is an ordered triple which meets the constraints and which minimizes

is an ordered triple which meets the constraints and which minimizes  with

with . Let

. Let ,

,  , and

, and . Then the ordered triple

. Then the ordered triple  also satisfies the constraints, and furthermore

also satisfies the constraints, and furthermore

which is a contradiction to the assumption that  minimizes

minimizes , hence

, hence .

.
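The claim that no observation should sit at the mean can also be checked by exhaustive enumeration for small n. The following sketch assumes the objective $n_1 n_3/(n_1+n_3)$ and the constraints $n_1 \ge 1$, $n_3 \ge 1$, $n_2 \ge 0$, $n_1+n_2+n_3 = n$; the function name `best_triples` is a hypothetical helper, and brute-force search is used here only as a check, not as the argument of the paper.

```python
from fractions import Fraction


def best_triples(n):
    """Return the maximum of n1*n3/(n1+n3) and all triples (n1, n2, n3) attaining it.

    The search covers every integer triple with n1 >= 1, n3 >= 1, n2 >= 0
    and n1 + n2 + n3 == n (requires n >= 2).
    """
    best_value, best = None, []
    for n1 in range(1, n):
        for n3 in range(1, n - n1 + 1):
            n2 = n - n1 - n3
            value = Fraction(n1 * n3, n1 + n3)
            if best_value is None or value > best_value:
                best_value, best = value, [(n1, n2, n3)]
            elif value == best_value:
                best.append((n1, n2, n3))
    return best_value, best


print(best_triples(5))  # prints: (Fraction(6, 5), [(2, 0, 3), (3, 0, 2)])
print(best_triples(6))  # prints: (Fraction(3, 2), [(3, 0, 3)])
```

In both cases every maximizing triple has $n_2 = 0$, in agreement with the contradiction argument.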

Now one of our constraints reduces to $n_1 + n_3 = n$, and maximizing $n_1 n_3/(n_1 + n_3)$ reduces to maximizing

$$n_1 n_3 = n_1 (n - n_1).$$

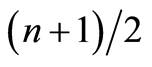

This last is simply a parabola which we need to maximize over $n_1 \in \{1, 2, \ldots, n-1\}$. To find the maximum, treat the parabola as a function of a continuous variable z, $h(z) = z(n - z)$. The maximum occurs when $h'(z) = n - 2z = 0$, that is, when $z = n/2$. As $n_1$ is integer valued, for n even this implies $n_1 = n/2$ gives the maximum value, while for n odd either of the two points surrounding $n/2$, $n_1 = (n-1)/2$ or $n_1 = (n+1)/2$, gives the same maximum value, since the parabola is symmetric about $n/2$. Figure 2 graphically demonstrates this result. The contradiction in the previous paragraph gives $n_2 = 0$ and this with the original constraint that $n_1 + n_2 + n_3 = n$, a known/given constant, gives the value of $n_3 = n - n_1$.
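The integer maximization in this last step can be illustrated with a few lines of code. The sketch below assumes $n_2 = 0$ and the objective $n_1(n - n_1)$ over $n_1 = 1, \ldots, n-1$; the function name `optimal_n1` is a hypothetical helper introduced only for this illustration.

```python
def optimal_n1(n):
    """Return every n1 in 1..n-1 that maximizes n1 * (n - n1) (requires n >= 2)."""
    values = {n1: n1 * (n - n1) for n1 in range(1, n)}
    top = max(values.values())
    return sorted(n1 for n1, value in values.items() if value == top)


print(optimal_n1(6))  # prints: [3]
print(optimal_n1(7))  # prints: [3, 4]
```

For even n there is a single maximizer at n/2, while for odd n the two integers on either side of n/2 tie.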

4. Conclusions

For the common homework problem appearing in approximately half of the texts covering calculus-based simple linear regression with which the authors are familiar, and which was posed at the beginning of this paper, we have shown that if n is even, the oft given solution of taking half of the observations at each end of the interval is correct. However, for odd n we have shown that the only previously given solution, to place one point in the center of the interval and half of the remaining points at each end of the interval, is incorrect, and that the correct solution is to choose nearly half of the points, either $(n-1)/2$ or $(n+1)/2$, at one end of the interval and the remaining points at the opposite end of the interval.

Figure 2. When n is even, the maximum of the objective function occurs at $n_1 = n/2$. When n is odd, the maximum value occurs at $n_1 = (n-1)/2$ and $n_1 = (n+1)/2$.

We part with the common caveat that this oft given textbook problem is of little use in most realistic applications unless it is known that the true relationship among the data is linear, as the solution affords us no opportunity to check this assumption with the observed data. However, the authors would submit that there is a difference between being “useless in practical situations” and “understanding something fundamental about simple linear regression”. We believe that it is important for a student to understand the theory underlying simple linear regression, and this importance is supported by the inclusion of the problem in a large number of highly cited and best-selling texts. Unfortunately, many of these texts provide no solution, some provide a partial solution and others provide an incorrect solution. No texts with which we are familiar, nor their solutions manuals, provide a complete and correct solution. This common textbook problem affords the student the opportunity to understand what drives the variance of the parameter estimate, and as such deserves a correct solution.

Acknowledgements

The authors wish to thank Dr. Ho Kuen Ng for a useful discussion on optimization.

References

[1] Weisberg, S. (2005) Applied Linear Regression. 3rd Edition, John Wiley & Sons, Inc., Hoboken. http://dx.doi.org/10.1002/0471704091

[2] Stewart, J. (2011) Calculus. 7th Edition, Thomson Brooks/Cole, Belmont.

[3] Greenberg, H. (1971) Integer Programming. Academic Press, New York.

[4] Li, D. and Sun, X. (2006) Nonlinear Integer Programming. Springer, New York.